Gaming Level-Up: Benefits Of Upgrading Integrated Graphics With EVGA & ASUS

Because our integrated graphics solutions are just not up to the task of playing any of our gaming tests at 1080p, even on the lowest settings, we ran our baseline tests at 720p. That means that, on top of the higher frame rates we hope to see, the discrete cards are responsible for rendering 2.25 times as many pixels per frame (1920×1080 versus 1280×720) with much higher detail. We think this is representative of how most folks are gaming (or trying to game) on integrated graphics setups. Based on prior experience, we expect these solutions to fall comically behind their discrete brethren. That resolution split doesn't apply to 3DMark, however, so those tests are a direct apples-to-apples comparison.
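
The pixel-count difference between the two test resolutions is simple arithmetic. Here is a minimal Python sketch, assuming the standard 1280×720 and 1920×1080 frame sizes for 720p and 1080p:

```python
# Compare pixels rendered per frame at the two test resolutions:
# 1280x720 for the integrated GPUs, 1920x1080 for the discrete cards.

def pixels(width: int, height: int) -> int:
    """Number of pixels in a single frame at the given resolution."""
    return width * height

baseline_720p = pixels(1280, 720)    # integrated-graphics baseline
discrete_1080p = pixels(1920, 1080)  # discrete-card test resolution

ratio = discrete_1080p / baseline_720p
print(f"1080p renders {ratio:.2f}x as many pixels per frame as 720p")
```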

Enough chatter; on with the tests.

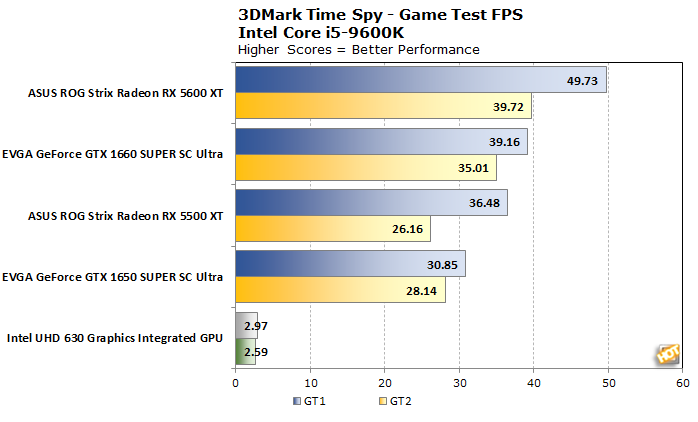

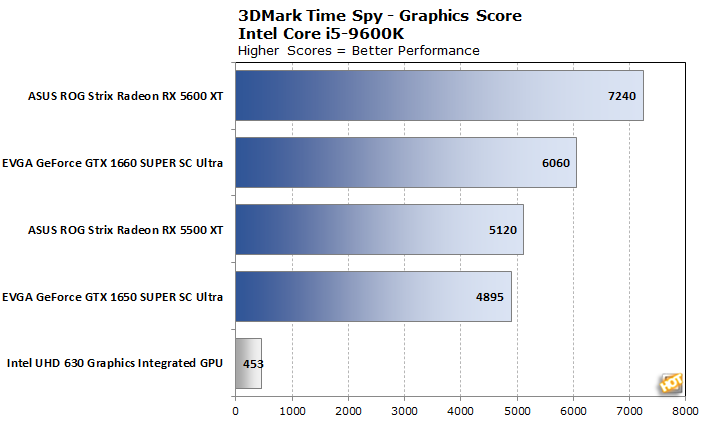

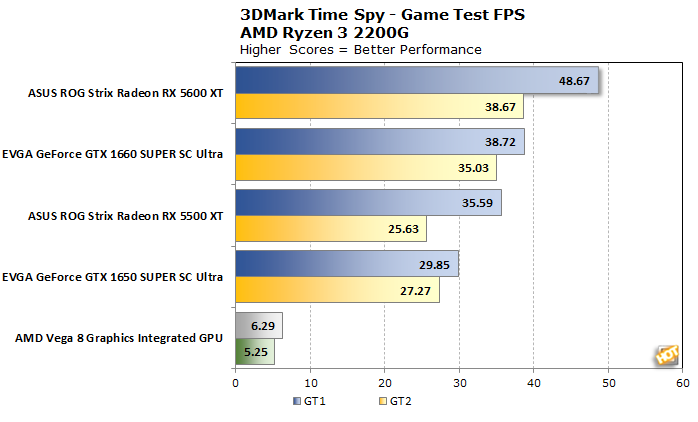

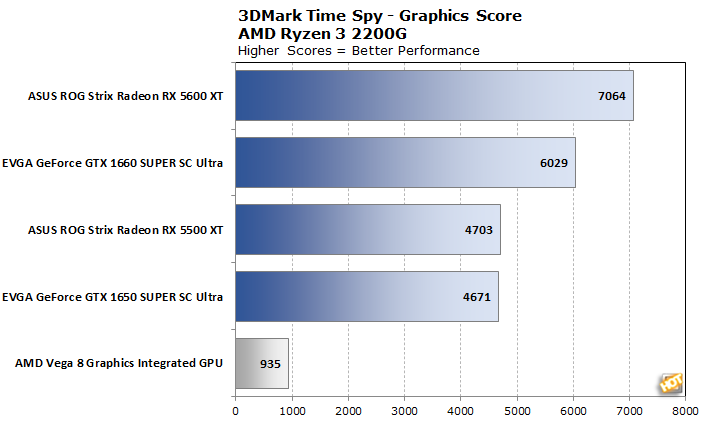

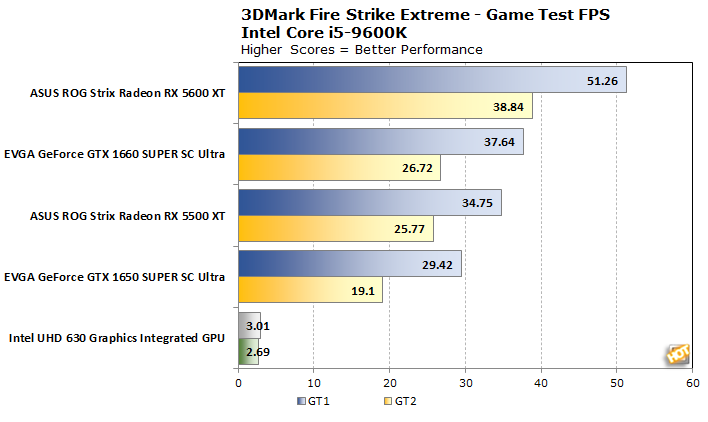

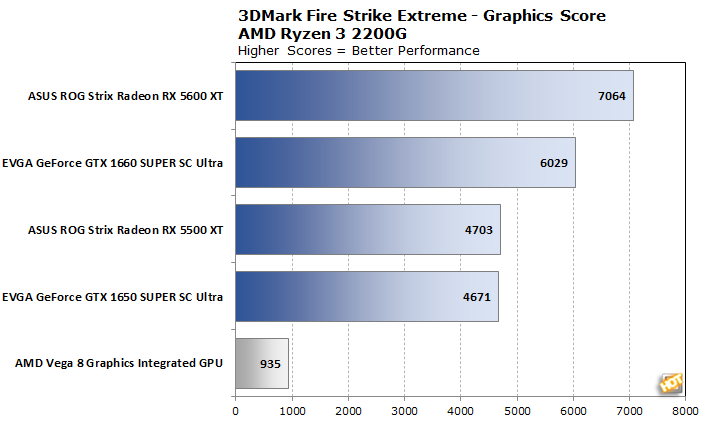

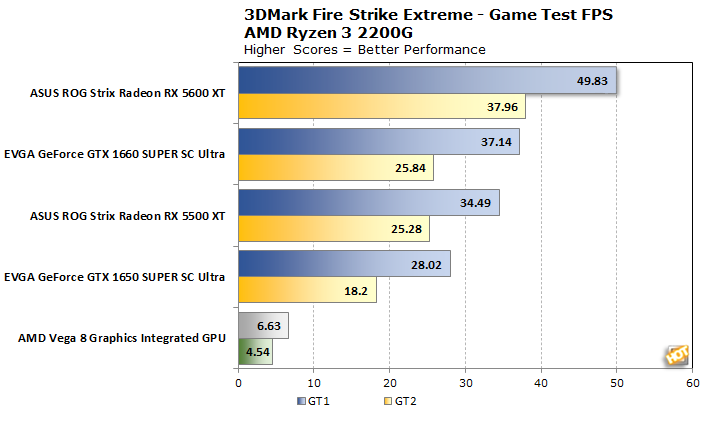

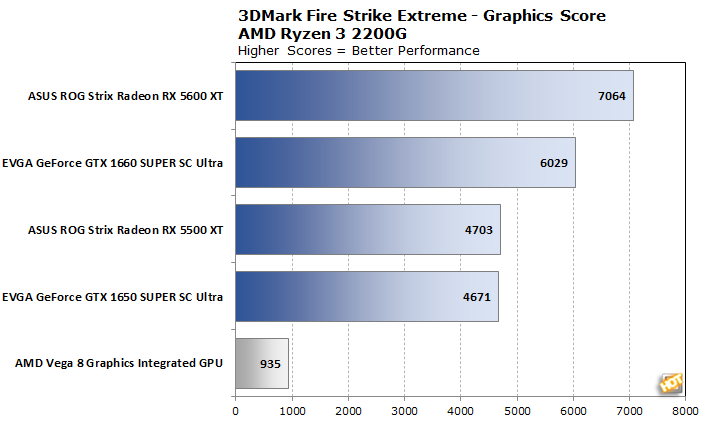

We ran two of 3DMark's most strenuous tests on our systems: Time Spy and Fire Strike Extreme. Since we used the same settings for the integrated GPUs on our processors as for the discrete cards, these results give us a rough estimate of how much performance even an inexpensive GPU adds. This should be pretty wild, so strap in.

As expected, the Intel integrated graphics processor is way, way behind any of these discrete cards. If you're trying to play any game more detailed than Solitaire on integrated Intel graphics, it's time to go to Amazon and buy one of these cards. Even the cheapest card in this shootout, EVGA's GeForce GTX 1650 SUPER SC Ultra, is more than ten times faster by every measure: both graphics tests and the overall graphics score.

The same is true for our ASUS ROG Strix Radeon RX 5500 XT, although this card is a little more expensive. If you refer back to our RX 5500 XT review, you'll see that 3DMark is not very kind to the smallest Navi GPU. However, despite a less powerful processor in our midrange test rig, the ASUS card is a bit faster than the Sapphire Pulse card we tested. Since both cards have the same maximum boost clock, that likely comes down to the beefy cooler on the ROG Strix.

As expected, the best overall score comes from the most expensive card on the list, ASUS's ROG Strix RX 5600 XT. In the first graphics test, it's a full 50% faster than the smaller Navi card, and it also delivers a good thumping to the EVGA cards. In our full RX 5600 XT review, this GPU was faster than even the similarly priced GeForce RTX 2060, and the ROG Strix here is around 7% faster than the Sapphire Pulse we reviewed at launch.

When we look at these same tests on the Ryzen 3 2200G, our options all fall in line again. Never mind that the Vega 8 graphics thump Intel's integrated GPU, even though the Ryzen was saddled with DDR4-3000 instead of the faster DDR4-3200 of our Core i5-9600K rig. Even the lowly EVGA GTX 1650 SUPER SC Ultra is pushing pixels around five times faster. By the time we get up to the ASUS ROG Strix RX 5600 XT, that factor stretches to eight times faster. All of these graphics cards represent a huge increase in performance over integrated solutions.

Fire Strike Extreme is also an incredibly punishing test, even today, and especially for midrange graphics cards like these. Let's take a look at the results.

Again, Intel's integrated graphics are just not meant for intensive 3D graphics. In this test, EVGA's GeForce GTX 1650 SUPER SC Ultra is once again eight to ten times faster than the integrated GPU. The EVGA GeForce GTX 1660 SUPER SC Ultra is quite a bit faster than the similarly priced ASUS ROG Strix Radeon RX 5500 XT. On the high end, though, ASUS gets the last laugh: the ROG Strix RX 5600 XT beats the EVGA GTX 1660 SUPER by 50%, a margin that exceeds its 44% higher price tag. The ROG Strix RX 5600 XT is more than just its big, beefy cooler and RGB bling; there's some serious value for the performance here, too.
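
The value argument comes down to a ratio of ratios: performance gained divided by price paid. Here is a minimal Python sketch using only the 50% and 44% figures quoted above; `relative_value` is a hypothetical helper of ours, and a single Fire Strike Extreme result is not a general performance-per-dollar verdict.

```python
# Relative value: does a performance lead outpace a price premium?
# Inputs are the percentages quoted for Fire Strike Extreme:
# about 50% more performance for about a 44% higher price.

def relative_value(perf_gain_pct: float, price_premium_pct: float) -> float:
    """Performance per dollar of the pricier card relative to the cheaper one.

    A result above 1.0 means the pricier card delivers more performance
    per dollar in the test the percentages came from.
    """
    perf_ratio = 1 + perf_gain_pct / 100
    price_ratio = 1 + price_premium_pct / 100
    return perf_ratio / price_ratio

value = relative_value(perf_gain_pct=50, price_premium_pct=44)
print(f"RX 5600 XT performance per dollar vs. GTX 1660 SUPER: {value:.3f}x")
```

A result above 1.0 means the faster card's lead outpaces its price premium; here it works out to roughly 4% more performance per dollar in this one test.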

As before, the Ryzen 3's pecking order is basically the same, but thanks to a weaker CPU with just four cores, no SMT, and a lower clock speed, these results are just a hair lower than those from the Intel rig. Once again, it's a very tight race between the $170 EVGA GeForce GTX 1650 SUPER SC Ultra and the $230 ROG Strix Radeon RX 5500 XT, which isn't good news for the Radeon. Fortunately, we play games and not 3DMark, so this result matters less than the gaming tests that follow. The Vega 8 again does better than Intel's integrated UHD 630, but we're still looking at between five and eight times the performance out of our discrete graphics solutions.

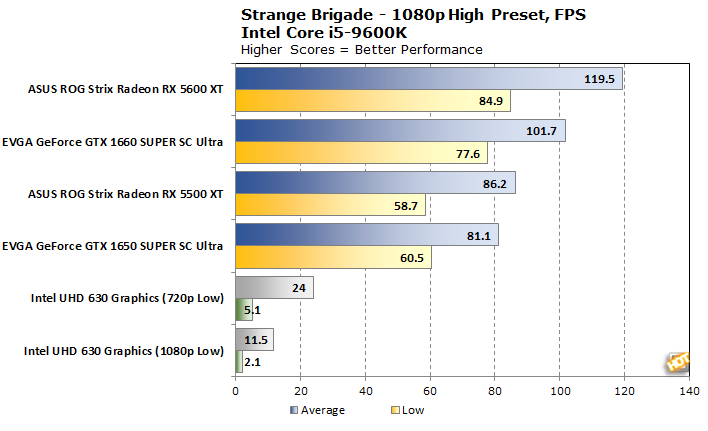

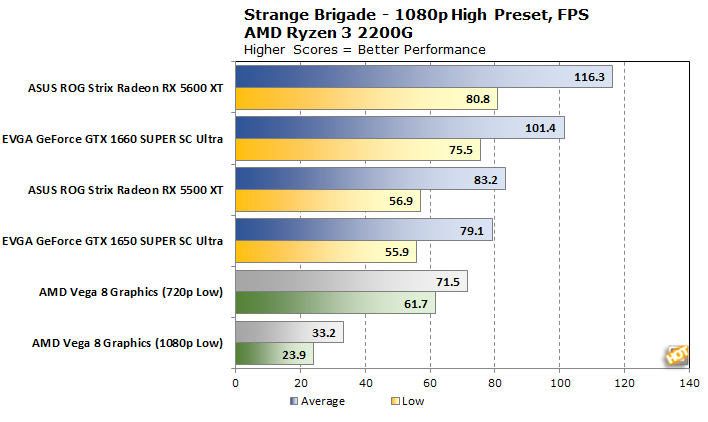

To start out our gaming tests, we'll take a look at our DirectX 12 benchmark, Strange Brigade. If you haven't played this title, take Left 4 Dead's four-player co-op and replace all the horror and zombies with 1930s British imperialism and over-the-top alliteration and humor. It's a pretty good game on its own. We ran the built-in benchmark at the High preset and reported the Average and Low fps results here.

As it turns out, this game isn't all that CPU-limited. There's a big swing between the $170 EVGA GeForce GTX 1650 SUPER SC Ultra and the $340 ASUS ROG Strix Radeon RX 5600 XT. The cards all slot in where you'd expect, though, with the similarly-priced ROG Strix Radeon RX 5500 XT and GTX 1660 SUPER SC Ultra sandwiched in between. For about the cost of an Xbox (for varying values of Xbox, between the Xbox One S Digital Edition and the Xbox One X) we turned our PC into a gaming machine that has absolutely no trouble with Strange Brigade.

For the Intel rig, the game isn't playable, even at 720p on low settings. Even the cheapest card here will let you bump up the resolution, crank the details, and exceed 80 frames per second on average. That's the difference between not playing the game (or not enjoying it if you try, anyway) and enjoying excellent visuals. For our Ryzen system, the game is at least playable at low settings and 720p. Still, the extra resolution and detail afforded by any of our graphics cards make each of them a worthwhile purchase.

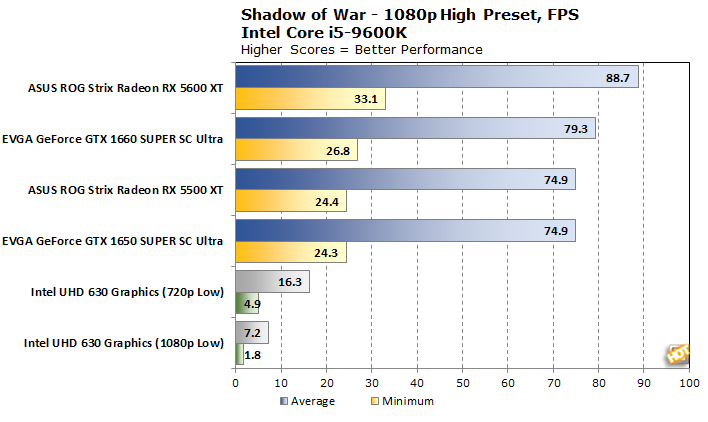

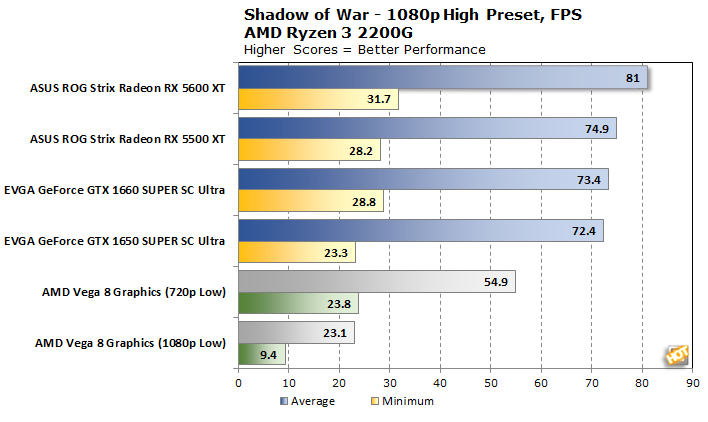

Middle-earth: Shadow of War is the second open-world action title in a series set as a prequel to the books in J.R.R. Tolkien's Lord of the Rings universe. The landscapes are varied and just beautiful to look at, though the tone is a bit darker than the first title, Shadow of Mordor. Let's see how it runs.

Again, we've got average and low frame rates. As it turns out, Shadow of War runs really well on any of our graphics cards. There's not as big a swing between the EVGA GTX 1650 SUPER SC Ultra and the ASUS ROG Strix Radeon RX 5600 XT in the averages. However, the more expensive Radeon has a distinct advantage in the low frame rates. As before, Shadow of War is not playable on Intel integrated graphics, so any of these cards represents big value to folks looking to play the latest games.

Our Ryzen 3 2200G is starting to show some of its weakness and leaves some performance on the table. Our budget system is around 12% slower than the Intel setup when the two PCs drive the ROG Strix Radeon RX 5600 XT. Interestingly, with the AMD CPU, the ASUS ROG Strix RX 5500 XT beats out the EVGA GTX 1660 SUPER SC Ultra, turning the tables from the Intel test platform. Otherwise, there aren't many surprises in the AMD system's test runs.

To this point, both the EVGA GeForce and ASUS ROG Strix graphics cards have acquitted themselves nicely. Beyond making games actually playable compared to integrated graphics, these cards are all faster than the stock versions we reviewed at launch. That's partly due to driver enhancements, but they're also all clocked quite a bit faster than the reference speeds defined by AMD and NVIDIA. Despite that, we haven't seen a hiccup or crash caused by heat yet. Let's see whether that trend continues.

In this next round, we'll take a deep dive into two big blockbusters: Shadow of the Tomb Raider and Gears 5. In particular, Gears is one of the biggest titles in terms of hype and attention over the last few months, bringing a big open world to the land of chest-high walls and set pieces. We've seen how it runs on high-end graphics cards, but this is the first time we'll see how it scales down to handle the midrange. Shadow of the Tomb Raider concludes the three-part Tomb Raider reboot that started in 2013, and each iteration of Lara Croft's series looked better than the last.

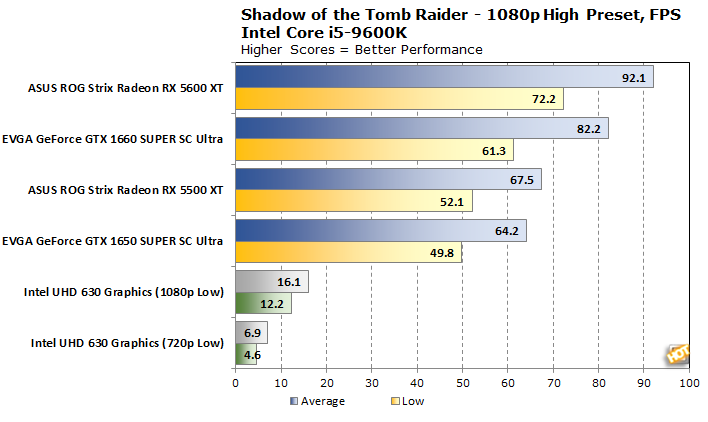

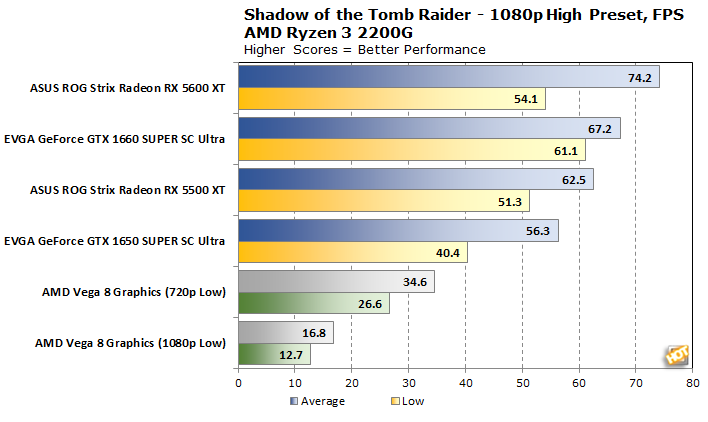

To test Shadow of the Tomb Raider, we used the built-in benchmark, which cycles through several scenes, including a couple of villages and a mountainside, in a variety of lighting conditions. Once again, we tested our integrated graphics at 720p on the lowest settings, while for the discrete cards we cranked the detail up to the maximum preset and increased the resolution to 1080p.

The Intel system was once again totally incapable of producing playable frame rates without the aid of a discrete card. Once that card is in place, however, all four of our contestants were perfectly capable of delivering solid average frame rates. The EVGA GeForce GTX 1650 SUPER SC Ultra started at 64 frames per second, and the bidding increased from there. The ASUS ROG Strix Radeon RX 5500 XT wasn't able to keep up with the EVGA GTX 1660 SUPER SC Ultra, which is a little disappointing considering their similar price point. On the other hand, the ROG Strix Radeon RX 5600 XT justified its higher price with performance high enough that it would likely satisfy most gamers at 1440p, not just 1080p.

On our Ryzen 3 2200G, it once again seems that the faster cards outstrip the CPU's ability to feed them data to generate frames. While the EVGA GTX 1650 SUPER SC Ultra didn't hit a 60 fps average, it was darn close. On the other hand, the CPU's slower processing leveled out the frame rates toward the higher end, and the ASUS ROG Strix RX 5600 XT gave up 20 fps compared to the Intel system. We're starting to see a pattern: in CPU-intensive games, a slower CPU can let some GPU horsepower go to waste.
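
This pattern is often explained with a simple bottleneck model. Here is a minimal Python sketch, assuming the delivered frame rate is just the lower of a CPU-limited rate and a GPU-limited rate. The numbers are hypothetical and not taken from our charts, and real games overlap CPU and GPU work, so treat this as a rough mental model rather than how any engine actually schedules frames.

```python
# Simplified bottleneck model (an assumption, not real engine behavior):
# each frame needs CPU work and GPU work, and whichever side is slower
# caps the frame rate the system can deliver.

def delivered_fps(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """Frame rate delivered when either the CPU or the GPU may be the bottleneck."""
    return min(cpu_limit_fps, gpu_limit_fps)

# Hypothetical caps for illustration only (not measured results).
cpu_caps = {"fast CPU": 120.0, "slow CPU": 70.0}
gpu_caps = {"budget GPU": 60.0, "faster GPU": 100.0}

for cpu, cpu_fps in cpu_caps.items():
    for gpu, gpu_fps in gpu_caps.items():
        print(f"{cpu} + {gpu}: {delivered_fps(cpu_fps, gpu_fps):.0f} fps")
```

In this toy model, the faster GPU adds 40 fps on the fast CPU but only 10 fps on the slow one, which mirrors, in simplified form, the diminishing returns we see on the Ryzen system.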

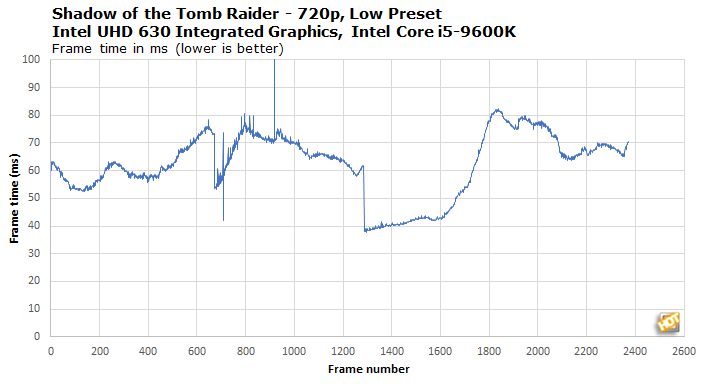

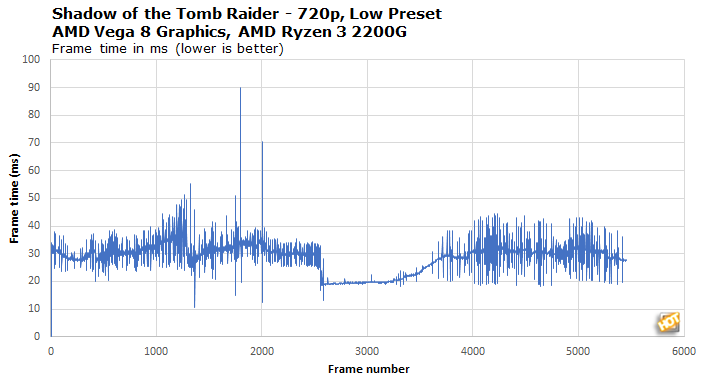

Since there are several scenes involved here, we thought it'd be appropriate to look at frame times in the newest Tomb Raider's benchmark. These graphs show how long each frame took to render over the course of the run. What we hope to see is a relatively tight grouping low on the scale, since lower frame times mean higher frame rates: 16.7 milliseconds equates to roughly 60 fps, while a relatively minor decrease to 12.5 milliseconds works out to 80 fps, and so on. A large spike in frame time represents an extended pause, which detracts from the experience, so we're hoping for as few of those as possible.
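
Frame time and frame rate are reciprocals: a frame rate in fps corresponds to a per-frame budget of 1000 / fps milliseconds. Here is a minimal Python sketch of the conversion; the function names are our own, for illustration.

```python
def frame_time_ms_to_fps(frame_time_ms: float) -> float:
    """Instantaneous frame rate implied by a single frame's render time."""
    return 1000.0 / frame_time_ms

def fps_to_frame_time_ms(fps: float) -> float:
    """Per-frame time budget, in milliseconds, needed to sustain a frame rate."""
    return 1000.0 / fps

# Frame-time budgets for a few common frame-rate targets.
for target in (40, 60, 80):
    print(f"{target} fps -> {fps_to_frame_time_ms(target):.1f} ms per frame")
```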

This first group is from our Intel system. There are a couple of interesting trends across all four graphs. The first is a big spike around a quarter of the way through the run. It affected Radeons and GeForces alike, and also seems to have affected the Intel system. This manifested itself as an obvious hitch in playback. Since it happened on every configuration, this appears to be an issue with the game, not drivers or GPU architecture. Also, the last quarter of the run looks kind of fuzzy, with frame times oscillating in a relatively tight window. That looks worse than it really is. For example, the ROG Strix RX 5600 XT's frame times never got higher than 15 milliseconds or so, so its frame rate never dropped below 60 fps. Even the EVGA GeForce GTX 1650 SUPER SC Ultra stayed below 25 milliseconds throughout, meaning at worst you might see an instantaneous frame rate of 40 fps.
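
The way these graphs are read (the slowest frame sets the worst instantaneous frame rate, and tall isolated bars are hitches) can be sketched in a few lines of Python. This is a minimal illustration, assuming a trace of per-frame render times in milliseconds; the trace itself and the 33.3 ms spike threshold are hypothetical choices, not values from the benchmark or its tools.

```python
# Summarize a frame-time trace the way a frame-time graph is read.
# NOTE: these trace values are hypothetical, chosen only to illustrate
# a single hitch; they are not measurements from this review.
trace_ms = [14.2, 13.8, 14.5, 41.0, 14.1, 13.9, 15.0, 14.7]

def summarize(frame_times_ms, spike_threshold_ms=33.3):
    """Return (average fps, worst instantaneous fps, indices of spike frames).

    spike_threshold_ms is an assumed cutoff (roughly 30 fps), not a standard.
    """
    elapsed_s = sum(frame_times_ms) / 1000.0
    # Average fps is frames divided by elapsed time, so slow frames count
    # for as long as they actually stayed on screen.
    avg_fps = len(frame_times_ms) / elapsed_s
    # The slowest single frame sets the worst instantaneous frame rate.
    worst_fps = 1000.0 / max(frame_times_ms)
    spikes = [i for i, t in enumerate(frame_times_ms) if t > spike_threshold_ms]
    return avg_fps, worst_fps, spikes

avg_fps, worst_fps, spikes = summarize(trace_ms)
print(f"average {avg_fps:.1f} fps, worst {worst_fps:.1f} fps, spikes at {spikes}")
```

Computing the average as total frames over total time, rather than averaging per-frame fps values, keeps a single long hitch from being diluted by the many fast frames around it.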

All of our cards came out of this test smelling like roses. Let's see how they handle somewhat adverse conditions, like when the cards are faster than the CPU feeding them. Our faster cards left a good amount of performance on the table since the Ryzen 3 2200G couldn't quite keep up.

All of the cards had little hitches in their giddyups, so to speak. The weaker graphics cards had a bit of a problem with the final scene, which takes place in a South American marketplace. The frame times again oscillated like they did on the Intel system, but the magnitude was much greater. This is almost certainly caused by the CPU struggling to process the scene and keep the graphics card fed.

The EVGA cards suffered a bit worse than their ASUS counterparts when it came to outright stutters. This is because, on the Ryzen 3 2200G system, the mountain scene right at the start didn't draw in completely. There was obvious texture pop-in as the camera panned away from a mountain peak, and it looked pretty ugly. This was consistent across multiple runs and never happened on the Intel system. It also never happened with the Radeons, so it's unclear exactly what's going on. For whatever reason, AMD's CPU apparently had an easier time with the AMD-based graphics cards.

Gears 5 is one of my personal favorite games of 2019. In developer The Coalition's latest title, classic Gears gameplay meets an open world full of mystery and conspiracy. It also doesn't hurt that, thanks to Unreal Engine 4 and some excellent art, it looks great. It ran quite nicely on higher-end hardware, too, so let's see how it scales downward.

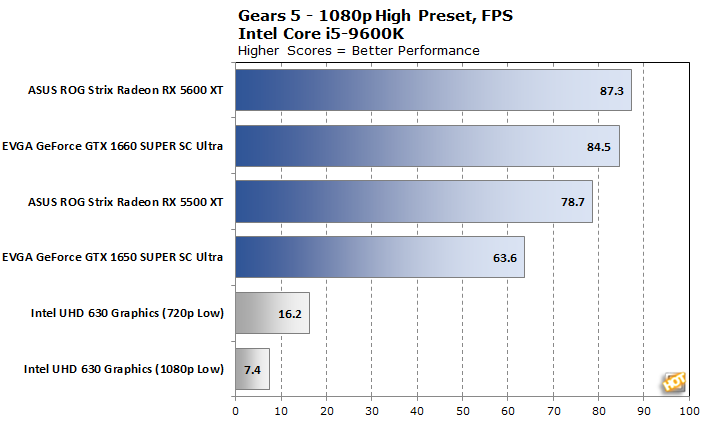

On the Intel system, the different cards performed according to their price points. EVGA's GeForce GTX 1660 SUPER SC Ultra was just about 10% faster than the ASUS ROG Strix Radeon RX 5500 XT, and the ASUS ROG Strix RX 5600 XT was a bit faster than the EVGA GTX 1660 SUPER. All of the cards were very comfortably above 60 fps except for the cheapest card on the chart, EVGA's GTX 1650 SUPER SC Ultra. By this point in the testing, the trend seems to have locked in, and every card winds up right about where it has been all along.

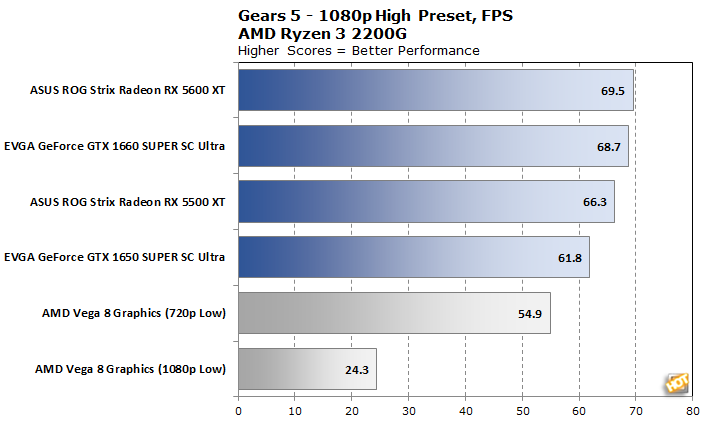

When we move to the Ryzen 3 2200G, we once again see diminishing returns. On the Core i5-9600K, two cards were north of 80 fps, but this time none of them even reaches 70 fps. It seems like the extra performance on the ROG Strix RX 5600 XT would be going to waste here, but the same is also true of the EVGA GeForce GTX 1660 SUPER SC Ultra and even the ASUS RX 5500 XT. The smaller Radeon ran 16% slower on the budget CPU, so it seems that Gears is at least a little bit CPU-bound. Let's see what the frame time graphs look like.

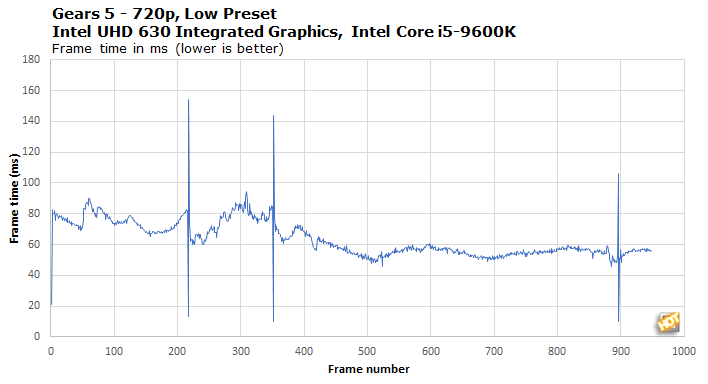

The only graphics card that the Core i5-9600K didn't totally love was the cheaper EVGA card and its GeForce GTX 1650 SUPER GPU. At several points throughout the run, especially early on when the scenery changes the most, the frame times jumped up to 18 to 20 milliseconds, which means the frame rate briefly dipped to roughly 50 to 55 fps. The average was still over 60, and gameplay never felt sluggish on the smaller GeForce, but there's a very obvious difference stepping up to the EVGA GTX 1660 SUPER SC Ultra or the ROG Strix RX 5500 XT. Frame rates went from mostly fluid to completely smooth. To us, it's worth spending an extra $60 (if it's in the budget) to step up to a faster card. EVGA does have its famous 90-Day Step-Up program, so you could perhaps do that yourself later on if funds are tight at the time of purchase.

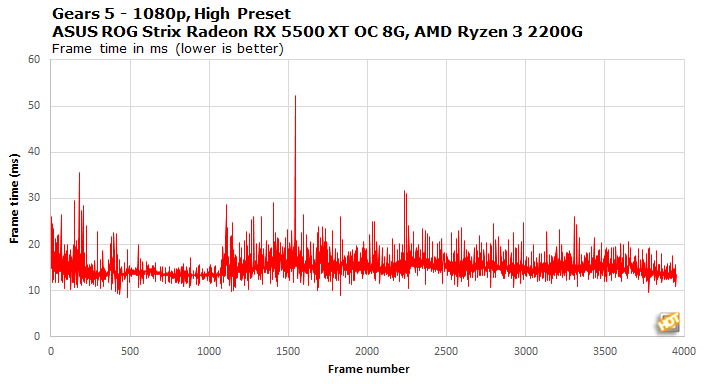

Lastly, here's the final proof that you can spend too much on a graphics card for a $100 CPU. In this case, the Ryzen 3 2200G always finds a way to drop below 60 fps, even with the fastest ASUS card in our test. Fortunately, the graphics options in Gears 5 include in-game explanations of how they affect performance and whether they're more influenced by the CPU or the GPU. With a little judicious tweaking, solidifying frame rates should be possible. Still, even in this title, we'd much rather be gaming with a discrete graphics card that lets us crank up the details than on integrated graphics, where frame times were higher overall. That said, it may not be worthwhile to buy anything more powerful than a GTX 1660 SUPER or Radeon RX 5500 XT for a budget processor.

Next up we'll take a look at power and noise for each graphics card, and summarize our findings (spoiler: it's well worth it to upgrade from integrated graphics, and both EVGA and ASUS have great options with which to do it).