How Many CPU Cores Do You Need For Great PC Gaming?

These days, just about all processors are running at or near the ragged edge of their clock speed capabilities. AMD's current Zen 3 family has four CPU models separated by a mere 300 MHz, which works out to a meager six percent or so. A Ryzen 5 5600X has six cores with 12 hardware threads and a maximum clock speed of 4.6 GHz, while the Ryzen 9 5950X has more than twice the cores and threads, 16 and 32 respectively, but maxes out at 4.9 GHz. Is a Ryzen 9 5950X more than twice as fast in games, though? Even assuming there are no other system bottlenecks, it's hard to say. As you might imagine, not all game engines are built to fully utilize 32 threads, and that's where this article comes in handy, to help you sort things out.
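To put those two gaps side by side, here is a quick back-of-the-envelope sketch in Python. It uses only the boost clocks and core counts quoted above; the variable names are ours and purely illustrative.

```python
# Boost clocks (GHz) and core counts quoted in the paragraph above
boost_5600x, cores_5600x = 4.6, 6
boost_5950x, cores_5950x = 4.9, 16

# Relative clock speed advantage of the 5950X, in percent
clock_gain_pct = (boost_5950x / boost_5600x - 1) * 100

# How many times more cores the 5950X has
core_ratio = cores_5950x / cores_5600x

print(round(clock_gain_pct, 1))  # 6.5
print(round(core_ratio, 2))      # 2.67
```

In other words, the top chip brings roughly 2.7 times the cores for about 6.5% more clock speed, so any large gaming advantage has to come from a game actually using the extra cores.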

Bottlenecks, A Balanced System And How Many CPU Cores You'll Actually Need

Building a gaming PC is an exercise in alleviating bottlenecks. If a processor is too slow, it can't drive a graphics card to its fullest potential, leaving performance on the table. It would be unwise to put a Core i3-class CPU in a gaming PC with a GeForce RTX 3090, because it's imbalanced and a lot of GPU performance will go untapped. By the same token, if we only populate one memory channel, a very fast processor could be starved for memory bandwidth. On the other end of the spectrum, lower-end graphics cards trying to drive a 4K display at its native resolution will likely falter. These considerations are why we've published system build guides at HotHardware in the past. Balanced system builds make the most of your compute, memory, graphics and rendering resources.

The question we're asking here, however, is pretty simple. How many cores are enough for a gaming PC? First of all, asking this without also knowing the target rendering resolution is meaningless. We're focusing here on 1,920 x 1,080 (1080p Full HD) and 2,560 x 1,440 (1440p or Quad HD). These are by far the two most popular resolutions according to Steam's PC hardware survey, with 1080p representing a full two-thirds of all PCs with the seminal online gaming store installed.

By the same token, targeting a resolution is great, but the graphics card is also a consideration because it might not be able to handle rendering at the display's native resolution. Unfortunately, shortages have made graphics cards hard to obtain, and we're not blind to that fact. Still, to give an accurate view for the future, we're looking at current-model GPUs. Based on their launch MSRP, the $500-550 GeForce RTX 3070 and $679-700 Radeon RX 6800 XT represent two slightly different segments of the market, but both still occupy the higher end of the graphics card scale. Yes, we know they're very hard to get at MSRP, but that's currently a constant we have no control over, and we're answering questions of science here.

On the following pages, we'll be answering the question of how many CPU cores are enough four ways: at 1080p with the GeForce RTX 3070 and Radeon RX 6800 XT, and at 1440p with those same cards. We're also going to take an abbreviated look at 3,840x2,160 (2160p, UltraHD / 4K). However, even with the games and graphics cards we've selected here, 4K gaming performance is going to be dictated by the graphics card far more than the CPU, even in 2021 with these relatively powerful graphics cards.

Building Our Test Bench

We're testing a wide array of processor configurations. To do this, we turned to two different processors from AMD's high-end Ryzen 5000 family. The Ryzen 7 5800X has eight cores, 16 threads via simultaneous multi-threading (SMT), and a maximum boost clock of 4.7 GHz. The Ryzen 9 5900X has 12 cores, 24 threads, and a slightly higher boost clock at 4.8 GHz. The ASUS TUF Gaming X570-Plus (Wi-Fi) allows us to disable cores, multi-threading, and even an entire chiplet on the Ryzen 9 5900X, so we used that processor for most of our tests. However, since the 5900X's dies are limited to six cores apiece, we used the Ryzen 7 5800X for our 8C/16T configuration. The 100 MHz difference in maximum boost speed is almost entirely lost in the noise, though the dual-die Ryzen 9 5900X does have double the L3 cache.

The rest of our test system is pretty standard PC enthusiast fare. Main memory is 32 GB of Corsair Vengeance LPX DDR4-3600 RAM in a 2 x 16 GB configuration. Our test subject games were installed on a 1 TB WD Blue SN550 M.2 SSD, while the boot drive was handled by a 1 TB Sabrent Rocket 4.0 M.2 SSD. Our power supply is a Corsair RM 750X ATX, our cooler is a Deepcool Castle 280Ex, and the whole system lives inside a Fractal Design Meshify S2 with its stock fan configuration.

Our Game Performance Testing Methods

As for the games themselves, we looked for a nice collection of recent PC exclusives and cross-platform triple-A titles, some of which we've reviewed extensively before. We ended up with 11 benchmarks across nine titles:

- Ashes of the Singularity: Escalation

- Sid Meier's Civilization VI

- Cyberpunk 2077

- Far Cry New Dawn

- Gears 5

- Middle-earth: Shadow of War

- Metro Exodus

- Shadow of the Tomb Raider

- Watch Dogs: Legion

Each test was run on all six core count and SMT configurations. That includes 12, 8, 6, and 4 cores with SMT enabled, and again with 6 and 4 cores without SMT. This gives us a look at 4, 6, 8, 12, 16, and 24 hardware threads. If you've not yet done the math, across both graphics cards and both main resolutions it works out to 264 total data points and a minimum of 792 benchmark runs. We also ran a couple of our more interesting results at 4K to see if the graphics cards became a bottleneck. Data can be fun, so we hope you enjoy copious amounts of information!
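For anyone who wants to check the math, here is a minimal sketch in Python. It assumes the 264 data points come from 11 benchmarks x 6 CPU configurations x 2 graphics cards x 2 resolutions, and that each data point is run at least three times; the three-run figure is our inference from 792 / 264, not something spelled out above.

```python
# (cores, smt_enabled) for each of the six CPU configurations tested
CONFIGS = [(12, True), (8, True), (6, True), (4, True), (6, False), (4, False)]


def hardware_threads(cores, smt_enabled):
    """Each core exposes two hardware threads with SMT on, one with it off."""
    return cores * 2 if smt_enabled else cores


BENCHMARKS = 11      # 11 benchmarks across nine titles
GPUS = 2             # GeForce RTX 3070 and Radeon RX 6800 XT
RESOLUTIONS = 2      # 1080p and 1440p
RUNS_PER_POINT = 3   # assumption: inferred from 792 / 264

thread_counts = sorted(hardware_threads(c, s) for c, s in CONFIGS)
data_points = BENCHMARKS * len(CONFIGS) * GPUS * RESOLUTIONS
benchmark_runs = data_points * RUNS_PER_POINT

print(thread_counts)   # [4, 6, 8, 12, 16, 24]
print(data_points)     # 264
print(benchmark_runs)  # 792
```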

How Many Cores are Enough for 1080p Gaming?

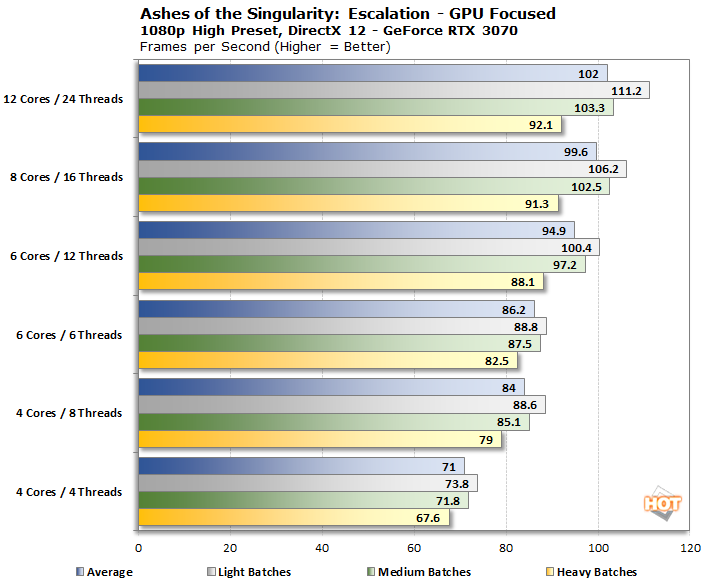

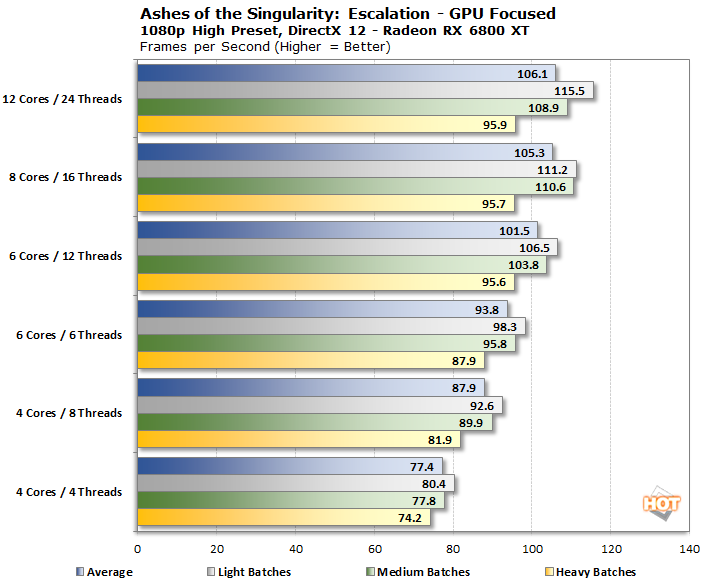

We're going to look at and analyze our results by resolution, rather than by game, because of the effect rendering workload has on both CPU and GPU demand. So, with that in mind, we'll start with our lowest resolution, which should expose the differences between core configurations the most.

Ashes of the Singularity has been a showcase game for AMD over the years. Back in the early GCN days, models like the Radeon HD 7950 had an advantage over NVIDIA graphics cards in this game due to DirectX 12's Asynchronous Compute features, which performed better on AMD hardware. These days, it's still a pretty hefty test for processors, and it includes two different benchmarks. Let's take a look at the latest version, dubbed Escalation.

In the GPU-focused test, we get four values per configuration: the average in frames per second, and also how the game performed with different "batches" of draw calls. Lighter (or presumably, smaller) batches of draw calls tend to perform better than more strenuous batches. There's definitely a "knee" of sorts in our charts, where having more cores doesn't gain you much performance, but losing hardware threads below a certain level starts to have a real drag on performance.

Here, we can immediately see that a quad-core processor is a performance drain on both our GeForce RTX 3070 and Radeon RX 6800 XT. It's far worse without SMT (Simultaneous Multi-Threading), too. Overall, the sweet spot in this benchmark at 1080p seems to be 12 threads. If we look at our 6-core / 12-thread configuration as a simulated Ryzen 5 5600X, that helps us put a little perspective on it. That's a pretty mid-range processor with potent single-threaded performance and a lot of multi-threaded grunt.

Especially if you want to get the most out of a high-refresh 1080p display, it makes sense to get more than the bare minimum number of cores. Both the GeForce RTX 3070 and Radeon RX 6800 XT were stuck somewhere in the 70 FPS range on average, though the Radeon was slightly faster. We'd expect that, considering the (MSRP) price disparity between the two. Let's see how the CPU-focused test fares.

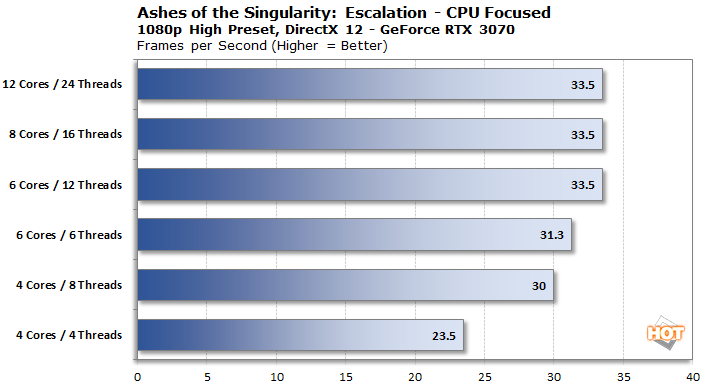

Once again we see a knee in the graphs, and it's very obvious here that while six cores are enough, they really need SMT to get all the way there. The performance fall-off at 4C/4T is particularly stark. Regardless, the wall we hit at 6C/12T is a full 36% faster than our lowest configuration.

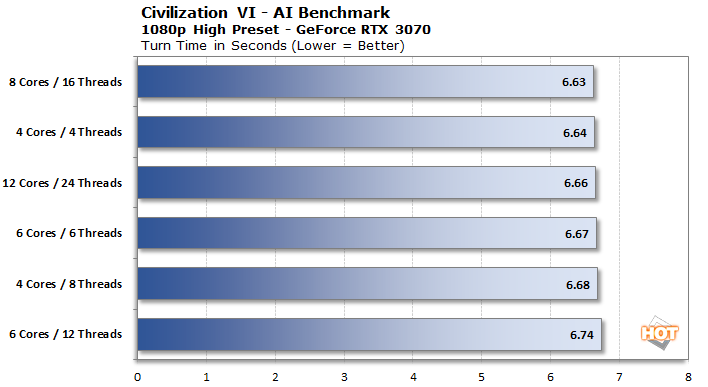

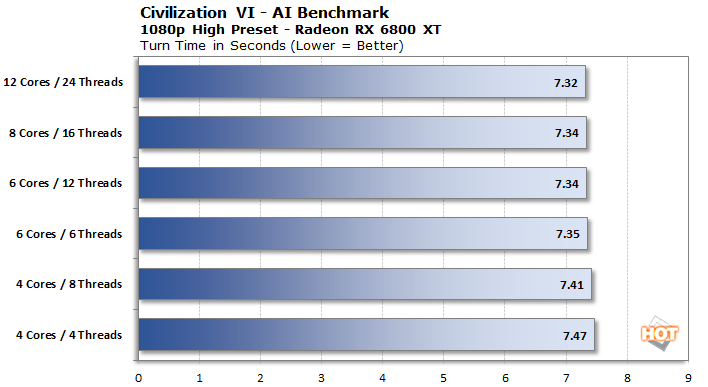

Next up is Sid Meier's Civilization VI, a title that at launch was known for being a bit of a CPU hog, at least in terms of CPU non-player turn times taking a while. The game has two benchmarks, just like AotS, and again they're separated between CPU and GPU functions. Let's take a look at the AI turn-time test first...

These results are pretty unexpected. It seems that when it comes to taking its AI turns, Civ VI is very limited in the number of threads it will use. We had hoped that more threads meant more simultaneous simulations while it did its thinking, but apparently 4C/4T is enough to do its thing. What's more amusing is how much the graphics card's architecture affects CPU turn times. We did not expect that the latest RDNA 2 chip in the Radeon RX 6800 XT would add around 10% additional response time to turns. It's not like any of these are slow per se, it was just unexpected.

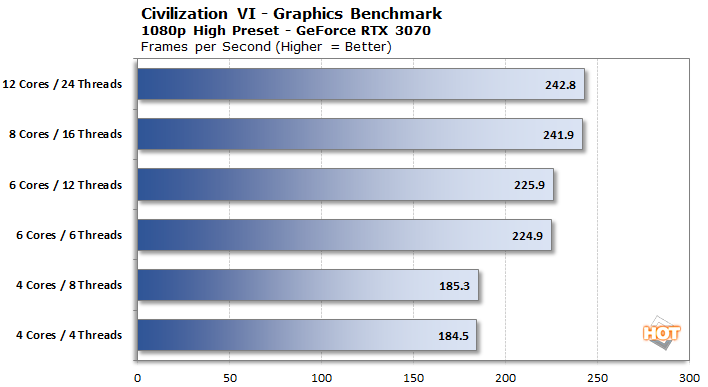

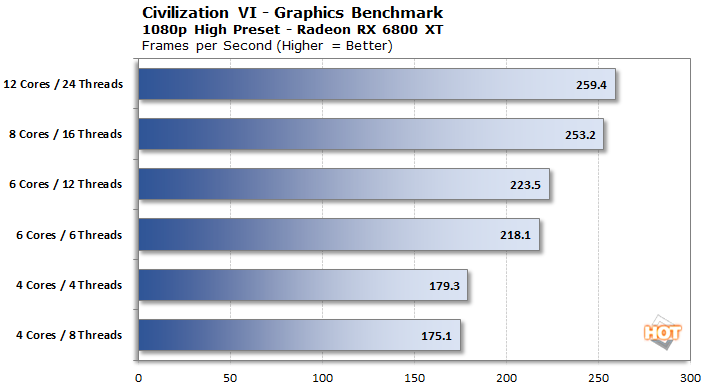

The graphics test in Civilization VI is far more CPU-dependent than the AI test, interestingly enough. These charts have more of a stair-step effect to them, which makes us think that the game doesn't efficiently utilize AMD's SMT threads, something that Cyberpunk 2077 famously eschewed at launch. On both of our graphics cards at 1080p, we'd max out most high-refresh monitors with a lowly 4C/4T processor, but if you need every possible frame in order to play Civ on a 1080p 240 Hz display, you should pony up for a Ryzen 7 5800X or something similar.

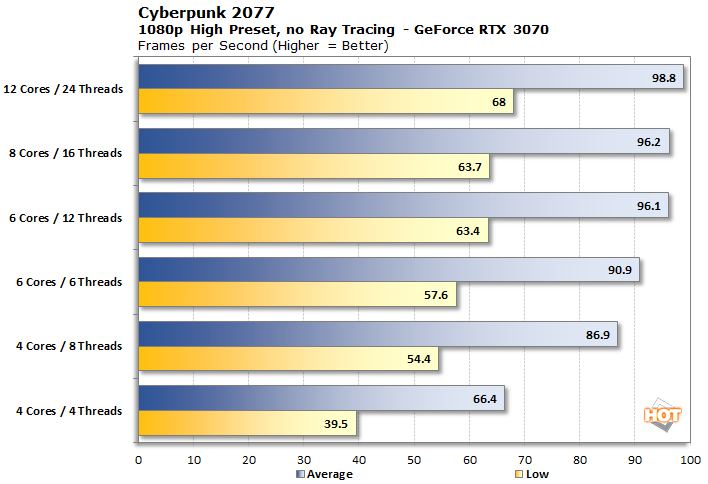

Next up is Cyberpunk 2077. We've made a lot of racket about performance in this title, covering performance in depth both at launch and after the first major patch. The title has had some more updates since then, and is now up to version 1.22. Unfortunately, bugs still exist and cars still appear out of thin air to run us over in a city crosswalk. Let's see how this title scales down to a lowly non-SMT quad core.

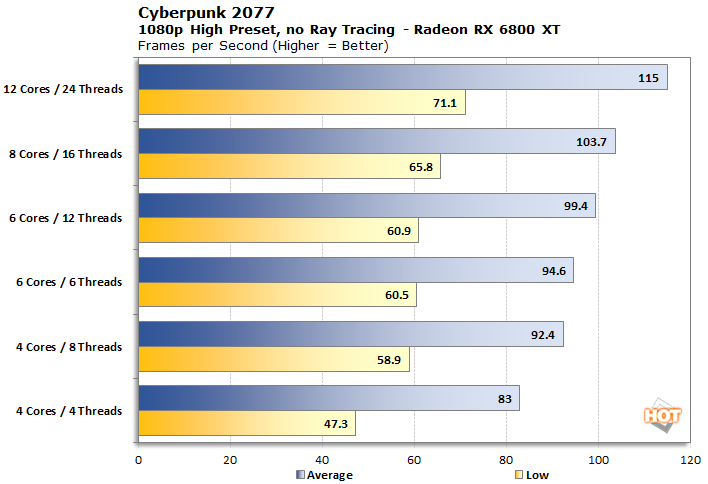

This title scales very well up to 12 cores and 24 threads on the Radeon RX 6800 XT, but less so on the GeForce RTX 3070. It seems that CD Projekt Red has finally delivered the performance improvements that multiple binary hacks exposed and just turned on SMT for all CPUs. That's especially obvious in the quad-core bracket at the bottom of each chart, where the GeForce RTX 3070 enjoys a 25% uptick just by enabling extra threads. The Radeon RX 6800 XT is similarly affected, but the change is closer to 12% at 1080p. On the high end, how many cores are enough depends on your monitor: a 60 Hz gamer could get by on 4C / 8T, if we look at the low frame rates, whereas gamers that enjoy higher refresh rates will get more from each step up the core and thread-count ladder.

Examining Frame Times And The Effect Of CPU Core And Thread Counts

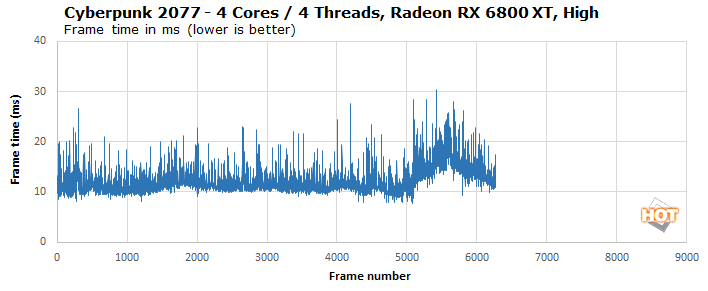

Let's take a short break from average and low frame rates to look at frame times for a moment. We know the 4C / 4T configuration is the slowest of all of them, but what does it feel like? Since Cyberpunk 2077 is our lone manual test, and because we capture our manual tests with CapFrameX, we can take a look at individual frame times. We needed to dig deeper into this game's performance. An average of 83 FPS seems pretty good for 4 cores, but it didn't feel that smooth in practice. What gives?

This is actually pretty educational. Despite an average frame rate of 83, the game spent an awful lot of time with individual frames spiking past the 20 millisecond mark. By the way, 20 milliseconds equates to a frame rate of 50 FPS. All of this "yo-yo" motion makes the game feel like it's not running particularly smoothly, and on our FreeSync monitor it did feel a little jumpy. Normally FreeSync prevents tearing and makes lower frame rates feel smooth, but that's just not possible when the game is tanking and recovering repeatedly. Let's take a look at one of our 12C / 24T runs in comparison.

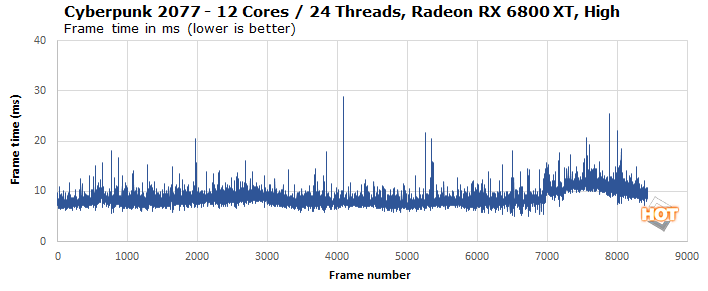

This is much smoother, and it shows in the low frame rate reported by CapFrameX in our frame rate charts above. There's a little bit of jumping past 20 milliseconds, but Cyberpunk's frame times generally stay below 14 milliseconds or so, which results in a low frame rate (the CapFrameX 1% low) of over 70 FPS. The game felt much smoother this way. So this is definitely something to keep in mind: just because an average frame rate indicates that a game is running well doesn't mean it's really running as smoothly as that number suggests. An average FPS is a single data point representing thousands of frames, and can't be taken as the final judgement of smoothness. A frame time chart like this, however, is a better indicator.
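To make the point concrete, here is a small illustrative sketch in Python. The two frame-time traces are made up for the example and are not our captured data, and the 1% low here is computed as the FPS equivalent of the 99th-percentile frame time, which is one common definition; we are not claiming it matches CapFrameX's exact method.

```python
def frame_time_to_fps(frame_time_ms):
    """Convert one frame's render time in milliseconds to an equivalent FPS."""
    return 1000.0 / frame_time_ms


def average_fps(frame_times_ms):
    """Frames rendered divided by total elapsed time in seconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """FPS equivalent of the 99th-percentile frame time (nearest-rank method)."""
    ordered = sorted(frame_times_ms)
    rank = (99 * len(ordered) + 99) // 100  # ceil(0.99 * n) using integer math
    return frame_time_to_fps(ordered[rank - 1])


# Hypothetical traces: both take 1,200 ms to render 100 frames
steady = [12.0] * 100                       # every frame takes 12 ms
spiky = [10.0, 10.0, 10.0, 10.0, 20.0] * 20  # every fifth frame spikes to 20 ms

print(frame_time_to_fps(20.0))  # 50.0
for name, trace in (("steady", steady), ("spiky", spiky)):
    print(name, round(average_fps(trace), 1), round(one_percent_low_fps(trace), 1))
# steady 83.3 83.3
# spiky 83.3 50.0
```

Both traces report the same 83.3 FPS average, but the spiky one keeps dipping to the equivalent of 50 FPS, which is exactly the kind of behavior an average alone hides.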

Stick with us, as we're only about half-way through our first resolution. Next up we'll hit the rest of our 1080p tests and move on to QHD 1440p.