Meteor Lake Architecture Revealed: AI, Tiles And The Future Of Intel Core CPUs

Intel’s First AI-Infused Consumer CPU

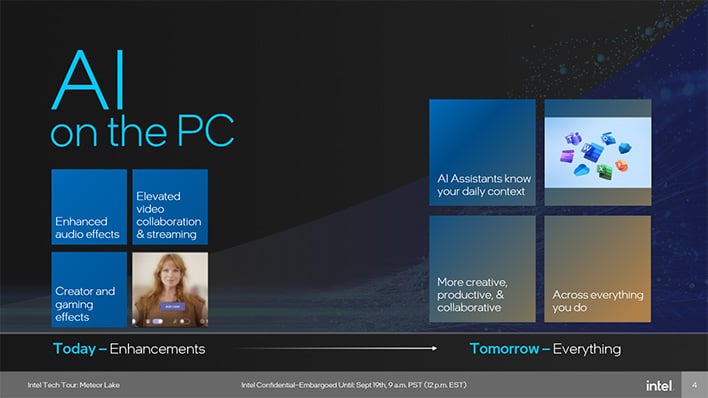

There’s no avoiding AI in the future of computing, and Intel is attempting to lead this PC transformation. Many AI workloads today are executed in the cloud. That affords massively scalable compute, but it requires Internet connectivity, adds latency, raises privacy concerns, and can be expensive for software vendors, who have to rent compute power to run their applications. Adding client and edge computing to the mix can remedy, or at least take steps towards resolving, each of these pain points.

Right now, AI on the PC has some fairly limited use cases. It can be used for audio effects like background noise suppression or to blur video backgrounds on conference calls, but Intel sees much more potential. With more local power, AI could know the context of your day-to-day life to improve recommendations and handle scheduling, amplify your creativity, and otherwise help you be more productive by taking care of more menial tasks for you.

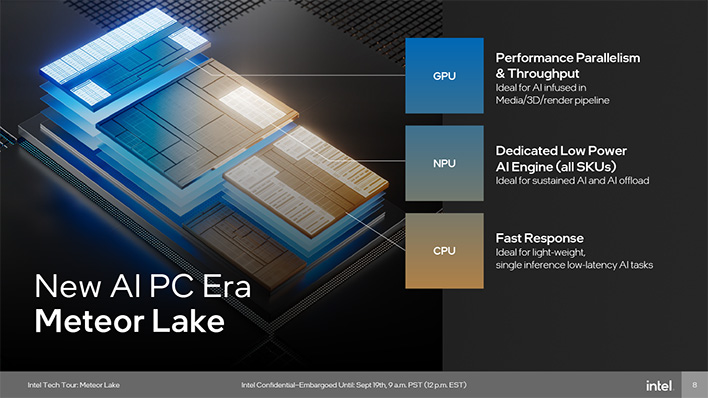

Meteor Lake is helping to lay that foundation, but while it sports a dedicated NPU for the first time, that’s not the whole AI picture. Different kinds of AI workloads benefit from being run on different types of hardware. For example, while the NPU is great for sustained AI workloads, the GPU may still be a better option for a lot of media and 3D/render pipeline work, such as generative AI. Conversely, the CPU with VNNI and other instructions is more than adequate to return low-latency single-inference requests.

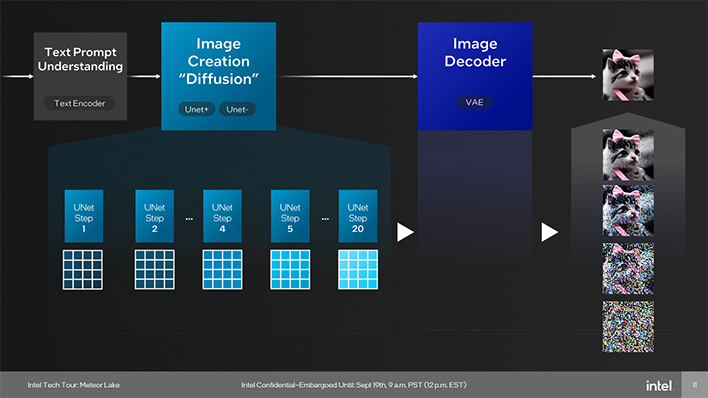

We can see how the different IP might impact a workload with Stable Diffusion. This AI model takes a text prompt as input, then creates an image through “diffusion.” The image creation process starts as pure noise. Step by step, the denoising network (the Unet) then pulls a low-resolution image out of the noise, guided by its understanding of the prompt. Finally, the end image is decoded at a higher resolution.

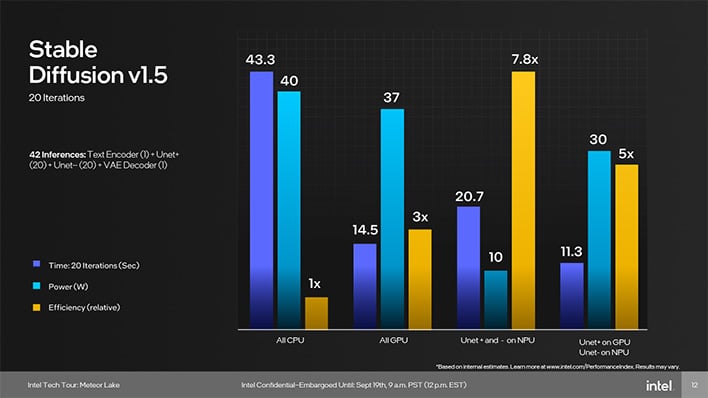

This chart shows different metrics to evaluate how well Stable Diffusion performs running entirely on the CPU, entirely on the GPU, with the Unet offloaded to the NPU, and with Unet+ and Unet- split between the GPU and NPU. When the GPU is engaged, it can return very fast results, but its power usage is nearly as high as the all-CPU approach. Conversely, the NPU is very efficient, but not as fast as the GPU. Ultimately, it may make sense to use the hybrid GPU+NPU approach to get the fastest result while using a moderate amount of energy.
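The trade-off described above comes down to the fact that energy is average power multiplied by run time, so the fastest configuration is not automatically the most efficient one. The following is a minimal sketch of that comparison. The `RunResult` type, the helper names, and every number in `RUNS` are invented placeholders chosen only to mirror the qualitative ordering in the text; they are not values from Intel's chart.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RunResult:
    """One hypothetical Stable Diffusion run on a given hardware mix."""
    name: str
    seconds: float    # time to produce the image
    avg_watts: float  # average package power during the run

    @property
    def joules(self) -> float:
        # Energy = average power x time.
        return self.seconds * self.avg_watts


# PLACEHOLDER numbers, not measurements: they only reproduce the ordering
# described in the article (GPU fast but power hungry, NPU frugal but slower).
RUNS = [
    RunResult("all CPU", 40.0, 40.0),
    RunResult("all GPU", 15.0, 37.0),
    RunResult("Unet on NPU", 20.0, 10.0),
    RunResult("Unet+/Unet- split across GPU+NPU", 12.0, 20.0),
]


def fastest(runs: list[RunResult]) -> RunResult:
    return min(runs, key=lambda r: r.seconds)


def most_efficient(runs: list[RunResult]) -> RunResult:
    return min(runs, key=lambda r: r.joules)


def fastest_within_energy_budget(
    runs: list[RunResult], max_joules: float
) -> Optional[RunResult]:
    """Pick the quickest run whose total energy fits the budget, if any."""
    eligible = [r for r in runs if r.joules <= max_joules]
    return min(eligible, key=lambda r: r.seconds) if eligible else None


if __name__ == "__main__":
    for run in RUNS:
        print(f"{run.name}: {run.seconds:.0f} s, {run.joules:.0f} J")
    print("fastest:", fastest(RUNS).name)
    print("most efficient:", most_efficient(RUNS).name)
```

With real measurements substituted for the placeholders, the same three helpers would show whether the hybrid approach actually lands in the "fast with moderate energy" spot on a given system.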

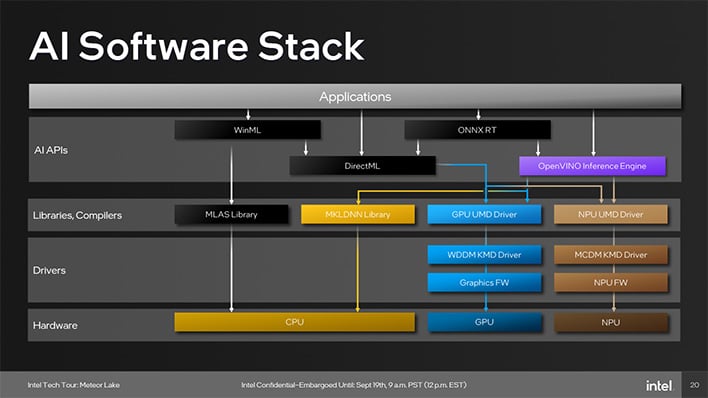

OpenVINO And The AI Software Stack

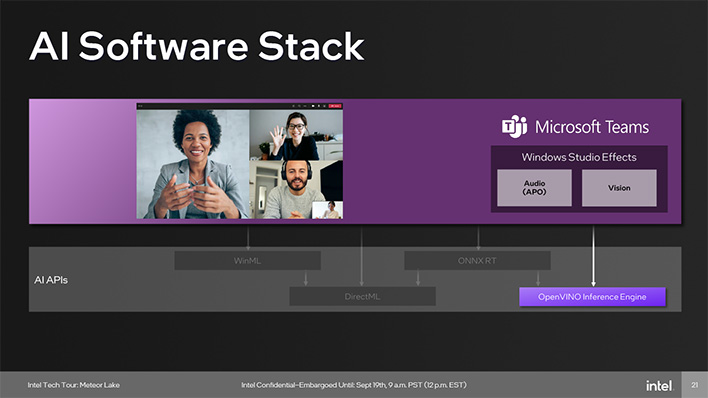

The AI stack in Meteor Lake supports a variety of APIs that include WinML, DirectML, ONNX RT, and Intel’s own OpenVINO. These somewhat-connected APIs interface with different libraries that can leverage different IP to run. For example, WinML can use the MLAS Library to run on the CPU, or it can chain through DirectML to leverage the GPU or NPU.

Each application may take a different path. For example, Microsoft Teams features Windows Studio Effects. This applies audio and vision processing that’s accelerated by the NPU. To make that happen, it uses the OpenVINO Inference Engine stack.

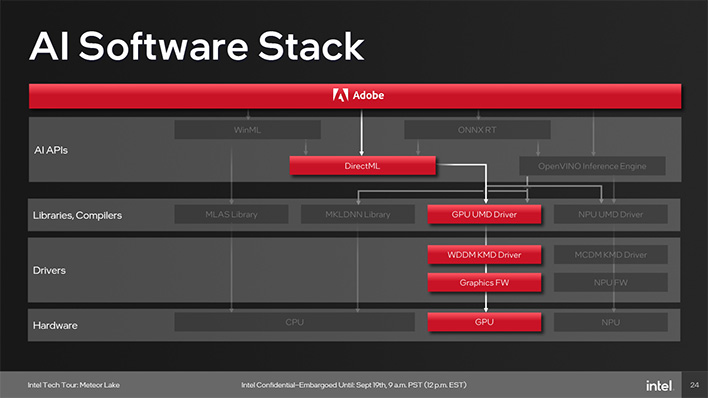

Meanwhile, Adobe Creative Cloud leverages DirectML for its features which ultimately run on the GPU.
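The paths described in this section can be pictured as a small directed graph from API to library to hardware. The sketch below encodes only the links the article names; the real stack has more paths than this (ONNX RT, for instance, is listed but its routing is not described here, so it is left out). The `STACK_EDGES` table and the `paths_to_hardware` helper are illustrative names, not part of any Intel or Microsoft API.

```python
# Edges taken only from the article's description of the stack.
STACK_EDGES = {
    "WinML": ["MLAS", "DirectML"],  # WinML can use MLAS or chain through DirectML
    "MLAS": ["CPU"],                # MLAS runs on the CPU
    "DirectML": ["GPU", "NPU"],     # DirectML can reach the GPU or the NPU
    "OpenVINO": ["NPU"],            # path used by Windows Studio Effects
}

HARDWARE = {"CPU", "GPU", "NPU"}


def paths_to_hardware(start: str) -> list[list[str]]:
    """Return every route from an API or library down to a hardware block."""
    paths: list[list[str]] = []

    def walk(node: str, trail: list[str]) -> None:
        trail = trail + [node]
        if node in HARDWARE:
            paths.append(trail)
            return
        for nxt in STACK_EDGES.get(node, []):
            walk(nxt, trail)

    walk(start, [])
    return paths


if __name__ == "__main__":
    for api in ("WinML", "DirectML", "OpenVINO"):
        for path in paths_to_hardware(api):
            print(" -> ".join(path))
```

Read this way, the Adobe Creative Cloud example corresponds to the `DirectML -> GPU` route, and the Teams example to `OpenVINO -> NPU`.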

Accelerating AI In Hardware

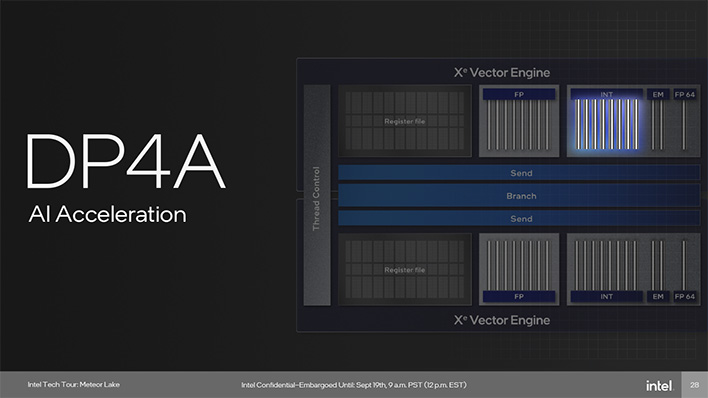

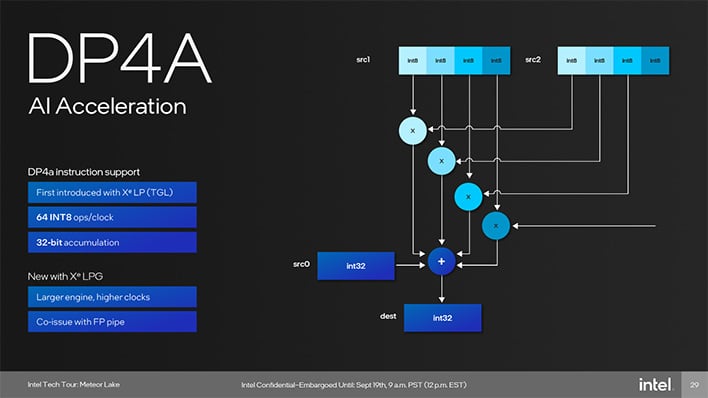

AI acceleration requires support for certain instructions, and we’re going to look at how the GPU and NPU are implemented.

The GPU predominantly runs DP4A instructions with the INT8 data type, first introduced with Xe-LP in Tiger Lake. The calculations are performed by splitting a single 32-bit integer into four INT8 lanes, then calculating at a rate of 64 INT8 operations per clock. Xe-LPG brings a larger engine and higher clocks, plus the ability to co-issue instructions with the FP pipe.
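To make the DP4A idea concrete, here is a minimal software emulation of a four-lane INT8 dot product with a 32-bit accumulator. It is a sketch of the arithmetic only, assuming signed lanes and wrap-around accumulation; actual hardware variants may also offer unsigned or mixed-sign forms, and the helper names here are made up for illustration.

```python
def pack_int8_lanes(lanes: list[int]) -> int:
    """Pack four signed 8-bit values into one 32-bit word (lane 0 is the low byte)."""
    if len(lanes) != 4:
        raise ValueError("expected exactly four lanes")
    word = 0
    for i, value in enumerate(lanes):
        if not -128 <= value <= 127:
            raise ValueError("lane value out of INT8 range")
        word |= (value & 0xFF) << (8 * i)
    return word


def unpack_int8_lanes(word: int) -> list[int]:
    """Split a 32-bit word into four signed 8-bit lanes (low byte first)."""
    lanes = []
    for i in range(4):
        byte = (word >> (8 * i)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dp4a(a: int, b: int, acc: int) -> int:
    """Dot product of the four INT8 lanes of a and b, added to a 32-bit accumulator."""
    total = acc + sum(x * y for x, y in zip(unpack_int8_lanes(a), unpack_int8_lanes(b)))
    # Wrap to a signed 32-bit result (an assumption of this sketch).
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total >= (1 << 31) else total


if __name__ == "__main__":
    a = pack_int8_lanes([1, -2, 3, 4])
    b = pack_int8_lanes([5, 6, -7, 8])
    # 1*5 + (-2)*6 + 3*(-7) + 4*8 = 4, plus the accumulator of 10
    print(dp4a(a, b, 10))  # 14
```

One instruction therefore performs four multiplies and four adds, which is why packing INT8 values into 32-bit registers raises throughput over doing the same math at full width.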

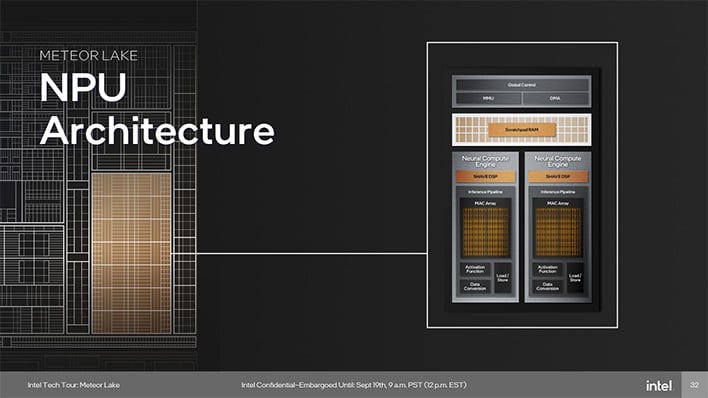

The NPU’s architecture is purpose-built for AI. It is designed with two Neural Compute Engines containing an Inference Pipeline and Programmable SHAVE DSP.

The Streaming Hybrid Architecture Vector Engine (SHAVE) digital signal processor (DSP) is AI optimized and can be pipelined with the Inference Pipeline and DMA engine for high-performance parallel heterogeneous compute. It can handle data types from INT4 through FP32.

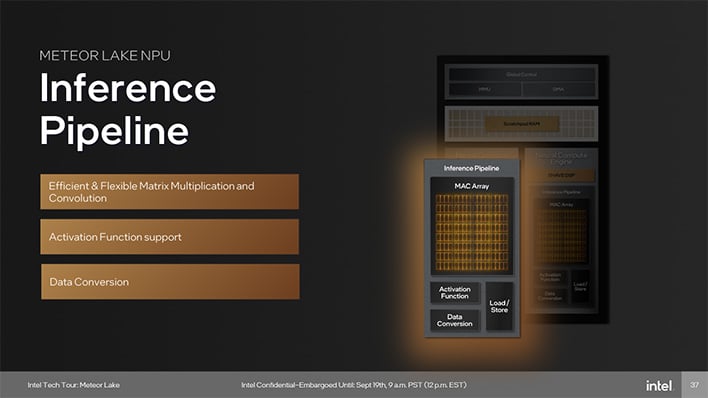

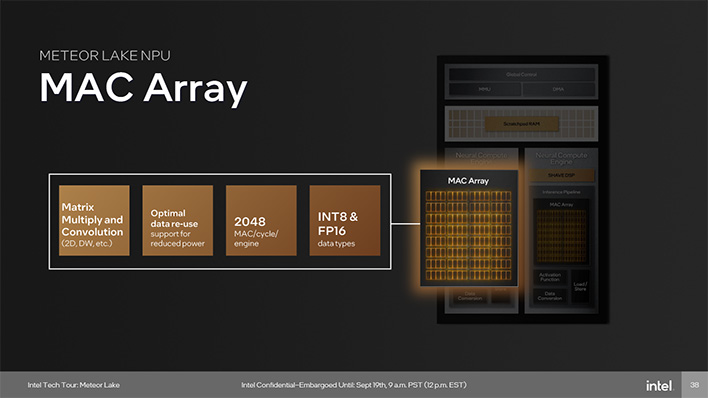

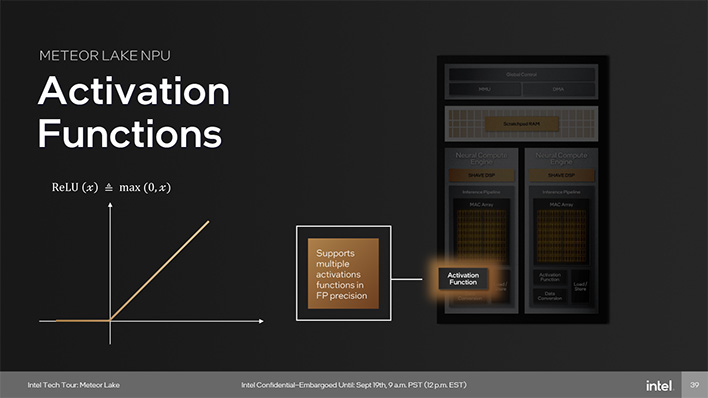

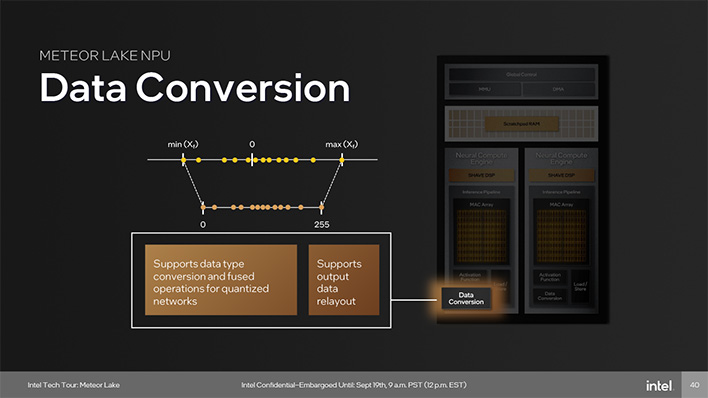

The Inference Pipeline consists of a MAC (multiply-accumulate) Array, an Activation Function block, and a Data Conversion block. The MAC Array handles matrix multiplication and convolution, which covers all operations that reduce to matrix multiplications.

The MAC array features 2048 MAC/cycle per engine with INT8 and FP16 data type support.
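The per-engine figure can be turned into a rough peak-throughput estimate. The sketch below assumes the common convention of counting each MAC as two operations (one multiply, one add). The article does not state an NPU clock, so `clock_hz` is left as a parameter and the 1 GHz used in the example is purely a placeholder, not an Intel specification.

```python
NUM_ENGINES = 2                    # two Neural Compute Engines (from the article)
MACS_PER_CYCLE_PER_ENGINE = 2048   # MAC array width per engine (from the article)
OPS_PER_MAC = 2                    # convention: one multiply plus one add


def peak_macs_per_cycle() -> int:
    """Total multiply-accumulates the NPU could issue per clock at peak."""
    return NUM_ENGINES * MACS_PER_CYCLE_PER_ENGINE


def peak_tops(clock_hz: float) -> float:
    """Theoretical peak in tera-operations per second for a given clock."""
    return peak_macs_per_cycle() * OPS_PER_MAC * clock_hz / 1e12


if __name__ == "__main__":
    print(peak_macs_per_cycle())       # 4096
    placeholder_clock_hz = 1.0e9       # hypothetical clock, for illustration only
    print(f"{peak_tops(placeholder_clock_hz):.3f} TOPS at the placeholder clock")
```

Peak numbers like this assume the array is fully fed on every cycle, which is why the data-movement design around it matters as much as the array width.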

The Activation Function is applied after weights and biases, and calculates some intermediate values before the Data Conversion block quantizes them to be written back to the Scratchpad SRAM.

Most importantly, the Inference Pipeline reduces data movement with its fixed function operations, which helps increase efficiency.
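The three stages of the Inference Pipeline can be sketched in a few lines of plain Python. This is an illustration of the data flow only, under several assumptions that are not from the article: ReLU stands in for the activation function, a single scale factor stands in for the quantization parameters, and the function names are invented.

```python
def mac_array(inputs: list[int], weights: list[list[int]], bias: list[int]) -> list[int]:
    """Multiply-accumulate stage: inputs (K) times weights (K x N), plus bias (N).

    Accumulators are wider than the INT8 operands, as in a typical MAC design.
    """
    acc = list(bias)
    for k, x in enumerate(inputs):
        for n in range(len(acc)):
            acc[n] += x * weights[k][n]
    return acc


def activation(acc: list[int]) -> list[int]:
    """Activation stage; ReLU is an assumed stand-in for the real function."""
    return [max(0, a) for a in acc]


def data_conversion(values: list[int], scale: float) -> list[int]:
    """Quantize back to INT8: scale, round, and clamp to [-128, 127].

    Python's round() uses round-half-to-even; hardware rounding may differ.
    """
    return [max(-128, min(127, round(v * scale))) for v in values]


def inference_pipeline(inputs, weights, bias, scale):
    """Chain the stages so intermediate values never leave the pipeline."""
    return data_conversion(activation(mac_array(inputs, weights, bias)), scale)


if __name__ == "__main__":
    inputs = [10, -3, 7]
    weights = [[2, -1], [4, 5], [-6, 3]]
    bias = [100, -20]
    # Accumulators: [-34 + 100, -4 - 20] = [66, -24]; ReLU: [66, 0]; x0.5: [33, 0]
    print(inference_pipeline(inputs, weights, bias, scale=0.5))  # [33, 0]
```

Because each stage hands its output straight to the next, only the final INT8 result would need to be written back, which is the data-movement saving the text refers to.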

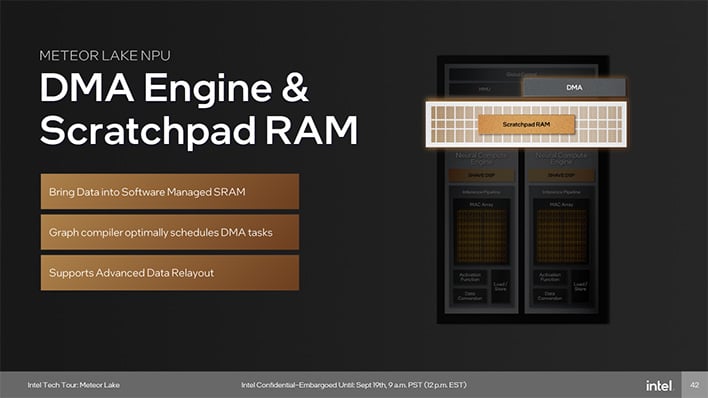

The DMA Engine coordinates the flow of data through the NPU. It optimizes for efficiency while extracting as much performance as it can. It brings data into the Scratchpad SRAM and uses a graph compiler to schedule tasks.

Wrapping Up Meteor Lake

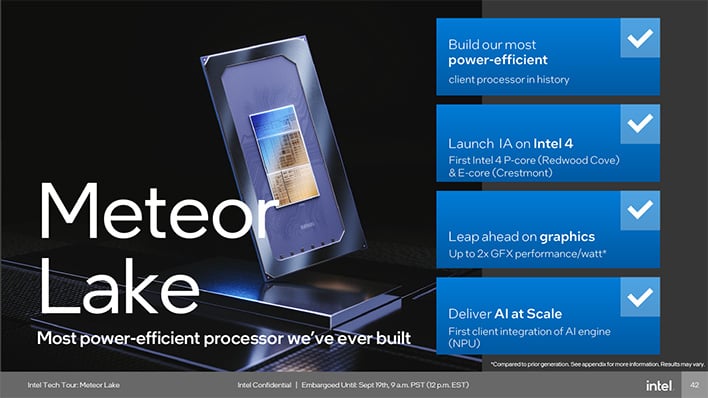

The broadest takeaway we can offer is that Meteor Lake is Intel’s first tiled consumer CPU, and we expect it to move the efficiency needle in a big way. Intel now has three tiers of CPU cores (the P-Cores and E-Cores on the Compute Tile, plus the LP E-Cores on the SOC Tile), which mostly extend the bottom of the performance per watt curve to use less power than ever. The Xe-LPG graphics architecture complements this push with 2x better performance per watt, and the NPU can enable sustained AI experiences with up to 8x greater efficiency than the CPU alone. This is all knit together using Intel’s Foveros advanced packaging technology. Foveros allows Intel to use different process technologies for the different tiles, optimizing each to the given IP.

We hope this has provided a solid foundation to understand what makes Meteor Lake so different from prior Intel processors, and the potential it holds for the future of client computing. While we don’t have any specific SKU information to provide just yet, we can expect mobile device designs sporting these processors to begin making their way onto the market soon.