AMD EPYC 7003 Series Unveiled: Big Iron Zen 3 Takes Flight

AMD EPYC 7003 Series Server Processors: Zen 3-Based Milan Invades The Data Center

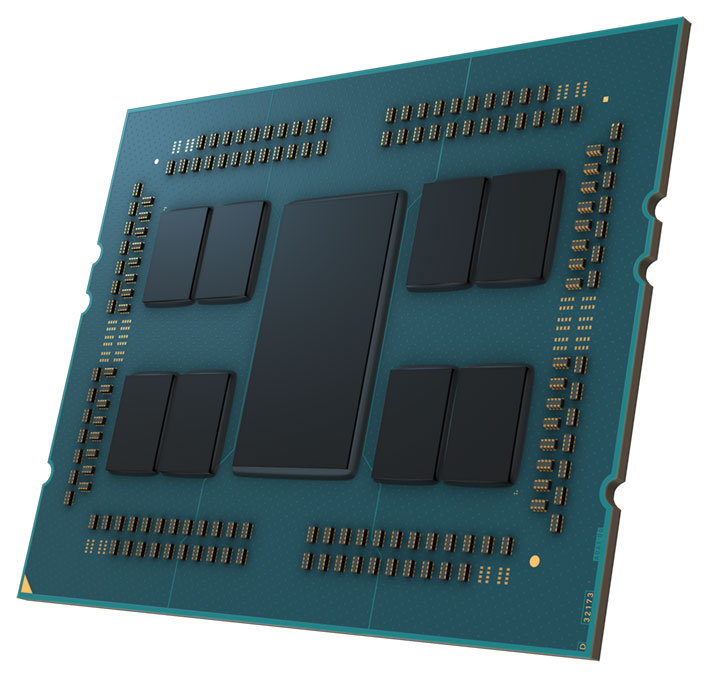

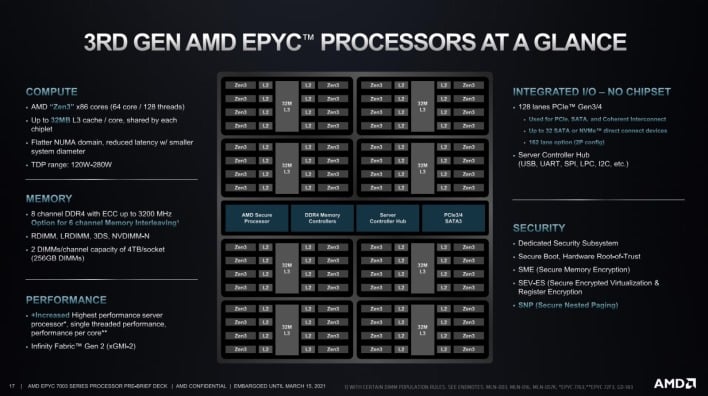

The biggest change with the EPYC 7003 series is the microarchitecture. Like the current AMD Ryzen 5000 series desktop processors, the new EPYC 7003 series leverages the high-performance Zen 3 microarchitecture. Unlike the desktop parts, however, EPYC 7003 server processors use much larger packaging and feature up to nine chiplets (up to eight 7nm CPU dies and a 12nm IO die), with up to 64 physical cores (128 threads). Core counts and density do not increase with this generation, but performance will be significantly better, thanks to the updated Zen 3 microarchitecture.

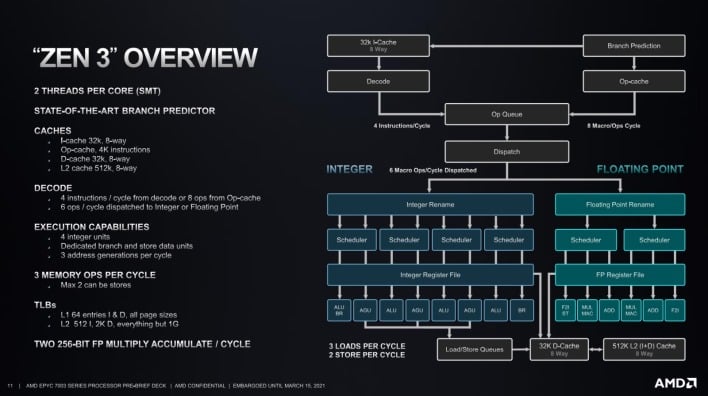

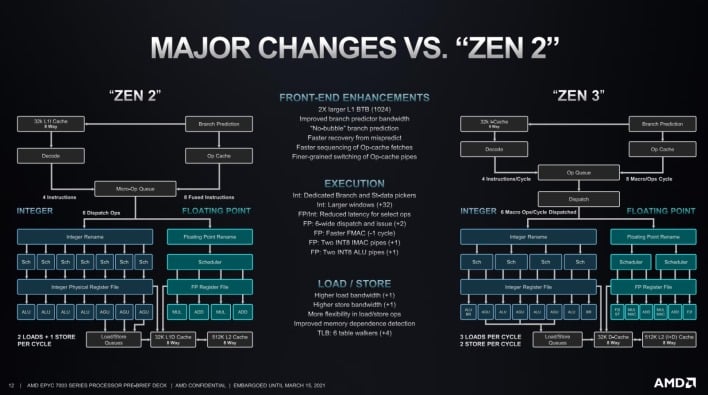

Zen 3 introduced many architectural changes over its predecessor. In fact, AMD tweaked virtually every part of Zen 3, from the front end and execution units to the load/store capabilities and the overall topology and chiplet configuration, which in turn affects the cache configurations of the various processors in the new EPYC 7003 series line-up.

AMD's Zen 3 Architectural Advancements Come To The Data Center

Versus Zen 2, Zen 3 has a larger L1 branch target buffer and improved bandwidth through multiple parts of the pipeline. The front end reacts faster to mispredictions, latency has been reduced for some operations, and there is additional load/store flexibility: where Zen 2 could handle two loads and one store per cycle, Zen 3 can handle three loads and two stores.

With all of these architectural enhancements in place, AMD claims an average 19% increase in IPC with Zen 3, which is a major gen-over-gen uplift. Couple that IPC uplift with strong multi-core scaling and the new unified L3 cache configuration, and Zen 3's performance is greatly improved across a wide variety of workloads versus its predecessors.
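To a first approximation, single-thread performance scales with instructions per clock (IPC) multiplied by clock speed, so an IPC gain only translates fully into speed if clocks hold steady. The sketch below applies AMD's claimed 19% average IPC uplift under that simple model; the clock speeds are hypothetical placeholders, not figures for any EPYC SKU, and real workloads rarely scale this cleanly.

```python
def relative_performance(ipc_gain: float, old_clock_ghz: float, new_clock_ghz: float) -> float:
    """First-order model: performance ~ IPC x frequency.

    ipc_gain is the fractional IPC improvement (0.19 for AMD's claimed 19%).
    Returns the new part's performance as a multiple of the old part's.
    """
    if old_clock_ghz <= 0 or new_clock_ghz <= 0:
        raise ValueError("clock speeds must be positive")
    return (1.0 + ipc_gain) * (new_clock_ghz / old_clock_ghz)


# Hypothetical clocks, chosen only to illustrate the trade-off.
same_clock = relative_performance(0.19, old_clock_ghz=3.0, new_clock_ghz=3.0)
lower_clock = relative_performance(0.19, old_clock_ghz=3.0, new_clock_ghz=2.8)
print(f"same clock: {same_clock:.3f}x, 200 MHz lower: {lower_clock:.3f}x")
```

Even with a modest clock deficit, a 19% IPC gain leaves a net improvement under this model, which is why IPC uplifts matter so much for server parts that run at conservative clocks.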

On the front end, Zen 3 has a faster and more accurate branch predictor, and it can switch between the op cache and the instruction cache more quickly as well. Should a misprediction occur, the enhanced front end also recovers faster than Zen 2's.

Fetch/decode in Zen 3 was designed to perform better with “branchy” or large-footprint code, according to AMD. The architecture still uses a TAGE branch prediction unit, but the branch target buffers have been resized (1,024 entries in L1 and 6.5K entries in L2), and there is a larger Indirect Target Array as well. Along with optimizations to the L1 cache, these changes result in improved prefetching and better utilization of resources.
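A branch target buffer (BTB) remembers where previously seen branches jumped, so the front end can redirect fetch before the branch is even decoded; a larger BTB means fewer branches evict one another in big, branchy code. The toy model below is a minimal sketch of that idea, assuming a direct-mapped table indexed by PC bits. It is not AMD's BTB organization, and it does not model TAGE, which predicts branch direction rather than target.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class BTBEntry:
    tag: int     # upper PC bits, used to detect aliasing between branches
    target: int  # predicted branch target address


class BranchTargetBuffer:
    """Toy direct-mapped BTB (assumptions: 4-byte-aligned PCs, overwrite on conflict)."""

    def __init__(self, entries: int = 1024):
        if entries <= 0 or entries & (entries - 1):
            raise ValueError("entries must be a power of two")
        self.entries = entries
        self.index_bits = entries.bit_length() - 1
        self.table: list[Optional[BTBEntry]] = [None] * entries

    def _split(self, pc: int) -> tuple[int, int]:
        word = pc >> 2  # drop the byte-offset bits
        return word & (self.entries - 1), word >> self.index_bits

    def predict(self, pc: int) -> Optional[int]:
        index, tag = self._split(pc)
        entry = self.table[index]
        if entry is not None and entry.tag == tag:
            return entry.target
        return None  # miss: fetch continues sequentially until the branch resolves

    def update(self, pc: int, target: int) -> None:
        index, tag = self._split(pc)
        self.table[index] = BTBEntry(tag, target)  # evicts any aliasing branch


btb = BranchTargetBuffer(entries=1024)
btb.update(0x1000, 0x2000)
print(hex(btb.predict(0x1000)))
```

In this model, two branches whose addresses differ by exactly `entries * 4` bytes map to the same slot and keep evicting each other; growing the table is one way to reduce such conflicts, which is the intuition behind enlarging the BTBs for large-footprint code.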

The integer and floating-point execution engines in Zen 3 have also been improved. Both units are wider, with larger schedulers and larger execution windows. The architecture can also perform faster, 4-cycle fused multiply-accumulate (FMAC) operations, and new dedicated branch and store-data (st-data) pickers have been added to the integer unit.

Zen 3: Less Is More, When It Comes To Latency

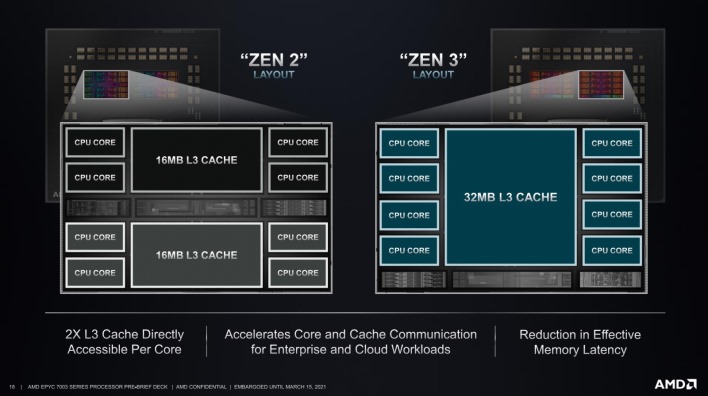

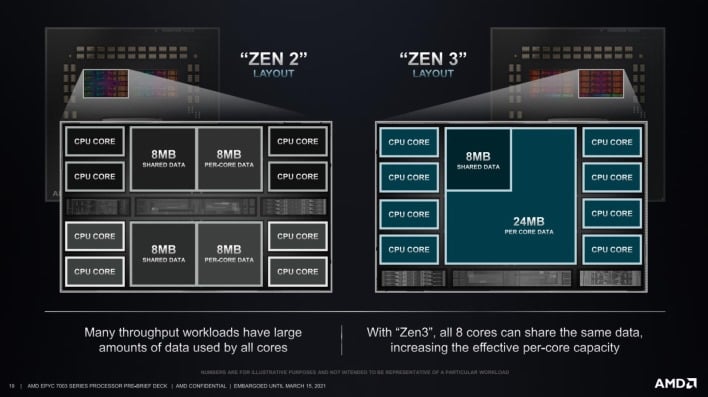

In terms of load/store capabilities, Zen 3 has a larger store queue (64 entries, up from 48) and four additional TLB walkers, along with fast copying of short strings, improved prefetching across page boundaries, and better prediction. All told, the load/store enhancements offer higher bandwidth, lower latency, and more flexibility.

All of these changes in Zen 3 are wrapped up in a new die and CCX configuration. Whereas a Zen 2 die consisted of eight cores split into two 4-core CCXs, each with 16MB of L3 cache (32MB total), Zen 3 unifies the die into a single 8-core complex with one shared 32MB pool of L3 accessible by all cores. This new configuration not only reduces effective core-to-core latency and the need for the CPU cores to communicate across the IO die, but also effectively doubles the amount of L3 cache accessible to each core.
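The cache arithmetic behind the CCX change is easy to check: total L3 per die stays at 32MB, but the slice any one core can use doubles because the split disappears. The sketch below encodes the two layouts described above; the `CCXLayout` type is a hypothetical helper for illustration, and the eight-die package figure assumes a fully populated 64-core part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CCXLayout:
    name: str
    ccx_per_die: int    # core complexes on one 8-core CCD
    cores_per_ccx: int
    l3_per_ccx_mb: int  # L3 shared by all cores within one CCX

    def l3_per_die_mb(self) -> int:
        return self.ccx_per_die * self.l3_per_ccx_mb

    def l3_reachable_per_core_mb(self) -> int:
        # A core's usable L3 capacity is the pool of its own CCX.
        return self.l3_per_ccx_mb


ZEN2 = CCXLayout("Zen 2", ccx_per_die=2, cores_per_ccx=4, l3_per_ccx_mb=16)
ZEN3 = CCXLayout("Zen 3", ccx_per_die=1, cores_per_ccx=8, l3_per_ccx_mb=32)

for layout in (ZEN2, ZEN3):
    print(f"{layout.name}: {layout.l3_per_die_mb()} MB per die, "
          f"{layout.l3_reachable_per_core_mb()} MB reachable by each core")

# Assumption: eight CCDs, as on the 64-core EPYC 7003 parts.
print("Package L3 with 8 CCDs:", 8 * ZEN3.l3_per_die_mb(), "MB")
```

The last line lines up with the 256MB maximum L3 quoted for the EPYC 7003 family.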

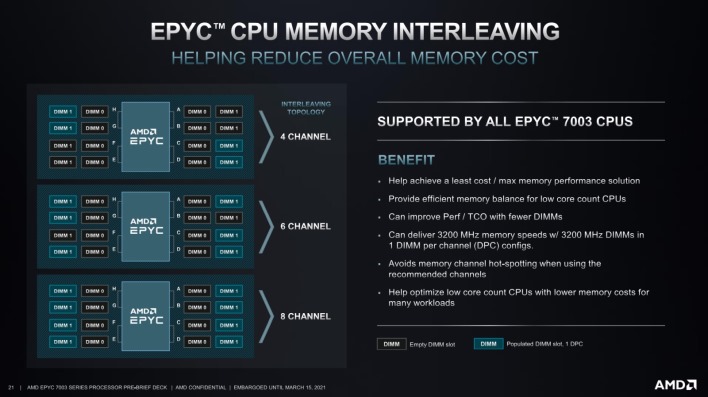

In addition to the updates that come by way of the microarchitecture, AMD has also tweaked the IO die in the EPYC 7003 series. The updated IO die supports nested paging and memory interleaving, and can operate in 4-channel, 6-channel, or 8-channel configurations. AMD made this move to let customers optimize costs and better balance memory bandwidth on some of the lower-core-count CPUs. All EPYC 7003 processors support memory interleaving, though. In conversations with AMD, the company noted that the updated IO die is effectively the same size as the one employed in Rome, and its transistor count is within a couple of percent of Rome's.
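The channel options trade peak memory bandwidth for platform cost. The sketch below computes theoretical peak bandwidth for each configuration, assuming DDR4-3200 on standard 64-bit channels (the memory speed is an assumption, not stated above). The 16-core pairing used for the per-core figures is hypothetical, and sustained bandwidth in practice is noticeably lower than these peaks.

```python
def peak_bandwidth_gbs(channels: int, transfer_rate_mts: int = 3200,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal units).

    channels x (million transfers per second) x (bytes per transfer).
    Assumes DDR4-3200 and 64-bit channels unless told otherwise.
    """
    if channels <= 0:
        raise ValueError("channels must be positive")
    bytes_per_transfer = bus_width_bits // 8
    return channels * transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Hypothetical 16-core part, to show how bandwidth per core changes.
CORES = 16
for ch in (4, 6, 8):
    total = peak_bandwidth_gbs(ch)
    print(f"{ch} channels: {total:.1f} GB/s peak, {total / CORES:.1f} GB/s per core")
```

Under these assumptions, dropping from eight channels to six or four still leaves a lower-core-count part with a reasonable amount of bandwidth per core, which is the balance AMD describes.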

Like previous-gen EPYC processors, the new 7003 series features AMD’s integrated Secure Processor, which provides cryptographic functionality and also enables features like hardware-validated boot. This uses the Secure Processor to authenticate the BIOS and boot loader, and it won’t load the OS or hypervisor if any issues are uncovered.
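Validated boot is a chain of trust: each stage is checked before control passes to it, and the chain halts at the first failure. The sketch below shows only that control flow. As a simplification, it compares SHA-256 digests against expected values; real implementations typically verify digital signatures against a key anchored in hardware, and nothing here reflects how AMD's Secure Processor is actually implemented. The stage names and image contents are hypothetical.

```python
import hashlib
import hmac


class BootHalted(Exception):
    """Raised when a boot stage fails verification; later stages never load."""


def verify_stage(name: str, image: bytes, expected_sha256: str) -> None:
    digest = hashlib.sha256(image).hexdigest()
    # Constant-time comparison avoids leaking how much of the digest matched.
    if not hmac.compare_digest(digest, expected_sha256):
        raise BootHalted(f"{name} failed verification")


def boot(stages: list[tuple[str, bytes, str]]) -> list[str]:
    """Verify each (name, image, expected digest) in order; return the loaded stages."""
    loaded = []
    for name, image, expected in stages:
        verify_stage(name, image, expected)  # any mismatch stops the whole chain
        loaded.append(name)
    return loaded


# Hypothetical images; expected digests would normally be provisioned ahead of time.
images = [("BIOS", b"bios image"), ("boot loader", b"loader image"), ("OS", b"kernel image")]
stages = [(name, img, hashlib.sha256(img).hexdigest()) for name, img in images]
print(boot(stages))
```

Swapping in a tampered boot loader image while keeping the original expected digest raises `BootHalted` before the OS stage is reached, mirroring the "won't load the OS" behavior described above.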

The security-related features in the EPYC 7003 series receive a couple of other updates as well. In addition to the features supported by Zen 1 and Zen 2, Zen 3-based EPYC 7003 series processors gain support for SEV-SNP (Secure Nested Paging), which provides memory integrity protection, and for Shadow Stack, which protects against control-flow attacks.
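A shadow stack defends against return-address overwrites (the basis of return-oriented programming) by keeping a second, protected copy of every return address and checking the two on each return. The toy model below captures that check in broad strokes; the class and exception names are hypothetical, and the hardware details (how shadow-stack pages are protected from ordinary writes, and what fault is raised) are not modeled.

```python
class ControlFlowViolation(Exception):
    """Raised when a return address does not match the shadow copy."""


class ShadowStackCPU:
    """Toy call/return model with a shadow stack.

    `stack` stands in for ordinary, attacker-writable stack memory;
    `shadow` is assumed to be unreachable by normal stores.
    """

    def __init__(self) -> None:
        self.stack: list[int] = []
        self.shadow: list[int] = []

    def call(self, return_address: int) -> None:
        # A call pushes the return address to both stacks.
        self.stack.append(return_address)
        self.shadow.append(return_address)

    def ret(self) -> int:
        # A return pops both and refuses to continue on a mismatch.
        addr = self.stack.pop()
        expected = self.shadow.pop()
        if addr != expected:
            raise ControlFlowViolation(f"return to {addr:#x}, expected {expected:#x}")
        return addr


cpu = ShadowStackCPU()
cpu.call(0x401000)
cpu.stack[-1] = 0xDEADBEEF  # simulated stack-smashing overwrite
try:
    cpu.ret()
except ControlFlowViolation as err:
    print("blocked:", err)
```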

EPYC 7003 - Expected Performance Levels

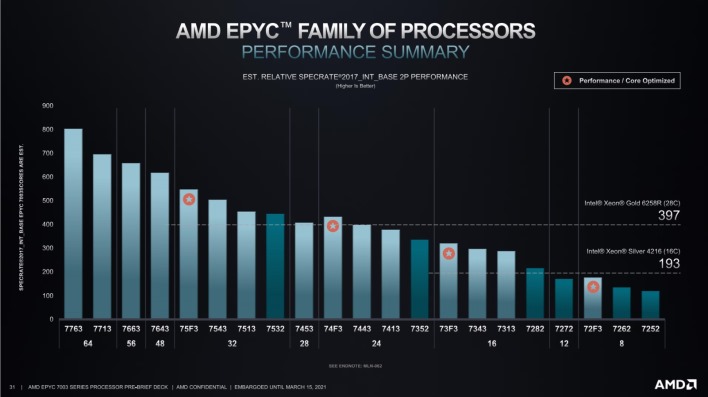

AMD provided a wealth of performance data, showing how the EPYC 7003 processors perform versus other models in the stack and versus competitive Xeon solutions from Intel.

Relative performance in SPECrate 2017 shows the EPYC 7252 8C/16T processor at the entry point and the flagship EPYC 7763 64C/128T processor offering roughly 8x the 7252’s performance. Also note that the dotted lines in the slide above represent the performance of the Xeon Gold 6258R (28C/56T) and Xeon Silver 4216 (16C/32T); AMD’s mid-range and high-end SKUs reportedly offer significantly better performance.
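One quick way to read the top-to-bottom spread is scaling efficiency: the measured speedup divided by the ratio of core counts. The sketch below applies it to the roughly 8x figure quoted for the 64-core EPYC 7763 versus the 8-core EPYC 7252. Because that figure is approximate and read off AMD's chart, treat the result as a rough indicator only.

```python
def scaling_efficiency(speedup: float, base_cores: int, scaled_cores: int) -> float:
    """Fraction of ideal (linear) scaling achieved; 1.0 means perfectly linear."""
    if base_cores <= 0 or scaled_cores <= 0:
        raise ValueError("core counts must be positive")
    ideal_speedup = scaled_cores / base_cores
    return speedup / ideal_speedup


# ~8x is approximate: EPYC 7763 (64 cores) vs. EPYC 7252 (8 cores).
efficiency = scaling_efficiency(8.0, base_cores=8, scaled_cores=64)
print(f"scaling efficiency: {efficiency:.0%}")
```

A value near 1.0 means the 64-core part delivers roughly the same throughput per core as the 8-core part on this benchmark.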

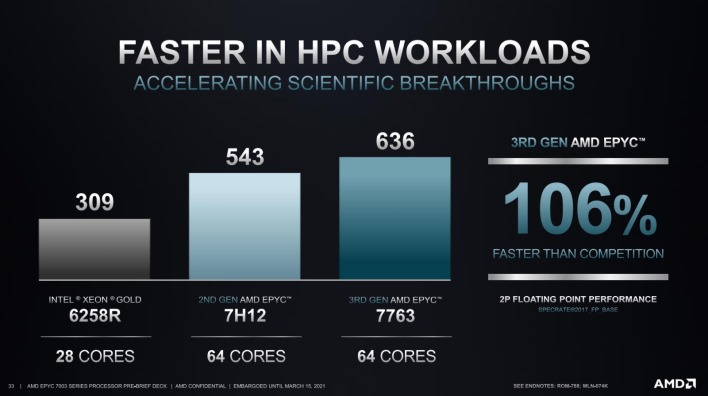

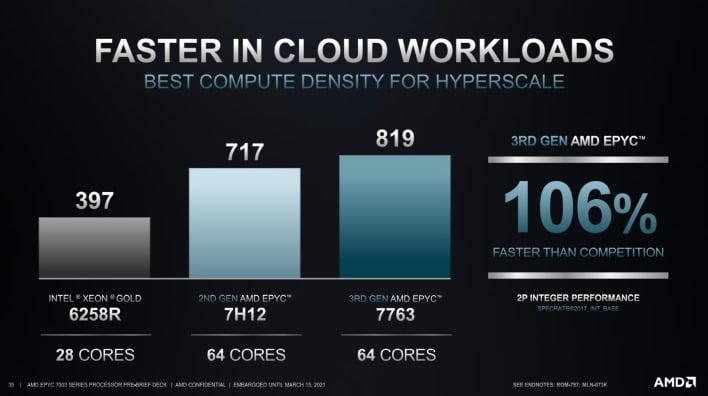

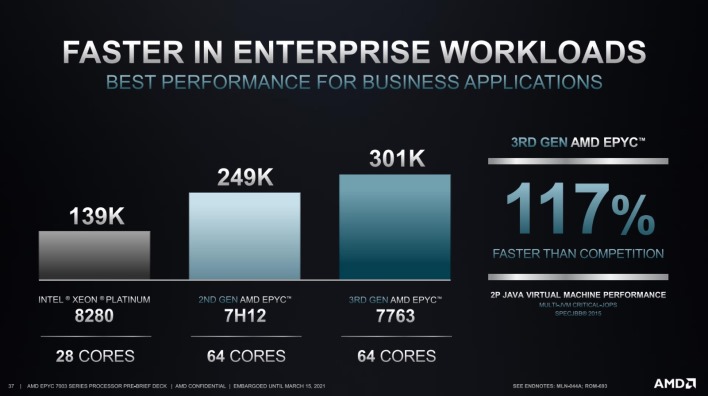

AMD also provided a number of performance examples in HPC, Cloud, and business workloads, with various EPYC 7003 processors versus competing Intel processors...

Keep in mind while perusing the data provided by AMD that Intel doesn’t currently have any Xeon processors that can match AMD in terms of single-socket core density, and as such, EPYC 7003 series CPUs should consistently offer better performance in the multi-threaded workloads shown here.

Meet The AMD EPYC 7003 Family

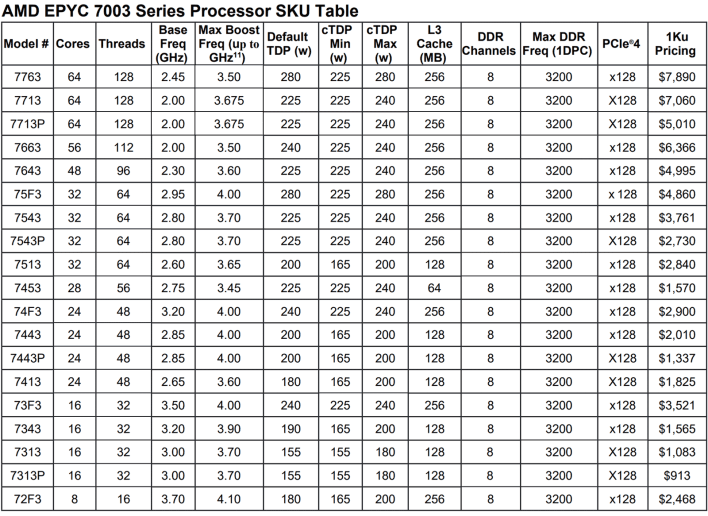

Initially, there are 19 EPYC 7003 processors, with as few as 8 cores (16 threads) and as many as 64 cores (128 threads), and up to 256MB of L3 cache. EPYC 7003 F-series processors (the ones with an F in their model number) are designed with high per-core performance in mind, and feature higher frequencies and large cache-to-core ratios. Conversely, 77xx and 76xx series processors focus on the highest core and thread counts, while 75xx, 74xx, and 73xx series EPYC processors aim to strike a balance between price and performance.

The full breakdown of the initial AMD EPYC 7003 stack, complete with frequencies, cache configurations, TDPs, and pricing, is shown in the chart above. Pricing for these new big iron processors ranges from $913 for the 16-core 7313P up to $7,890 for the powerful EPYC 7763, which AMD is calling “the world’s highest-performing server processor.” Though $7,890 is no small potatoes, AMD appears to be continuing its aggressive pricing strategy with the EPYC 7003 series, relative to Intel’s Xeon Scalable processors.
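The model-number conventions above are regular enough to decode mechanically, and price per core is a handy way to compare the ends of the stack. The helper below is a hypothetical sketch: the F-series and 77xx/76xx versus 75xx/74xx/73xx groupings come from the description above, while reading the last digit as the generation (3 for EPYC 7003) and the "P" suffix as single-socket-only are assumptions not stated in this article.

```python
import re
from dataclasses import dataclass

# 7 <tier digit> <digit or F> <generation digit> [P]
MODEL_RE = re.compile(r"^7(\d)([0-9F])(\d)(P?)$")


@dataclass(frozen=True)
class EpycSku:
    model: str
    segment: str
    generation: int           # assumption: last digit 3 = EPYC 7003
    single_socket_only: bool  # assumption: "P" suffix = 1P-only part


def decode(model: str) -> EpycSku:
    m = MODEL_RE.match(model.upper())
    if m is None:
        raise ValueError(f"not an EPYC 7000-series model number: {model!r}")
    tier, third, gen, p_suffix = m.groups()
    if third == "F":
        segment = "F-series (high per-core performance)"
    elif tier in ("7", "6"):
        segment = "highest core/thread counts"
    elif tier in ("5", "4", "3"):
        segment = "price/performance balance"
    else:
        segment = "other"
    return EpycSku(model.upper(), segment, int(gen), p_suffix == "P")


def price_per_core(price_usd: float, cores: int) -> float:
    return price_usd / cores


for model in ("7763", "73F3", "7313P"):
    print(decode(model))
print(f"7313P: ${price_per_core(913, 16):.2f}/core, 7763: ${price_per_core(7890, 64):.2f}/core")
```

By this measure, the flagship costs a bit more than twice as much per core as the entry 16-core part, which is worth weighing against the per-core clocks and cache each SKU offers.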

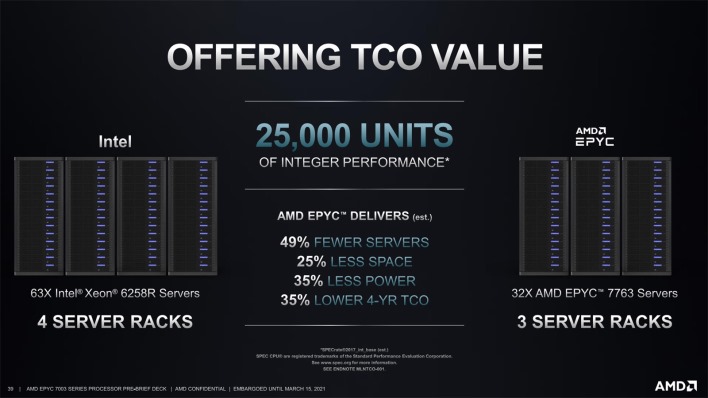

The major takeaway of this launch is that AMD continues to have a significant per-socket density advantage over competitive Xeon solutions. Couple the density advantages with the performance uplift provided by Zen 3, along with the potential power savings offered by the EPYC 7003 series, and AMD is claiming similar integer performance with nearly half the number of servers, which reduces the physical and electrical footprints considerably, while also lowering total cost of ownership (TCO).
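The consolidation claim reduces to simple arithmetic: the number of servers needed is the target throughput divided by per-server throughput, rounded up. The sketch below uses hypothetical, unitless throughput scores (not AMD's or Intel's benchmark data) to show that a server delivering nearly twice the throughput cuts the fleet to roughly half.

```python
import math


def servers_needed(target_throughput: float, per_server_throughput: float) -> int:
    """Smallest whole number of servers whose combined throughput meets the target."""
    if per_server_throughput <= 0:
        raise ValueError("per-server throughput must be positive")
    return math.ceil(target_throughput / per_server_throughput)


# Hypothetical scores for illustration only.
TARGET = 10_000
baseline = servers_needed(TARGET, per_server_throughput=100)
consolidated = servers_needed(TARGET, per_server_throughput=190)
print(f"{baseline} -> {consolidated} servers ({consolidated / baseline:.0%} of the fleet)")
```

Fewer boxes also means fewer power supplies, rack units, and software licenses priced per server, which is where the TCO argument comes from; actual savings depend on workload, pricing, and power draw.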

AMD has been gaining market share in the data center with each successive generation of EPYC processors. As we saw on the desktop, the Zen 3 architecture is a significant step forward in terms of performance and efficiency. With all of the benefits of Zen 3 coming to the EPYC 7003 series, in addition to the more flexible memory options afforded by its updated IO die, all while maintaining socket compatibility, this could prove to be AMD's most successful data center launch yet. Of course, Intel isn't standing still. Ice Lake-based Xeon processors are due to launch in a couple of weeks and are expected to bring much higher per-core performance, higher core density, and additional features, which will change the landscape yet again.

That said, AMD's partners already include AWS, Cisco, Dell Technologies, Google Cloud, HPE, Lenovo, Microsoft Azure, Oracle Cloud Infrastructure, Supermicro, and Tencent Cloud (among others), a who's who of big iron data center platform providers. AMD expects the EPYC processor ecosystem to grow to 400 cloud instances and 100 new OEM platforms by the end of 2021. We'll see how all of this renewed competition shakes out in the market soon enough.