Acer Predator BiFrost Arc A770 OC Graphics Card Review: Intel On-Board

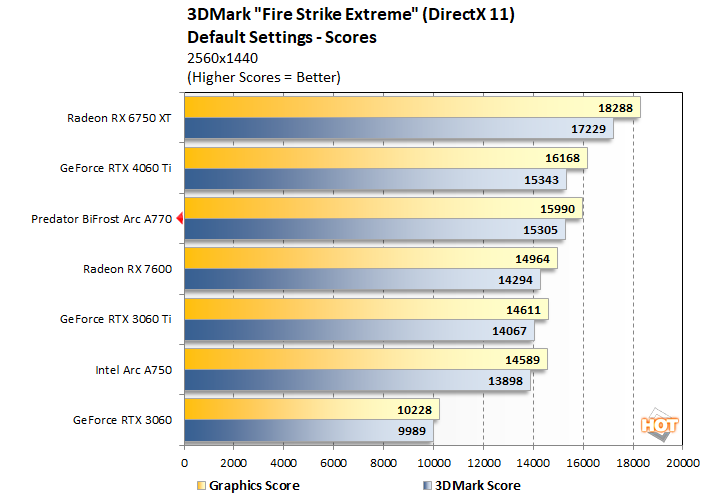

UL 3DMark Fire Strike Extreme DirectX 11 Benchmarks

Interestingly, most of the cards in our arsenal today land in roughly the same ballpark. The GeForce RTX 3060 trails the rest of the pack, but otherwise the most powerful GPU on paper pulls ahead, while the GeForce RTX 4060 Ti and the Predator BiFrost Arc A770 are neck-and-neck in this DirectX 11 benchmark. That's a good and encouraging result for the Arc A770, since Intel struggled with DX11 performance early on.

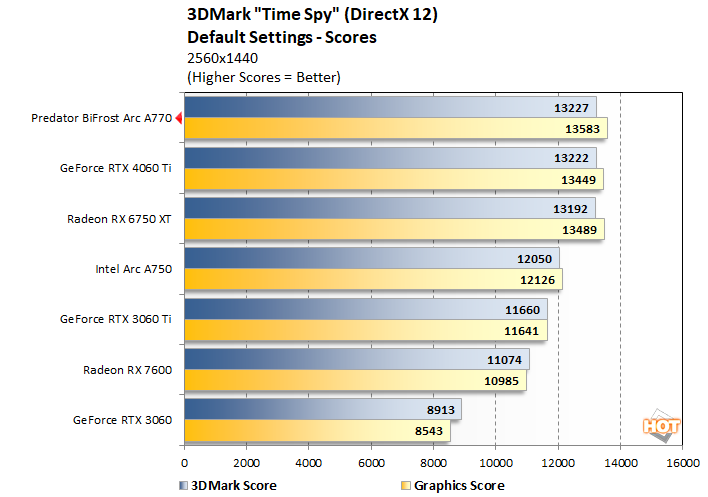

UL 3DMark Time Spy DirectX 12 Benchmarks

Well, how about that. The Acer Predator BiFrost Arc A770 tops the chart in our Time Spy test, if only ever-so-slightly. It offers competitive performance in this benchmark against both NVIDIA's latest Ada Lovelace architecture and the Radeon RX 6750 XT, which really belongs in a higher product tier given its original MSRP. This is good stuff.

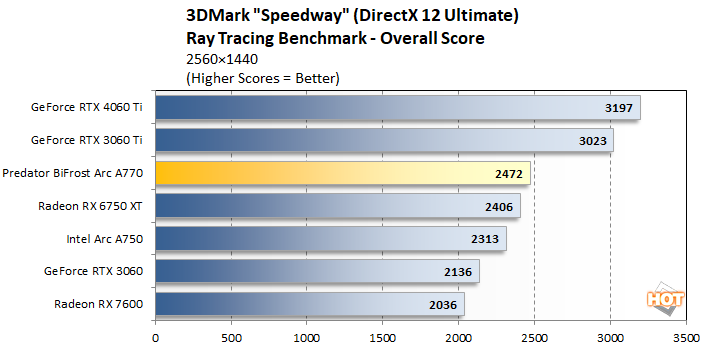

UL 3DMark Speed Way DX12 Ultimate Benchmarks

NVIDIA's RTX 3060 Ti and RTX 4060 Ti leap out ahead of the pack, as expected in a test that leans this heavily on ray tracing. NVIDIA has been a pioneer in that space, and it's no surprise that its cards are the best for this kind of workload. However, if we ignore those two front-runners, the Predator BiFrost Arc A770 takes the lead ahead of AMD's potent Radeon RX 6750 XT. Overall, not a bad showing.

Real-World Game Test Discussion: To Upscale Or Not To Upscale?

Before we get into our real game tests on the next page, we wanted to talk a little about game testing in 2023 and the proliferation of advanced upscaling techniques: NVIDIA's DLSS, AMD's FSR, and Intel's XeSS. NVIDIA wasn't strictly first to market with this idea; console games had been flirting with the concept of upscaling from a lower-resolution image long before the green guys debuted Deep Learning Super Sampling with the GeForce RTX 20 series cards in 2018.

However, at this point NVIDIA is widely acknowledged as a leader in the space. Its DLSS Super Resolution feature offers near-native image quality with a huge jump in performance, because the internal render resolution has far fewer pixels than the final output image. AMD's solution has its advantages (most notably, it can run on anything and doesn't require hardware acceleration), but it is generally considered to offer somewhat inferior image quality compared to NVIDIA's AI-powered solution.
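To put numbers on "far fewer pixels", here is a small sketch that computes the internal render resolution for each preset at a 2560×1440 output. The per-axis ratios are our assumption, based on the commonly cited DLSS 2 presets; XeSS and FSR 2 presets are similar but not always identical. The names `RENDER_RATIOS`, `internal_resolution`, and `pixel_fraction` are purely illustrative. Because the ratio applies to both axes, the rendered pixel count falls with its square.

```python
# Per-axis render ratios below are assumptions: they match the commonly cited
# DLSS 2 presets, while XeSS and FSR 2 use similar but not always identical ratios.
RENDER_RATIOS = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, ratio: float) -> tuple[int, int]:
    """Internal render resolution for a given per-axis render ratio."""
    return round(out_w * ratio), round(out_h * ratio)


def pixel_fraction(ratio: float) -> float:
    """Share of output pixels actually rendered; the ratio applies to both axes."""
    return ratio * ratio


if __name__ == "__main__":
    out_w, out_h = 2560, 1440
    for mode, ratio in RENDER_RATIOS.items():
        w, h = internal_resolution(out_w, out_h, ratio)
        print(f"{mode:>17}: {w}x{h} ({pixel_fraction(ratio):.0%} of output pixels)")
```

Under these assumed ratios, Quality at 2560×1440 renders about 1707×960 (roughly 44% of the output pixels) and Performance renders 1280×720 (25%).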

When Intel first announced the Xe-HPG graphics architecture and the Arc GPUs, well before they actually shipped, the company also teased XeSS, its own AI-powered upscaling solution. XeSS made all of the same promises as DLSS at the time: huge performance gains from rendering games at a lower resolution and then letting AI upscale them. XeSS was slower to penetrate the market, but it has now found its way into over 70 games, including such big names as Call of Duty, Hogwarts Legacy, Forza Horizon 5, F1 2023, and more.

We've tested XeSS pretty extensively, and we've concluded that, at least on Arc GPUs, it is very similar to DLSS at this point. We should also note that XeSS works on other companies' graphics cards, but there it uses a different algorithm that relies on DP4a math instead of the Arc GPUs' own XMX tensor units. To preserve the speed boost, this version isn't as effective at upscaling as the native XMX version on Arc. If you've tried XeSS on your GeForce or Radeon card and came away unimpressed, that's probably why.

Here, we've compared XeSS against DLSS in the Quality and Performance modes, and the image quality is broadly equivalent. XeSS, even with sharpening disabled, still seems to apply a mild sharpening effect and typically gives a cleaner-looking resolve as a result, whereas DLSS is typically a bit soft. However, DLSS seems a little better at preserving certain details, particularly in the lighting in our Hogwarts Legacy comparison shots.

But what about performance? The upscaling pass itself has a cost, whether it's NVIDIA's DLSS, AMD's FSR, or Intel's XeSS. DLSS and XeSS (on Arc) run on dedicated matrix math units within the GPU, so their cost is generally lower, but it is still there. That cost depends primarily on the output resolution, not the input resolution.
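One way to see why the upscaler's cost tracks the output resolution is a toy frame-time model: rendering cost scales with the pixels actually rendered, while the upscaling pass scales with the pixels it produces. Both per-megapixel constants below are invented for illustration, not measured on any card, and the function names are hypothetical.

```python
# Toy frame-cost model. The per-megapixel constants are invented for illustration,
# not measurements: rendering cost scales with the pixels actually rendered, while
# the upscaling pass scales with the pixels it outputs.
RENDER_MS_PER_MPIX = 4.0    # hypothetical
UPSCALE_MS_PER_MPIX = 0.25  # hypothetical


def render_cost_ms(out_w: int, out_h: int, ratio: float) -> float:
    """Cost of rendering the internal (pre-upscale) image."""
    in_mpix = (out_w * ratio) * (out_h * ratio) / 1e6
    return RENDER_MS_PER_MPIX * in_mpix


def upscale_cost_ms(out_w: int, out_h: int) -> float:
    """Cost of the upscaling pass: depends only on the output resolution."""
    return UPSCALE_MS_PER_MPIX * (out_w * out_h) / 1e6


def frame_time_ms(out_w: int, out_h: int, ratio: float) -> float:
    """Total frame time; native rendering (ratio 1.0) skips the upscaler."""
    cost = render_cost_ms(out_w, out_h, ratio)
    if ratio < 1.0:
        cost += upscale_cost_ms(out_w, out_h)
    return cost


if __name__ == "__main__":
    for label, ratio in [("Native", 1.0), ("Quality", 2 / 3), ("Performance", 0.5)]:
        t = frame_time_ms(2560, 1440, ratio)
        print(f"{label:>11}: {t:.2f} ms ({1000 / t:.0f} fps)")
```

In this model, moving from Quality to Performance shrinks only the render term; the upscale term stays fixed because the output is still 2560×1440, so each step down the preset ladder returns a little less than the pixel count alone would suggest.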

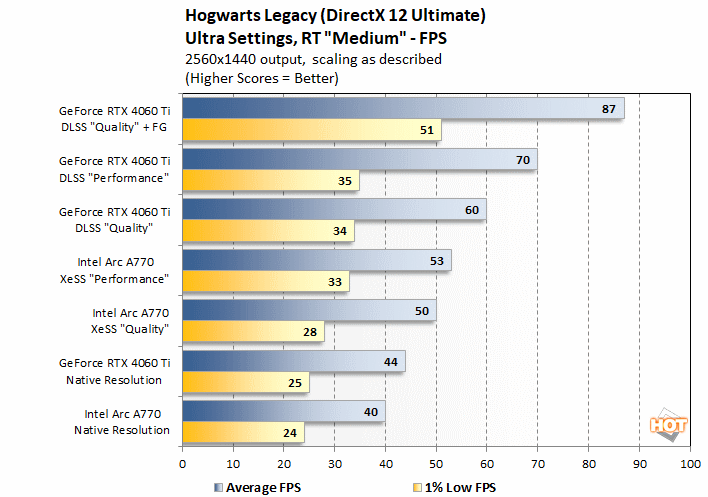

We compared XeSS against DLSS in Hogwarts Legacy using the GeForce RTX 4060 Ti, which is generally a bit faster than the Arc A770, so keep that in mind as you look at the results. The RTX 4060 Ti isn't very far ahead of the Arc A770 when playing Hogwarts Legacy at native resolution, and that's mostly down to its inferior memory bandwidth: it's bottlenecked by its narrow memory bus at 2560×1440. Rendering fewer pixels eases that bandwidth pressure, which is why it gains a lot more performance from DLSS Super Resolution here (ignoring the Frame Generation (FG) result for a moment).
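The bandwidth gap follows from simple arithmetic: peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The figures below are the reference specs as we understand them (Intel's Arc A770 16GB and NVIDIA's RTX 4060 Ti); we're assuming the Acer card matches Intel's reference memory configuration. This is peak theoretical bandwidth only and ignores cache effects.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


# Reference-spec figures as we understand them; partner cards may differ.
CARDS = {
    "GeForce RTX 4060 Ti (128-bit, 18 Gbps GDDR6)": (128, 18.0),
    "Arc A770 16GB (256-bit, 17.5 Gbps GDDR6)": (256, 17.5),
}

if __name__ == "__main__":
    for name, (bus, rate) in CARDS.items():
        print(f"{name}: {peak_bandwidth_gbs(bus, rate):.0f} GB/s")
```

That works out to 288 GB/s for the RTX 4060 Ti versus 560 GB/s for the Arc A770 16GB, nearly a 2× gap.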

The Arc A770 still gets a nice performance bump from XeSS, pushing the game from "a little sluggish" territory all the way up to "smooth". It might look like the jump from Quality to Performance isn't worth it, but the improvement in the 1% low frame rate is surprisingly noticeable, because it keeps our FreeSync monitor within its 30–144 Hz VRR window. As we discussed above, dropping to Performance really doesn't look bad, especially at 2560×1440.
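Here is how a 1% low figure relates to the VRR window. This sketch assumes one common definition of the 1% low, the average frame rate across the slowest 1% of frames (some tools instead convert the 99th-percentile frame time), and checks it against the 30–144 Hz range of our monitor. The helper names and the frame-time trace in the usage example are made up for illustration.

```python
def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Average FPS over the slowest 1% of frames (one common definition)."""
    slowest = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest) // 100)
    avg_ms = sum(slowest[:n]) / n
    return 1000.0 / avg_ms


def in_vrr_window(fps: float, low_hz: float = 30.0, high_hz: float = 144.0) -> bool:
    """True if the frame rate falls inside the monitor's variable refresh range."""
    return low_hz <= fps <= high_hz


if __name__ == "__main__":
    # Hypothetical trace: mostly 10 ms frames (100 fps) with a few 40 ms stutters.
    trace = [10.0] * 990 + [40.0] * 10
    low = one_percent_low_fps(trace)
    print(f"1% low: {low:.1f} fps, inside VRR window: {in_vrr_window(low)}")
```

In that made-up trace, the average frame rate sits comfortably inside the window, but the 1% low of 25 fps falls below 30 Hz, which is exactly the kind of dip a faster upscaling preset can lift back into VRR range.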

Both NVIDIA and AMD now have their own frame generation solutions, although we haven't yet tested AMD's FSR 3 to know whether it's any good. Frame generation raises a game's displayed frame rate by interpolating extra frames between the ones the GPU actually renders. This increases input lag, but in theory the higher frame rate helps to offset that somewhat. It's a worthwhile technique, and a real technology advantage for NVIDIA right now. Intel doesn't have a frame generation solution at the moment, but we would be surprised if there isn't an "XeFG" by the time Battlemage rolls around.
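Why interpolation adds latency can be shown with an idealized pacing model. To show an interpolated frame between rendered frames N and N+1, the GPU must already have N+1; with even pacing, N+1 is then displayed half a base frame later than it otherwise would be. The model below assumes exactly one generated frame per rendered frame and a hypothetical fixed generation cost (default zero). It ignores latency-reduction features and driver queuing, so real measurements will differ.

```python
def frame_generation_model(base_fps: float, gen_cost_ms: float = 0.0) -> tuple[float, float]:
    """Return (displayed_fps, added_latency_ms) under an idealized interpolation model.

    Assumes one interpolated frame per rendered frame and perfectly even pacing;
    gen_cost_ms is a hypothetical fixed cost for producing the interpolated frame.
    """
    base_ms = 1000.0 / base_fps
    displayed_fps = 2.0 * base_fps
    # Rendered frame N+1 is held until the interpolated (N to N+1) frame has been
    # shown; with even pacing, that delays it by half a base frame time.
    added_latency_ms = base_ms / 2.0 + gen_cost_ms
    return displayed_fps, added_latency_ms


if __name__ == "__main__":
    fps, lag = frame_generation_model(50.0)
    print(f"displayed: {fps:.0f} fps, added latency: {lag:.1f} ms")
```

The model makes the trade-off visible: the displayed frame rate doubles, but responsiveness is still governed by the base frame rate plus the added hold-back, so frame generation works best when the base frame rate is already reasonable.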

So with all this data in hand, the question becomes: should you play at native resolution, or use upscaling? Our take: if you have a GeForce or Arc GPU, and the game you're playing supports your vendor's upscaler, then you should probably at least be using the Quality setting. As long as you're not CPU-limited, it will give you significantly improved frame rates with very little loss of visual quality.

This isn't universal; in games with very long draw distances you may want to avoid using upscaling, because rendering at a lower resolution absolutely does reduce fine detail at a distance, even with DLSS or XeSS. Likewise, in games that already run at high frame rates on your system, you may simply prefer the cleaner resolve of native-resolution rendering, which is totally fair.
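Our rule of thumb, including its exceptions, can be summarized as a small decision function. The `Situation` type, its field names, and the returned strings are hypothetical. Since we only make a recommendation for GeForce and Arc owners with DLSS or XeSS available, every other case returns "no blanket recommendation" rather than a guess.

```python
from dataclasses import dataclass

# Vendor-to-upscaler mapping from the text: DLSS on GeForce, XeSS on Arc.
VENDOR_UPSCALER = {"NVIDIA": "DLSS", "Intel": "XeSS"}


@dataclass
class Situation:
    gpu_vendor: str                      # e.g. "NVIDIA", "Intel", "AMD"
    game_supports_vendor_upscaler: bool  # does the game offer DLSS/XeSS for this GPU?
    cpu_limited: bool
    long_draw_distance: bool
    already_high_fps: bool


def recommend(s: Situation) -> str:
    """Encode our rule of thumb for native rendering vs. upscaling."""
    upscaler = VENDOR_UPSCALER.get(s.gpu_vendor)
    if upscaler is None or not s.game_supports_vendor_upscaler:
        return "no blanket recommendation"
    if s.cpu_limited:
        return "little to gain: CPU-limited"
    if s.long_draw_distance:
        return "consider native: upscaling loses distant detail"
    if s.already_high_fps:
        return f"either: native for the cleaner resolve, or {upscaler} Quality"
    return f"{upscaler} at Quality or a faster preset"


if __name__ == "__main__":
    print(recommend(Situation("Intel", True, False, False, False)))
```

The exceptions are checked in order, so a CPU-limited system gets that verdict before draw distance or frame rate is considered.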

Despite all that, we mostly don't use smart upscalers in our testing here, because the various algorithms don't give identical results. The visual difference is small but very real, so benchmarks with these upscalers enabled are not an apples-to-apples comparison. To avoid complications like having to say "well, FSR2 needs [x] mode to match the image quality of DLSS at the [y] preset", we simply focused on native resolutions. That doesn't mean you should do the same, though.