GeForce GTX 1660 Super - FC 5, Final Fantasy, Overclocking And Power

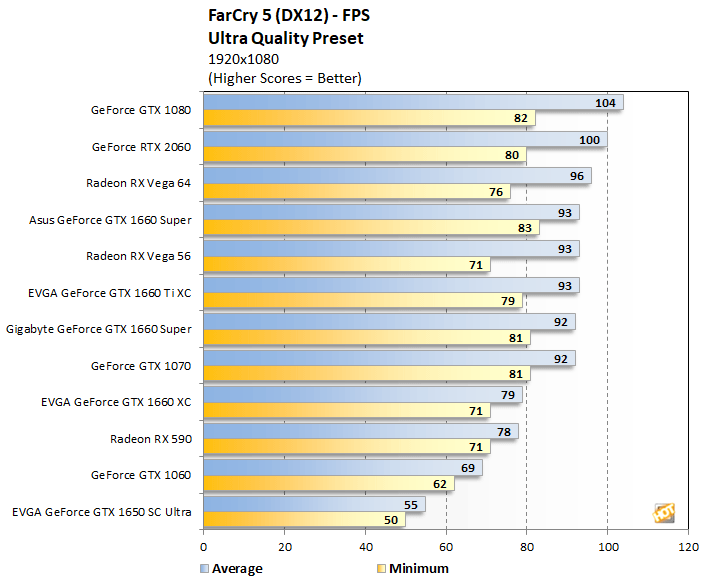

Next up, we’ve got some benchmark scores from FarCry 5, the latest installment in the storied franchise. Like its predecessors, FarCry 5 is a fast-action shooter set in an open-world environment with lush visuals and high graphics fidelity. The game takes place in a fictional county in Montana, where a cult has taken control of the area. We tested all of the graphics cards here at multiple resolutions using Ultra Quality settings to see how they handled this recently released AAA title.

FarCry 5

We saw more of the same in the FarCry 5 benchmark, with the new GeForce GTX 1660 Super cards sandwiching the GeForce GTX 1660 Ti, but the three cards essentially finish on top of each other. The 1660 Supers also finish well out in front of the Radeon RX 590 and nearly catch the Vega 56.

Final Fantasy XV

Overclocking NVIDIA's Turing

We also spent a little time overclocking the Gigabyte GeForce GTX 1660 Super to see what kind of additional performance we could wring from it. Before we get to our results, though, we would like to quickly recap Turing's GPU Boost algorithm behavior and cover some overclocking-related features.

Turing-based GeForce cards like this GTX 1660 Super feature GPU Boost 4.0. As with previous-gen GeForce cards, GPU Boost scales frequencies and voltages upward, power and temperature permitting, based on the GPU's workload at the time. Should a temperature or power limit be reached, however, GPU Boost 4.0 drops only to the previous frequency/voltage step in the boost curve, not all the way to the base frequency, in an attempt to bring power and temperatures down gradually. Whereas GPU Boost 3.0 could drop sharply to the base frequency when constrained, GPU Boost 4.0 is more granular and should allow for higher average frequencies over time.
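To make the difference between the two throttling policies concrete, here is a toy simulation, a minimal sketch under stated assumptions rather than NVIDIA's actual algorithm. The six-step frequency/voltage curve, the rule that the GPU climbs back one step per tick when it has headroom, and the `pattern` of limit hits are all hypothetical; only the "drop to base" versus "drop one step" behavior comes from the description above.

```python
from typing import Callable, List

# Hypothetical V/F curve as (frequency_mhz, voltage_mv) steps, lowest first.
# Illustrative values only; not read from any real card.
CURVE = [(1530, 700), (1650, 750), (1785, 800), (1890, 850), (1965, 900), (2010, 950)]
BASE_INDEX = 0  # step treated as the base clock in this toy model

def boost3_throttle(index: int) -> int:
    """GPU Boost 3.0 as described in the text: a limit drops the GPU to base."""
    return BASE_INDEX  # current step is ignored on purpose

def boost4_throttle(index: int) -> int:
    """GPU Boost 4.0 as described in the text: a limit drops one step on the curve."""
    return max(BASE_INDEX, index - 1)

def simulate(throttle: Callable[[int], int], limited: List[bool]) -> List[int]:
    """Return the clock (MHz) after each tick; limited[t] marks a power/thermal limit hit."""
    top = len(CURVE) - 1
    index = top  # start at the highest boost step
    clocks = []
    for hit in limited:
        if hit:
            index = throttle(index)
        else:
            index = min(top, index + 1)  # assumption: recover one step per tick with headroom
        clocks.append(CURVE[index][0])  # voltage CURVE[index][1] moves with the clock
    return clocks

pattern = [False, True, False, True, True, False]
for name, policy in (("3.0", boost3_throttle), ("4.0", boost4_throttle)):
    clocks = simulate(policy, pattern)
    print(name, clocks, sum(clocks) / len(clocks))
```

With the same sequence of limit hits, the one-step policy keeps the clock near the top of the curve, so its average frequency over the run comes out well above the drop-to-base policy, which is the effect the paragraph above describes.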

As we've mentioned in some of our previous coverage of the Turing architecture, Turing-based GeForce cards have beefier VRMs than their predecessors, which should help with overclocking, though most of the cards are still power limited to prevent damage and ensure longevity. The Gigabyte GeForce GTX 1660 Super card we used here allowed us to increase the power target by 13%, the maximum the slider allows, and to raise the voltage offset by 0.1V.
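The power-target slider is a percentage of the card's default board power limit, so the extra headroom is simple arithmetic. The sketch below assumes a 125 W baseline, NVIDIA's reference board power for the GTX 1660 Super; Gigabyte's card may ship with a different default limit, in which case the result scales accordingly.

```python
def power_limit_watts(base_power_w: float, target_increase_pct: float) -> float:
    """Board power ceiling after raising the power-target slider by a percentage."""
    return base_power_w * (1 + target_increase_pct / 100)

# Assumption: 125 W reference board power; the Gigabyte card's own default may differ.
limit = power_limit_watts(125, 13)
print(f"{limit:.2f} W")
```

Under that assumption, a 13% increase works out to roughly 141 W, about 16 W of extra headroom.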

With the launch of Turing, NVIDIA also tried to make the overclocking process easier by introducing a new Scanner tool and API. The NVIDIA Scanner is a one-click overclocking tool with an intelligent testing algorithm and specialized workload designed to help users find the maximum stable overclock on their particular cards without having to resort to trial and error. The NVIDIA Scanner will try higher and higher frequencies at a given voltage step and test for stability with a specialized workload along the way. The entire process should take around 20 minutes, and when it’s done, the Scanner will have found the maximum stable overclock throughout the entire frequency and voltage curve for a given card.
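The per-voltage search described above can be sketched as a step-until-fail loop. NVIDIA does not publish the Scanner's exact algorithm, so this is a generic illustration under assumptions: `is_stable` is a hypothetical stand-in for the Scanner's stress workload, and the 15 MHz step size and 300 MHz safety cap are assumed values, not NVIDIA's.

```python
from typing import Callable, Dict, List

def scan_curve(
    voltages_mv: List[int],
    stock_mhz: Dict[int, int],
    is_stable: Callable[[int, int], bool],
    step_mhz: int = 15,
    max_offset_mhz: int = 300,
) -> Dict[int, int]:
    """Return the highest frequency that passed the stability test at each voltage point."""
    result: Dict[int, int] = {}
    for mv in voltages_mv:
        best = stock_mhz[mv]  # stock frequency is assumed stable
        freq = best + step_mhz
        # Step upward until the test fails or the safety cap is reached.
        while freq <= stock_mhz[mv] + max_offset_mhz and is_stable(mv, freq):
            best = freq
            freq += step_mhz
        result[mv] = best
    return result

def toy_is_stable(mv: int, mhz: int) -> bool:
    # Hypothetical silicon: stable while the clock stays at or under 1000 MHz + voltage in mV.
    return mhz <= 1000 + mv

print(scan_curve([700, 800], {700: 1500, 800: 1700}, toy_is_stable))
# {700: 1695, 800: 1790}
```

A real tool would likely also need to keep the resulting curve monotonic and recover from driver crashes mid-test, which this sketch ignores.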

As we have mentioned in the past, we have not had much luck with the NVIDIA Scanner tool across multiple test beds, and we were under severe time constraints for this review, so we couldn't properly test the auto-scan feature. In lieu of using the NVIDIA Scanner, we kept things simple and used the frequency offset, voltage offset, and temperature target sliders to manually overclock the Gigabyte GeForce GTX 1660 Super. First, we cranked up the temperature target and voltage and tweaked the fan speed curve by 10-20% at each step to better manage heat; then we bumped up the GPU and memory clocks until the test system was no longer stable or showed on-screen artifacts.
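Unlike the Scanner's per-voltage search, the manual approach applies a single offset across the whole curve and tunes the core and memory clocks in turn. The sketch below models that sequence. The core-before-memory order, the step sizes, the offset limits, and the `core_ok`/`mem_ok` test callbacks (standing in for "stable, no on-screen artifacts") are all hypothetical choices for illustration.

```python
from typing import Callable, Tuple

def find_max_offset(passes: Callable[[int], bool], step: int, limit: int) -> int:
    """Raise an offset in fixed steps and return the last value that passed (0 if none did)."""
    offset = 0
    while offset + step <= limit and passes(offset + step):
        offset += step
    return offset

def manual_overclock(
    core_ok: Callable[[int], bool],
    mem_ok: Callable[[int, int], bool],
    core_step: int = 25,
    mem_step: int = 50,
    core_limit: int = 300,
    mem_limit: int = 1000,
) -> Tuple[int, int]:
    """Tune the core offset first, then the memory offset with the core offset held."""
    core = find_max_offset(core_ok, core_step, core_limit)
    # Memory is tested with the core offset already applied, so both are validated together.
    mem = find_max_offset(lambda m: mem_ok(core, m), mem_step, mem_limit)
    return core, mem
```

Holding the core offset fixed while tuning memory means the final pair has actually been tested together, rather than combining two offsets that were each only stable on their own.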

When all was said and done, we were able to take our card all the way up to a 2.1GHz GPU clock, with the memory at 7.12GHz. Unlike most other Turing-based GeForces, though, we did not bump into the power limit with this card. Trying to push the clocks further resulted in driver errors, however, and we couldn't complete any benchmarks. With some more tweaking, we think the memory may have some headroom left, but we can't really complain about these results.
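To put the memory result in bandwidth terms, the sketch below converts the reported clock to peak bandwidth on the GTX 1660 Super's 192-bit GDDR6 bus. It assumes the overclocking tool reports half the effective data rate (7.0GHz shown for the stock 14Gbps), so 7.12GHz is treated as 14.24Gbps per pin; if the tool reports the clock differently, the inputs change.

```python
def gddr6_bandwidth_gbs(reported_clock_ghz: float, bus_width_bits: int = 192) -> float:
    """Peak memory bandwidth in GB/s.

    Assumption: the reported clock is half the effective GDDR6 data rate,
    i.e. 7.0 GHz reported for 14 Gbps per pin.
    """
    effective_gbps_per_pin = reported_clock_ghz * 2
    return effective_gbps_per_pin * bus_width_bits / 8  # bits -> bytes

stock = gddr6_bandwidth_gbs(7.0)  # 14 Gbps x 192 bits / 8
oc = gddr6_bandwidth_gbs(7.12)    # 14.24 Gbps x 192 bits / 8
print(f"{stock:.1f} -> {oc:.1f} GB/s (+{(oc / stock - 1) * 100:.1f}%)")
```

Under that assumption, the memory overclock adds under 2% of bandwidth over stock.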

While we had the card overclocked, we re-ran a couple of tests and saw some nice performance improvements, which pushed the card into the same territory as the higher-priced Vega 56.

Our goal was to give you an idea of how much power each configuration used while idle and while under a heavy gaming workload. Please keep in mind that we were testing total system power consumption at the outlet here, not the power drawn by the graphics cards alone. It's a relative measurement that gives you a decent view of how much additional power draw a graphics card places on a system while gaming.
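Because the readings are taken at the wall, they include power supply conversion losses and the extra CPU and platform load that gaming brings, so the idle-to-load difference tends to overstate what the card itself adds. The helper below shows that arithmetic; the PSU efficiency is an assumed input you would pick for your own supply, not a value from our test bed.

```python
def gaming_delta_w(idle_wall_w: float, load_wall_w: float) -> float:
    """Extra power drawn at the outlet under a gaming load versus idle, in watts."""
    return load_wall_w - idle_wall_w

def estimated_dc_w(wall_w: float, psu_efficiency: float) -> float:
    """Approximate power delivered past the PSU, given an assumed efficiency in (0, 1]."""
    if not 0 < psu_efficiency <= 1:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return wall_w * psu_efficiency
```

Comparing two cards' load readings on the same test bed cancels much of the shared platform draw, which is what makes these numbers useful as a relative measure rather than an absolute one.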