Displays 101: Here’s What To Look For In Your Next Monitor Or TV

Display Basics: Refreshing The Screen

Now that we understand pixels and resolution, let's talk about frames. Everything we've just learned applies to a single "frame" of video. One screen capture, a still image of video output at an instant, is a "frame" of video. If you're a gamer, you're probably familiar with the term "frames per second." Well, that's relevant to displays in general, not just games. We refer to a display's "FPS" value as the refresh rate.

You may be aware that refresh rates are usually measured in "Hz", rather than "frames per second." That's simply because the "frames" part is implied, and "Hz", the symbol for the SI unit "hertz", explicitly means "per second." In other words, refresh rate in "Hz" and "FPS" are quite literally the same thing.

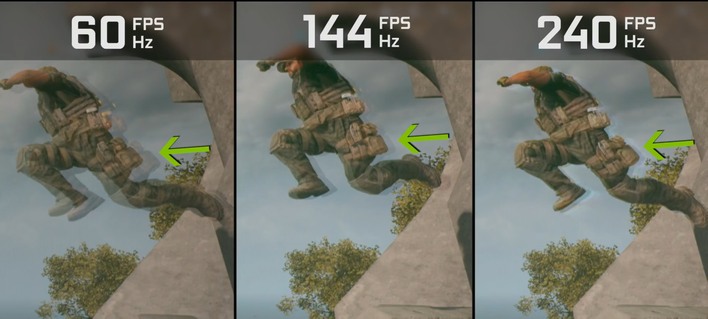

So when we talk about a monitor as having a "60 Hz" refresh rate, that means it updates the entire screen sixty times every second. You can divide one second by the refresh rate to get the amount of time that each frame is on screen; at 60 Hz, one frame lasts 16.67 milliseconds, and at 144 Hz, it's ~6.94 ms. The higher the refresh rate already is, the less frame time each further increase saves: going from 60 Hz to 120 Hz cuts 8.33 ms off every frame, while going from 120 Hz to 240 Hz cuts only 4.17 ms. In other words, there are major diminishing returns as refresh rates get higher and higher.
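To make the arithmetic concrete, here is a minimal Python sketch of the frame-time calculation. The function name `frame_time_ms` and the list of refresh rates are just illustrative choices, not anything defined by a standard.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Return how long one frame stays on screen, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# Illustrative set of refresh rates; each step shows how much frame time it saves.
rates = [60, 120, 144, 240, 360]
for slower, faster in zip(rates, rates[1:]):
    saved = frame_time_ms(slower) - frame_time_ms(faster)
    print(
        f"{slower} Hz -> {faster} Hz: "
        f"{frame_time_ms(slower):.2f} ms -> {frame_time_ms(faster):.2f} ms "
        f"(saves {saved:.2f} ms per frame)"
    )
```

Running it shows the savings per step shrinking as the rates climb, which is the diminishing-returns effect described above.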

The most common display refresh rate is 60 Hz, and the reason this became commonplace is a little complicated, but the short version is that it all comes back to the 60 Hz frequency used for AC power in the United States. Today, there's no hard requirement that displays operate at 60 Hz or any multiple thereof; 70 Hz was the most common frequency back in the MS-DOS days, and nowadays monitors that run at 75 Hz, 100 Hz, 144 Hz, or 165 Hz are not uncommon.

A higher refresh rate simply means that the screen is updating more often. This, obviously, has significant benefits in terms of smoothness, but it doesn't *really* matter for typical desktop usage. A 120 Hz screen is nicer to look at, but for everyday work you don't gain much beyond smoother mouse movements, and it may be easier on your eyes if you're sensitive to display flicker.

For gaming, it's a whole different story. High refresh rates can improve reaction time and make moving objects easier to track by reducing image persistence, or how long a still image remains in your vision. Particularly in fast-paced competitive games like Counter-Strike 2 and Valorant, a high-refresh-rate display is practically a requirement for competitive play, and the higher the refresh rate, the better.

Normally, displays operate at a fixed refresh rate, but in the last decade we've seen considerable advancements in the area of "Variable Refresh Rate" (or "VRR") displays. These are exactly what they sound like: screens that can dynamically alter their refresh rate to accommodate changes in the frame rate of the content being sent to them. This eliminates artifacts like screen tearing, and also improves visual fluidity by eliminating "judder", the awkwardly inconsistent frame timing that occurs when the content frame rate does not match the display's refresh rate.
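Judder is easy to see with a little arithmetic. The sketch below is a simplified model, not a description of how any particular display or driver works: it assumes V-Sync is on, that frame `i` of the content is ready at exactly `i / content_fps` seconds, and that a fixed-rate display can only show a new frame on its next refresh tick. The function name and parameters are hypothetical.

```python
def fixed_refresh_durations(content_fps: int, refresh_hz: int, frames: int) -> list[int]:
    """On-screen duration of each content frame, counted in refresh ticks.

    Simplified model: frame i is ready at i / content_fps seconds and is shown
    at the first refresh tick at or after that moment (V-Sync on).
    """
    # Ceiling division with integers: ceil(i * refresh_hz / content_fps).
    shown_at_tick = [-(-i * refresh_hz // content_fps) for i in range(frames + 1)]
    return [later - earlier for earlier, later in zip(shown_at_tick, shown_at_tick[1:])]


# 24 fps content on a fixed 60 Hz display: frames alternate between 3 and 2 ticks.
print(fixed_refresh_durations(24, 60, 8))

# 60 fps content on a 60 Hz display: every frame lasts exactly one tick.
print(fixed_refresh_durations(60, 60, 8))
```

With 24 fps content on a 60 Hz screen, frames alternate between three ticks (50 ms) and two ticks (33.3 ms) on screen, and that uneven cadence is the judder. A VRR display, within its supported range, would instead hold every frame for the same 41.7 ms.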

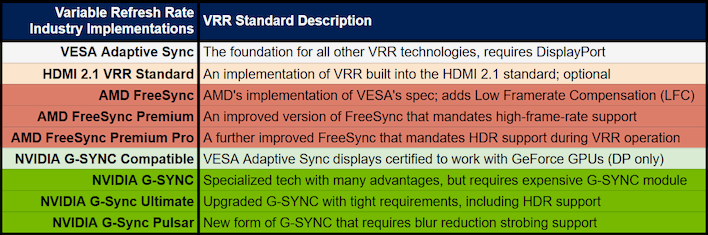

NVIDIA was the first to bring VRR technology to consumers with its G-SYNC feature, but VESA had actually added the concept to the Embedded DisplayPort (eDP) standard beforehand, where it was originally intended as a power-saving feature. Nowadays, you can find displays that describe their variable-refresh-rate abilities with many different terms. There are still G-SYNC monitors, but there are also G-SYNC Ultimate and G-SYNC Compatible screens. AMD has its own standards, called FreeSync, FreeSync Premium, and FreeSync Premium Pro. Finally, there is the generic term "Adaptive Sync", as well as HDMI 2.1's own "VRR" standard. What's the difference?

This chart should lay it out for you. Essentially, G-SYNC in its original, Ultimate, or Pulsar form is the best option, but also the most expensive, and it's limited to GeForce GPUs, too. Meanwhile, the rest of the options should work with any modern GPU as long as you're using DisplayPort or HDMI 2.1. Console gamers, you'll need to rely on HDMI 2.1 VRR, and using it can be a little complicated, especially on PlayStation. That's outside the scope of this article, though.

Monitor And Display Basics: Contrast And Color Depth

Resolution and refresh rate are critical specs for monitors, but there's a lot more to image quality than just how many pixels you can show. Two of the most important specifications that determine a monitor's capabilities are its contrast ratio and its color gamut. We're going to talk about contrast first, because it's the simpler of the two concepts.

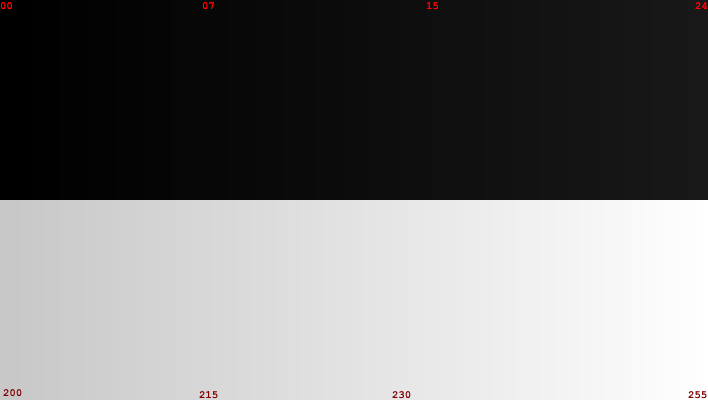

"Contrast" is of course the difference between two values, and so "contrast" in display specifications is simply a measure of brightness versus darkness. It's a value that describes the difference in luminance (brightness) between the brightest point on the monitor and the darkest point. This should in theory be measured with the monitor in a static (unchanging) state, but unfortunately that isn't always the case.

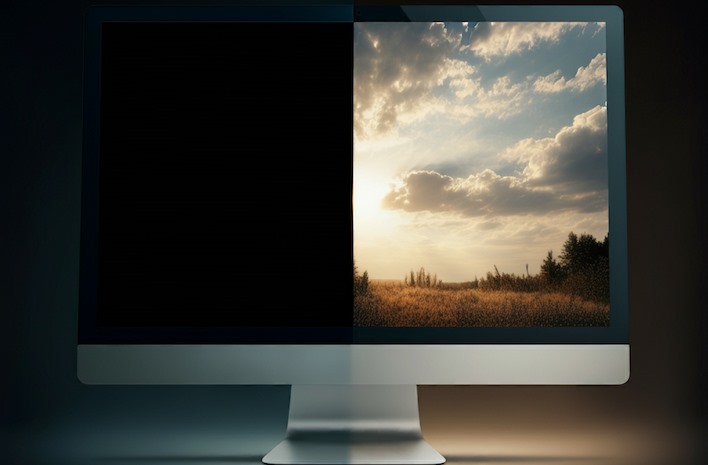

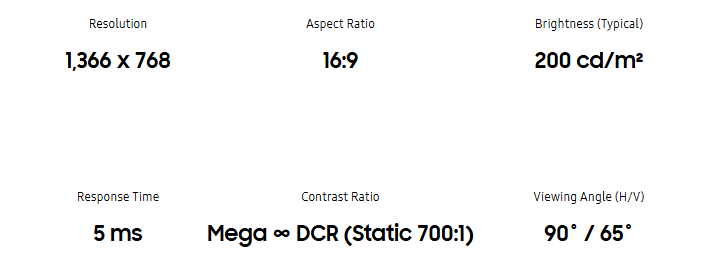

When we talk about a display's "contrast ratio", what we're talking about is normally the "static" or "native" contrast ratio. This is given as a numerical ratio, usually over 1 (e.g. 1000:1), and it describes the ratio between the brightest and darkest value that the monitor is able to display simultaneously, without adjusting the image or the overall screen brightness.
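As a rough illustration, a static contrast ratio is just the white luminance divided by the black luminance, with both readings taken from the same image at the same settings. In this sketch the function names are hypothetical and the luminance readings (in cd/m², often called nits) are made-up example numbers, not measurements of any real monitor.

```python
def static_contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Ratio of brightest to darkest luminance measured in the same static image."""
    if white_nits < 0 or black_nits < 0:
        raise ValueError("luminance cannot be negative")
    if black_nits == 0:
        # A panel that emits no light at all for black has an unbounded ratio.
        return float("inf")
    return white_nits / black_nits


def format_ratio(ratio: float) -> str:
    """Format a ratio the way spec sheets usually print it, e.g. '1000:1'."""
    return "inf:1" if ratio == float("inf") else f"{ratio:.0f}:1"


# Hypothetical readings: 250 nits for white, 0.25 nits for black.
print(format_ratio(static_contrast_ratio(250, 0.25)))
```

Note that the formula is very sensitive to the black level: halving the black reading doubles the ratio, while white would have to double in brightness to do the same.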

Some monitors dishonestly advertise high contrast ratios as a "dynamic contrast"; this "measurement" involves comparing two values that can't exist at the same time on the same screen, making it extremely misleading and often useless. Sometimes it's justified with automatic contrast adjustment features that will change the backlight brightness based on what's on screen, but that doesn't really tell you anything about the capabilities of the panel in the display.

If the monitor has a good static contrast ratio, then dark areas will be dim without becoming murky, while bright areas will be very bright without "whiting out", or losing detail. You can think of a display's contrast ratio as a rough measure of how well it retains detail in very light or very dark images. Most LCD monitors have rather poor contrast, whereas high contrast is one of the major strengths of OLEDs.

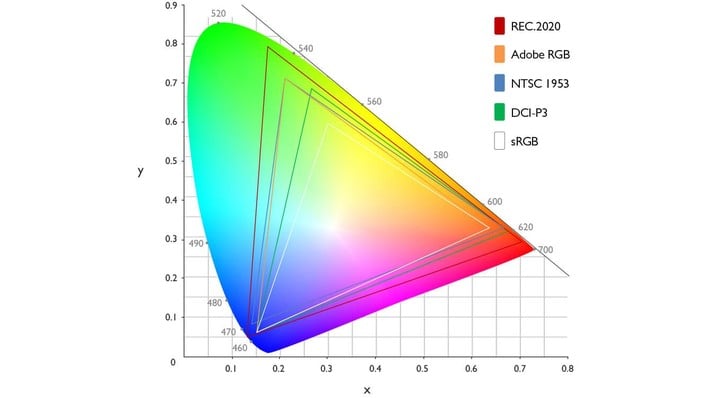

Meanwhile, a monitor's color gamut refers to the range of colors that it can accurately reproduce. This is a complex concept, which is why this specification is typically presented as a percentage of a specific, pre-defined color space. By plotting the most-saturated red, green, and blue colors that a display can represent, we can determine how much of a known colorspace the display can reproduce.

Understanding these specifications requires a bit of understanding of the colorspaces, but it's not a particularly difficult concept as long as you're okay with some abstraction. The image above displays the regions covered by some popular colorspaces. Put simply, sRGB is the standard that covers the smallest area, which is why you will often see displays claim greater-than-100% coverage of the sRGB space. DCI-P3 covers much more area, as do Adobe RGB and NTSC. Finally, the Rec.2020 standard forms the basis of modern HDR color grading, which is why it's very wide.

One thing you'll notice is that none of these colorspaces exactly line up. For example, the NTSC color space includes much richer green colors than DCI-P3, but doesn't delve as deeply into blues. It's for this reason that you can't "convert" color space specs. You'll sometimes hear that 100% sRGB is "equivalent" to 72% NTSC. As you can see, though, the sRGB space isn't entirely included within the NTSC colorspace, so this statement doesn't really make any sense.
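The gap between "area ratio" and "actual coverage" can be checked numerically. The sketch below is only an approximation of how gamut percentages are derived: it treats each color space as a triangle in the CIE 1931 xy chromaticity diagram, uses the published sRGB and 1953 NTSC primaries, and clips one triangle against the other with the Sutherland-Hodgman algorithm. Real spec-sheet figures may use a different diagram (such as CIE 1976 u'v') and a measured panel gamut, so treat the numbers as illustrative. All function names here are hypothetical.

```python
Point = tuple[float, float]

# Red, green, blue primaries as CIE 1931 xy coordinates (counter-clockwise order).
SRGB: list[Point] = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
NTSC: list[Point] = [(0.67, 0.33), (0.21, 0.71), (0.14, 0.08)]


def polygon_area(poly: list[Point]) -> float:
    """Absolute polygon area via the shoelace formula."""
    total = 0.0
    for i, (x1, y1) in enumerate(poly):
        x2, y2 = poly[(i + 1) % len(poly)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def side(a: Point, b: Point, p: Point) -> float:
    """Positive when p lies to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def clip(subject: list[Point], clipper: list[Point]) -> list[Point]:
    """Sutherland-Hodgman: part of `subject` inside a convex, counter-clockwise `clipper`."""
    output = list(subject)
    for i, a in enumerate(clipper):
        b = clipper[(i + 1) % len(clipper)]
        points, output = output, []
        for j, p in enumerate(points):
            q = points[(j + 1) % len(points)]
            dp, dq = side(a, b, p), side(a, b, q)
            if dp >= 0:
                output.append(p)  # p is on the inner side of this clip edge
            if (dp > 0 > dq) or (dp < 0 < dq):
                t = dp / (dp - dq)  # where segment p -> q crosses the clip edge
                output.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
        if not output:
            break
    return output


area_ratio = polygon_area(SRGB) / polygon_area(NTSC)
coverage = polygon_area(clip(SRGB, NTSC)) / polygon_area(NTSC)
print(f"sRGB area as a share of NTSC area: {area_ratio:.1%}")
print(f"NTSC actually covered by sRGB:     {coverage:.1%}")
```

Under these assumptions the overlap comes out a few percentage points lower than the plain area ratio, because the blue corner of the sRGB triangle pokes outside the NTSC triangle. That is why a single "equivalent" percentage between two color spaces can mislead.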

The key takeaways from this section, when reading a monitor's spec sheet, are that you want as high a contrast ratio as possible (allowing for the other specs you want), and that you want to see colorspace comparisons against wide colorspaces: preferably Rec.2020, but more likely DCI-P3 or Adobe RGB. You want the percentage in those colorspace comparisons to be as high as possible; this means that the monitor will have vibrant, saturated colors and can more accurately display content designed for those colorspaces.