Displays 101: Here’s What To Look For In Your Next Monitor Or TV

Welcome to Monitor and Display Specifications 101. This is foundational information, and this guide is geared toward those new to display shopping or seeking a refresher, so it will likely cover familiar ground for regular HotHardware readers. Still, we're interested to hear your feedback, so make sure to leave a comment if you have a question, a concern, input, or simply want to give your opinion on this guide. Without further ado, let's get started.

Monitor And Display Basics: Pixels On A Screen

So what is a "pixel"? You've probably heard the term before, even if you're a complete novice to learning about displays. Put simply, a pixel is one single dot in the grid that makes up your screen. Each pixel's color is set by a small group of values, usually three eight-bit numbers, meaning numbers ranging from 0 to 255. These numbers correspond to the intensity of red, green, and blue light, and by setting those values, you determine both the color and the brightness of the pixel.
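To make the "three eight-bit numbers" idea concrete, here is a minimal Python sketch. It assumes 8 bits per channel (often called 24-bit color); HDR formats use more bits per channel. The function names `make_pixel` and `to_hex` are purely illustrative and not part of any display API.

```python
# Illustrative sketch: a pixel as three 8-bit channel values (red, green, blue).
# Assumes 8 bits per channel; HDR formats use more bits per channel.

BITS_PER_CHANNEL = 8
MAX_VALUE = 2 ** BITS_PER_CHANNEL - 1  # 255


def make_pixel(red: int, green: int, blue: int) -> tuple[int, int, int]:
    """Return an (R, G, B) tuple after checking each value fits in 8 bits."""
    for value in (red, green, blue):
        if not 0 <= value <= MAX_VALUE:
            raise ValueError(f"channel value {value} is outside 0..{MAX_VALUE}")
    return (red, green, blue)


def to_hex(pixel: tuple[int, int, int]) -> str:
    """Format a pixel the way web colors are usually written, e.g. #FF8000."""
    return "#{:02X}{:02X}{:02X}".format(*pixel)


# Every combination of three 8-bit values is a distinct color.
TOTAL_COLORS = (MAX_VALUE + 1) ** 3

black = make_pixel(0, 0, 0)        # all channels off
white = make_pixel(255, 255, 255)  # all channels at full intensity
dim_red = make_pixel(128, 0, 0)    # red only, at roughly half the maximum value

print(to_hex(black), to_hex(white), to_hex(dim_red))
print(f"{TOTAL_COLORS:,} possible colors")
```

Three 8-bit channels give 256 × 256 × 256 = 16,777,216 possible combinations, which is where the familiar "16.7 million colors" figure on spec sheets comes from.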

For the purposes of this guide, we're going to be exclusively talking about flat-panel displays. That means screens based on Liquid-Crystal Displays (LCDs) and Organic Light-Emitting Diodes (OLEDs), primarily. Some of this information will apply to older CRT monitors, projectors, and other display technologies, but not all of it. Just keep in mind that when we make broad generalizations such as "all displays" or "all screens", we're specifically referring to modern flat-panel displays.

As we noted, these basically come in two types these days: LCDs and OLEDs. These two display technologies are radically different in nature despite serving essentially the same function. LCDs work by shining a bright light (called the "backlight") through a layer of liquid crystals that, together with polarizing filters and red, green, and blue color filters, controls how much light of each color passes through each pixel. OLEDs are simpler in concept: each pixel emits its own light, so a single panel provides both light and color, with no backlight needed.

OLEDs do have a much higher baseline in terms of image quality, but LCDs and OLEDs can both offer stunning display experiences. We won't go in-depth on the differences right now, but the key point is that OLEDs are still relatively expensive, so don't be misled by monitors labeled "LED". When a monitor is described as an "LED monitor", what that means is that it's an LCD monitor that uses LEDs for its backlight.

That's basically standard practice at this point, but in the early days of LCD monitors, many of them used a fluorescent tube (a cold-cathode fluorescent lamp, or "CCFL") as the backlight. LEDs make the monitor thinner in addition to reducing weight, power consumption, and heat output, while also providing a brighter and more consistent image. Just keep in mind that if it says "LED monitor" and not specifically "OLED," then it's really talking about a garden-variety LCD panel with an LED backlight. We'll talk more about panel types later.

Monitor And Display Basics: Aspects Of Resolution

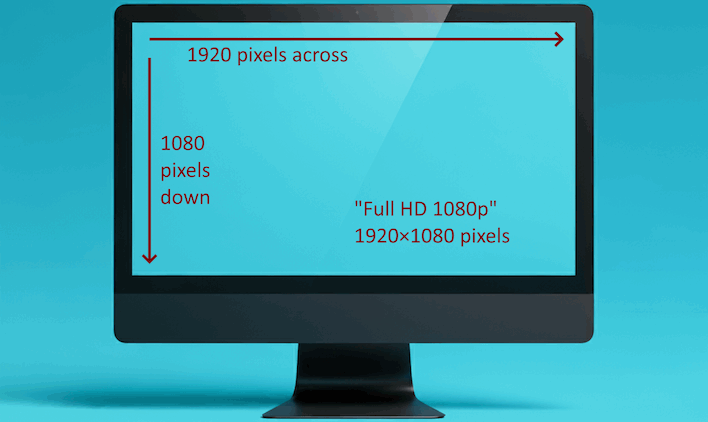

So what is "resolution," then? When people talk about a screen's "resolution", they mean the amount of detail that the screen can "resolve", or represent correctly. Resolution is arguably best measured using a metric called "Pixels Per Inch" (PPI), but you'll most often see it instead listed as an X-times-Y value, like "1920×1080". This means that the pixel grid has 1920 columns across (1920 pixels wide) and 1080 rows down (1080 pixels tall). We refer to this as the "spatial resolution" of the display.
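The X-times-Y notation translates directly into a pixel count: columns multiplied by rows. Here is a small illustrative Python sketch; `parse_resolution` and `pixel_count` are hypothetical helper names, and the parser assumes the simple "WIDTH×HEIGHT" format shown above (it also accepts a plain letter x).

```python
# Illustrative sketch: parse a resolution written as "WIDTHxHEIGHT" and count its pixels.
# Accepts either the multiplication sign (×) or a plain letter x.


def parse_resolution(text: str) -> tuple[int, int]:
    """Turn '1920×1080' or '1920x1080' into (1920, 1080)."""
    normalized = text.lower().replace("×", "x")
    width_str, height_str = normalized.split("x")
    return int(width_str), int(height_str)  # int() ignores surrounding spaces


def pixel_count(text: str) -> int:
    """Columns times rows: the total number of pixels in the grid."""
    width, height = parse_resolution(text)
    return width * height


print(parse_resolution("1920×1080"))
print(pixel_count("1920×1080"))
print(pixel_count("3840x2160") // pixel_count("1920x1080"))
```

A 1920×1080 grid works out to 2,073,600 pixels (about 2 megapixels), and 3840×2160 ("4K") has exactly four times as many.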

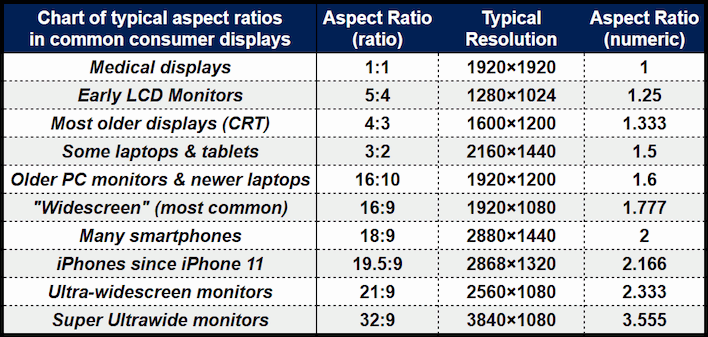

Why is the second number smaller than the first number? Because of the non-square aspect ratio. "Aspect ratio" simply refers to the ratio between the width and the height of something; in this case, the display. The most common aspect ratio for displays today is 16:9, meaning that for every 16 pixels across, there are 9 pixels down. This aspect ratio is called "widescreen," but there are many other aspect ratios for displays. Here are a few:
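You can recover a display's aspect ratio from its resolution by dividing both numbers by their greatest common divisor. This Python sketch assumes square pixels, which holds for typical modern PC monitors; `aspect_ratio` is an illustrative name, while `math.gcd` is from Python's standard library.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> str:
    """Reduce a resolution to its simplest width:height ratio (assumes square pixels)."""
    divisor = gcd(width, height)
    return f"{width // divisor}:{height // divisor}"


for w, h in [(1920, 1080), (3840, 2160), (1920, 1200), (2560, 1080), (3440, 1440)]:
    print(f"{w}x{h} -> {aspect_ratio(w, h)}")
```

Note that exact reduction doesn't always match the marketing label: 1920×1200 reduces to 8:5, which is usually sold as 16:10, and the ultra-wide resolutions 2560×1080 and 3440×1440 reduce to 64:27 and 43:18, both commonly sold as "21:9".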

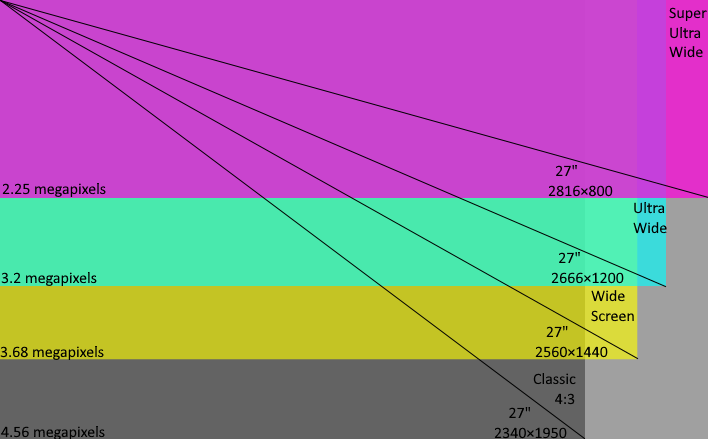

We typically talk about screen size in terms of diagonal inches. This is simply a linear measurement, in inches, from a top corner to the opposite bottom corner of the screen. This made sense when nearly every screen had roughly the same aspect ratio, but now that a wide variety of aspect ratios are in use, it can be quite misleading. Check out this graphic:

You can clearly see that as the aspect ratio gets wider, the actual screen area goes down considerably even though the diagonal measurement doesn't change. This will be obvious to anyone who remembers geometry class, but it's very easy to hear two monitors described as, say, "34 inches" and think that they are the same size. Don't be fooled: the wider a monitor's aspect ratio, the less screen area it has for a given diagonal measurement. A 34" ultra-wide is roughly the same height as a 27" widescreen display.
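The geometry here is just the Pythagorean theorem: the diagonal is the hypotenuse of a right triangle whose sides are in the proportion of the aspect ratio. The following Python sketch computes width, height, and area from a diagonal and an aspect ratio. The name `screen_dimensions` is illustrative, and it uses a nominal 21:9 for the ultra-wide even though real ultra-wide panels are slightly wider (64:27 or 43:18).

```python
import math


def screen_dimensions(diagonal_in: float, ratio_w: float, ratio_h: float) -> tuple[float, float, float]:
    """Return (width, height, area) in inches and square inches.

    The diagonal is the hypotenuse of a right triangle whose legs are in the
    proportion ratio_w : ratio_h, so each "ratio unit" is diagonal / hypot(w, h).
    """
    unit = diagonal_in / math.hypot(ratio_w, ratio_h)
    width = ratio_w * unit
    height = ratio_h * unit
    return width, height, width * height


for label, diag, rw, rh in [
    ('34" 16:9', 34, 16, 9),
    ('34" 21:9 (nominal)', 34, 21, 9),
    ('27" 16:9', 27, 16, 9),
]:
    w, h, area = screen_dimensions(diag, rw, rh)
    print(f"{label}: {w:.1f} x {h:.1f} in, {area:.0f} sq in")
```

Run it and the 34" ultra-wide comes out only about 0.15" taller than the 27" widescreen, while having roughly 15% less area than a 34" screen at 16:9.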

Of course, resolution varies between display models and isn't necessarily connected to size. You can have a very large display with a low resolution, and you can have a very small display with a very high resolution. When we talk about "screen real estate", we're talking about the workspace afforded by a monitor, which is related to its resolution, not its size. A 24" 4K monitor has four times the workspace of a 48" 1080p TV.

However, the problem with spatial resolution values is that this number by itself doesn't tell you how sharp the screen actually is. That's because sharpness is best measured in PPI, as we mentioned before, and you need to know the size of the screen to calculate it. A higher PPI simply means higher "pixel density", and that in turn means each individual pixel is smaller. This allows the display to create a sharper and clearer image. Here's a chart of some representative pixel density values:

As you can see, a high spatial resolution value, which equates to a high pixel count, does not necessarily mean that the display is particularly sharp. If you've ever looked at a laptop or smartphone screen and thought "wow! what a clear image!" it was because of its relatively high pixel density. Pixel density is exactly what Apple was selling when it started talking about "Retina" displays, and it's the reason your Apple Watch looks sharp despite having less than one-quarter of a megapixel of resolution.
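PPI itself is easy to calculate: divide the length of the diagonal in pixels by the length of the diagonal in inches. This Python sketch assumes square pixels and that the advertised diagonal matches the viewable area; `pixels_per_inch` and `pixel_pitch_mm` are illustrative names, and "pixel pitch" here means the approximate center-to-center distance between neighboring pixels.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels along the diagonal divided by inches along the diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixel_pitch_mm(ppi: float) -> float:
    """Approximate center-to-center distance between pixels, in millimeters."""
    return 25.4 / ppi  # 25.4 mm per inch


examples = [
    ("24-inch 1080p monitor", 1920, 1080, 24),
    ("27-inch 1440p monitor", 2560, 1440, 27),
    ("27-inch 4K monitor", 3840, 2160, 27),
    ("48-inch 4K TV", 3840, 2160, 48),
]

for label, w, h, diag in examples:
    ppi = pixels_per_inch(w, h, diag)
    print(f"{label}: {ppi:.1f} PPI, pitch {pixel_pitch_mm(ppi):.3f} mm")
```

The 48" 4K TV and the 24" 1080p monitor land on the same density (about 91.8 PPI): doubling both the resolution and the diagonal leaves the pixel density unchanged, even though the TV has four times as many pixels.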

Of course, a higher resolution at the same physical size increases the PPI value, just as a smaller size at the same screen resolution does. As such, when you're shopping for monitors, be mindful that buying a larger display will reduce the sharpness of the screen unless you also get a higher resolution.
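To put a number on "unless you also get a higher resolution," you can run the PPI formula in reverse: pick a target density and a screen size, and solve for the pixel grid. This is a rough Python sketch; `resolution_for_ppi` is a hypothetical helper, and it assumes square pixels and a 16:9 screen in the examples.

```python
import math


def resolution_for_ppi(target_ppi: float, diagonal_in: float, ratio_w: int, ratio_h: int) -> tuple[int, int]:
    """Approximate pixel grid needed to reach target_ppi at this size and aspect ratio."""
    diagonal_px = target_ppi * diagonal_in          # pixels needed along the diagonal
    unit = diagonal_px / math.hypot(ratio_w, ratio_h)
    return round(ratio_w * unit), round(ratio_h * unit)


# Density of a 24-inch 1080p monitor (about 91.8 PPI)
current_ppi = math.hypot(1920, 1080) / 24

print(resolution_for_ppi(current_ppi, 27, 16, 9))
print(resolution_for_ppi(current_ppi, 32, 16, 9))
```

Matching a 24" 1080p monitor's sharpness takes about 2160×1215 at 27", which isn't a standard resolution, so in practice 2560×1440 (about 109 PPI at 27") is the common step up. At 32", 2560×1440 only matches the 24" 1080p density.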

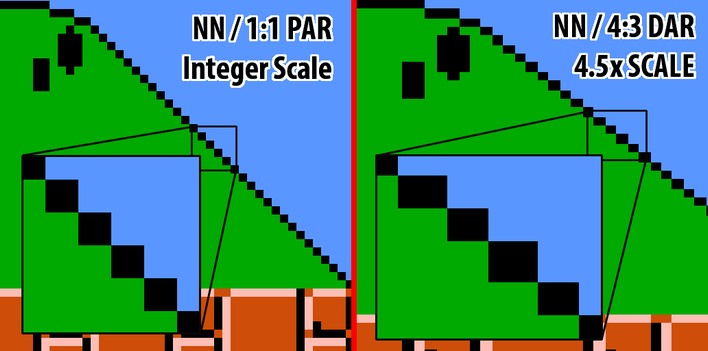

Another thing to understand is that the picture on your screen is only as good as the picture you're sending to the screen. Generally speaking, allowing for a few exceptions, the best picture quality will come from sending the display an image at its built-in or "native" resolution. In other words, if your monitor's native resolution is 1920×1080 ("1080p"), you want to feed it a 1080p signal for best quality.

Sending a lower-resolution input stream may work, but it can introduce messy scaling artifacts as the display struggles to represent an image that doesn't match up 1:1 with its pixel layout. Meanwhile, sending a higher-resolution video feed generally won't work at all, although some TVs are capable of "downscaling" a higher-resolution image. This usually introduces additional latency and isn't really a good idea for PC use.
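One way to see why some lower resolutions scale better than others is to compute how many display pixels each source pixel has to cover. This Python sketch uses illustrative names (`scale_factor`, `maps_cleanly`). A whole-number factor means each source pixel could map to an exact block of display pixels (for example, 2×2). Whether the monitor or graphics driver actually duplicates pixels that way, often called "integer scaling," rather than smoothing the image anyway, depends on the hardware.

```python
def scale_factor(native: tuple[int, int], source: tuple[int, int]) -> tuple[float, float]:
    """Display pixels per source pixel, horizontally and vertically."""
    return native[0] / source[0], native[1] / source[1]


def maps_cleanly(native: tuple[int, int], source: tuple[int, int]) -> bool:
    """True when every source pixel can cover a whole, square block of display pixels."""
    sx, sy = scale_factor(native, source)
    return sx == sy and sx.is_integer()


native_4k = (3840, 2160)
for source in [(3840, 2160), (1920, 1080), (2560, 1440)]:
    sx, sy = scale_factor(native_4k, source)
    print(source, f"scale {sx:g}x{sy:g}", "clean" if maps_cleanly(native_4k, source) else "interpolated")
```

A 1080p signal on a 4K panel scales by exactly 2 in each direction, while 1440p on the same panel needs a factor of 1.5, so some source pixels must be blended across neighboring display pixels.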

Even if the video signal itself matches the display's native resolution, you can still suffer reduced image quality when watching content (like a video or a game) that doesn't match that resolution, because it has to be rescaled before it reaches the screen. Of course, there are reasons to do this intentionally, such as choosing a lower video resolution to save bandwidth, or reducing a game's render resolution, sacrificing visuals to improve performance.
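The performance side of that trade-off comes from the number of pixels being drawn, which falls with the square of a per-axis reduction. The Python sketch below assumes a per-axis "render scale" setting like the one many games expose; `render_resolution` and `pixel_fraction` are hypothetical names. Real GPU workload doesn't track pixel count perfectly, so treat the fraction as a rough indicator only.

```python
def render_resolution(native: tuple[int, int], scale: float) -> tuple[int, int]:
    """Apply a per-axis render scale (0.5 means half the width and half the height)."""
    return round(native[0] * scale), round(native[1] * scale)


def pixel_fraction(render: tuple[int, int], native: tuple[int, int]) -> float:
    """Share of the native pixel count that actually gets rendered."""
    return (render[0] * render[1]) / (native[0] * native[1])


native = (3840, 2160)
for render in [(3840, 2160), (2560, 1440), render_resolution(native, 0.5)]:
    print(render, f"{pixel_fraction(render, native):.0%} of native pixels")
```

Rendering at 2560×1440 on a 4K display draws about 44% of the native pixel count, and halving each axis (1920×1080) draws only 25%.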