NVIDIA Boosts DGX Spark Performance And Pushes New Developer Tools at CES 2026

DGX Spark Performance Increases

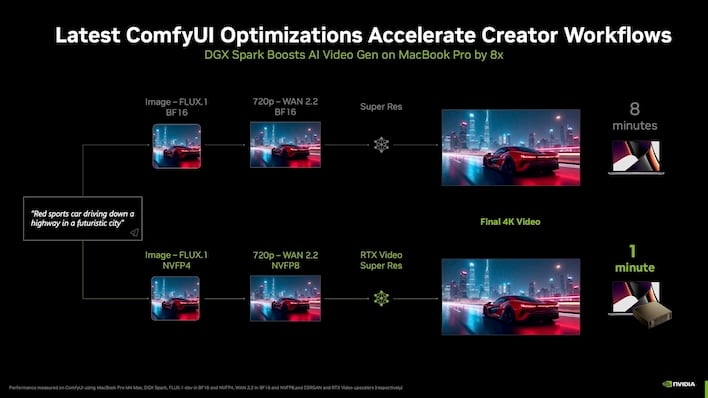

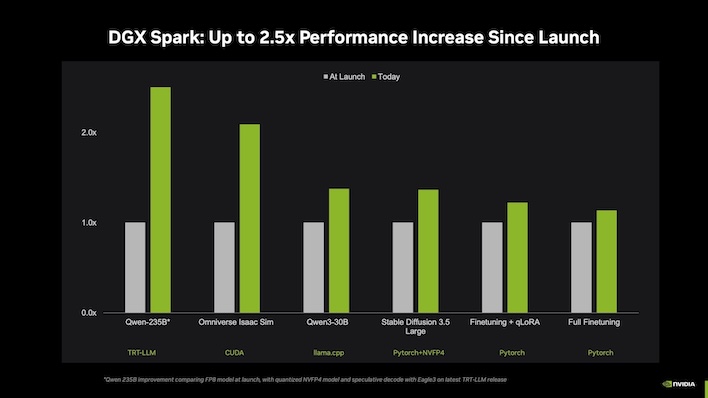

Because performance was the biggest hole that we observed in our DGX Spark review at the time of its launch, NVIDIA has spent the majority of its engineering time working on ways to bring an uplift. Getting thumped in our review by an M4 Max Mac Studio that shipped months earlier probably didn't feel too good, but NVIDIA didn't sit back and let Apple march all over it.

In fact, NVIDIA showed at least one image generation workflow that was 8x faster on DGX Spark than on an M4 Max MacBook Pro.

Curiously, this slide apparently uses a placeholder, since it doesn't show the output for a subjective quality comparison. Instead, the same image is used throughout. At any rate, the company is touting "up to" 2.5x performance since launch back in September, which includes updates released in November as well as what's being announced today.

Before we get too excited about huge performance optimizations, note that most of the big increases appear to come from models that have been quantized from FP8 down to NVIDIA's proprietary NVFP4 data type. For example, the 2.5x performance gain is specifically for Qwen-235B, and that is mostly because the model has been converted for NVIDIA's latest TensorRT-LLM (often abbreviated TRT-LLM) release, which is available as open-source software on GitHub.

This is great in a couple of ways: one being the obvious performance increase, but also in memory consumption. The DGX Spark has 128 GB of memory, of course, but the real advances are multi-model agents that need to cram even more data into memory. With memory shortages coming in fast and furious, and the vast majority of wafer allocations earmarked for high-margin datacenter hardware, conserving memory is going to be critical if AI developers intend to improve both performance and accuracy. For example, the open Nemotron models that NVIDIA released last month use NVFP4 heavily.
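To put rough numbers on the memory side of that trade, here is a back-of-the-envelope sketch in Python. It counts weights only and assumes a flat 8 bits per parameter for FP8 and 4 bits for a 4-bit format like NVFP4. Real deployments also need room for quantization scale factors, the KV cache, and activations, so treat these figures as a floor rather than a measurement. The function name `weight_footprint_gb` is our own, purely for illustration.

```python
def weight_footprint_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in decimal gigabytes (weights only).

    Assumption: every parameter takes exactly `bits_per_param` bits.
    Scale factors, KV cache, and activations are deliberately ignored.
    """
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


PARAMS_235B = 235_000_000_000  # parameter count implied by the "235B" name

fp8_gb = weight_footprint_gb(PARAMS_235B, 8)
four_bit_gb = weight_footprint_gb(PARAMS_235B, 4)

print(f"8-bit weights: {fp8_gb:.1f} GB")
print(f"4-bit weights: {four_bit_gb:.1f} GB")
```

Under those assumptions, halving the bits per weight halves the footprint, which is the whole appeal when a machine's memory is fixed at 128 GB.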

There are performance increases across the board, however, and NVIDIA says we can expect to see them with other frameworks, including bare CUDA, llama.cpp, and PyTorch, both with and without NVFP4. Speculative decode isn't really a new concept, but it's seeing wider use as Eagle3 "pre-bakes" responses that then get passed off to Qwen, and that's how NVIDIA achieved its claimed 2.5x performance vs the launch period. What's unclear is exactly which metric the chart above is measuring with its 2.5x figure, but we're working on the assumption that it's related to time to a completed response.

Our own DGX Spark testing showed a lot of promise (credit: HotHardware)

For those unfamiliar, speculative decode lets the system improve a key performance indicator: how quickly a response gets generated. If you've experimented with LLMs in LM Studio on your PC, you know the most agonizing period of time is the time spent thinking, and speculative decode reduces that. Basically, the concept is to have a smaller "draft" stage that gets to generating output quickly (Eagle3-120B quantized to NVFP4 in this case), and then a larger "target" that checks and completes the work. It wasn't really possible to fit a 235B-parameter model into 128 GB of system memory along with a smaller draft model for speculative decode until the larger Qwen 235B was quantized to NVFP4.
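The draft-and-target idea can be sketched in a few lines of Python. This is a toy, greedy version of generic speculative decoding, not NVIDIA's TRT-LLM implementation and not specific to Eagle3. The two "models" (`target_next` and `draft_next`) are made-up deterministic functions standing in for real networks, and the draft is rigged to guess wrong at every fourth position so that rejections actually occur.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]


def target_next(seq: List[int]) -> int:
    """Hypothetical stand-in for the large, slow target model."""
    return (sum(seq) * 31 + len(seq)) % 50


def draft_next(seq: List[int]) -> int:
    """Hypothetical stand-in for the small draft model.

    It agrees with the target except when the context length is a
    multiple of 4, where it deliberately guesses wrong.
    """
    guess = target_next(seq)
    return (guess + 1) % 50 if len(seq) % 4 == 0 else guess


def greedy_decode(model: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Baseline: one model call per generated token."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(model(seq))
    return seq


def speculative_decode(
    draft: NextToken, target: NextToken, prompt: List[int], n_new: int, k: int = 4
) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (sequence, target passes)."""
    seq = list(prompt)
    goal = len(prompt) + n_new
    target_passes = 0
    while len(seq) < goal:
        # 1. The draft cheaply proposes up to k tokens in a row.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(seq))):
            proposal.append(draft(seq + proposal))
        # 2. The target checks the proposal. A real system scores all
        #    positions in one batched forward pass; we count it as one.
        target_passes += 1
        accepted: List[int] = []
        for tok in proposal:
            expected = target(seq + accepted)
            if tok == expected:
                accepted.append(tok)       # draft was right, keep it
            else:
                accepted.append(expected)  # draft was wrong, fix and stop
                break
        seq.extend(accepted)
    return seq, target_passes


prompt = [1, 2, 3]
spec_out, passes = speculative_decode(draft_next, target_next, prompt, n_new=12)
assert spec_out == greedy_decode(target_next, prompt, n_new=12)
print(f"12 tokens generated with {passes} target passes")
```

The output matches what the target would have produced on its own, but the expensive model is consulted fewer times than there are tokens. That is where the speedup comes from, and it only holds as long as the draft guesses right often enough.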

Explore AI Workloads With More Tools

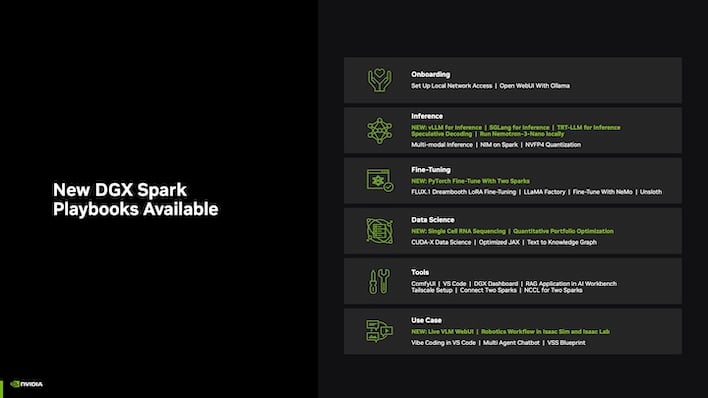

One of the most helpful resources NVIDIA provided during our DGX Spark review period was a healthy list of what the company calls playbooks, which provide step-by-step instructions for setup, execution, and performance metrics of a variety of AI workloads. While some may wonder how NVIDIA got into such a dominant position in the AI hardware race, one only has to look at the vast array of APIs, finely-tuned libraries, developer documentation, and supporting software to get their answer.

Several of those playbooks have seen significant updates, including the onboarding process, Open WebUI with Ollama LLMs, and playbooks built around common development tools like Visual Studio Code, retrieval-augmented generation (RAG) applications that can search the web in addition to generating text, and multi-DGX Spark tools using NVIDIA's Collective Communications Library (NCCL).

Additionally, there are a total of seven brand-new playbooks covering a variety of use cases, including TRT-LLM speculative decoding (as discussed above), multi-modal inference, and a really interesting single-cell RNA sequencing workload. If you have a Spark (or even if you don't, but you have an NVIDIA GPU with gobs of memory like a GeForce RTX 5090), definitely check those out.

The company also has some new game creation tools to assist with generating code and assets. However, rather than being fully AI-created "slop," NVIDIA wants to augment existing textures and objects using AI to upgrade environments and textures to use custom materials and enhanced lighting.

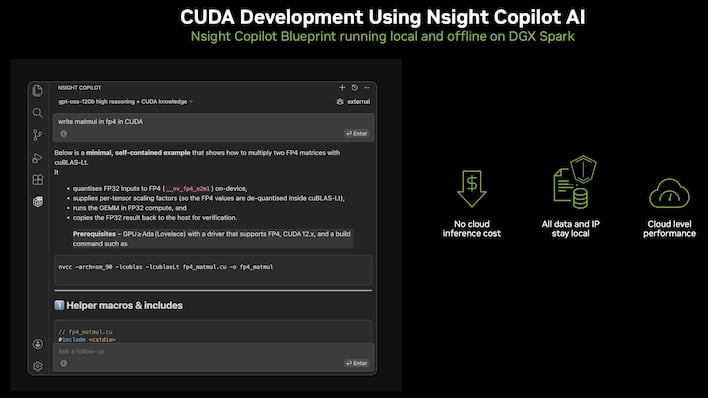

Aspiring developers in non-game fields might be interested instead in using Nsight CUDA Copilot AI within Visual Studio Code to get some vibe coding done while keeping all data and intellectual property local to the machine rather than in the cloud. Nsight will be exclusive to the DGX Spark or partner machines built on NVIDIA's GB10 Superchip.

Lastly, NVIDIA is bringing support for its Enterprise AI platform to the DGX Spark later this month. Enterprise AI is a bit of an umbrella term that encompasses cloud-native tools meant to help businesses build and deploy production-ready AI applications. This could be anything from robot-assisted manufacturing in factories to retail loss-prevention and medical point-of-care applications. A lot of those apps run either in datacenters or cloud providers, or on edge devices like the Jetson family of small, power-efficient single-board computers.