NVIDIA Blackwell GPUs Supercharge MoE AI, Boosting Performance Up to 10X

For clarity, the company is talking about its GB200 NVL72 machines. These are the big racks you've seen in pictures like the one above; they really don't look like typical server racks due to half the rack being network hardware and everything requiring liquid cooling. The compute density is impressive—5.76 PFLOPS for single-precision—and they have the ability to focus the entire power of the whole rack on a single problem thanks to all that advanced networking equipment.

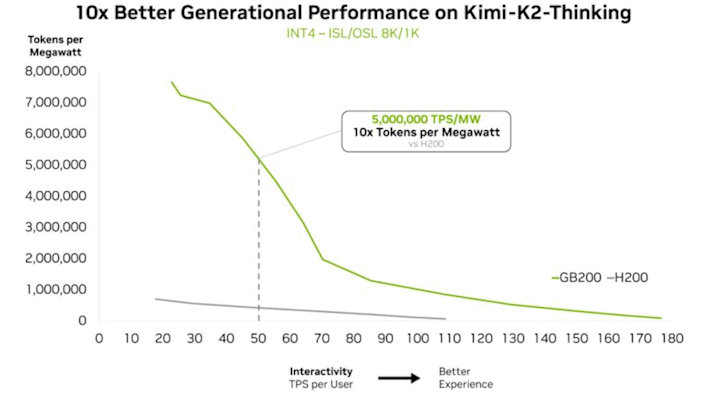

The comparison that NVIDIA is making is against its previous-generation HGX H200 machines, which are darn fast by any reasonable metric, and certainly not 1/10 the speed of Blackwell based on specs alone. Specifically, NVIDIA is comparing the HGX H200 against the GB200 NVL72 in AI inference on the Kimi K2 Thinking model, which is the top-rated open-source model on the Artificial Analysis leaderboards.

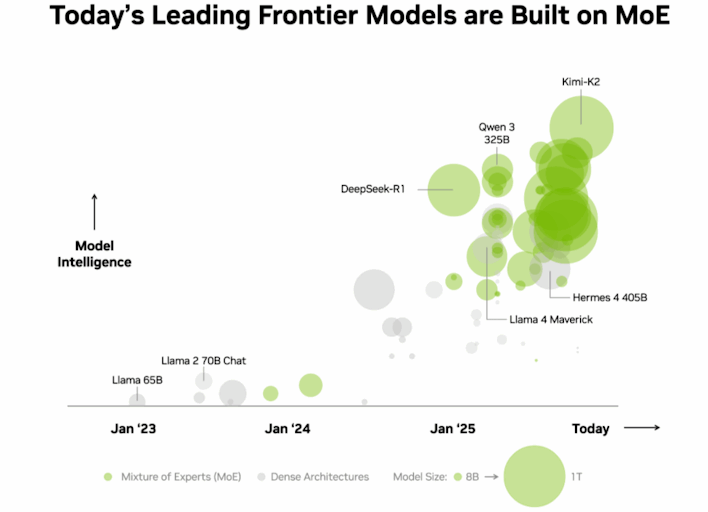

So, how does Blackwell achieve such a massive speed-up over Hopper? The answer isn't as simple as "it's because of this feature." It's a combination of advantages that the newer system has over the older machine, including improved specs (particularly higher memory capacity), reduced interconnect latency, and the fact that Blackwell was specifically designed to accommodate Mixture of Experts models, which have unique requirements versus "dense" AI models.

As we noted above, Mixture of Experts is a model sparsity strategy. A "sparse" AI model is one that only lights up some of its parameters when doing its thing; this is distinct from "dense" models that activate all of their parameters at once, which is unbelievably compute-heavy. Mixture of Experts is only one sparsity strategy of several, but it's the most popular because it happens to dovetail neatly with the way we're using multi-processing clusters to get around the fact that nobody can build a processor at this time with enough RAM to store an AI model with 1T+ parameters.

That means that on an NVIDIA compute server, each GPU or tray can host a tightly coordinated cluster of a model's parameters; this is one such "expert". Input goes to the expert that can handle it the best, and only that set of parameters has to be active, saving memory bandwidth and compute throughput. This requires careful coordination of the model layout and structure, though.

That's why NVIDIA is boasting about its "extreme codesign." By collaborating deeply with AI developers and doing plenty of its own AI software development, NVIDIA has managed a massive library of what it calls "full-stack optimizations," like the disaggregated serving that we reported on before, as well as advanced data formats like NVFP4 that attempt to minimize the downsides of using low-precision models.

Obviously, "NVIDIA is the best at AI" isn't exactly a surprising story, but it's interesting to see how the company has achieved real-world performance numbers that would have been unimaginable just a few years ago. AI is truly a unique workload and the engineering that has gone into these machines is mind-boggling. The company's competitors (primarily AMD) have some impressive hardware on the way, but for now, NVIDIA still sits at the top.

If you're curious for more technical details about how all this shakes out, NVIDIA has a technical deep dive on the topic.