NVIDIA's Road Ahead: Ion, Tegra and The Future of The Company

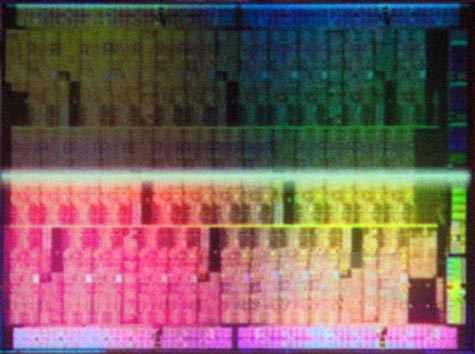

Actual Westmere on the left, NVIDIA's 9400G on the right. Images are scaled to the same size, but this is *not* a side-by-side photo. The larger of the two dies on the Westmere package is presumably the GPU.

Westmere's integrated GPU isn't the only Intel-branded headache NVIDIA will have to deal with in the next few years; Intel has given guidance that it expects to ship Larrabee silicon in the first half of 2010. It is, of course, possible that Larrabee will arrive with all the attractiveness of a week-dead walrus: overpriced, underperforming, hot, noisy, and unable to deliver on its lofty promises of real-time ray tracing (RTRT). Good companies, however, don't make future plans on the assumption that their competitors will screw up, which means NVIDIA has to plan for a future in which Larrabee is actively competing for the desktop/workstation market. Intel, after all, won't just throw down its toys and go home, even if first-generation Larrabee parts end up sold as software-development models rather than retail hardware. And by the time Microsoft, Sony, and Nintendo start taking bids on their next-generation consoles, we could be looking at a three-way race to supply their respective video processors.

The physical layout of Larrabee's die. General consensus is that the above is a 32-core part with 8 TMUs (Texture Mapping Units) and 4 ROP units. The small square attached to each core is the L2 cache; each core is equipped with 256KB of exclusive cache, for a total of 8MB of L2 on-die (32 × 256KB).

If Intel successfully establishes itself as a major player in the discrete GPU market, both NVIDIA and AMD will be faced with an unwelcome third opponent whose financial resources dwarf those of the two of them combined. As the dominant company in both desktop and workstation graphics, NVIDIA has more to lose from such a confrontation, and it's the only one of the three that does not possess an x86 license or an established CPU brand. This leaves the corporation at a distinct disadvantage compared to AMD; the latter can combine a CPU and GPU into a single package and/or design itself a graphics core based on the x86 architecture. With no simple way to address these issues, NVIDIA is exploring a separate market altogether, and that's where Tegra comes in.