SLI Under The Microscope: Vista vs. XP

Upon Windows Vista's release, it was expected that even the most ardent power users and enthusiasts would tread lightly at first when adopting it as their primary OS. Though users in this demographic are classically early adopters of new hardware technologies, an operating system change brings with it a myriad of potential pitfalls, from backward compatibility to stability and performance issues, all of which are understood to be "part of the deal" with any new OS. In practice, many folks migrate to a new OS platform gradually, at least initially dipping a toe into the pool with less critical usage models, such as running it on a backup machine or secondary notebook.

Gamers, on the other hand, are perhaps a breed apart, in that many are risk takers (thrill-seekers, maybe?), willing to take it on the chin with their gaming rigs in an effort to have the latest and greatest in both hardware and software technologies. With the advent of DirectX 10, which is supported only by Microsoft's new OS, a risky move to Vista is especially tempting for gamers. However, our early findings on Vista's gaming performance were mixed; in a few games that offered a DX10 rendering mode, performance just wasn't up to par with Windows XP, which has years of ring-out and performance optimization behind it. That much seemed logical, but the outlook for Vista was even bleaker if you were running a pair of NVIDIA graphics cards in SLI mode. On that type of system, performance in Vista, in either DX9 or DX10 mode, just wasn't where it needed to be.

In an upcoming article, HotHardware's Mike Lin will take a deep-dive look at the performance profiles of graphics cards from both NVIDIA and AMD-ATI, comparing Vista and Windows XP performance as well as the image quality and rendering enhancements associated with DX10. As a precursor to that article, we thought it might be interesting to see what the numbers look like for the extreme performance junkies in our midst who run dual-card SLI setups with high-end, DX10-capable GeForce 8800 graphics cards. Is SLI mode on Vista still two steps behind in performance? The following pages offer a quick-take assessment of NVIDIA's latest ForceWare driver release running in SLI mode on Windows XP and Windows Vista installations. NVIDIA seems to have closed the gap a bit, and we set out to explore this with some of the newest and most exciting game engines on the market.

Testbed Configuration:
Core 2 Extreme QX6850 (3GHz)
Asus Striker Extreme (nForce 680i SLI chipset)
GeForce 8800 GTX (2)
2048MB Corsair PC2-6400C3 (2 x 1GB)
Integrated on board
Western Digital "Raptor" 150GB, 10,000RPM, SATA
Windows XP Pro (DX9)

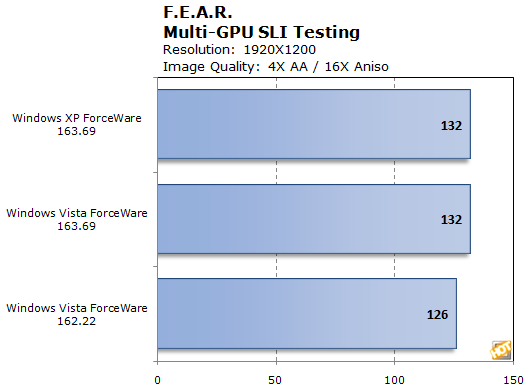

Of course, F.E.A.R. no longer represents a new game engine, and it's only a DX9 game, but we thought we would start with a quick look at legacy SLI gaming performance on Vista with these new drivers, since F.E.A.R. is one of the more taxing game engines from back in the day.

F.E.A.R.

One of the most highly anticipated titles of recent years was Monolith's paranormal thriller F.E.A.R. Using the full retail release of the game patched to v1.08, we put the graphics cards in this article through their paces to see how they fared with a popular title. All graphics settings within the game were set to their maximum values, except soft shadows, which were disabled (soft shadows and anti-aliasing do not currently work together). Benchmark runs were then completed at a resolution of 1920x1200 with 4x anti-aliasing and 16x anisotropic filtering enabled.

Here we see that NVIDIA has made continual improvements to SLI gaming in Vista with its driver updates, to the point that this now very mature title performs on par with Windows XP. The recently released ForceWare 163.69 driver shows a small percentage increase over 162.22.

Lost Planet

Lost Planet is a third-person shooter from CAPCOM that features both DX10 and DX9 rendering paths, and users can select either rendering mode on start-up. DX10 mode brings some subtle enhancements in lighting and pixel shader quality that are visible in screenshots, but they are hardly noticeable during gameplay. We ran the full-version game's benchmark test at 1920x1200 with 8x AA and 16x AF enabled in DX9 mode to get a fair comparison between Vista and XP.

We should first note that we observed an anomaly when running our benchmark in DX10 mode at this high AA setting. Since we weren't confident in that data point, we elected to drop it from our graph, and we will try to post updates in this section as we dig deeper into the issue. Regardless, comparing DX9 mode on Vista to DX9 on Windows XP, our SLI-enabled GeForce 8800 GTX rig shows a performance difference of a little more than 6% between the two OS platforms with NVIDIA's latest driver, along with a marginal performance improvement going from the older v162.22 drivers to the current 163.69 drivers.