Intel Lunar Lake CPU Deep Dive: Chipzilla’s Mobile Moonshot

Obviously, we can't let a discussion of the Lunar Lake processor architectures go without talking about AI performance and the NPU. The slide above proudly boasts that Lunar Lake can provide up to 120 TOPS for AI processing. The bulk of this grunt comes from the Xe2 GPU and its 64 XMX units, while the CPUs, lacking AMX as they do, provide only 5 TOPS. The rest comes from the integrated NPU.
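As a quick sanity check on that breakdown, here is a minimal sketch of the TOPS budget. It uses only figures quoted in this article (the 120 TOPS headline, the 5 TOPS CPU share, and the 48 TOPS NPU figure given further down). Treating the headline number as a simple sum of the three engines' peaks is our assumption, and the function names are purely illustrative.

```python
# Back-of-the-envelope TOPS budget. Every input is a figure quoted in this
# article; treating the headline number as a plain sum is an assumption.
PLATFORM_TOPS = 120  # "up to 120 TOPS" platform figure
CPU_TOPS = 5         # CPU contribution
NPU_TOPS = 48        # NPU 4 peak


def gpu_tops(platform: int, cpu: int, npu: int) -> int:
    """TOPS left over for the GPU once the CPU and NPU shares are removed."""
    return platform - cpu - npu


def shares(platform: int, parts: dict[str, int]) -> dict[str, float]:
    """Each engine's contribution as a percentage of the platform total."""
    return {name: round(100 * tops / platform, 1) for name, tops in parts.items()}


gpu = gpu_tops(PLATFORM_TOPS, CPU_TOPS, NPU_TOPS)
parts = {"GPU": gpu, "NPU": NPU_TOPS, "CPU": CPU_TOPS}
for name, pct in shares(PLATFORM_TOPS, parts).items():
    print(f"{name}: {parts[name]} TOPS ({pct}%)")
```

Under that assumption the GPU lands at 67 TOPS, a bit more than half of the total, which squares with it providing "the bulk" of the number.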

Lunar Lake Quadruples NPU Performance Over Meteor Lake

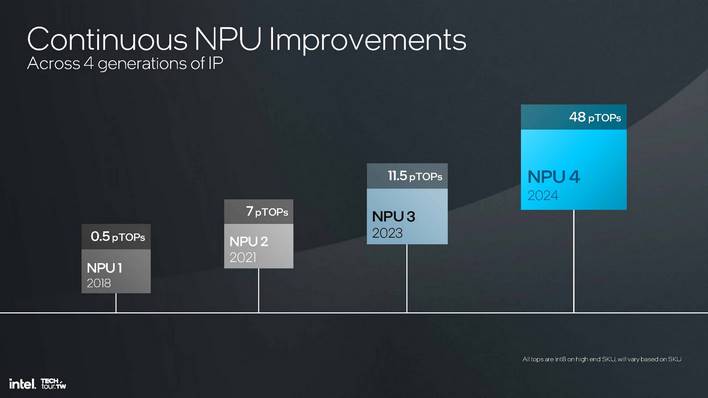

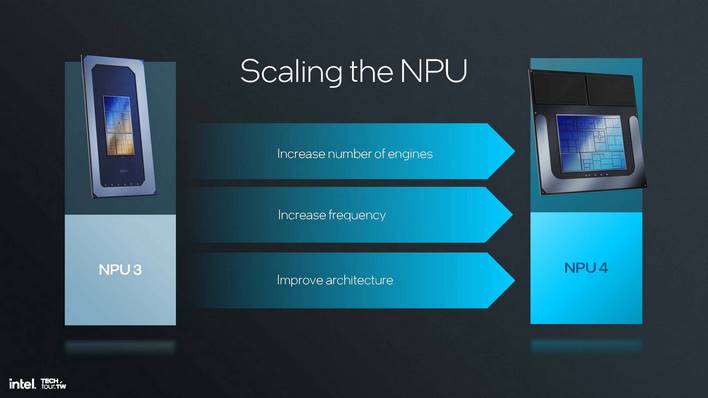

Intel describes the architecture in the Lunar Lake NPU as "NPU 4", which means that it's a 4th-generation NPU. The company is counting from its original add-on NPU silicon, based on the Movidius Myriad X in 2018. Its "Keem Bay" AI processor was the next generation, and then Meteor Lake's integrated NPU was the third-generation AI processor. Now, with Lunar Lake, we have the 4th generation, offering up to 48 TOPS.

NPU4 is certainly improved from NPU3 architecturally, and we'll look at that briefly, but the biggest difference between the Meteor Lake NPU and the Lunar Lake NPU is simply a matter of scale. Both the number of functional units inside the neural processor and its clock rate have increased, giving a greater-than-fourfold increase in background AI processing power.

That makes sense when you consider that the Lunar Lake NPU is literally three times the size of the Meteor Lake incarnation. It triples the number of Neural Compute Engines, which likewise triples the number of MAC (Multiply And Accumulate) units.

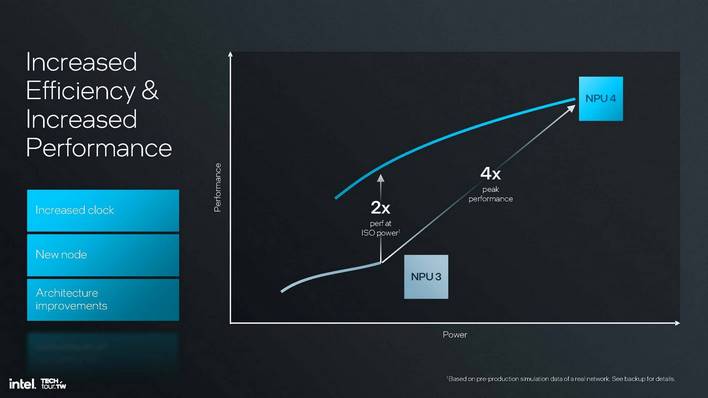

Thanks to the die shrink, in combination with an unspecified (but probably around 33%) increase in clock rate, Intel says that NPU4 can hit double the performance for the same power compared to NPU3, while peak performance is, as we noted before, around four times as fast. This puts it in the same range as Qualcomm's Snapdragon X Elite and AMD's Ryzen AI 300 processors, and that isn't a coincidence, as Microsoft mandated 40 TOPS as the minimum requirement for a Copilot+ PC.
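The "around four times" figure falls out of simple multiplication, and the sketch below shows the arithmetic. It assumes peak throughput scales linearly with both the number of MAC units and the clock rate, and it uses our own rough 33% clock estimate rather than any number from Intel. The implied Meteor Lake figure it prints is derived from those assumptions, not an official spec.

```python
# Rough scaling estimate for NPU4 versus NPU3.
# Assumption: peak throughput scales linearly with MAC count and clock rate.
UNIT_SCALE = 3.0    # Neural Compute Engines (and MAC units) tripled
CLOCK_SCALE = 1.33  # our ~33% clock estimate; Intel has not specified this
NPU4_TOPS = 48      # NPU4 peak quoted by Intel


def peak_speedup(unit_scale: float, clock_scale: float) -> float:
    """Combined peak speedup from wider hardware and a faster clock."""
    return unit_scale * clock_scale


speedup = peak_speedup(UNIT_SCALE, CLOCK_SCALE)
implied_npu3_tops = NPU4_TOPS / speedup  # derived estimate, not an Intel figure

print(f"Estimated peak speedup: {speedup:.2f}x")
print(f"Implied NPU3 peak: {implied_npu3_tops:.1f} TOPS")
```

Three times the hardware at roughly a third more clock gets you to about 4x, which in turn implies a Meteor Lake NPU peak of about 12 TOPS under these assumptions.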

The biggest changes in NPU4 appear to be those made to the SHAVE DSPs: Intel quadrupled the size of their vector register and quadrupled the bandwidth both into and out of the DSP. These DSPs perform critical vector operations for AI processing, so these changes are radical improvements to the NPU's capabilities.

Intel also says that it doubled the DMA bandwidth for the NPU, and notes this change in particular drastically improves the responsiveness of large language models (LLMs) when running on the NPU. There are new functions in the DMA engine too, including hardware embedding tokenization. Tokenization is the process of turning user prompts into smaller pieces that the AI model can actually process, and doing it in hardware should reduce response latency of models running on Lunar Lake's NPU.

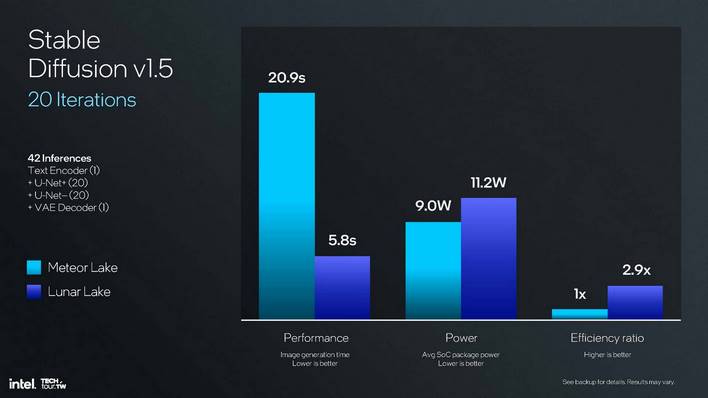

Intel performed a Stable Diffusion image generation demo on stage for us, and gave us some numbers from the process. While the Lunar Lake NPU does use slightly more power to generate an image across 20 iterations, it also completes the task nearly four times faster. The result is an overall efficiency (in terms of task energy, or the battery charge it took to complete the task) that is 2.9x better than the Meteor Lake part. In other words, the Lunar Lake machine used slightly more than one-third of the battery charge to generate the image, and it took less than a third of the time to do it.
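To see how those claims fit together, recall that task energy is just average power multiplied by run time. The sketch below takes the 2.9x efficiency figure from the demo and works out the fraction of energy used, plus the power increase implied by a few candidate speedups. The speedup values in the loop are our assumptions for illustration, since this piece only says "nearly four times" faster, and the function names are hypothetical.

```python
# Task energy = average power * run time, so:
#   efficiency_gain = old_energy / new_energy = speedup / power_ratio
EFFICIENCY_GAIN = 2.9  # task-energy improvement quoted for the demo


def energy_fraction(efficiency_gain: float) -> float:
    """Fraction of the old part's task energy that the new part needs."""
    return 1.0 / efficiency_gain


def implied_power_ratio(speedup: float, efficiency_gain: float) -> float:
    """New average power divided by old average power."""
    return speedup / efficiency_gain


print(f"Energy per image: {energy_fraction(EFFICIENCY_GAIN):.1%} of Meteor Lake's")

# Candidate speedups are assumptions; the exact demo timings are not given here.
for speedup in (3.0, 3.5, 4.0):
    ratio = implied_power_ratio(speedup, EFFICIENCY_GAIN)
    print(f"{speedup:.1f}x faster -> {ratio:.2f}x the power draw")
```

Any speedup above 2.9x implies the new NPU draws more power than the old one while running, which is consistent with the "slightly more power" observation.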

Thread Director and Power Management Updates

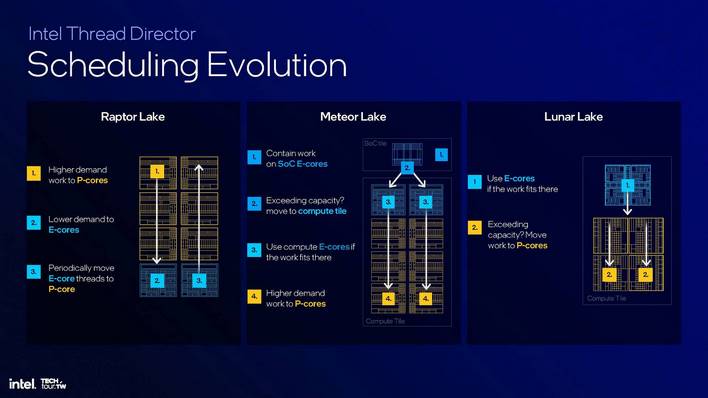

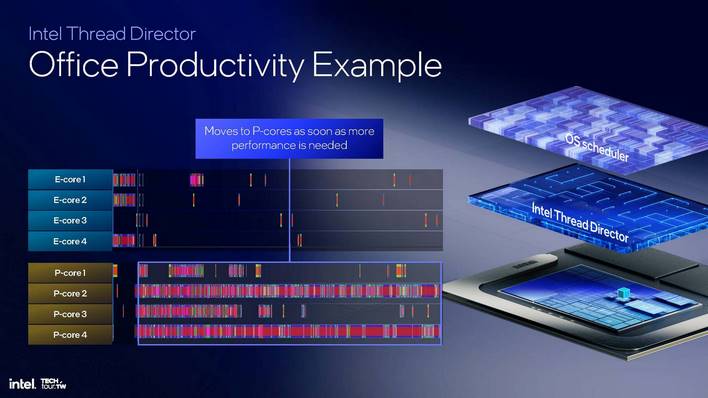

We've already mentioned a few times in this piece that Intel has completely changed the way it does thread scheduling on Lunar Lake, but it's still handled by the Thread Director, of course; it's just a revamped version of that functionality. In a sense, it's just a simplified version of the way it worked on Meteor Lake, which was itself essentially backwards from how it worked on Raptor Lake. Check it out:

The goal is to keep everything on the E-cores when possible. This is because the E-cores run lower clocks and lower voltages, meaning they use less power. The quick Lion Cove cores are relatively power-thirsty compared to the simpler Skymont CPUs, to say nothing of that big 12MB L3 cache. However, if the E-cores are being overloaded, tasks will get moved to the P-cores quickly. It looks like this, in practice:

Here, the office work started out on the E-cores, but as the workload became more intensive, it got moved over to the CPU's P-cores to improve performance. Actually, in the graph, you can see that even the P-cores get loaded pretty heavily, but that's what they're there for.
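For readers who think better in code, the E-cores-first idea can be sketched as a toy placement routine. To be clear, this is not Intel's algorithm or anything resembling the real Thread Director interface: the `Task` type, the load units, and the 80% overload threshold are all invented for illustration. It only captures the basic policy of filling the E-core cluster first and spilling to the P-cores when it would be overloaded.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    load: float  # demand in "cores' worth" of work; a made-up unit


def place(tasks: list[Task], e_cores: int = 4,
          overload_threshold: float = 0.8) -> dict[str, str]:
    """Toy E-core-first placement (hypothetical, not Intel's algorithm).

    Tasks go to the E-core cluster until adding one would push the cluster
    past the overload threshold; any such task is sent to the P-cores.
    """
    budget = e_cores * overload_threshold  # usable E-cluster capacity
    used = 0.0
    placement: dict[str, str] = {}
    for task in tasks:
        if used + task.load <= budget:
            placement[task.name] = "E"
            used += task.load
        else:
            placement[task.name] = "P"
    return placement


workload = [
    Task("mail", 0.3),
    Task("browser", 0.6),
    Task("spreadsheet recalc", 2.5),  # the heavy burst
    Task("video call", 0.9),
]
for name, cluster in place(workload).items():
    print(f"{name}: {cluster}-cores")
```

In this toy run the light tasks stay on the E-cores and only the heavy burst is pushed to the P-cores, which mirrors the behavior in the graph in spirit if not in detail.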

We're not going to go into Intel's Thread Director refinements in explicit detail, but fundamentally it comes down to these four things listed above: better feedback on process needs, OS-managed "zones" to contain light tasks on the E-cores (and keep them from slipping to the P-cores after a moment's heavy load), improved integration with operating system power management, and taking hints from vendor and user settings to alter the algorithm as needed.

This slide outlines the final outcome. With Lunar Lake, Thread Director communicates closely with Intel's Dynamic Tuning Technology to carefully schedule threads where they make the most sense according to the power management paradigm in effect. It will probably be possible to tank your Lunar Lake's battery life in exchange for improved responsiveness, if you really need the extra speed.

Intel Lunar Lake: Initial Conclusions

Unfortunately, Intel wasn't able to provide us with a hard shipping date for Lunar Lake processors or the PCs that will be based on them. Instead, Intel offered a "Q3 2024" release window. We're just entering that period now, and neither Qualcomm nor AMD has shipped its competing products yet, so Intel may just be able to stave off its competitors for a little while longer and bring the fight with Lunar Lake. We'll see soon enough.

Of course, we won't truly know how things will shake out until we have hardware in hand, but if the reality is even close to what Intel is claiming here, it seems like the blue team is in a good position for the latter half of 2024. Lunar Lake is looking strong.