Intel Lunar Lake CPU Deep Dive: Chipzilla’s Mobile Moonshot

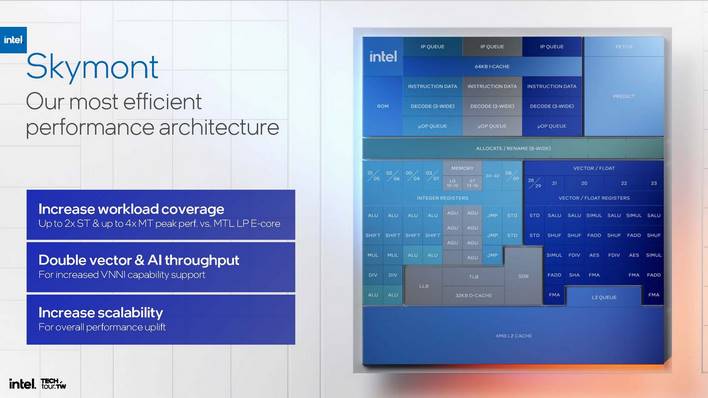

Just like with Lion Cove, the engineers working on Skymont had a few goals in mind. The most prominent of them was to increase workload coverage, meaning the number of tasks the E-cores can handle, which largely boils down to improving performance and efficiency. With an eye toward future products, Intel's engineers also worked to double Skymont's AI throughput and, more generally, to improve its scalability.

Enhanced Skymont E-Cores Improve Performance And Efficiency

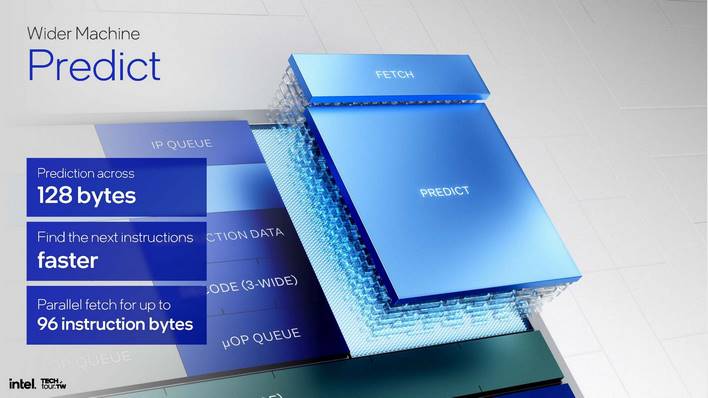

How do you increase performance on a microprocessor? There are a lot of knobs you can tweak, but in general, the most straightforward way from a design perspective is to make it wider. Skymont is MUCH wider than the Crestmont E-cores in Meteor Lake. Starting right up front, it has a more capable predictor that can search across 128 bytes for code to prefetch, and its parallel fetch capability has increased by 50%.

That's because Intel has added a third decode cluster to the front end, giving Skymont three three-wide decode clusters, or up to nine instructions decoded per clock in total. That makes Skymont's front end even wider than Lion Cove's. Intel has also implemented a new feature called "Nanocode" that can generate micro-ops for "common and specific microcode flows." This lets the decoders keep working in parallel, adding bandwidth and making decoder performance more consistent. Micro-op queue capacity has likewise grown by 50%, to 96 entries.
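To make the clustered-decode idea concrete, here is a deliberately idealized model. It assumes the instruction stream has already been split into blocks at points chosen by the branch predictor, that blocks are handed to clusters in strict round-robin order, and that each cluster decodes up to three instructions per clock. The function name `decode_cycles` and the block sizes are hypothetical, and the model ignores the micro-op queue, Nanocode, and the reassembly of blocks back into program order.

```python
from math import ceil


def decode_cycles(block_lengths, clusters, width):
    """Clocks needed to decode every block in an idealized clustered decoder.

    Blocks are assigned to clusters round-robin; each cluster decodes its
    own blocks one after another at up to `width` instructions per clock,
    and all clusters work in parallel.
    """
    busy = [0] * clusters  # clocks each cluster spends decoding
    for index, length in enumerate(block_lengths):
        busy[index % clusters] += ceil(length / width)
    return max(busy, default=0)


blocks = [6, 6, 6]  # hypothetical: three 6-instruction blocks
print("two 3-wide clusters:  ", decode_cycles(blocks, clusters=2, width=3))
print("three 3-wide clusters:", decode_cycles(blocks, clusters=3, width=3))
```

In this toy case the third cluster lets all three blocks decode at once, so the model finishes in two clocks instead of four, and peak decode width rises from six to nine instructions per clock.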

Naturally, the back end has grown as well. Allocate/rename is now 25% wider than in Crestmont, and the retire block has doubled in width, from eight-wide to 16-wide. Intel says this is less about performance than about freeing up resources: the faster the core can retire instructions, the sooner it can release the resources they occupy, which saves power. Intel has also implemented dependency breaking in Skymont, which lets the core "short-circuit" a dependency chain when the result of an operation is already known, improving IPC.
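Intel doesn't spell out exactly which cases Skymont's dependency breaking covers, so the sketch below uses a classic example of a result that is known in advance: the x86 zero idiom `xor reg, reg`, which always produces zero. It is a toy rename-stage model, not Intel's implementation; the tuple instruction format and the `dependencies` function are hypothetical.

```python
def dependencies(program, break_deps=True):
    """Return, per instruction, the indices of earlier instructions it waits on.

    `program` is a list of (op, dst, src_a, src_b) tuples of register names.
    With break_deps=True, `xor dst, r, r` is recognized as always producing
    zero, so neither it nor its consumers wait on older writers.
    """
    last_writer = {}    # register -> index of the newest instruction writing it
    known_zero = set()  # registers whose value is already known at rename time
    deps = []
    for index, (op, dst, src_a, src_b) in enumerate(program):
        if break_deps and op == "xor" and src_a == src_b:
            deps.append(set())          # result is 0 whatever the inputs were
            known_zero.add(dst)
            last_writer.pop(dst, None)  # consumers need no producer for dst
            continue
        sources = {src_a, src_b} - known_zero  # known values need no producer
        deps.append({last_writer[r] for r in sources if r in last_writer})
        last_writer[dst] = index
        known_zero.discard(dst)
    return deps


program = [
    ("mul", "r1", "r2", "r3"),  # long-latency producer of r1
    ("xor", "r1", "r1", "r1"),  # zero idiom: r1 = 0
    ("add", "r4", "r1", "r5"),  # consumes the zeroed r1
]
print(dependencies(program, break_deps=False))  # add -> xor -> mul chain
print(dependencies(program, break_deps=True))   # no instruction waits
```

Without dependency breaking, the `add` waits on the `xor`, which in turn waits on the slow `mul`; with it, all three instructions are free to execute independently.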

Altogether, Skymont features a massive 26 dispatch ports. Intel explained that execution hardware can either be shared or dedicated: the former saves area, while the latter saves power. Intel clearly favored dedicated hardware for power reasons, which is why Skymont has eight more dispatch ports than Lion Cove.

This "make it wider" methodology extends in particular to the E-cores' floating-point units. Intel doubled the number of 128-bit vector units, which straight up doubles the core's peak FLOPS or TOPS compared to Crestmont. Intel says that despite the greatly increased throughput, it has also reduced latency: Skymont can now complete FMA operations in just 4 cycles.
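A back-of-the-envelope sketch of what doubling the vector units means for peak FP32 throughput, and of how many independent FMAs must be in flight to keep the units busy given the 4-cycle latency (Little's law: operations in flight = throughput x latency). It assumes every 128-bit unit can start one FP32 FMA per clock and takes two units as the Crestmont baseline; Intel only says the count doubled, so both the per-unit capability and the absolute unit counts are assumptions.

```python
LANE_BITS = 32  # one FP32 value per 32-bit lane


def peak_fp32_flops_per_clock(vector_units, vector_bits=128):
    """Peak FP32 FLOPs per clock, assuming each unit issues one FMA per clock."""
    lanes = vector_bits // LANE_BITS
    return vector_units * lanes * 2  # an FMA counts as a multiply plus an add


def fmas_in_flight(vector_units, fma_latency):
    """Independent vector FMAs needed to saturate the units (Little's law)."""
    return vector_units * fma_latency


baseline_units = 2  # assumption: Crestmont-like baseline
skymont_units = 4   # doubled, per Intel
print(peak_fp32_flops_per_clock(baseline_units))     # 16
print(peak_fp32_flops_per_clock(skymont_units))      # 32
print(fmas_in_flight(skymont_units, fma_latency=4))  # 16
```

Under these assumptions, a loop with a single accumulator chain would leave most of the doubled hardware idle; code needs many independent accumulators to approach the peak.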

Another major addition to Skymont's FP units is native floating-point rounding hardware, which eliminates the need to implement denormal support in microcode. Lastly, where Crestmont doubled the number of VNNI SIMUL units over Gracemont, Skymont doubles it again, to four. This will significantly speed up AI computations.
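Two short sketches illustrate the terms in this paragraph. The first classifies an IEEE 754 single-precision value by its bit pattern; denormals (subnormals) are the nonzero values whose exponent field is all zeros, the case that used to fall back to microcode. The second emulates the arithmetic of one 128-bit VPDPBUSD, a VNNI dot-product instruction that multiplies unsigned bytes by signed bytes and adds each group of four products into a 32-bit accumulator. Which VNNI instructions Skymont's units execute, and at what rate, is not covered here, and the function names are hypothetical.

```python
import struct


def float32_class(x):
    """Classify x after rounding it to IEEE 754 binary32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    exponent = (bits >> 23) & 0xFF  # 8-bit biased exponent field
    mantissa = bits & 0x7FFFFF      # 23-bit fraction field
    if exponent == 0:
        return "zero" if mantissa == 0 else "subnormal"
    if exponent == 0xFF:
        return "inf" if mantissa == 0 else "nan"
    return "normal"


def vpdpbusd_128(acc, a_u8, b_s8):
    """Emulate VPDPBUSD on one 128-bit register (four int32 lanes).

    acc: four signed 32-bit accumulators; a_u8: 16 unsigned bytes;
    b_s8: 16 signed bytes. This non-saturating form wraps to 32 bits.
    """
    if len(acc) != 4 or len(a_u8) != 16 or len(b_s8) != 16:
        raise ValueError("expected 4 accumulators and 16 bytes per source")
    result = []
    for lane in range(4):
        total = acc[lane]
        for k in range(4):  # four byte products feed each 32-bit lane
            total += a_u8[4 * lane + k] * b_s8[4 * lane + k]
        result.append((total + 2**31) % 2**32 - 2**31)  # wrap to int32
    return result


print(float32_class(1.0))        # normal
print(float32_class(2.0**-130))  # subnormal: below the 2**-126 normal minimum
print(vpdpbusd_128([0, 0, 0, 0], [1] * 16, [-2] * 16))  # [-8, -8, -8, -8]
```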

Where Meteor Lake's LP-E-cores had 2 MB of L2 cache for two cores, Lunar Lake implements 4 MB of L2 for its four E-cores. Intel says that it "went out of its way" to improve core-count scaling by doubling L2 cache bandwidth, and it also doubled eviction bandwidth from 16 bytes/clock to 32 bytes/clock. Furthermore, where Crestmont and earlier E-cores had to bounce data off the fabric to move it from core to core within a cluster, Skymont now supports direct L1-to-L1 transfers, which improves latency for cooperative workloads.
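Doubling eviction bandwidth halves the time needed to write data out along that path. A quick sketch, assuming 64-byte cache lines (standard on x86) and ignoring any other traffic competing for the same path; the helper name is hypothetical.

```python
def eviction_clocks(num_bytes, bytes_per_clock):
    """Clocks to move num_bytes at a fixed bytes-per-clock rate (rounded up)."""
    return -(-num_bytes // bytes_per_clock)  # ceiling division on integers


LINE = 64    # bytes per cache line (assumed)
PAGE = 4096  # a 4 KiB page, for a larger illustrative transfer

for rate in (16, 32):  # old vs. new eviction bandwidth, bytes per clock
    print(f"{rate} B/clk: {eviction_clocks(LINE, rate)} clocks per line, "
          f"{eviction_clocks(PAGE, rate)} clocks per 4 KiB page")
```

At 16 bytes/clock a line takes four clocks to evict; at 32 bytes/clock it takes two.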

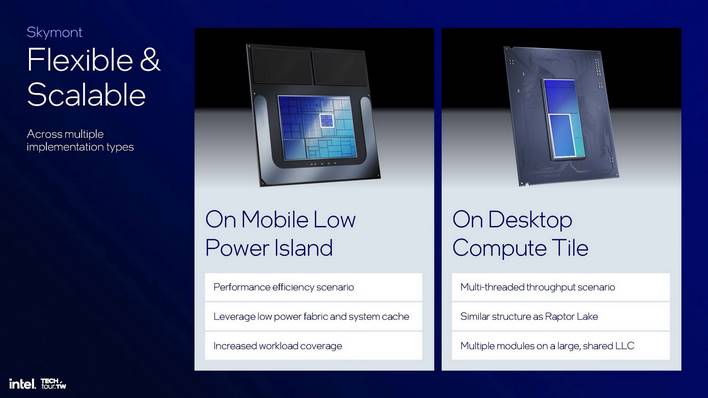

As we noted before, the goal with these changes was to run as many workloads as possible on the CPU's E-cores. This allows a Lunar Lake chip to keep its power-thirsty, high-clocked Lion Cove cores powered down, saving precious battery life. However, Skymont is also coming to desktop and server CPUs, so Intel provided comparisons for both use cases.

Skymont Offers Massive Gen-Over-Gen Performance Improvements

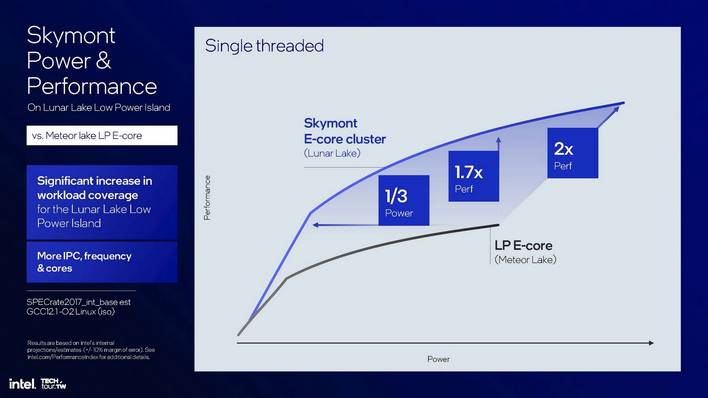

Here, we see a single Skymont core on Lunar Lake compared against a single Crestmont core on a Meteor Lake SoC tile. The test uses the exact same compiled binary on an identical Linux setup at a fixed frequency, so it's a pure measure of IPC with no architecture-specific optimizations. As you can see, not only are there zero regressions, but Skymont offers an absolutely massive gain in both integer and especially floating-point performance. Intel said the giant gain in FP speed comes primarily from the doubled-up vector hardware and the native hardware denormal rounding support we noted before.

At least according to Intel, Skymont on Lunar Lake can match the performance of a Meteor Lake LP-E-core at one-third the power, or deliver around 70% more performance at the same power. Notably, thanks to the power and scheduling changes in Lunar Lake, Skymont can also clock much higher on Lunar Lake than Crestmont can on Meteor Lake. As a result, peak per-core performance is actually higher, at around double.
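Intel's two efficiency claims describe different points on the power/performance curve, and it helps to convert both into performance per watt relative to Meteor Lake's LP-E-core. This is plain arithmetic on Intel's stated ratios (one-third the power at equal performance; about 1.7x performance at equal power); the function name is hypothetical and no measured data is implied.

```python
from fractions import Fraction


def perf_per_watt_gain(perf_ratio, power_ratio):
    """Relative performance per watt from new/old performance and power ratios."""
    return perf_ratio / power_ratio


iso_performance = perf_per_watt_gain(Fraction(1), Fraction(1, 3))  # same perf, 1/3 power
iso_power = perf_per_watt_gain(Fraction(17, 10), Fraction(1))      # ~1.7x perf, same power
print(iso_performance, float(iso_power))  # 3 1.7
```

The efficiency advantage therefore depends on where on the curve it is measured: roughly 3x at the iso-performance point versus about 1.7x at the iso-power point.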

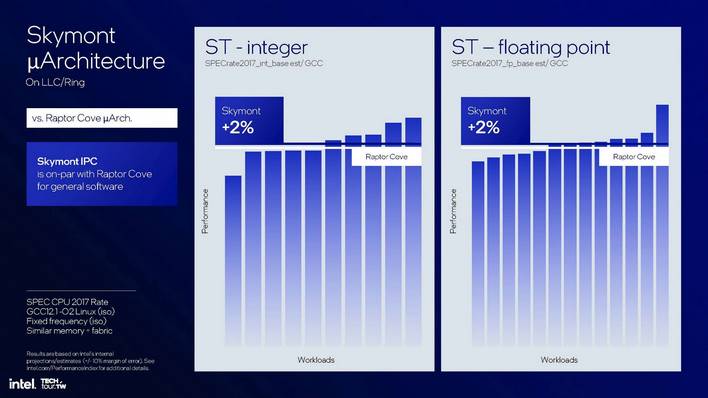

Meanwhile, when Skymont is implemented in desktop or server processors, where it can share the ring bus and last-level cache with the rest of the CPU, it can apparently achieve IPC roughly on par with Raptor Cove on average. This is an incredible result. Skymont is still a very efficient E-core design, yet clock for clock, it can match the Raptor Cove P-core in many workloads. Intel notes that there are trade-offs, and some workloads still achieve higher IPC on Raptor Cove; they're very different CPUs, after all. Still, the fundamental takeaway is that Skymont can offer performance comparable to Raptor Cove in day-to-day tasks, which is pretty amazing.
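IPC parity does not mean equal performance, because performance also scales with clock speed: performance is proportional to IPC x frequency. The sketch below compares two cores with identical IPC; the clock speeds are hypothetical placeholders, not specifications of any Skymont or Raptor Cove part.

```python
def relative_performance(ipc_a, ghz_a, ipc_b, ghz_b):
    """Performance of core A relative to core B, using perf ~ IPC x clock."""
    return (ipc_a * ghz_a) / (ipc_b * ghz_b)


# Hypothetical: equal IPC, but core B clocks 25% higher than core A.
print(relative_performance(ipc_a=1.0, ghz_a=4.0, ipc_b=1.0, ghz_b=5.0))  # 0.8
```

This is why the comparison is framed "clock for clock": matching IPC removes one factor, leaving frequency to decide the absolute result.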

So we've talked about both CPU cores, and now it's time to move on to the biggest portion of the Lunar Lake die: the Xe2 integrated GPU. Just like with the Lion Cove P-cores and the Skymont E-cores, the Lunar Lake integrated GPU is massively revised from Meteor Lake, so head over to the next page to see how.