About a year ago, an AI startup called Recogni announced a patented number system for AI math named Pareto. Pareto is a logarithmic system, meaning that it stores numbers as their logarithms rather than as their explicit values. This can be highly advantageous for AI, because it drastically simplifies the math you need to do to multiply two values; instead of A×B, it becomes log(A)+log(B). For computers, addition is much simpler than multiplication, and requires a lot less silicon (and thus less power) to perform.
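To make the multiply-becomes-add trick concrete, here is a minimal Python sketch. It assumes base-2 logarithms and positive values only, and the function names are ours for illustration; it says nothing about Pareto's actual bit layout, which isn't described here.

```python
import math

def to_log(x: float) -> float:
    """Encode a positive number as its base-2 logarithm (illustrative format)."""
    return math.log2(x)

def from_log(lx: float) -> float:
    """Decode a base-2 logarithm back to an ordinary number."""
    return 2.0 ** lx

def lns_multiply(la: float, lb: float) -> float:
    """Multiply two log-encoded values: in the log domain this is one addition."""
    return la + lb

a, b = 6.0, 7.0
product = from_log(lns_multiply(to_log(a), to_log(b)))
print(product)  # very close to 42.0, up to floating-point rounding
```

A real logarithmic format also has to track the sign separately and represent zero somehow, since neither has a real-valued logarithm; this sketch ignores both.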

We didn't cover the original Pareto announcement because it was just that, an initial technology announcement. But now there's much bigger news: Recogni is rebranding itself as "Tensordyne", and Tensordyne is coming for the big boys like NVIDIA with an upcoming processor that promises to revolutionize AI computing—at least, according to Tensordyne.

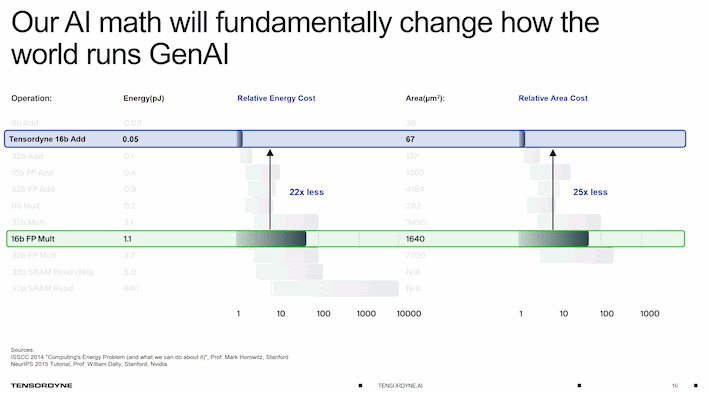

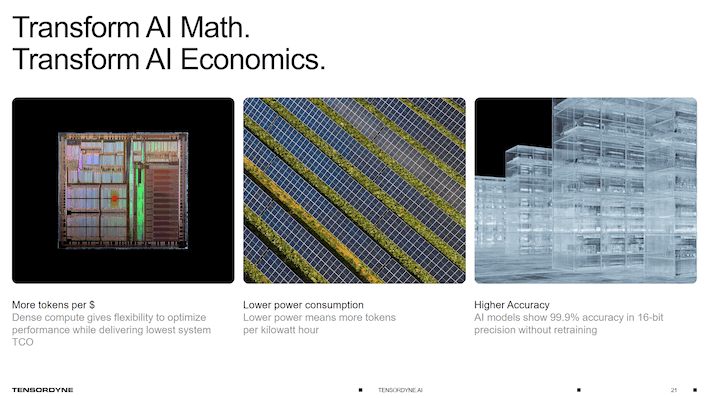

By using a Logarithmic Number System (LNS), you can slash both the energy cost and silicon area cost of AI inference computations. How much? Tensordyne says that its upcoming chips can beat an NVIDIA GB200 NVL72 rack in power efficiency by a factor of 8. In other words, it would draw one-eighth the power to generate the same number of AI tokens, specifically with the Llama3.3-70B open-source LLM.

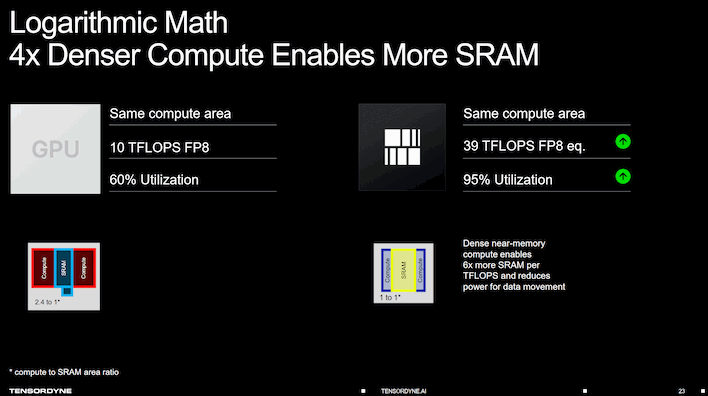

Tensordyne's method uses a logarithmic number representation instead of floating-point or integer numbers. This has a big advantage: it allows them to perform AI inference using primarily additions instead of multiplication operations. Because AI compute is primarily composed of matrix multiplication, this radically simplifies the workload, and allows the functional units of Tensordyne's chip to be significantly smaller. In turn, more of the die can be SRAM cache (6x as much as the competition), which improves both performance and core utilization, while also reducing power consumption.

Of course, there's a 'gotcha' here. Matrix math isn't just multiplication. It's actually built primarily from "MAC", or "Multiply-Accumulate", operations; on current GPUs and CPUs, this usually manifests as an "FMA", or Fused Multiply-Add. In other words, it's both a multiplication and an addition.

While logarithmic representation makes certain kinds of math very easy, it makes other kinds of math extremely difficult. What kinds? Well, addition, as you've probably guessed. Adding the logarithms gives you log(A×B), not log(A + B); for an accurate sum, you have to work out a correction term, which means evaluating a nonlinear function. If computers based on LNS have to do additions very often, then they lose their efficiency benefits, and usually then some. This is why LNS-based chips like the Gravity Pipe are usually fixed-function accelerators and not general-purpose processors.
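The addition problem is easier to see in code. The sketch below uses the standard identity log2(A + B) = max(a, b) + log2(1 + 2^(-|a - b|)), where a and b are the stored logarithms. It assumes base-2 and positive values, the function names are ours, and it evaluates the correction term exactly with Python's math library; real LNS hardware has to approximate that term, which is the expensive part.

```python
import math

def lns_add(la: float, lb: float) -> float:
    """Add two log-encoded positive values, returning the log of their sum."""
    hi, lo = max(la, lb), min(la, lb)
    # Correction term: a nonlinear function of the difference between the logs.
    correction = math.log2(1.0 + 2.0 ** (lo - hi))
    return hi + correction

def lns_mac(acc: float, la: float, lb: float) -> float:
    """Multiply-accumulate in the log domain: acc + a*b, all log-encoded."""
    # The multiply is a cheap add; the accumulate needs the costly correction.
    return lns_add(acc, la + lb)

total = lns_add(math.log2(3.0), math.log2(5.0))
print(2.0 ** total)  # very close to 8.0, up to floating-point rounding
```

Note how the multiply inside `lns_mac` costs a single addition, while the accumulate pulls in a logarithm and an exponential. That asymmetry is the whole conundrum.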

The idea of using LNS math isn't novel. Logarithms are basic algebra; they were first conceptualized way back in the early 1600s. The idea of using logarithms in computer chips isn't new either. People were experimenting with it as far back as the 1970s, and there have been chips produced already, like the Gravity Pipe processors used in some GRAPE supercomputers. NVIDIA has experimented with the idea too, as recently as 2023. LNS-based hardware has won many benchmark prizes and efficiency awards in the past, but it has never become common because there was no good way to solve the addition conundrum.

Tensordyne isn't ignorant of this. In fact, our own Marco Chiappetta asked about this issue in a Q&A section, and the company's VP of AI, Gilles Backhus, explained that "swapping FP multiplication for log addition" is really not the secret sauce of its new products. In fact, the magic part is their approximation scheme for log-to-linear conversions and back. In other words, Tensordyne has apparently found a numerically cheap, low-error approximation that doesn't blow away the gains from using LNS. That's the linchpin of the whole pitch, and in the previous Pareto announcement, the company said that its method does not use lookup tables or Taylor series. Those are two of the most common approximations, leaving us very curious what method it IS using.
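For a sense of what a cheap log-to-linear approximation can look like, here is a sketch of Mitchell's approximation, a classic textbook technique that treats log2(1 + f) as simply f for f between 0 and 1. To be clear, this is not Tensordyne's method, which the company has not disclosed; it only illustrates the kind of trade-off between cost and error that is involved. The function names are ours.

```python
import math

def mitchell_log2(x: float) -> float:
    """Approximate log2(x) for x > 0 using log2(1 + f) ~= f."""
    mantissa, exponent = math.frexp(x)  # x = mantissa * 2**exponent, mantissa in [0.5, 1)
    k = exponent - 1                    # integer part of the logarithm
    f = 2.0 * mantissa - 1.0            # fractional part, in [0, 1)
    return k + f

def mitchell_exp2(y: float) -> float:
    """Approximate 2**y with the inverse mapping, 2**f ~= 1 + f."""
    k = math.floor(y)
    f = y - k
    return math.ldexp(1.0 + f, k)       # (1 + f) * 2**k

# Worst-case absolute error in the log domain over one octave, sampled coarsely.
worst = max(abs(mitchell_log2(1.0 + i / 1000.0) - math.log2(1.0 + i / 1000.0))
            for i in range(1000))
print(f"worst sampled error: {worst:.4f}")  # about 0.086
```

In hardware, both directions reduce to reinterpreting exponent and mantissa bits, with no multiplier and no table. The catch is the error of up to roughly 0.086 in the log domain, which is far too coarse for the sub-1% figures Tensordyne is quoting, so whatever the company is doing must be considerably more accurate.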

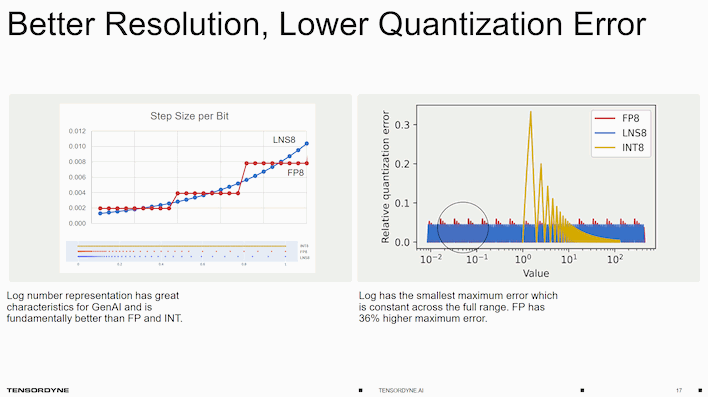

Because performant log-to-linear conversion is necessarily approximation-based, does that mean the accuracy of Tensordyne's method suffers? Not at all, according to the company's numbers. In fact, the error rate on most models is under 1%, and the company also says that LNS offers the smallest "maximum error", meaning that its maximum potential quantization error for any given value is lower than that of INT and FP formats.

The upshot of the low error rate is that Tensordyne's method produces functionally identical results for text-to-text and text-to-image models, and in some cases, it can actually produce better results for text-to-video. During a briefing, Tensordyne showed two AI video samples, one of which was generated using FP16, and the other using LNS. The LNS video was both more stable and less prone to artifacts, which the company says is a common result thanks to LNS being better able to represent the extremely wide dynamic range of generative video AI.

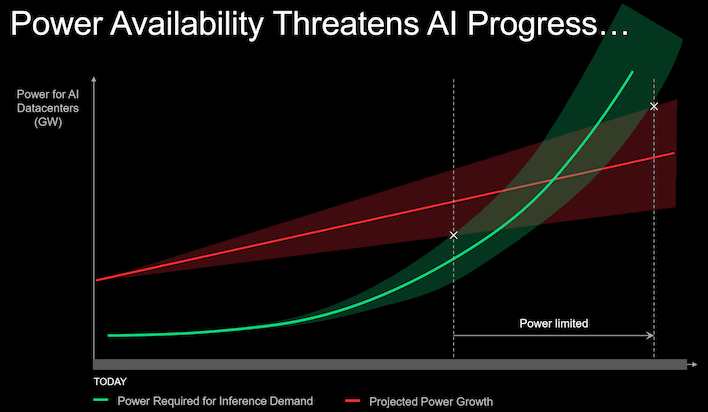

The biggest difference is in power efficiency, though. As Tensordyne points out, the demand for AI inference seems insatiable, yet the power grids built in the middle of the previous century were simply not designed to scale to the amount of power that AI computation will soon require. The company says that the relative energy cost of its 16-bit add operations is 1/22, or less than 5% of the energy required for an FP16 multiply. That doesn't translate to 20 times higher efficiency at the system level, but as we noted earlier, the company does claim that it can beat NVIDIA by a factor of eight.
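Why doesn't a 22x cheaper operation make the whole system 22x more efficient? An Amdahl's-law-style estimate gives the intuition: only the share of energy that actually goes to multiplies gets cheaper. The fractions in this sketch are hypothetical values we picked for illustration, not Tensordyne figures, and the model ignores everything else the company changes, such as the larger SRAM.

```python
def overall_gain(fraction_affected: float, op_gain: float) -> float:
    """System-level efficiency gain when only part of the energy budget improves."""
    unaffected = 1.0 - fraction_affected
    return 1.0 / (unaffected + fraction_affected / op_gain)

# Hypothetical shares of total energy spent on multiplies (illustrative only).
for fraction in (0.5, 0.8, 0.9):
    gain = overall_gain(fraction, 22.0)
    print(f"{fraction:.0%} of energy in multiplies: {gain:.2f}x overall")
```

Under this simple model, even if multiplies made up 90% of the energy budget, the overall gain would land well below 22x, because memory access, data movement, and the remaining arithmetic still cost what they did before.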

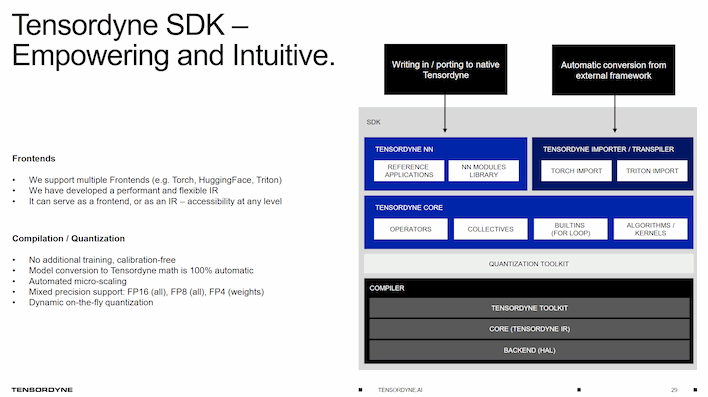

When you hear about a revolutionary product like this, one of the key concerns surrounding it is software support. Tensordyne claims it's got that on lockdown already. The company has an SDK that supports popular AI frontends such as Torch and Triton, and can automatically convert models to the Tensordyne LNS format. It also exposes the intermediate representation to Python, so if developers choose, they can code directly to it, similar to how developers can do that with CUDA. The company says that its SDK supports mixed precision and dynamic on-the-fly quantization, and that the conversion process requires no additional training or calibration.

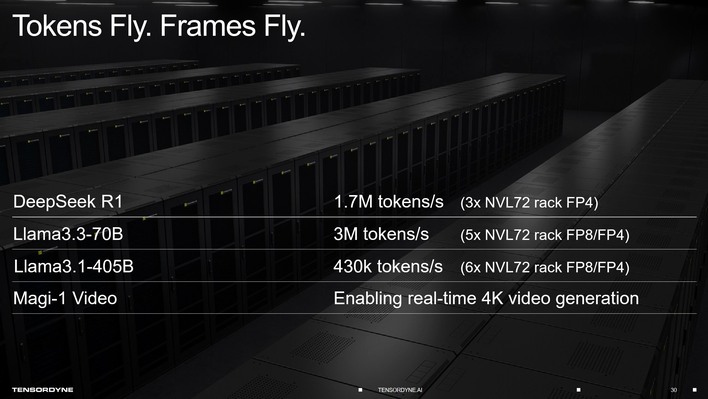

Tensordyne makes some big claims in the arena of performance, too. According to the company's release, a Tensordyne server (potentially with 144 processors, although the company doesn't say) can output 1.7 million tokens per second with DeepSeek R1, 3 million tokens per second with Llama3.3-70B, or 430K tokens per second with Llama3.1-405B. Those values are supposedly triple, quintuple, and fully six times what an NVL72 rack can do. The company also claims that it can run the Magi-1 Video model in real time at 4K resolution. That would be quite shocking if true, as video generation at much lower resolutions usually takes hundreds of seconds per second of video.

So, does Tensordyne actually have chips to show off? Not yet. All of the numbers the company has presented about performance and efficiency are based on simulations. However, according to Tensordyne CEO Marc Bolitho, tape-out of the company's first chips is "imminent", and it expects to launch its first hardware product around the middle of next year, ideally. What is that product going to be? See for yourself:

An AI inference processor, built on TSMC's N3 process, including 256MB of SRAM, 144GB of HBM3e memory, and a proprietary interconnect with 460GB/second of unidirectional bandwidth as well as the ability to connect up to 144 GPUs for tensor parallelism. Specifications like clock rates and core counts aren't available right now; Tensordyne is keeping details like that close to its chest. Still, given the performance numbers the company has quoted, its chips should be super speedy, provided they meet their design targets.

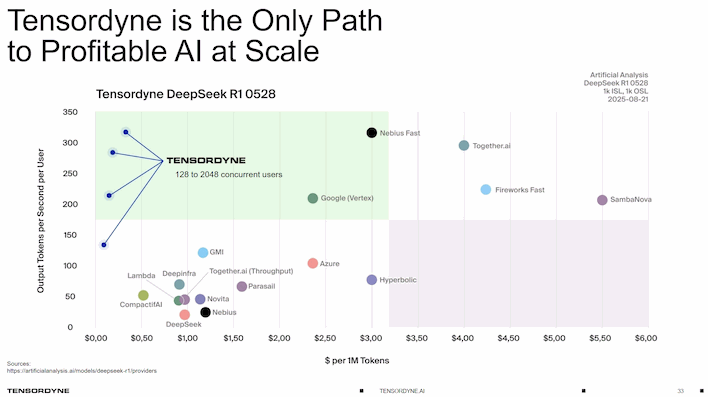

Indeed, Tensordyne goes so far as to claim that its hardware is "the only path to profitable AI at scale." The above chart compares the tokens per second per user against the cost per 1 million tokens for a variety of providers, both cloud-based and hardware, and according to Tensordyne's own numbers, it beats essentially everyone on cost while providing performance at least on par with the absolute best of the best in the industry.

The lack of transparency on Tensordyne's core technical hurdle (likely for reasons of IP secrecy) means that the burden of proof on the company's first effort is extremely high. The real test will be what the first Tensordyne silicon can do when it's benchmarked by independent third parties, like us here at HotHardware. We're going to keep a keen eye on Tensordyne and its tech, so stay tuned.