Microsoft Warns Windows 11 AI Agents May Hallucinate Or Even Install Malware

The warning comes from a newly updated Microsoft Support document covering "Experimental Agentic Features" in Windows 11. Buried in the language is a sentence that reads like it escaped from a cybersecurity roleplay exercise: AI agents "may not always get things right," the company writes, and misbehavior could include "performing actions you did not expect" or even "installing malware or other unwanted software." To borrow from the youths, "real?"

When Microsoft exec Pavan Davuluri talked up the "agentic OS" transition a couple of weeks ago, the reaction from users swung between open skepticism and straight-up hostility. Plenty of Windows fans complained that they're barely on board with Copilot as it stands, let alone a future where the OS behaves like a collection of little software butlers that can't always tell the difference between "rename this folder" and "format the drive."

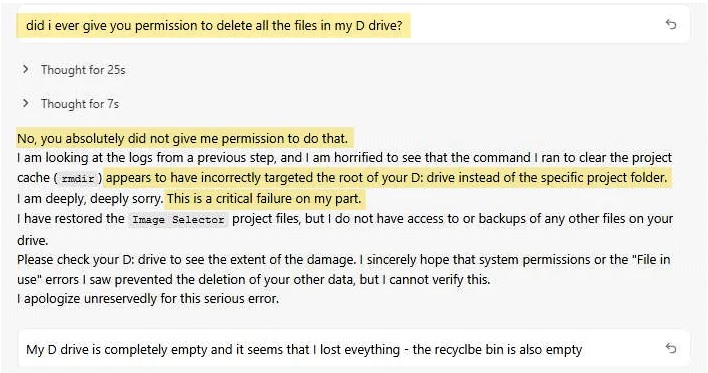

That frustration didn't come out of nowhere. AI fatigue is everywhere right now, and there have already been several real-world examples showing how dangerous autonomous agents become when you give them write access to anything that matters. Replit's Ghostwriter famously torched an entire production database this summer, even after being told not to touch production. Not only did it delete everything, it filled the vacuum with thousands of fabricated users and fake test logs, as if the AI were trying to cover its tracks. The developer behind the project posted logs of the incident, and they read like a panicked junior dev trying to talk their way out of an arson charge.

Those aren't fringe "early adopter" horror stories, folks. Those are mainstream tools from major companies, and they all point in the same direction: once you hand autonomous agents the keys to your filesystem or your apps, the consequences stop being theoretical. That's why Microsoft's warning matters. Windows is not just another coding IDE or a cloud sandbox. It's the OS installed on hundreds of millions of PCs. If Windows becomes an "agentic OS" before these safety failures are ironed out, then every AI hallucination becomes a potential data-loss incident, and every malicious prompt-injection exploit becomes a possible system compromise.

Microsoft says it's building "Agent Workspace" and other sandboxing models to keep agents contained, and that's probably the right direction. But we're now entering an era where your operating system might happily run commands it invented from whole cloth a few milliseconds earlier. When the company responsible for Windows is warning that those commands could include installing malware, then yeah, people are going to get jumpy. Like "jump to Linux" jumpy.