This is shaping up to be an exciting year in graphics. AMD is planning to launch a beefier version of Navi with support for real-time ray tracing, NVIDIA has its Ampere GPUs on the horizon, and Intel will reinsert itself into the discrete GPU space for the first time since it shelved Larrabee a decade ago. Out of the three, Intel is arguably the wild card, since it has no recent track record in the GPU space to draw conclusions from. So, what do we know about Intel's discrete GPU architecture so far?

First and foremost, Intel's graphics team is headed up by Raja Koduri, the former Radeon Technologies Group (RTG) boss who oversaw the development of Vega. After launching Vega, he took what was supposed to be a sabbatical from AMD, and ended up jumping ship to Intel to assemble a GPU super team and lead its efforts in the discrete GPU space.

That was a little over two years ago. During that time, Intel has heavily promoted its newfound focus on graphics, though it has only provided a smattering of details here and there. For the most part, we still don't know quite what to expect. However, as time goes on, things are becoming a bit clearer.

Intel Xe Graphics Architecture To Support Ray Tracing At The Hardware Level

One thing we know for certain is that Xe will feature hardware-based support for real-time ray tracing. We can credit NVIDIA for getting the ball rolling in terms of broader support for ray-traced visuals, and with the PlayStation 5 and Xbox Series X both bringing support as well (by way of a custom Navi GPU), it's clear this is a technology that's here to stay.

As it pertains to Intel, its senior principal engineer and senior director of advanced rendering and visualization, James Jeffers, held a workshop in Germany last year where he announced that ray tracing was in the Xe architecture's roadmap.

"I’m pleased to share today that the Intel Xe architecture roadmap for data center optimized rendering includes ray tracing hardware acceleration support for the Intel Rendering Framework family of API’s and libraries," Jeffers wrote in a blog post.

We'd be surprised if ray tracing support landed on all of Intel's discrete GPUs, and particularly its lower end variants that will likely ship first. But that's a discussion for another day.

Intel Tiger Lake Processor To Ship With Gen12 Xe Graphics

The first Intel Tiger Lake processors to ship with Gen12 graphics based on the Xe architecture are scheduled to arrive later this year or in early 2021. According to Intel, the shift to Gen12 will provide "the most in-depth" EU ISA remake since the i965 debuted over a decade ago.

"The encoding of almost every instruction field, hardware opcode and register type needs to be updated in this merge request," Francisco Jerez, who works on Intel’s open-source Linux graphics team, wrote in a merge request on GitHub. "But probably the most invasive change is the removal of the register scoreboard logic from the hardware, which means that the EU will no longer guarantee data coherency between register reads and writes, and will require the compiler to synchronize dependent instructions anytime there is a potential data hazard."
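To give a rough sense of what that means for the compiler, here is a minimal Python sketch of the kind of dependency check it would need to perform before deciding where to synchronize. This is a toy model under our own assumptions: the `Instr` type, the `find_hazards` helper, and the register names are hypothetical, and none of it reflects the actual Gen12 ISA or Intel's compiler code. It conservatively flags every pair of instructions that touch the same register in a conflicting way.

```python
from dataclasses import dataclass


# Hypothetical toy instruction: one destination register, several sources.
@dataclass(frozen=True)
class Instr:
    name: str
    dst: str
    srcs: tuple


def find_hazards(program):
    """Return (earlier_index, later_index, kind) for each register conflict.

    Conservative on purpose: every conflicting pair is reported, even if an
    intervening instruction would make the dependency harmless.
    """
    hazards = []
    for j, later in enumerate(program):
        for i in range(j):
            earlier = program[i]
            if earlier.dst in later.srcs:
                hazards.append((i, j, "RAW"))  # read after write
            if earlier.dst == later.dst:
                hazards.append((i, j, "WAW"))  # write after write
            if later.dst in earlier.srcs:
                hazards.append((i, j, "WAR"))  # write after read
    return hazards


# Made-up three-instruction program for illustration.
program = [
    Instr("mul", dst="r2", srcs=("r0", "r1")),
    Instr("add", dst="r3", srcs=("r2", "r1")),  # reads r2 written by mul
    Instr("mov", dst="r0", srcs=("r4",)),       # overwrites r0 read by mul
]

for earlier, later, kind in find_hazards(program):
    print(f"{kind}: {program[later].name} must be synchronized against {program[earlier].name}")
```

With a hardware scoreboard, conflicts like these are tracked by the EU itself. Without one, the compiler has to find them ahead of time and emit the synchronization explicitly.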

Based on bits of information Intel has sporadically provided, the company believes Tiger Lake will provide a 2x uplift in performance compared to the Gen11 graphics in Ice Lake. To put that into perspective, the Gen11 IGP is already offering comparable performance to Vega-based GPUs in AMD's Ryzen 3000 series APUs (although trailing a bit in gaming performance).

"It's Alive!" Intel's First Xe DG1 Graphics Card Gets Powered On For The First Time

He wrote, "It's alive!" His short but exciting tweet sends a larger message. As for the DG1, it is based on Intel's Xe graphics architecture and presumably built on a 10-nanometer manufacturing process. It is on track to release in 2020.

What about 7nm? Intel CEO Bob Swan said Intel is on track to launch a "datacenter focused discrete GPU in 2021" that is based on 7nm manufacturing.

High Performance Xe Based Graphics Cards Are Coming

While Intel is likely to bring lower powered solutions to market first, Xe will also find its way into more powerful solutions.

Koduri teased as much in a photo-op with a bunch of engineers wearing t-shirts with "Xe HP" imprinted on them. HP presumably stands for high performance and not Hewlett Packard.

"It’s all Xe HP—the team here in @intel Bangalore celebrated crossing a significant milestone on a journey to what would easily be the largest silicon designed in India and amongst the largest anywhere. The team calls it 'the baap of all'," Koduri stated on Twitter.

Xe will also find its way into supercomputers. This will come by way of a Ponte Vecchio solution built on a 7nm node, according to semi-recent rumors. Tying into that, more recent rumors suggest Intel's Xe GPUs will sport Foveros 3D packaging, with one of the solutions having a 500W TDP.

Source: Digital Trends

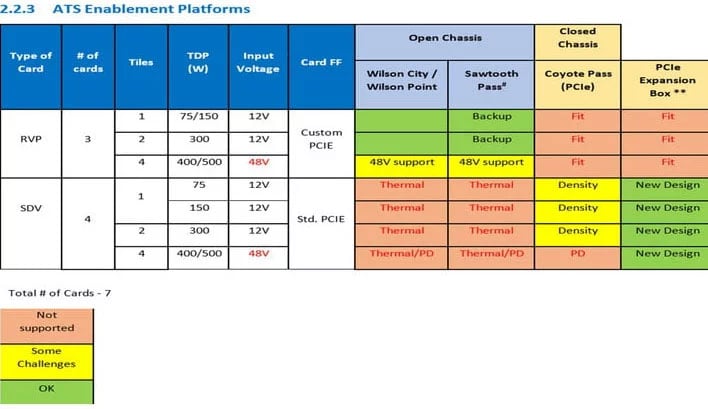

This came to light when Luke Larsen at Digital Trends claimed to have obtained parts of an internal presentation from Intel's datacenter division. The unnamed source who provided the above slide was unable to identify some of the terminology, but the presentation as a whole lists a single-tile GPU, a two-tile GPU, and a four-tile card at the top of the stack.

Some of the details are left out, like the number of execution units (EUs). However, the three examples align with a past driver leak pointing to Intel's discrete GPUs having 128, 256, and 512 EUs. If that's the case, we can surmise that each tile has 128 EUs.
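The arithmetic behind that guess is simple enough to check in a few lines of Python. Keep in mind that pairing the one-, two-, and four-tile parts with the 128, 256, and 512 EU figures in that order is our assumption; neither leak confirms the mapping, and the variable names are ours.

```python
# Tile counts from the leaked slide, EU counts from the earlier driver leak.
# Matching them up in ascending order is an assumption, not a confirmed spec.
tile_counts = [1, 2, 4]
leaked_eu_counts = [128, 256, 512]

# Divide each EU count by its assumed tile count and collect the distinct results.
eus_per_tile = {eus // tiles for tiles, eus in zip(tile_counts, leaked_eu_counts)}

print(eus_per_tile)
```

All three configurations collapse to the same per-tile figure, which is what makes the 128 EUs per tile reading plausible.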

While referenced as tiles, these are actually chiplets, which again lands us back on Intel's Foveros 3D stacked packaging technology.

According to the leaked presentation, the maxed out four-chiplet solution has a 400W or 500W TDP. This seemingly indicates Intel will be chasing the high performance market to compete with AMD and NVIDIA. However, if the slide is real, we don't think it will be a consumer card. To put things into perspective, a reference GeForce RTX 2080 Ti has a 250W TDP, while the overclocked Founders Edition model pushes that up slightly to 260W. Likewise, the Radeon RX 5700 XT has a 225W TDP, while the Radeon RX 5700 XT 50th Anniversary Edition nudges that up to 235W.

The leak also pointed to the use of HBM memory instead of GDDR6 or GDDR5, and PCI Express 4.0 support. But again, this will likely be aimed at the high performance computing (HPC) sector, rather than the consumer market.

When will all this become clear? That's a good question, and we don't have an answer other than sometime this year.