Intel Details Option To Move Antialiasing To The CPU

The first thing to understand about MLAA is that it isn't a traditional form of antialiasing at all. Traditional antialiasing (supersampling or multisampling) is performed after lighting is calculated and textures are applied. Supersampling is much more computationally and bandwidth intensive than multisampling, but both techniques are capable of demanding more horsepower than modern consoles or mobile devices are able to provide.

ATI's MLAA implementation uses DirectCompute--Intel's CPU-centric method doesn't

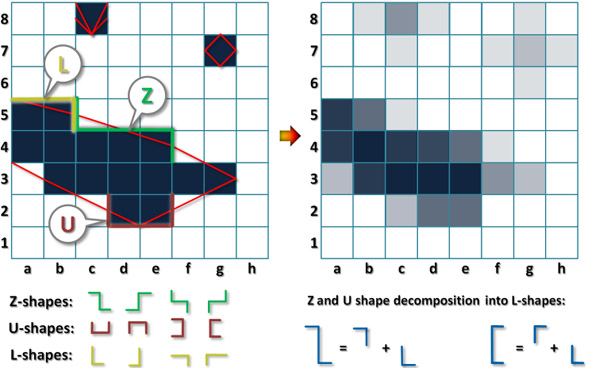

Morphological Antialiasing, in contrast, is performed on an already-rendered image. An MLAA filter examines the image buffer to locate discontinuities between neighboring pixels, identifies U, Z, and L-shaped patterns in those discontinuities, and finally blends the colors around the patterns. The screenshot below demonstrates the process:

The left-hand side is the original image. MLAA has been applied on the right

Intel describes MLAA as "an image-based, post-process filtering technique which identifies discontinuity patterns and blends colors in the neighborhood of these patterns to perform effective antialiasing. It is the precursor of a new generation of real-time antialiasing techniques that rival MSAA."
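To make the three steps concrete, here is a minimal Python sketch of the idea on a grayscale image stored as a list of rows. It is a simplification for illustration, not Intel's implementation: the function names (`edge_runs`, `vertical_edge`, `mlaa_rows`), the threshold of 16, and the linear blend ramp sampled at pixel centres are all assumptions, and only horizontal edge runs are handled (a full filter would repeat the pass for vertical runs and work on color channels). Each end of a run is treated independently, so a run with one stair step behaves like an L shape and a run with two behaves like a Z or U shape.

```python
def edge_runs(flags):
    """Return half-open (start, end) spans of consecutive True flags."""
    runs, start = [], None
    for x, flag in enumerate(flags):
        if flag and start is None:
            start = x
        elif not flag and start is not None:
            runs.append((start, x))
            start = None
    if start is not None:
        runs.append((start, len(flags)))
    return runs


def vertical_edge(img, y, x, threshold):
    """True when pixel (y, x) differs from its left neighbour (y, x - 1)."""
    return 0 < x < len(img[0]) and abs(img[y][x] - img[y][x - 1]) > threshold


def mlaa_rows(img, threshold=16):
    """Return a smoothed copy of a grayscale image (hypothetical helper)."""
    h, w = len(img), len(img[0])
    out = [[float(v) for v in row] for row in img]
    for y in range(h - 1):
        # Step 1: locate discontinuities between row y and row y + 1.
        flags = [abs(img[y][x] - img[y + 1][x]) > threshold for x in range(w)]
        for start, end in edge_runs(flags):
            half = (end - start) / 2.0
            # Step 2: a crossing (vertical) edge at a run end marks a stair step.
            for boundary, first, step in ((start, start, 1), (end, end - 1, -1)):
                for row, other in ((y, y + 1), (y + 1, y)):
                    if not vertical_edge(img, row, boundary, threshold):
                        continue
                    # Step 3: blend toward the other row, with a weight that
                    # falls from 0.5 at the step to 0 at the middle of the run
                    # (assumed ramp, sampled at pixel centres).
                    for i in range(end - start):
                        weight = 0.5 * (1.0 - (i + 0.5) / half)
                        if weight <= 0:
                            break
                        x = first + step * i
                        out[row][x] = ((1 - weight) * out[row][x]
                                       + weight * img[other][x])
    return out


# A one-step staircase: dark above, bright below, with the step in row 1.
stairs = [[0] * 8, [0] * 4 + [255] * 4, [255] * 8]
smoothed = mlaa_rows(stairs)
```

On this input the hard 0-to-255 jump in the middle row becomes a gradual ramp across the four pixels around the step, while the rows above and below are left untouched.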

Battlefield: Bad Company 2 DX10, No AA

Battlefield: Bad Company 2, MLAA Enabled. Check the individual branches of the scene to see the difference between MLAA enabled and disabled

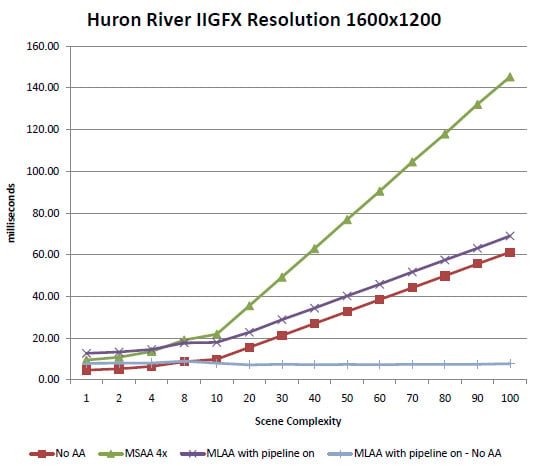

The technique is embarrassingly parallel and, unlike traditional hardware antialiasing, can be handled effectively by the CPU in real time. MLAA is equally compatible with ray-traced and rasterized graphics, and it functions perfectly when combined with deferred lighting (a type of rendering used by modern consoles). Another advantage is that MLAA is calculated once per frame regardless of scene complexity, making it easier for programmers to estimate the performance hit of enabling the feature. It's not perfect--morphological antialiasing can't resolve sub-pixel detail--but it's a decent compromise between MSAA's improved visuals and a total lack of AA. It also lacks MSAA's occasionally hit-or-miss results; MLAA can be universally applied, whereas MSAA has trouble with transparencies.
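The "embarrassingly parallel" claim can be illustrated with a short Python sketch, again under stated assumptions: the helper names (`edge_flags`, `edge_flags_parallel`), the band split, and the threshold are hypothetical and not taken from Intel's code. The frame is cut into horizontal bands, and each worker flags discontinuities using only its own rows plus the single row below its band, so no worker waits on another. The amount of work depends on the number of pixels, not on what was drawn into them.

```python
from concurrent.futures import ThreadPoolExecutor


def edge_flags(img, y0, y1, threshold=16):
    """Flag discontinuities between rows y and y + 1 for y in [y0, y1)."""
    last = len(img) - 1  # the final row has no row below it
    return [[abs(a - b) > threshold for a, b in zip(img[y], img[y + 1])]
            for y in range(y0, min(y1, last))]


def edge_flags_parallel(img, workers=4, threshold=16):
    """Same result as edge_flags over the whole frame, computed band by band."""
    h = len(img)
    step = max(1, -(-h // workers))  # ceiling division: rows per band
    bands = [(y0, min(y0 + step, h)) for y0 in range(0, h, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns the bands in submission order, so rows stay in order.
        parts = pool.map(lambda b: edge_flags(img, b[0], b[1], threshold), bands)
        return [row for part in parts for row in part]


# Synthetic 9x8 test frame.
frame = [[(x * y * 37) % 256 for x in range(8)] for y in range(9)]
flags = edge_flags_parallel(frame, workers=4)
```

In CPython, threads mainly demonstrate the decomposition rather than deliver a speed-up; a native implementation would hand each band to its own core.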

Render The Future

Performance comparison using Intel's Sandy Bridge integrated GPU. Scene complexity = visual detail in this case. Milliseconds on the left refer to the time to render a frame as opposed to a frame rate. Ignore the bottom light-blue curve.

Intel's idea of using spare CPU cores to process MLAA is, ironically, exactly the sort of cross-pollinated environment AMD has in mind when it talks about heterogeneous computing. AMD could theoretically port MLAA to the CPU as well, but we suspect the company will wait until its CPU and GPU are connected by a faster, wider interconnect. MLAA offers game developers a method of improving overall image quality more evenly than MSAA alone can provide, and for much less of a performance hit.

MLAA isn't necessarily aimed at computer enthusiasts with midrange or higher discrete graphics cards. Rather, it's likely to prove most important in devices where power consumption, battery life, or GPU subsystem performance is substantially constrained. It makes sense for consoles (both the PS3 and Xbox 360 can use it) due to the graphical limitations of both. Intel's move to highlight the advantages of performing MLAA on the CPU may be a preview of what we'll see the company focus on when it releases its next-generation, DX11-class Ivy Bridge, but we expect to see more companies focus on this type of rendering regardless.