Exploring AI With NVIDIA’s $59 Jetson Nano 2GB Dev Kit

NVIDIA Jetson Nano: A Machine Learning Dev Kit For The Masses

Some of NVIDIA's higher-end Jetson products are a little pricey for hobbyists and beginners, and so the company has created the Jetson Nano. This platform has been around for a little while as a way for AI enthusiasts to break into the world of hardware-accelerated machine learning or experienced developers to prove out and quickly deploy concepts. The cheapest Jetson Nano 2GB kit is now a $59 system-on-module with plenty of IO connectivity built into edge connectors, where the compute resource is socketed and not much bigger than a traditional laptop SO-DIMM. This little board has a quad-core Arm Cortex A57 CPU and an integrated 128-core NVIDIA Maxwell-based GPU that accelerates CUDA-targeted workloads, and has just enough storage to boot up and run a project.

NVIDIA's JetPack software development kit drives a whole host of applications that rely on trained AI models and inferencing performed on its GPU architectures. That Maxwell GPU inside the Jetson Nano should be capable of crunching data quickly enough to handle all sorts of real-world use cases. Image processing encompasses a ton of problems that can be solved, from object identification to obstacle tracking. Self-driving cars, agriculture tools, and factory equipment are just a few of the applications that currently run on NVIDIA's AI technologies, and Jetson Nano affords you access to the same APIs and software development tools.

An Affordable, Accessible AI Development Kit

The Jetson Nano 2GB Developer Kit takes that same module and pairs it with a host board with physical USB, HDMI, Ethernet, and DisplayPort connectors. There's also a 40-pin GPIO header and a ribbon cable socket for a serial camera interface. Back in March of 2019, NVIDIA released the first version of the Jetson Nano, which has 4 GB of memory for $99. The original version of the host board also had a micro USB port and a barrel connector as two different options for the power supply.

To get the Jetson Nano dev kit into the hands of even more students and developers, the new 2 GB Jetson Nano pairs that same SoC with just 2 GB of memory, but costs a mere $59. This one trades out the micro USB and barrel connectors in favor of a single USB Type-C connector for power. The GPIO pins and camera socket remain, which is great news for folks interested in robotics. It does strip out a USB port and the DisplayPort connector to keep costs down, though.

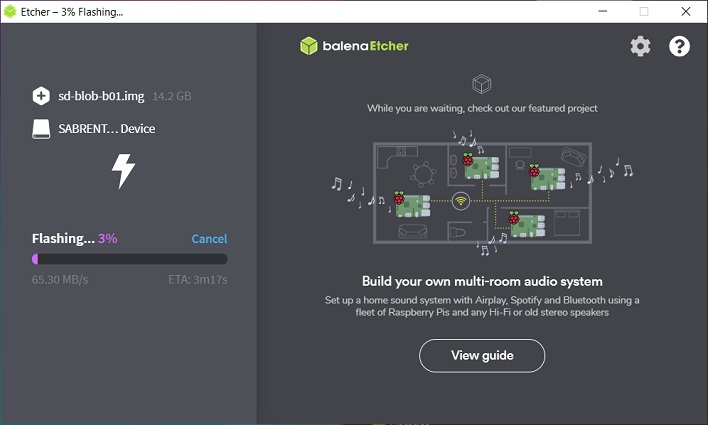

Getting started is pretty simple. The kit NVIDIA sent us included the Jetson Nano 2 GB SoM, a dev board, a USB-C power supply, and a 64 GB micro SD card. Installing the operating system requires downloading an image from NVIDIA's developer portal and flashing it to the SD card using an image flashing tool like balenaEtcher. Once the image has been flashed to the SD card, pop it into the micro SD reader on the Nano and plug in power, keyboard, mouse, Ethernet, and an HDMI or DisplayPort cable. The development tools are already installed and ready to go as part of the image flashing process.

Flashing an image to the SD card is a simple one-step process

God Mode - Now the AI Creation Fun Can Begin

Once the Ubuntu 18.04-based Linux distribution is up and running, it's time to start looking into AI projects. To get started, NVIDIA recommends checking out the Hello AI World inference project on GitHub. There's a ton of functionality and a variety of pre-trained models ready to infer data based on images captured by the camera module or stored in local files. The kit supplied by NVIDIA didn't include a camera, but we picked up a module with a Sony IMX219 sensor and a 160-degree field of view for around $22 on Amazon.

We cloned this Jetson Inference repo and built the project using NVIDIA's documentation, which is straightforward for anybody who's ever run a makefile in Linux. Even if you're not familiar with the process, the instructions are clear enough for beginners to get going. Unfortunately, since the Jetson Nano relies on its quad-core Cortex A57 complex to handle compilation, that process took around a half hour. It's fine to get up and grab a cup of coffee, but around halfway through the process we were prompted for our user account password so the next step could install some additional dependencies.

Fun With Image Detection And Getting It Right

The Hello AI World project comes with its own sample photos, at a resolution of 1024x512, that show how the various AI models can process images. We ran the AI on a couple of those to make sure it was all working as anticipated, and then we threw it a curveball. The image below is a blurred person running past a house with a car in the driveway. We wanted to see what the AI thought of an out-of-focus subject. The image is also much larger than the samples at 3888x2592, or around 20 times the number of pixels. Larger images like this take more processing power to push through the model since they have so much more detail.
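For anyone who wants to check the "around 20 times" figure, the arithmetic is quick to sketch in Python. The two resolutions come straight from the paragraph above; the function and variable names are just ours for illustration.

```python
# Resolutions quoted in the article, as (width, height) in pixels.
SAMPLE_RES = (1024, 512)   # Hello AI World sample photos
TEST_RES = (3888, 2592)    # our blurry runner photo


def pixel_count(resolution):
    """Return the total number of pixels for a (width, height) pair."""
    width, height = resolution
    return width * height


sample_pixels = pixel_count(SAMPLE_RES)
test_pixels = pixel_count(TEST_RES)
ratio = test_pixels / sample_pixels

# Prints: 10,077,696 / 524,288 = 19.2x
print(f"{test_pixels:,} / {sample_pixels:,} = {ratio:.1f}x")
```

So the larger photo carries a little over 19 times as many pixels as the samples, which rounds comfortably to "around 20."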

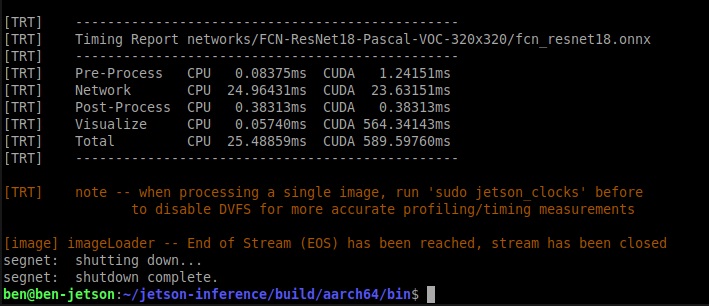

As we ran the project, we were mostly concerned with whether there would be a performance hit due to the newer, cheaper Jetson Nano having half the RAM of the original model. Happily, that did not seem to be the case. NVIDIA has a series of benchmark figures published for the original model, complete with scores expressed in frames per second and in milliseconds. Since each run of the Hello AI World project pumps out metrics showing how long a process took, it was an easy comparison to make. Every time, the 2 GB Jetson Nano sped through the images at roughly the same speed as the original. The only time we ever saw any sort of memory warning was when the Chromium browser was running with a couple of tabs open showing the project's documentation. That means it's possible to run into some overhead issues, but only when trying to do multiple things at the same time.
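Comparing a figure in milliseconds against one in frames per second only takes a reciprocal. Here is a minimal sketch of that conversion; the 25 ms value is a made-up number for illustration and is not one of our measurements or NVIDIA's published results.

```python
def ms_to_fps(latency_ms: float) -> float:
    """Convert a per-frame processing time in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def fps_to_ms(fps: float) -> float:
    """Convert frames per second back to a per-frame time in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Hypothetical example: a network that needs 25 ms per image.
example_ms = 25.0
print(f"{example_ms} ms per frame is {ms_to_fps(example_ms):.1f} FPS")
```

With both numbers in the same unit, it's easy to see whether two boards are within a frame or two of each other.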

Image classification is the process of identifying the subject of a photo. The AI model scans over an image and adds a label showing what it thinks the subject is, along with a percentage expressing how certain it is that the label is correct. The various models use different sized chunks of the image to figure out what's in each part, confidently (or not) decide which detected object in each chunk is the subject, and then figure out what the overall subject is.
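To make the "label plus percentage" idea concrete, here is a small Python sketch of the final step most classifiers perform: turning raw per-class scores into probabilities with a softmax and reporting the top one. This is a generic illustration, not the code inside Hello AI World, and the labels and scores are invented for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels):
    """Return the most likely label and its confidence as a percentage."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best] * 100.0


# Hypothetical labels and raw scores, for illustration only.
labels = ["person", "car", "parking meter"]
scores = [1.0, 3.0, 0.5]

label, confidence = classify(scores, labels)
print(f"{label}: {confidence:.1f}%")
```

A blurry subject tends to produce scores that are close together, which is why the reported certainty drops and odd labels can sneak into first place.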

Undeterred, we moved on. The next "step" in the process is object identification. AI models run against an image to try to find various objects that aren't part of the background. This time the model places all of the objects in the photo in different colored boxes. Each box has its own label that shows what it thinks the object is and what percentage of certainty it has in its conclusion. This time, even the simplest AI model was capable of identifying both the runner and a car in the background.

This time the model correctly identified objects in the photo, despite how blurry the runner was.
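The output of an object detector can be pictured as a list of boxes, each with a label and a confidence. The sketch below shows one plausible way to represent that and to drop low-confidence boxes before drawing them. The `Detection` class, the 0.5 threshold, and the sample values are all our own assumptions for illustration, not the project's actual data structures or our real results.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # 0.0 to 1.0
    box: tuple         # (left, top, right, bottom) in pixels


def keep_confident(detections, threshold=0.5):
    """Keep only detections at or above the confidence threshold."""
    return [d for d in detections if d.confidence >= threshold]


def describe(detection):
    """Format a detection the way an overlay label might read."""
    return f"{detection.label} {detection.confidence * 100:.1f}%"


# Hypothetical detections, for illustration only.
found = [
    Detection("person", 0.62, (1400, 900, 2100, 2400)),
    Detection("car", 0.91, (2500, 1100, 3600, 1700)),
    Detection("bench", 0.20, (100, 2000, 600, 2300)),
]

for det in keep_confident(found):
    print(describe(det), det.box)
```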

Last is semantic segmentation, which is a per-pixel process that identifies different objects in a photo and colorizes them. The image below is again our sample, with the various objects that the AI thought might be the subject highlighted. This sheds some light on our earlier results, where it thought the subject was a motorized scooter or a parking meter, since it really only identified the cars as subjects of the photo. The runner was likely the object identified as a parking meter or a motor scooter, since the only objects it could really detect semantically were the ones in focus.

Semantic Segmentation identifies subjects on a per-pixel basis
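"Per-pixel" means every pixel gets its own class decision, and the colorized overlay is just a lookup from class to color. Here is a toy Python sketch on a 2x2 "image" using plain lists; the class names, palette, and scores are hypothetical, and a real network works on far larger arrays.

```python
# Hypothetical classes and overlay colors (R, G, B), for illustration only.
CLASS_NAMES = ["background", "car", "person"]
PALETTE = {0: (0, 0, 0), 1: (255, 0, 0), 2: (0, 0, 255)}


def segment(score_map):
    """Pick the highest-scoring class index for every pixel.

    score_map[y][x] is a list holding one score per class.
    """
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in score_map
    ]


def colorize(mask, palette):
    """Replace each class index in the mask with its overlay color."""
    return [[palette[class_id] for class_id in row] for row in mask]


# A 2x2 image with three class scores per pixel.
scores = [
    [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1]],
    [[0.2, 0.3, 0.5], [0.1, 0.7, 0.2]],
]

mask = segment(scores)
overlay = colorize(mask, PALETTE)
print(mask)
print(overlay)
```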

All of that might seem amusing, or like a failure of the AI, but maybe you're familiar with the phrase "garbage in, garbage out". The photo might have artistic value, but it's not clear or in focus, and the AI could only rely on a blurry mess to try to identify what it was. The fact that it pulled out a person at all is, frankly, amazing. When the subject was in focus, the results were much more solid. Below is one such example of the image classification neural network using the default fastest model on a clearer image. While the results aren't perfect, we can at least kind of see where they came from.

Community AI Projects Can Be Fascinating

Once we were satisfied everything was working as it should by tinkering with the Hello AI World beginner project, it was time to hit NVIDIA's Jetson Community Projects page. Some of the projects here require very little in the way of additional hardware, such as NVIDIA's own Real-Time Human Pose Estimator, but have limited utility. One more useful project that doesn't need a ton of hardware is the AI Thermometer, which relies on a FLIR thermal camera module to read the temperature of a person and send an alert if the person has a fever.

The best way I've found to learn about a software development topic or framework is to find an existing sample, use it, and walk through the code to see what's going on. That meant picking one of the projects we had the hardware for and digging in, and turning a camera stream into electronic music seemed like a fun example. The "DeepStream <3 OSC" project was the perfect candidate: it only needs a video source, and then it will turn detected objects into sonic frequencies as a sort of interactive music machine. The "music" generated by the system is controlled by a script that takes in an object and spits out a musical note. The project has a nice demo on YouTube to check out. Hit play below for a taste of The Matrix, recreated in sound...
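To give a feel for what "takes in an object and spits out a musical note" could look like, here is a small Python sketch. It is not the DeepStream <3 OSC project's script: the scale and the class-to-note mapping are our own hypothetical choices. The only standard piece is the equal-temperament formula that converts a MIDI note number to a frequency, with A4 (note 69) at 440 Hz.

```python
# MIDI note numbers for one octave of C major, starting at middle C (60).
C_MAJOR = [60, 62, 64, 65, 67, 69, 71]


def midi_to_frequency(note: int) -> float:
    """Convert a MIDI note number to a frequency in Hz (A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def object_to_note(class_id: int) -> int:
    """Hypothetical mapping: wrap a detected class ID onto the scale."""
    return C_MAJOR[class_id % len(C_MAJOR)]


# Pretend the detector reported these class IDs in one frame.
for class_id in (0, 2, 5):
    note = object_to_note(class_id)
    print(f"class {class_id} -> MIDI {note} -> {midi_to_frequency(note):.2f} Hz")
```

Keeping every object on a single scale is one simple way to make the result sound musical rather than random, whatever wanders in front of the camera.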

Other projects need a bit more of an investment in terms of hardware, but have the potential to be a lot of fun. The JetBot robotics kit looks like a relatively inexpensive way to get started in the world of AI-driven robotics. Folks who own a 3D printer can just print their own chassis, while those who don't can buy one of a handful of commercially produced kits. The robot gets around using a set of Jupyter Notebooks, which are web apps that assist in programming the robot, including live code manipulation in the browser. It looks like a pretty straightforward way to get into robotics, and should make for a nice cold-weather indoor family fun project.

We had wanted to do some benchmark testing with the Jetson Nano. The problem is that the biggest ML test suite around, MLbench, does not support Arm64 in its publicly-available version. When we tried to build using the included scripts, it failed because SSE isn't supported, since that's an x86 feature. The test also doesn't quite know what to make of the CUDA cores, either. As far as the projects that are available from NVIDIA's community, everything seemed pretty speedy, though performance isn't really the focus here. Other higher-end, more expensive Jetson platforms are obviously faster, and those who need the best performance should focus there. The Jetson Nano 2GB kit, on the other hand, is a nice entry-level or low power development and testing platform.

Jetson Nano 2 GB Conclusions

The 2 GB version of NVIDIA's Jetson Nano can handle just about any project that its more expensive sibling can, at a price that more folks can afford. Ringing in at $59, which is roughly comparable to a Raspberry Pi 4, the 2 GB Jetson Nano has plenty of compute power for a wide variety of machine learning tasks. The SO-DIMM sized compute board is small enough to lose on a cluttered desk (don't ask me how I know this), and the host board that accompanies the development kit only doubles the footprint. Despite its diminutive size, there's enough connectivity to do just about anything from real-time AI image processing (with an optional camera) to driving robotics kits.

Just as important for any educational or hobbyist platform is the availability of samples and documentation. NVIDIA's got that covered in the form of sample projects. The Jetson community has contributed quite a bit of open-source material for study, too. For anybody who's interested in getting started in the world of machine learning, the Jetson Nano 2 GB is an excellent tool with plenty of documentation and sample code to get started.