AMD Supercharges AI Data Centers With Powerful MI350 GPU At Advancing AI 2025

AMD Debuts Its Next Gen Al MI350 Accelerator And Full-Stack, Rack-Scale AI Platforms

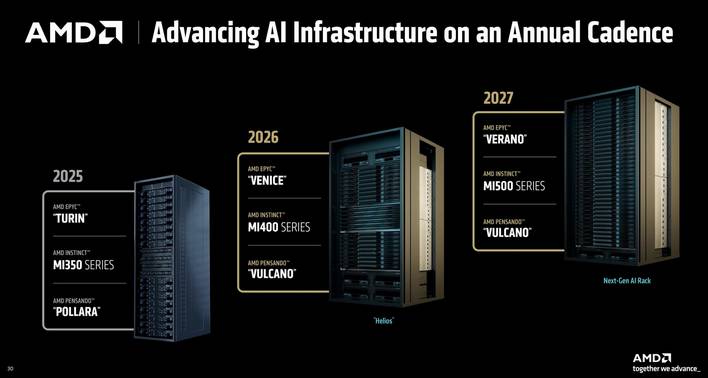

A myriad of AMD’s partners, from Meta, to Microsoft, and Oracle also discussed their successful Instinct MI300 series deployments and expressed commitments to future products, like the MI350, and the next-generation MI400 series and AI rack codenamed “Helios”.

AMD Instinct MI350 Series Details

The AMD Instinct MI350 series will consist of the MI350X and MI355X. Both of these AI accelerators are fundamentally similar and feature the same silicon and features set, but the MI350X targets lower power air-cooled systems, whereas the MI355X targets denser liquid-cooled configurations. As such, the chips operate at different frequencies and power levels, which will ultimately affect performance, but we’ll get to that in just a bit.

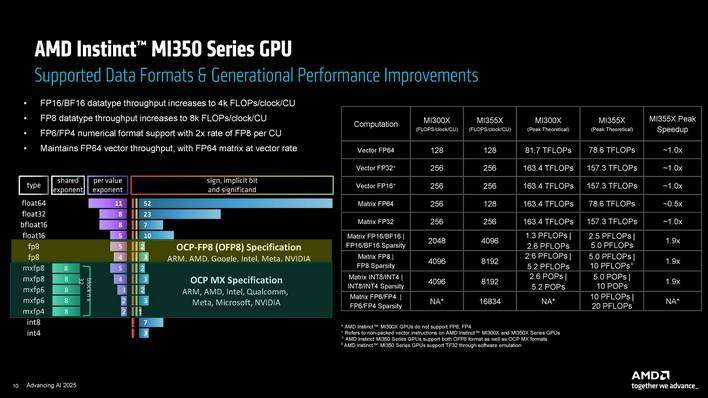

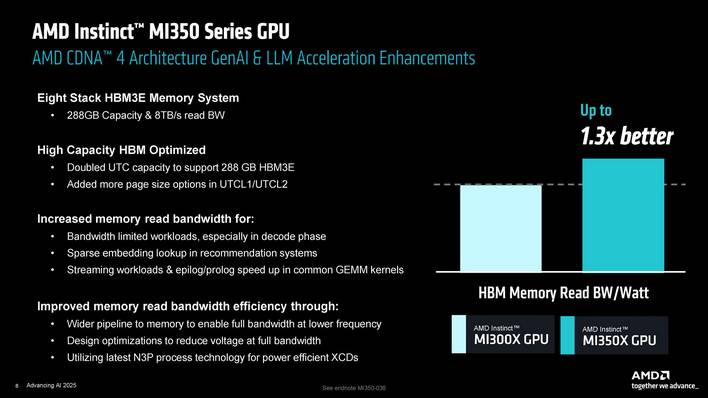

We’ve disclosed some preliminary details regarding the MI350 series (the MI355X in particular) in the past but have additional information to share today. To quickly recap our previous coverage, the MI350 series compute dies are manufactured using a more advanced 3nm process node compared to the MI300 series, the accelerators feature 288GB of faster HBM3e memory, and they support new FP4 and FP6 data types. The MI350 series also leverages the newer CDNA 4 architecture, which is better suited to today’s more diverse AI and ML-related workloads.

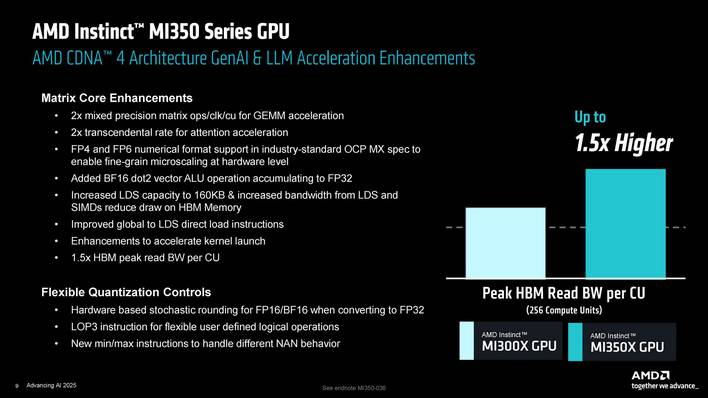

The CDNA 4-based Instinct MI350 series features enhanced matrix engines, with support for new data formats and mixed precision arithmetic. The multi-chiplet SoCs also feature Infinity Fabric and package connectivity enhancements, all of which culminate in improved performance and energy efficiency. More specifically, changes to the compute engines, memory configuration, and to the SoC architecture optimize memory utilization and energy use, which in turn keep the cores better fed to optimize utilization, overall performance and efficiency. All told, AMD claims its team has doubled the throughput with the MI350 series versus the previous gen with less than double the power. AMD also reduced the uncore power in the design, which affords additional power for the compute engines, again for higher performance.

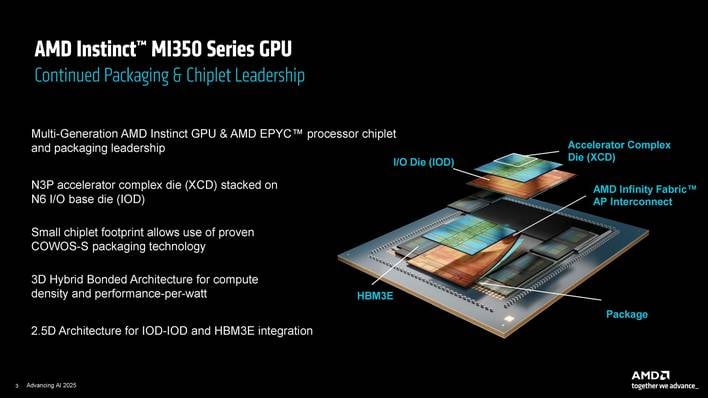

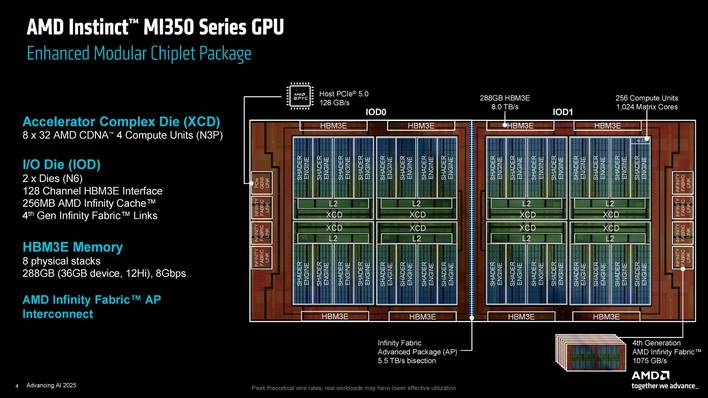

In terms of their actual configuration, MI350 series SoCs are built using eight XCDs, or Accelerator Complex Dies, which sit atop dual IO dies that are situated in the middle layer in the 3D stack. A CoWoS interposer houses the IO dies and HBM stacks, and the compute chiplet stacks are 3D hybrid bonded on top of the IO dies. As previously mentioned, the XCDs are manufactured on TSMC’s advanced 3nm process (N3P), but the IO dies are manufactured using a 6nm process.

Each of the eight XCD features 32 CDNA 4-based Compute Units, for a grand total of 256 CUs and 1,024 matrix engines. And the IO dies feature a 128 channel HBM3e interface, 256MB of Infinity Cache, and a total of seven 4th Generation Infinity Fabric links offering 1,075GB/s of peak bandwidth. An Infinity Fabric AP (Advanced Packing) link connects the two IO together and offers a massive 5.5TB/s of bidirectional bandwidth.

Along with the XCDs and IO dies, there are eight physical stacks of HBM3e memory, 36GB per stack (12-Hi), for a total capacity of 288GB. The memory operates at 8Gbps, which results in 8.0TB/s of peak bandwidth.

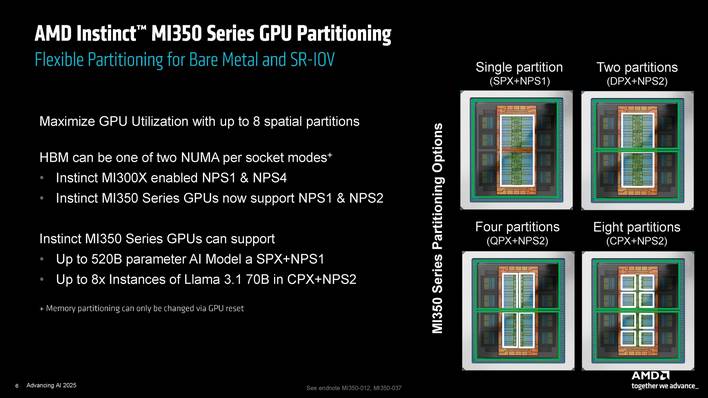

AMD also made changes to the ways the GPUs and memory can be partitioned. The MI350 series GPUs can be split into up to 8 spatial partitions and the HBM can operate in one of two NUMA per socket modes – NPS1 or NPS2. The MI300 series supported NPS1 or NPS4 memory configurations.

There are also power benefits to using HBM3e. HBM3e offers up to 1.3x better memory read bandwidth per watt versus the previous gen and the architecture offers 1.5x higher peak HBM read bandwidth per CU. Due to the higher bandwidth overall, and the fewer total compute units versus the MI325X (304 CUs vs 256), more bandwidth is available per CU, which helps for workloads that are bandwidth bound.

AMD Instinct MI350 Based Rack Level Solutions

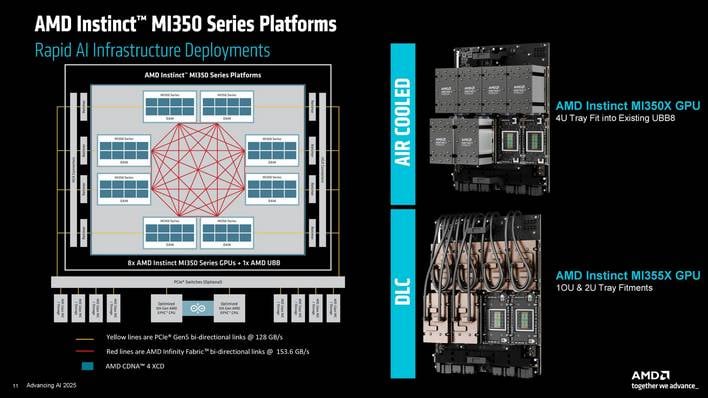

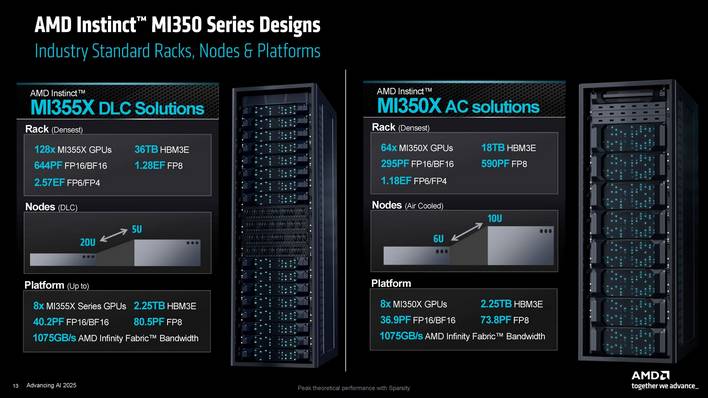

The obvious goal for AMD is to sell as many MI350 series accelerators as possible, supported by other AMD silicon. To that end, the company also introduced new rack scale solutions that leverage AMD EPYC processors and AMD Pensando network technology, along with the MI350 series.There are two main rack scale solutions coming down the pipeline, the MI355X DLC and MI350X AC. The MI355X is the Dense Liquid Cooled solution, whereas the MI350X is the air-cooled option.

The MI355X DLC can pack up to 128 MI35X accelerators, in 8 2U chassis, racked 16 high. The MI350X AC packs 8 MI350X accelerators into each 4U chassis, for a total of 64 per rack.

The MI355X DLC operates at 1.4kW, while the MI350X operates at 1kW. To achieve that reduction in power, the MI350X’s compute units run at about 10% lower clocks, which are reflected in the theoretical peak performance numbers in the slide. We’re told, however, that the real-world performance difference will likely be about 20% depending on the particular workload.

AMD Pensando Networking Technology Updates

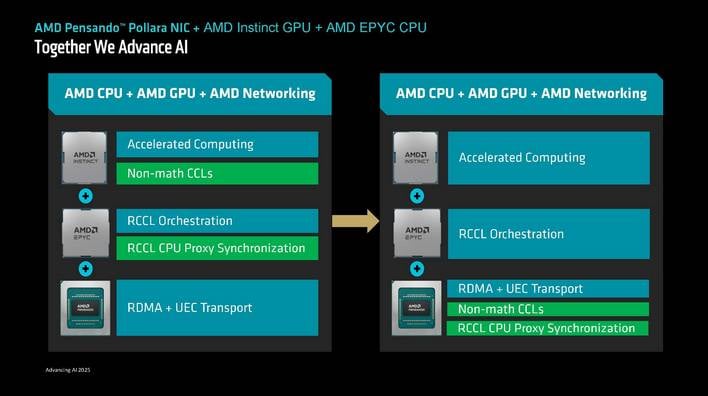

Fast and reliable network connectivity is paramount in today’s AI data centers. The front-end shuttles data to an AI cluster, and the backend handles data transfers between compute nodes and clusters. If either the front or backend are bottlenecked, the compute engines and accelerators in the system aren’t optimally fed data, which results in lower utilization and potentially lost revenue or diminished quality of service.

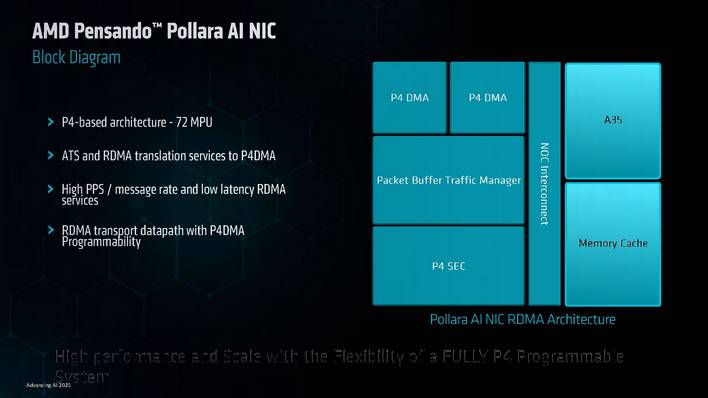

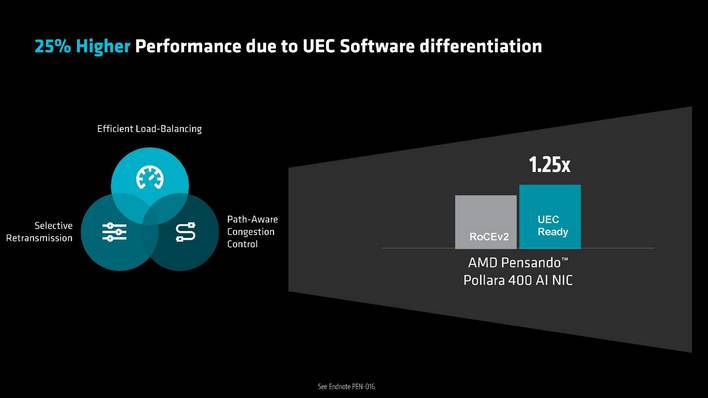

To accelerate and manage the front and backend networks, and offload the CPUs in a system, AMD offers Pensando Salina DPUs for the front-end and the Pensando Pollara 400 AI Network Interface Card, the industry’s first Ultra Ethernet Consortium (UEC) ready AI NIC, for the back end.

The AMD Pensando Pollara 400 AI NIC, which is now shipping, is powered by the AMD P4 Programmable engine. The AMD Pensando Pollara 400 supports the latest RDMA software and offers a number of new features to optimize and enhance the reliability and scalability of high-speed networks.

For example, the Pensando Pollara 400 supports path aware congestion control, to more efficiently route network traffic. It also supports fast lost packet recovery, which can more quickly detect lost packets and resent just that single packet to optimize bandwidth utilization. And it supports fast network failure recovery as well.

AMD Updates Its ROCm Open-Source Software Stack

In addition to showing off its latest hardware technologies, AMD made some big announcements regarding its ROCm software stack. For the uninitiated, ROCm is AMD’s software stack, which includes drivers, development tools, and APIs for programming the company’s GPUs.

Along with introducing ROCm 7, AMD said it is re-doubling its efforts to better connect with developers and introduced the AMD Developer Cloud and Developer Credits.

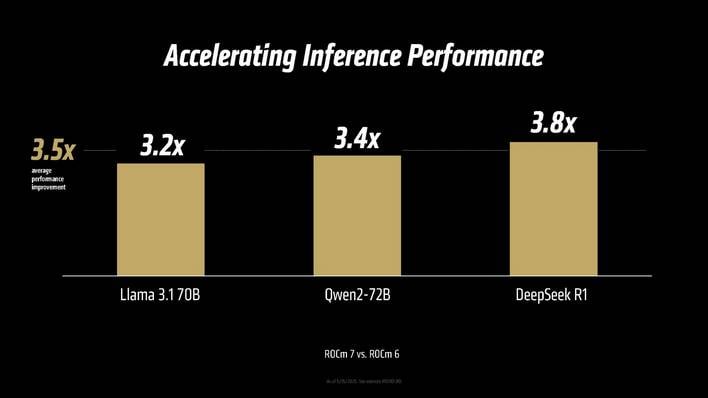

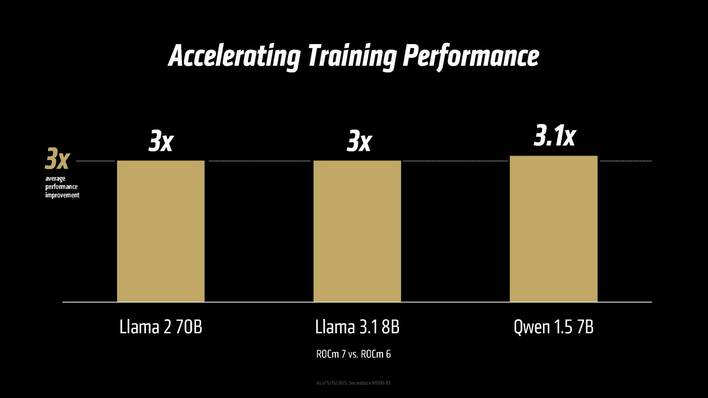

ROCm 7 expands support for more AMD GPUs, especially on the client, fully supports both Linux and Windows, and incorporates all of the optimizations and performance improvements introduced over the life of the ROCm 6.

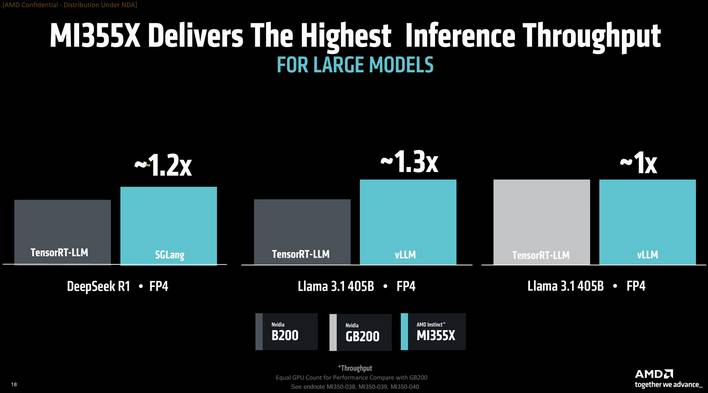

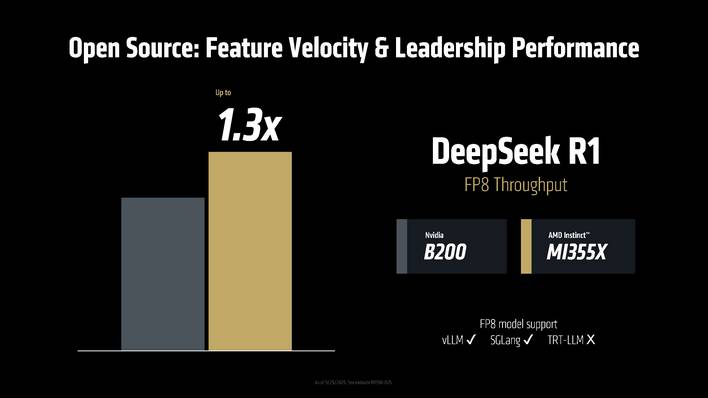

Version 7 offers roughly 3X the training performance over ROCm 6 and the open-source nature of the software affords faster optimization for emerging models. To that end, it showed the MI355X’s FP8 throughput with DeepSeek R1 outpacing the NVIDIA B200 by up to 30%.

AMD Looking To The Future

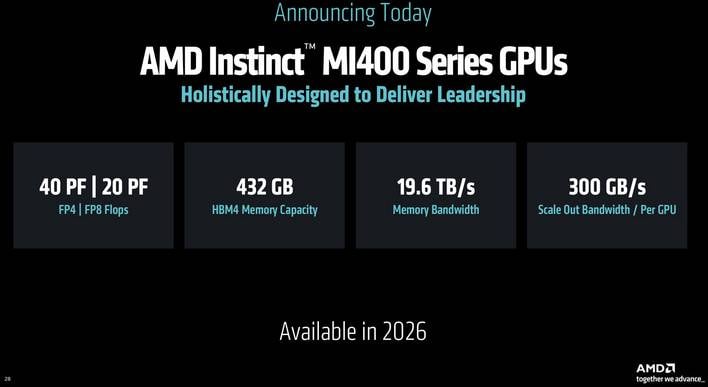

AMD isn’t standing still, of course. Dr. Su also revealed some new details regarding the next gen MI400 series, Zen 6 based EPYC “Venice” CPUs, and Pensando NICs codenamed “Vulcano”.The MI400 will be based on a totally new architecture, support new data types, and offer massive increases in bandwidth and compute. AMD is claiming up to 40PF and 20PF of FP4 and FP8 compute, respectively, a huge 432GB of HBM4 offering a whopping 19.6TB/s of peak bandwidth, and 300GB/s of scale out bandwidth.

We’ll have to wait a while for more details regarding MI400, Zen 6, and the Pensando “Vulcano” AI NIC, but rest assured, they’ll offer more compute, more bandwidth, the latest IO, and future software innovations help tie it all together.

AMD made a multitude of bold claims at its latest Advancing AI event and revealed quite a bit about its future plans and vision. Like NVIDIA, AMD’s not just making chips. The company now offers AI solutions spanning low-power clients all the way up to the rack level, and it will obviously expand its offerings moving forward. We suspect most of AMD’s disclosures this year will be well-received and look forward to what the company has in store for next year.