GALAX Reveals NVIDIA GeForce RTX 4080 12GB Has More Than Memory Cut From 16GB Model

Memory packages come in specific capacities at particular data channel widths. You can't build a GPU with a 256-bit bus and 12GB of GDDR6X memory, because each GDDR6X package is 32 bits wide. Filling a 256-bit bus takes eight packages, and 12GB does not split evenly across eight packages. It was therefore clear immediately after the announcement that the 12GB card would have a narrower memory bus (192 bits, or six packages) and with it less memory bandwidth than the 16GB card.
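To make that arithmetic concrete, here is a minimal sketch in Python. It assumes GDDR6X packages are available in 1GB and 2GB densities and ignores clamshell configurations (two packages sharing one channel); the function names and the list of candidate bus widths are ours, purely for illustration.

```python
PACKAGE_WIDTH_BITS = 32      # per-package interface width, as described above
PACKAGE_SIZES_GB = (1, 2)    # assumption: 8Gb and 16Gb GDDR6X packages only

def possible_capacities(bus_width_bits):
    """Total memory sizes (GB) reachable with one package per 32-bit channel."""
    packages = bus_width_bits // PACKAGE_WIDTH_BITS
    return sorted(packages * size for size in PACKAGE_SIZES_GB)

def bus_widths_for(capacity_gb, candidates=(128, 192, 256, 320, 384)):
    """Bus widths (bits) that can carry exactly capacity_gb of memory."""
    return [w for w in candidates if capacity_gb in possible_capacities(w)]

print(possible_capacities(256))  # [8, 16]
print(bus_widths_for(12))        # [192, 384]
print(bus_widths_for(16))        # [256]
```

Under those assumptions, 12GB only fits a 192-bit or 384-bit bus, and a card positioned below the 16GB model was never going to get the wider of the two.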

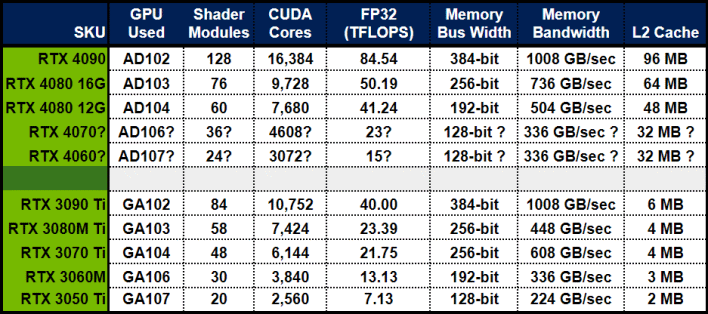

So, alright, it has a narrower memory bus than you might expect from a $900 GPU. That is fair enough when it also has less memory, right? There's still more going on beneath the cold plate than just that, though. The chart above, which originates from a Chinese-language press release put out by AIB vendor GALAX, clearly lays out the specifications for the three upcoming GPUs. Most of this was already shared by NVIDIA on its website; GALAX is offering small bumps to the boost clocks, but otherwise it's all in order.

If you look at the two RTX 4080 models, you can clearly see that they are not using the same GPU core. The RTX 4080 16GB card is based on AD103—just as rumored—and the RTX 4080 12GB card is based on the smaller AD104. If those model numbers don't mean anything to you, the short version is that "AD" refers to the Ada architecture, "10" means that it's the first revision of both Ada and these GPUs, and then the final digit tells you where in the product stack that GPU falls, with bigger numbers meaning smaller GPUs.

This situation was sussed out by leakers and enthusiasts before the GPUs even launched, but it's still disappointing to see. The RTX 4080 12GB doesn't just lose a quarter of its memory and memory bus; it also loses about a fifth of its shader cores, with 7680 "CUDA cores" versus the RTX 4080 16GB's count of 9728. It is going to be using slower memory than the RTX 4080 16GB, too. That card will get exotic 23 Gbps GDDR6X memory, setting it further apart from the RTX 4080 12GB model, which weighs in at 21 Gbps.
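For a rough sense of scale, the figures quoted above can be run through a quick calculation. This is a back-of-the-envelope sketch, not a benchmark: it assumes the 16GB card's bus is 256 bits wide and the 12GB card's is a quarter narrower at 192 bits, and it uses the usual peak-bandwidth formula of bus width times per-pin data rate divided by eight. The helper names are ours.

```python
def percent_cut(full, reduced):
    """How much smaller `reduced` is than `full`, as a percentage."""
    return 100 * (full - reduced) / full

def bandwidth_gb_per_s(bus_width_bits, data_rate_gbps):
    """Theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits * data_rate_gbps / 8

core_cut = percent_cut(9728, 7680)       # CUDA core counts from the chart
bw_16gb = bandwidth_gb_per_s(256, 23)    # assumed 256-bit bus at 23 Gbps
bw_12gb = bandwidth_gb_per_s(192, 21)    # assumed 192-bit bus at 21 Gbps

print(f"Shader cores cut: {core_cut:.1f}%")                          # 21.1%
print(f"Bandwidth: {bw_16gb:.0f} GB/s vs {bw_12gb:.0f} GB/s")        # 736 vs 504
print(f"Bandwidth cut: {percent_cut(bw_16gb, bw_12gb):.1f}%")        # 31.5%
```

By these numbers, the narrower bus and slower memory together cost the 12GB card close to a third of the 16GB card's theoretical memory bandwidth, a larger gap than the shader count alone suggests.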

The reaction of the hardware enthusiast community to the naming of the two RTX 4080 cards, as well as the overall high pricing of the Ada Lovelace GPU family, has been vitriolic in spots. The Reddit thread for the Project Beyond event on the /r/NVIDIA subreddit has over 4000 comments, and a great many of them are expressing either disappointment at the pricing or frustration with the naming scheme.

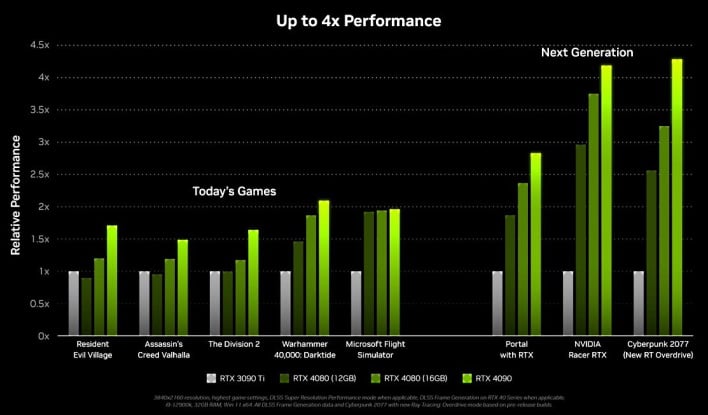

On the one hand, it's easy to point out that the GeForce RTX 3090 Ti was priced at $2,000, and the RTX 4080 12GB card is supposed to match that product in performance. From that perspective, $899 seems rather cheap. On the flip side, we recall not so long ago when the GeForce GTX 1080 was just $599 at launch, and the GeForce GTX 1070 was $379.

It's hard not to look at the pricing of current GeForce GPUs and feel disappointed. $600 used to get you a top-of-the-range graphics card, but now you're looking strictly at previous-generation products if you want to spend less than $900. Frankly, we'll be surprised if those cards are actually available for under $1000 in the first month of release.

We haven't had the chance to run our own testing on these cards yet, but glancing at the numbers above, which were provided by NVIDIA, it does look like there's a sizable performance gap between the RTX 4080 12GB and 16GB cards. In fact, it reminds us of the gap that we have seen between the GeForce RTX 3080 and RTX 3070. Or the GeForce RTX 2080 and 2070. Ahem.

As for why NVIDIA would make such a decision, we have a hunch. As much as users have complained about the price of the "x80" GeForce card going up (the RTX 4080 16GB's $1199 is roughly 70% higher than the RTX 3080's $699 MSRP), they'd surely clamor even more loudly about an "x70" card with an $899 price tag.

Of course, what actually matters at the end of the day is the real performance. If the RTX 4080 12GB is faster than anything else on the market short of its own bigger siblings, then perhaps the price is justified to a certain degree. We won't know for sure until we do our own testing, but we'll let you know what we think once we do.