Microsoft Unveils Maia 200 AI Accelerators To Boost Cloud AI Independence

At its core, Maia 200 is an AI chip from Microsoft's own silicon team, engineered specifically for running inference on large language models and other generative AI workloads. Whereas the first Maia (Maia 100) was more of an early foray, this time Microsoft is deliberately comparing its chip against the competition right up front.

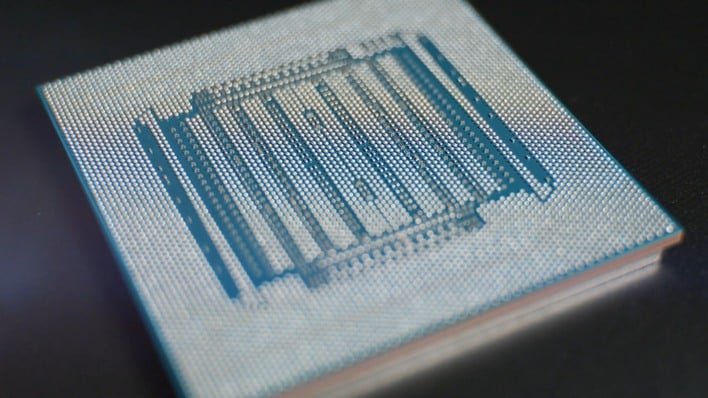

Maia 200 posts some impressive numbers for inference workloads. It's fabricated on TSMC's 3 nm process with over 140 billion transistors, and it supports low-precision FP8 and FP4 tensor compute, reportedly hitting roughly 10 petaFLOPS at FP4 and about 5 petaFLOPS at FP8. These are the formats modern large models tend to run in for inference, trading some numerical precision for speed and efficiency. Microsoft says the chip carries 216 GB of HBM3e memory delivering ~7 TB/s of bandwidth, plus 272 MB of on-chip SRAM that squeezes out more performance by keeping data close to the compute units.
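To put those headline figures in perspective, here is a rough back-of-the-envelope sketch. It uses only Microsoft's quoted numbers, assumes decimal units (1 GB = 10^9 bytes, 1 TB/s = 10^12 bytes/s), and applies the standard roofline idea: dividing peak compute by memory bandwidth gives the arithmetic intensity (FLOPs per byte fetched from HBM) a workload needs before memory stops being the bottleneck. The function names are purely illustrative, and these are idealized peak figures, not measured performance.

```python
# Back-of-the-envelope figures derived from Microsoft's quoted Maia 200 specs.
# Assumption: decimal units; all values are idealized peaks, not benchmarks.

PEAK_FLOPS = {"fp4": 10e15, "fp8": 5e15}    # ~10 PFLOPS FP4, ~5 PFLOPS FP8 (quoted)
BYTES_PER_PARAM = {"fp4": 0.5, "fp8": 1.0}  # storage size of one weight per format
HBM_BYTES = 216e9                           # 216 GB HBM3e (quoted)
HBM_BW = 7e12                               # ~7 TB/s HBM bandwidth (quoted)


def ridge_point(fmt: str) -> float:
    """FLOPs per byte of HBM traffic needed to become compute-bound (roofline)."""
    return PEAK_FLOPS[fmt] / HBM_BW


def max_params_billions(fmt: str) -> float:
    """Upper bound on weights that fit in HBM, ignoring KV cache and activations."""
    return HBM_BYTES / BYTES_PER_PARAM[fmt] / 1e9


def hbm_sweep_ms() -> float:
    """Time to read the entire HBM once at peak bandwidth, in milliseconds."""
    return HBM_BYTES / HBM_BW * 1e3


if __name__ == "__main__":
    for fmt in ("fp4", "fp8"):
        print(f"{fmt}: ridge point ~{ridge_point(fmt):.0f} FLOPs/byte, "
              f"~{max_params_billions(fmt):.0f}B params fit in HBM")
    print(f"full HBM read: ~{hbm_sweep_ms():.1f} ms")
```

Run as written, it reports a ridge point of roughly 1,430 FLOPs/byte at FP4 and about 710 at FP8, room for roughly 432 billion FP4 or 216 billion FP8 weights (before KV cache and activations), and about 31 ms to read the whole HBM once. If every weight has to be read for each generated token, as in small-batch decoding of a dense model, that last figure is a crude lower bound on per-token latency for a model that fills the memory, which is one reason on-chip SRAM that avoids repeated HBM trips is valuable.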

Azure claims approximately 30% better performance-per-dollar with Maia 200 than the previous-generation hardware in its fleet. On the system side, Maia 200 uses a high-bandwidth, Ethernet-based scale-up fabric and a custom memory and data-movement layout to keep large models fed and running efficiently across big clusters.

Microsoft is explicitly positioning Maia 200 against other cloud silicon. It claims Maia 200 delivers about 3× the FP4 performance of Amazon's Trainium 3, and that its FP8 performance is competitive with or ahead of Google's TPU v7. Those are the current-generation chips AWS and Google are pushing to challenge Nvidia. Microsoft doesn't show hard comparisons against Nvidia's GPUs in its own press release, but the underlying goal is clear: reduce long-term dependence on Nvidia for inference.

Of course, Nvidia's Blackwell family remains the gold standard for mixed AI workloads (especially combined training and inference), but the cloud AI market is big enough for multiple players, particularly right now. Microsoft's launch is part of a broader trend of cloud providers designing their own accelerators.

Microsoft says Maia 200 is already being deployed in Azure's US Central region (Iowa), with additional regions lined up, and the megacorp is previewing a Maia SDK with PyTorch support, Triton compiler tools, and optimized kernels to make it easier for devs to tune models. Naturally, if you want to play with it, you'll have to go through Azure: Maia 200 isn't sold as a discrete product and exists only inside Azure's own infrastructure.

According to The Information (via Reuters), Microsoft's new AI chip (internally code-named Braga) was originally expected in mid-2025 but slipped into 2026 after design changes reportedly demanded by OpenAI, stability issues in simulations, and higher turnover among chip designers. Delays like these aren't unusual in custom silicon; it's fiendishly hard to spec, simulate, and tape out these beasts. They do mean that Microsoft is playing catch-up in the broader cloud AI silicon race rather than sprinting well ahead of it. We'll have to wait for independent benchmarks before declaring whether Maia 200 is a major step forward, though.