Dell Announces PowerEdge AI Servers Fueled By AMD Instinct MI355X GPUs And EPYC CPUs

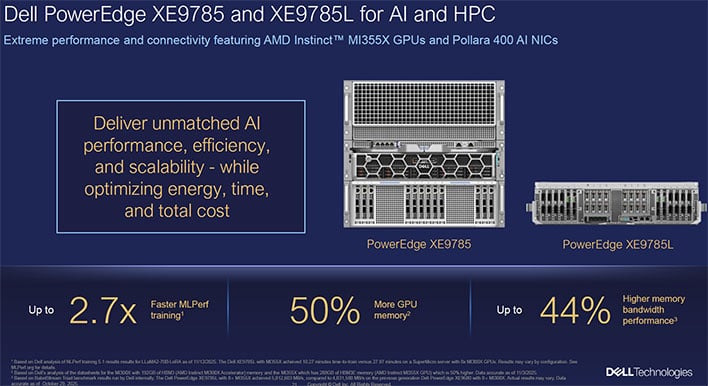

The highlights here are the PowerEdge XE9785 and especially its liquid-cooled sibling, the XE9785L. These machines pack a pair of AMD EPYC CPUs along with a full eight of AMD's Instinct MI355X GPUs per node. In the liquid-cooled variant, a node takes up just 3 rack units, so a single rack can hold a massive 128 MI355X GPUs across sixteen nodes. That gives you more theoretical performance and more memory per rack than both AMD's last-generation MI300X chips and NVIDIA's Grace Blackwell NVL72 machines.

Of course, the advantage of Grace Blackwell is NVLink, which allows all 72 GPUs in the rack to operate as a single "domain," effectively as one giant GPU. This is huge for training frontier models like the ones created by OpenAI, but it's simply not necessary for most typical AI use cases. The 288GB of HBM3E on each MI355X is more than enough to load and run inference on very, very large models, and the new chips' support for FP6 and FP4 precision means they can offer huge performance gains on quantized models. (AMD's next-generation MI450 processors are expected to integrate NVLink-style shared-domain functionality.)
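To put the 288GB figure and the low-precision formats in perspective, here is a rough back-of-the-envelope sketch of how much memory a model's weights alone occupy at each precision. It assumes weight storage is simply parameters × bits per weight ÷ 8 bytes and deliberately ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint. The 405-billion-parameter model size is a hypothetical example, and the function names are illustrative only.

```python
# Rough weight-memory estimate: parameters x bits-per-weight / 8 bytes.
# Assumption: ignores KV cache, activations, and framework overhead.
HBM_PER_GPU_GB = 288  # MI355X HBM3E capacity quoted in the article

# Bits per weight for each precision format mentioned in the article (plus FP16 for reference)
BITS = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def weight_gb(params_billions: float, precision: str) -> float:
    """Gigabytes (10^9 bytes) needed just to hold the model weights."""
    return params_billions * BITS[precision] / 8


def fits_on_one_gpu(params_billions: float, precision: str) -> bool:
    """True if the weights alone fit in a single GPU's HBM."""
    return weight_gb(params_billions, precision) <= HBM_PER_GPU_GB


if __name__ == "__main__":
    model_b = 405  # hypothetical 405B-parameter model
    for p in BITS:
        gb = weight_gb(model_b, p)
        print(f"{p}: {gb:.1f} GB of weights, fits on one GPU: {fits_on_one_gpu(model_b, p)}")
```

Under these assumptions, such a model needs about 810GB at FP16 but only about 202.5GB at FP4, which is the kind of shrinkage that lets very large models run on a single GPU or a small group of them.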

The air-cooled XE9785 is much larger at 10 rack units, but 10U is still pretty modest considering the performance on offer: 5 PFLOPS of FP8 compute per GPU, or 40 PFLOPS from a single PowerEdge XE9785, assuming power and thermal limits don't come into the picture. Both machines use AMD Pensando Pollara 400 NICs and Dell's own PowerSwitch AI fabric, including the new PowerSwitch Z9964F-ON and Z9964FL-ON, with the L indicating, you guessed it, liquid cooling for the high-speed networking hardware.
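The per-machine figure is simple multiplication, and the same arithmetic scales to a full rack. The sketch below tallies theoretical peak FP8 throughput and total HBM for one eight-GPU node and for a 128-GPU liquid-cooled rack, using the per-GPU numbers quoted in this article (5 PFLOPS FP8, 288GB HBM3E). It assumes perfectly linear scaling with no power or thermal throttling and no interconnect overhead, so treat the results as paper peaks, not delivered performance. The `GpuSpec` type and helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    fp8_pflops: float  # theoretical peak FP8 throughput per GPU, as quoted
    hbm_gb: int        # on-package HBM capacity per GPU


MI355X = GpuSpec("MI355X", fp8_pflops=5.0, hbm_gb=288)


def peak_fp8_pflops(gpu: GpuSpec, gpu_count: int) -> float:
    # Linear scaling assumption: ignores throttling and interconnect overhead
    return gpu.fp8_pflops * gpu_count


def total_hbm_tb(gpu: GpuSpec, gpu_count: int) -> float:
    # Decimal terabytes (1 TB = 1000 GB)
    return gpu.hbm_gb * gpu_count / 1000


if __name__ == "__main__":
    for label, count in [("one node", 8), ("one 128-GPU rack", 128)]:
        print(f"{label}: {peak_fp8_pflops(MI355X, count):.0f} PFLOPS FP8, "
              f"{total_hbm_tb(MI355X, count):.3f} TB HBM")
```

On these assumptions, a node works out to the article's 40 PFLOPS with about 2.3TB of HBM, and a 128-GPU rack to 640 PFLOPS with roughly 36.9TB.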

If you bleed Intel blue, Dell's also introducing the PowerEdge R770AP, an air-cooled server based on Intel's P-core-only Xeon 6 6900-series CPUs, with up to 128 Redwood Cove P-cores in each of its two sockets. These are the full-fat chips with twelve-channel memory, and they should be quite competitive with AMD's EPYC processors in a lot of tasks. If you need massive AI compute, these machines can be configured with Instinct accelerators too, which is necessary given that Intel doesn't offer anything in this performance class right now.

Besides the new server hardware, Dell's also talking up the Dell Automation Platform, which it says "delivers smarter, more automated experiences by deploying validated, optimized solutions with a secure framework." We don't know what that means, but the company says that "software-driven tools like the AI code assistant with Tabnine and agentic AI platform with Cohere North are now automated," which will apparently help you in some way. More comprehensible are the improvements to Dell's PowerScale scale-out network storage, which now offers parallel NFS (pNFS) support as well as AI-optimized data search.

These announcements come out of the annual SC25 supercomputing conference, happening all this week in lovely St. Louis, Missouri. Dell's hosting a booth there and invites folks interested in the new machines to come check them out in person at the show.