AMD Unleashes EPYC Bergamo And Genoa-X Data Center CPUs, AI-Ready Instinct MI300X GPUs

AMD's Bergamo, An EPYC Cloud Native Processor For The Data Center

Bergamo can scale up to 128 cores per socket (256 threads with SMT), which translates to 33% more cores than Genoa in the same footprint. It also uses the same SP5 socket and maintains the top-end 360W nominal TDP (400W max configurable). In fact, Bergamo is functionally identical to Genoa in many ways, with 12-channel DDR5 memory, 128 PCIe Gen 5.0 lanes, maximum 2P socket scalability, and a chiplet layout using a central IO die built on TSMC N6 and flanking Core Complex Dice (CCDs) built on TSMC's N5 fab process. Bergamo does differ from Genoa in several significant ways, though.

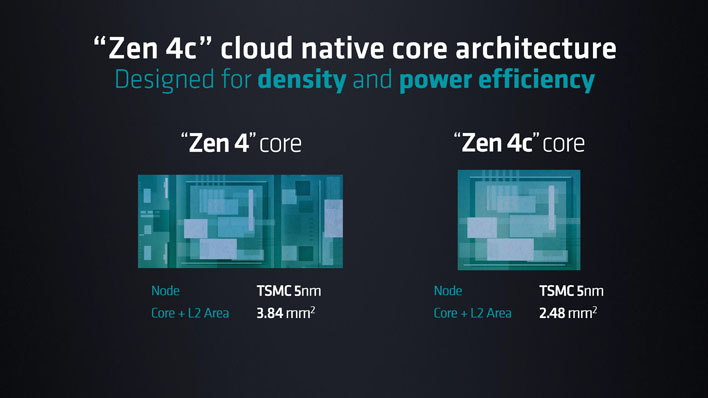

New EPYC Bergamo processors are built using a lightweight variant of the Zen 4 architecture, called Zen 4c, for throughput efficiency. In Intel terms, these can be thought of as Efficiency cores to the Zen 4 Performance core, though the comparison is not exact. Unlike Intel’s desktop Efficiency cores, which drop support for instructions like AVX-512 and have other significant changes, the AMD Zen 4c design implements the full x86 ISA of Zen 4 but occupies 35% less area and delivers better performance-per-watt.

The increased density of Zen 4c is achieved by making a few other concessions, namely reduced L3 cache and relaxed clock speeds. L1 and L2 cache allotments remain the same at 32KB and 1MB per core, respectively, but L3 cache is halved per Core Complex (CCX). Each CCX still consists of up to 8 cores, though each CCD can now fit two CCXs (16 cores) thanks to the reduced footprint. Compared to the 96-core Genoa EPYC 9654, a fully enabled 128-core Bergamo chip offers 128MB of L2 cache (vs 96MB on Genoa), but L3 is reduced to 256MB overall (vs 384MB on Genoa). While the CCDs are different, the central IO die is the same as Genoa.
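The cache totals above can be reproduced with a little arithmetic. The following is a minimal sketch, not AMD's own accounting; the per-CCX L3 sizes (32MB for Genoa, 16MB for Bergamo) are assumptions inferred from the totals quoted in the article, and the function and constant names are hypothetical.

```python
# Figures quoted in the article.
L2_PER_CORE_MB = 1
CORES_PER_CCX = 8


def cache_totals(total_cores, l3_per_ccx_mb):
    """Return (total L2 in MB, total L3 in MB) for a fully enabled part."""
    ccx_count = total_cores // CORES_PER_CCX
    return total_cores * L2_PER_CORE_MB, ccx_count * l3_per_ccx_mb


# Assumption: 32MB of L3 per CCX on Genoa, halved to 16MB per CCX on Bergamo.
genoa = cache_totals(96, 32)
bergamo = cache_totals(128, 16)

print(genoa)    # (96, 384)
print(bergamo)  # (128, 256)
```

Bergamo has more CCXs than Genoa (16 vs 12), so halving the L3 per CCX reduces the total by a third rather than by half.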

The lower clock frequency targets allowed AMD’s architects to pack the chip’s features more densely as well. High-frequency designs are more sensitive to path layouts around the chip which can increase congestion. Zen 4c therefore requires less segmentation than Zen 4, which means there is less unusable dead space within the core layout, despite using the same TSMC N5 node. Frequencies are not dramatically lower for Bergamo, but the few-hundred MHz reduction (varies per SKU) does go a long way in terms of efficiency and power consumption.

As you may have already surmised, the new CCX math means Bergamo will need fewer CCDs overall. Only eight 16-core CCDs are needed to reach 128 cores, compared with the twelve 8-core CCDs needed to reach 96 cores for Genoa. This should not have any major ramifications, but may result in lower inter-core latency on average, as hops between CCDs are less likely.
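As a quick check of the CCD math and the 33% core-count uplift mentioned earlier, here is a small sketch. The helper name is hypothetical and the numbers come straight from the article.

```python
def ccds_needed(total_cores, cores_per_ccd):
    """Number of CCDs required to reach a core count (ceiling division)."""
    return -(-total_cores // cores_per_ccd)


bergamo_ccds = ccds_needed(128, 16)
genoa_ccds = ccds_needed(96, 8)

# Core-count uplift of Bergamo over Genoa, as a whole-number percentage.
uplift_pct = round((128 - 96) / 96 * 100)

print(bergamo_ccds, genoa_ccds, uplift_pct)  # 8 12 33
```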

AMD says Bergamo allows up to 2.1x the container density per server compared to Intel's top Xeon Platinum SKU and up to double the Java operations per watt. These kinds of metrics are very important for hyperscale deployments, where running more instances efficiently per rack can significantly reduce total cost of ownership (TCO).

AMD also took aim at Arm-based Ampere. In one comparison to a dual-socket system with Ampere Altra Max M128-30 processors, AMD says its 2P EPYC 9754S platform requires 58% fewer servers to deliver the same number of NGINX requests per second. According to AMD, this translates to 39% less power consumption, 39% lower operational expenses, and 28% lower total cost of ownership (TCO).

The Bergamo product stack will consist of just three SKUs, all focused on exceptionally high core counts. The top-end AMD EPYC 9754 features the full outfit of 128 cores and 256 threads. There is an EPYC 9754S variant which disables SMT entirely. This can be preferable for certain customers who need core exclusivity for either security or performance consistency reasons. The remaining EPYC 9734 has one core per CCX disabled (16 total, leaving 112 cores) and runs at slightly lower power.

Genoa-X With 3D V-Cache For Technical Computing Workloads

If you have already read through our Genoa coverage then you already know most of the story behind Genoa-X. These chips comprise up to 96 of the same Zen 4 cores (not Zen 4c), but stack additional 3D V-Cache on top for up to 1.1GB of total L3 cache, in the same vein as AMD Ryzen X3D processors for desktops or last-gen EPYC Milan-X CPUs.

The 3D V-Cache does not tangibly benefit most general-purpose workloads, but it can significantly accelerate technical computing workloads including computational fluid dynamics (CFD), weather simulation, financial modeling, and other similarly memory-constrained workloads like databases.

As an example of 3D V-Cache's value, AMD presented figures showing up to 73% faster Register Transfer Level (RTL) verification in Synopsys VCS software. VCS is an Electronic Design Automation (EDA) program used in chip design, and RTL verification is an exceptionally compute-intensive process.

Genoa-X platforms will be available from various OEMs starting next quarter. The lineup consists of the 96-core EPYC 9684X, 32-core EPYC 9384X, and 16-core EPYC 9184X. In a reversal from what we see in desktop X3D chips, these SKUs all feature clock speeds that are the same as, or higher than, their Genoa counterparts. Case in point, the 16-core EPYC 9124 can reach a maximum of 3.7GHz while the EPYC 9184X can achieve 4.2GHz, although its default TDP is significantly higher at 320W vs 200W.

AMD capped off its fourth generation EPYC presentation with another teaser for Siena, which is coming later this year. Siena is designed for telco and edge uses in a cost-optimized package, but we will have to wait for more information.

AMD Instinct MI300A And MI300X Accelerators For AI Workloads

Moving on to AI, AMD is targeting both training and inference workloads across its ecosystem: the data center, edge, and endpoints. Any such approach necessitates both hardware and software advancements, and AMD's is no exception.

Starting with software, AMD is continuously optimizing its ROCm software stack. Now in its fifth generation, ROCm is a set of libraries, compilers, tools, and a runtime with optimizations for AI and HPC. It works with frameworks like PyTorch and TensorFlow to lower the barrier to getting started with AI workloads, ease migration of existing workloads, and accelerate them further.

Generative AI and LLMs require a significant increase in both compute and memory capabilities. AMD previously showed its Instinct MI300A accelerator at CES, billed as the first data center APU accelerator for AI and HPC. It joins CDNA 3 GPU chiplets with three Zen 4 chiplets (24 Genoa cores) and 128GB of unified HBM3 memory that is accessible to both the GPU and CPU sides of the chip. Dr. Lisa Su says it delivers 8x more performance and 5x better efficiency than the prior-gen Instinct MI250X accelerator.

It is now joined by the AMD Instinct MI300X. This new chip replaces the three Zen 4 chiplets with two more CDNA 3 chiplets for an all-GPU approach. It also expands memory capacity to 192GB of HBM3 with 5.2TB/s of memory bandwidth and 896 GB/s of peak Infinity Fabric bandwidth. This further optimizes the approach for LLMs and AI.

To put this in context against the competition, AMD says the MI300X offers 2.4x more HBM density than NVIDIA’s H100 GPU at 1.6x the bandwidth. Memory performance is not the only factor in play in AI applications of course, though it can alleviate a significant bottleneck. AMD says this allows it to run enormous AI models entirely in-memory, which vastly improves performance and reduces TCO as fewer GPUs are needed for the same results.
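AMD's ratios line up with simple division if we assume the comparison point is the H100 SXM part. That baseline is our assumption and is not stated in the article: 80GB of HBM3 at roughly 3.35TB/s. A minimal sketch:

```python
# MI300X figures quoted in the article.
MI300X_HBM_GB = 192
MI300X_BW_TBPS = 5.2

# Assumption (not from the article): H100 SXM with 80GB HBM3 at ~3.35TB/s.
H100_HBM_GB = 80
H100_BW_TBPS = 3.35

capacity_ratio = MI300X_HBM_GB / H100_HBM_GB
bandwidth_ratio = MI300X_BW_TBPS / H100_BW_TBPS

print(f"{capacity_ratio:.1f}x capacity, {bandwidth_ratio:.1f}x bandwidth")
# prints "2.4x capacity, 1.6x bandwidth"
```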

AMD demonstrated the Falcon-40B model from Hugging Face running on a single Instinct MI300X GPU accelerator. It was able to compose a poem about San Francisco in seconds, certainly many times faster than I could. This capability is largely credited to being able to fit the model entirely in memory, and AMD says it can potentially fit models up to 80 billion parameters on a single accelerator.
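To see roughly why these model sizes fit, here is a back-of-the-envelope sketch. AMD did not state the numeric precision used, so the 2 bytes per parameter (16-bit weights) is our assumption, and the estimate ignores KV cache, activations, and framework overhead. The function names are hypothetical.

```python
BYTES_PER_PARAM_FP16 = 2  # assumption: 16-bit weights, no quantization


def weights_gb(params_billions, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def fits(params_billions, hbm_gb=192):
    """True if the weights alone fit in HBM; ignores KV cache and activations."""
    return weights_gb(params_billions) <= hbm_gb


print(weights_gb(40), fits(40))  # 80 True
print(weights_gb(80), fits(80))  # 160 True
```

Under this assumption, an 80-billion-parameter model would leave about 32GB of the MI300X's 192GB for everything other than weights.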

The AMD Instinct Platform groups eight Instinct MI300X modules together with a combined 1.5TB of HBM3 memory. The platform is built on Open Compute Project (OCP) infrastructure, using industry standards to streamline deployment, particularly at scale. AMD expects the Instinct Platform to drive rapid adoption, particularly among organizations focused on open source and open standards, like Hugging Face.

The AMD Instinct MI300A is sampling to customers now, and the Instinct MI300X and Instinct Platform will begin sampling in Q3. AMD anticipates production will ramp up in Q4.