Test-Driving NVIDIA's GRID GPU Cloud Computing

Screen resolution is slightly different, but you can't tell which of these is running remotely and which is local to my own rig just by looking.

That's not to say every comparison is perfect. The H.264 compression that NVIDIA is using for this evaluation is occasionally prone to errors, particularly when the 10 Mbit limit is hit. This Katy Perry playback, for example, shows compression artifacts on the remote server that aren't present on the local version.

We also took the system through a set of web browser benchmarks, opening pages remotely vs locally and timing the results. Performance here was more erratic, but the web page loads on GRID were typically 1.0 - 1.5s slower than on a local system (I visited websites I don't normally read to ensure my own browser wasn't pulling them out of cache). That's noticeable, but bearable -- and again, this is an evaluation test, not the production environment.

3D Applications? Welcome to "Just Works"

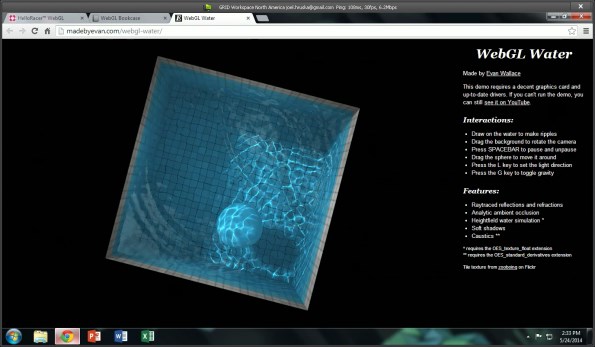

Meanwhile, the kinds of 3D applications that normally wouldn't work at all over a standard VDI work just beautifully here. NVIDIA's Ira face technology demo and A New Dawn sequences are both rendered at local quality (apart from occasional issues with the H.264 encoder and the 30 fps frame limit).
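To put the encoder's job in perspective, here is a rough back-of-the-envelope sketch of the per-frame budget implied by the 10Mbit cap and the 30 fps limit. It assumes the cap means 10 megabits per second, and the 1920x1080, 24-bit frame is a hypothetical example rather than the resolution NVIDIA actually streams; the function names are my own.

```python
# Rough per-frame bit budget for a capped video stream.
# Assumption: the "10Mbit limit" is 10 megabits per second.

def bits_per_frame(bitrate_mbit_per_s: float, fps: float) -> float:
    """Average number of bits the encoder can spend on each frame."""
    return bitrate_mbit_per_s * 1_000_000 / fps


def compression_ratio(width: int, height: int, bits_per_pixel: int,
                      bitrate_mbit_per_s: float, fps: float) -> float:
    """How much smaller than a raw frame the average encoded frame must be."""
    raw_bits = width * height * bits_per_pixel
    return raw_bits / bits_per_frame(bitrate_mbit_per_s, fps)


if __name__ == "__main__":
    budget = bits_per_frame(10, 30)
    print(f"{budget / 8 / 1000:.1f} kB per frame")

    # Hypothetical 1920x1080 desktop at 24 bits per pixel.
    ratio = compression_ratio(1920, 1080, 24, 10, 30)
    print(f"roughly {ratio:.0f}:1 compression needed")
```

Under those assumptions the encoder has only a few tens of kilobytes per frame on average, which is consistent with artifacts showing up when a scene changes quickly.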

It's a bit anticlimactic to layer on screenshots of applications simply doing what they're supposed to do, but that's what NVIDIA has delivered here. It's clear that the entire project is a demo -- it can take 4-5 minutes to boot up the test instance, there are limits on the display resolution, and the latency from NVIDIA's servers to my rural New York home clearly leaves something to be desired. When we talked to NVIDIA, the company said it set this capability up to allow users to test drive its vGPU technology and put eyes on the implementation without shelling out for a massive new server box.

Judging by what we've seen, that demo strategy will work. Home users and customers with light business needs aren't likely to move to virtualized environments, but this could be a real benefit for 3D-intensive applications where centralized management and deployment are a major advantage. Virtualizing these workloads has another fringe benefit -- you can put much louder, more powerful equipment in a remote installation than you can install right next to someone's workspace. This is an impressive debut for virtualization technology -- more impressive than I honestly expected. I don't expect it to reinvent the industry overnight, but this kind of remote deployment capability could be a strong selling point for corporations whose highly mobile workforces need regular access to sophisticated workstation software, applications, models and data sets.