NTSB Tells Tesla To Tackle Basic Autopilot Safety And Stop Misleading With Full Self Driving Claims

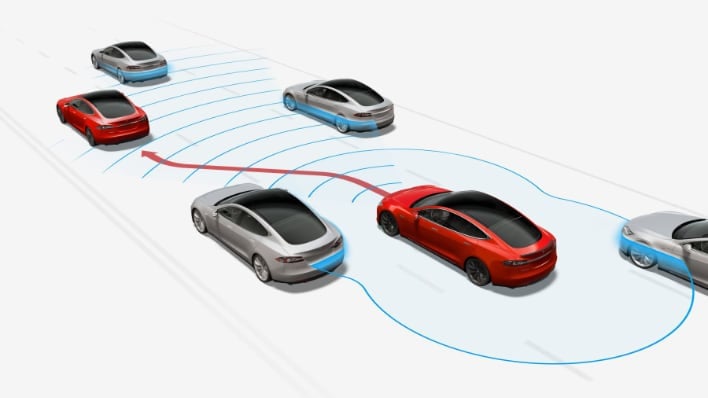

The latter is a $10,000 option and encompasses several features, including the ability to navigate from highway on-ramp to off-ramp, make automated lane changes, automatically pull into a parking space, and more. To take things a step further, the Full Self Driving Beta allows a Tesla to navigate city streets on its own in addition to the highway.

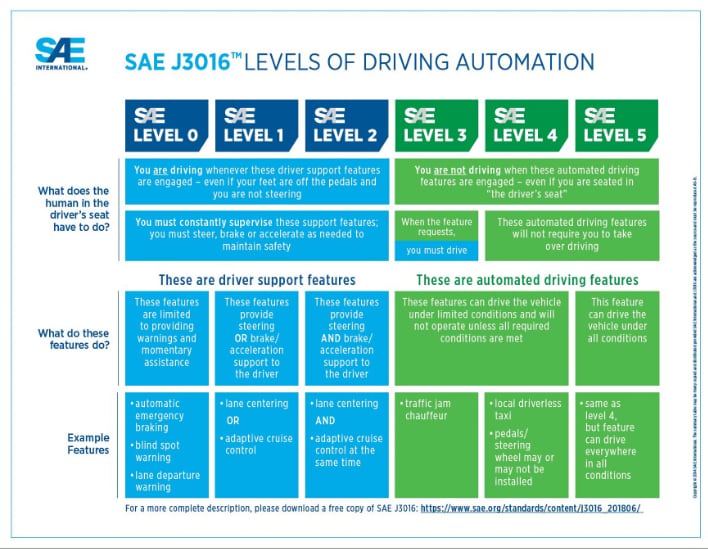

Although Tesla labels its $10,000 option Full Self Driving, the head of the National Transportation Safety Board (NTSB), Jennifer Homendy, thinks that the company is putting the cart before the horse. For starters, even Tesla's advanced Full Self-Driving feature is "only" a Level 2 autonomous driving system. At that level, according to the Society of Automotive Engineers (SAE), "You must constantly supervise these support features; you must steer, brake or accelerate as needed to maintain safety."

For this reason, Homendy chided Tesla, stating in a WSJ interview that it is "misleading and irresponsible" for the company to use the term Full Self Driving. She went on to add that Tesla has "clearly misled numerous people to misuse and abuse [its] technology."

heres that video of the tesla going rogue that twitter keeps removing pic.twitter.com/jBIa2wSwEj

— shoe (@shoe0nhead) September 16, 2021

Beyond that, Homendy added that Tesla's methods for testing and developing its autonomous driving systems are far from ideal. "Basic safety issues have to be addressed before they're then expanding it to other city streets and other areas." She also expressed frustration with the fact that Tesla customers are being used as beta testers for autonomous software on public streets and highways. Beta testing software that takes even limited control away from the driver on public roadways has the potential to be a lot more dangerous than a consumer downloading a beta copy of iOS 15 or Android 12 on their smartphone.

In keeping with the NTSB chief's comments on safety issues that need to be addressed, the National Highway Traffic Safety Administration (NHTSA) is already investigating Tesla over nearly a dozen crashes in which vehicles with Autopilot engaged struck stationary emergency vehicles.

"With the ADAS active, the driver still holds primary responsibility for Object and Event Detection and Response (OEDR), e.g., identification of obstacles in the roadway or adverse maneuvers by neighboring vehicles during the Dynamic Driving Task (DDT)," wrote the NHTSA last month. "Most incidents took place after dark and the crash scenes encountered included scene control measures such as first responder vehicle lights, flares, an illuminated arrow board, and road cones. The involved subject vehicles were all confirmed to have been engaged in either Autopilot or Traffic Aware Cruise Control during the approach to the crashes."

Both the NTSB and the NHTSA are looking into future regulations that could curb the ability of Tesla (and other auto manufacturers) to market failure-prone systems to customers. "Doing investigations after the fact, that's a tombstone mentality," said Homendy. "You can proactively address potential future crashes and future deaths by taking action, by issuing regulations, performance standards aimed at saving lives."