NVIDIA and Meta have formed a multi-year partnership that represents a total platform commitment spanning CPU and GPU hardware, networking solutions, and NVIDIA's Confidential Computing platform for end-to-end encryption of data in a secure enclave. The sheer size of the deal—unconfirmed scuttlebutt puts it north of $100 billion based on Meta's CapEx guidance jumping from $72 billion last year to $135 billion in 2026, with NVIDIA accounting for the "vast majority" of that expansion—is notable in and of itself, but there are major implications that extend beyond just the financial aspect.

On the surface, this is about Meta building a massive AI infrastructure with hyperscale data centers optimized for training and inference. Meta is looking to execute on its long-term AI roadmap and NVIDIA is playing a key role in that effort.

Digging beneath the surface, however, reveals a move that underscores NVIDIA's ability to provide a total platform solution that extends beyond just its AI accelerators. This is a total platform commitment.

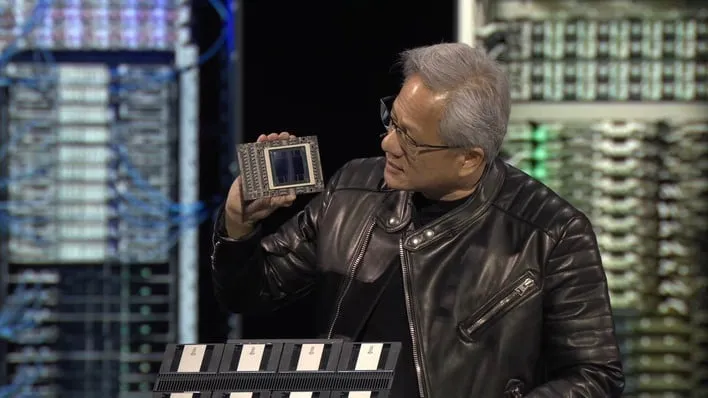

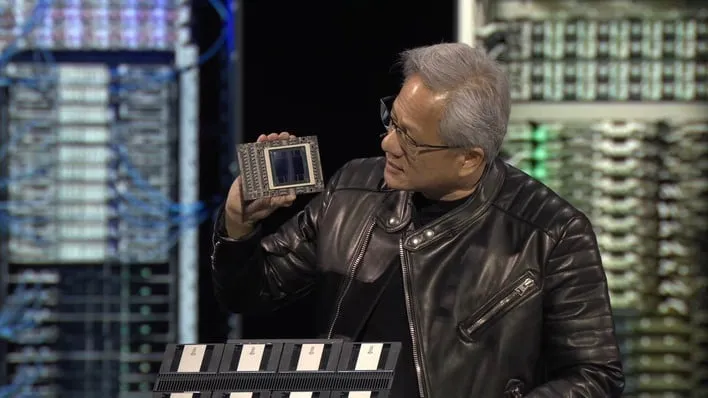

"No one deploys AI at Meta’s scale — integrating frontier research with industrial-scale infrastructure to power the world’s largest personalization and recommendation systems for billions of users," said Jensen Huang, founder and CEO of NVIDIA. "Through deep codesign across CPUs, GPUs, networking and software, we are bringing the full NVIDIA platform to Meta’s researchers and engineers as they build the foundation for the next AI frontier."

That's not to say NVIDIA's GPUs are playing an insignificant role here. On the contrary, Meta will deploy millions of Blackwell Ultra and Rubin GPUs for its AI infrastructure build out. But that's only part of the complete platform that Meta is tapping into.

NVIDIA's Arm-based Grace CPUs (and Vera CPUs later down the line) and Spectrum-X Ethernet switches for Meta's Facebook Open Switching System platform are also part of the deal. According to NVIDIA, this deal represents the first large-scale Grace-only deployment. NVIDIA is now competing directly for data center compute instead of just on the accelerator side, which puts pressure on x86 incumbents Intel and AMD, as well as Arm and RISC-V players. It can't be overstated that Grace and Vera are displacing x86 in this specific scenario, and you can bet that other companies outside of Meta are taking note.

"We’re excited to expand our partnership with NVIDIA to build leading-edge clusters using their Vera Rubin platform to deliver personal superintelligence to everyone in the world," said Mark Zuckerberg, founder and CEO of Meta.

The Confidential Computing angle shouldn't be overlooked, either. Meta is adopting NVIDIA's Confidential Computing for WhatsApp private processing for real-time inference on user data while maintaining data confidentiality and integrity. And in time, this will expand beyond WhatsApp to "emerging use cases across Meta's portfolio" to support privacy-enhanced AI at scale.

So yes, the expanded partnership will see Meta build a massive AI infrastructure with NVIDIA's hardware, but the real story is much deeper than that.