NVIDIA To Fuel Metaverse, Cars, And Robots With Powerful Omniverse Updates, Thor Superchip, Grace CPU And More

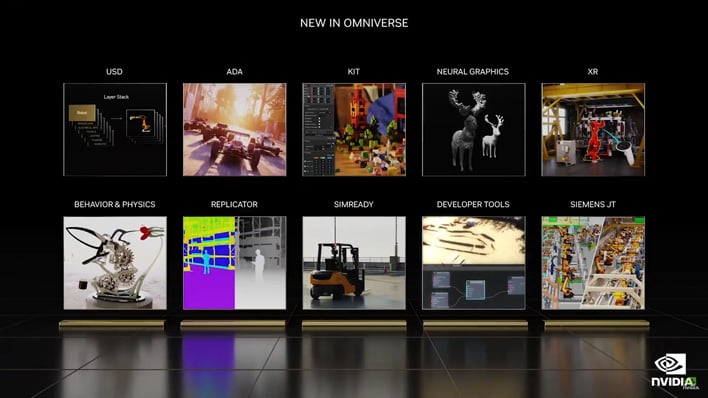

Huang’s talk flowed from introducing the Ada Lovelace gaming GPUs to the topic of fully simulated worlds in Omniverse, a digital platform for industries that will enable the Metaverse. Jensen explained how diverse industries can use Omniverse for everything from design and development collaboration to marketing and delivery, all in a single pipeline.

The Omniverse is a simulation engine that works with objects described in Pixar’s Universal Scene Description (USD) format. Described as “the HTML of 3D”, USD provides a cohesive way to create, manage, and parse the immense amounts of 3D data involved, all in real time. The Omniverse serves as a platform on which companies build and maintain their own diverse set of applications.
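
To make the “HTML of 3D” comparison concrete: USD scenes can be stored in a plain-text form (`.usda`) as a hierarchy of named prims with typed attributes, much like nested HTML elements. The sketch below assumes a simple nested-dict scene layout and uses hypothetical helpers (`prim_to_usda`, `scene_to_usda`) to emit that text. It is not Pixar’s `pxr` API and covers only a tiny subset of the format.

```python
# Hypothetical helpers that serialize a nested scene description into
# USD's human-readable .usda text form. This is NOT Pixar's pxr API;
# it only illustrates how USD arranges prims and attributes in a hierarchy.


def prim_to_usda(name: str, prim: dict, indent: int = 0) -> list:
    """Render one prim (and, recursively, its children) as .usda lines."""
    pad = "    " * indent
    lines = [f'{pad}def {prim["type"]} "{name}"', f"{pad}{{"]
    # Typed attributes, e.g. {"radius": ("double", 0.35)}
    for attr, (attr_type, value) in prim.get("attrs", {}).items():
        lines.append(f"{pad}    {attr_type} {attr} = {value}")
    # Child prims nest inside the parent's braces, like nested HTML elements
    for child_name, child in prim.get("children", {}).items():
        lines.extend(prim_to_usda(child_name, child, indent + 1))
    lines.append(f"{pad}}}")
    return lines


def scene_to_usda(root_name: str, root: dict) -> str:
    """Prepend the .usda header and join the lines of the root prim."""
    return "\n".join(["#usda 1.0", ""] + prim_to_usda(root_name, root)) + "\n"


# A toy scene: a transform ("Car") containing one cylinder ("Wheel").
scene = {
    "type": "Xform",
    "children": {
        "Wheel": {
            "type": "Cylinder",
            "attrs": {"radius": ("double", 0.35), "height": ("double", 0.25)},
        },
    },
}

print(scene_to_usda("Car", scene))
```

The printed text is a two-level hierarchy: an `Xform` prim named `Car` enclosing a `Cylinder` prim named `Wheel` with two `double` attributes. Real USD files add layers, references, and metadata on top of this basic structure.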

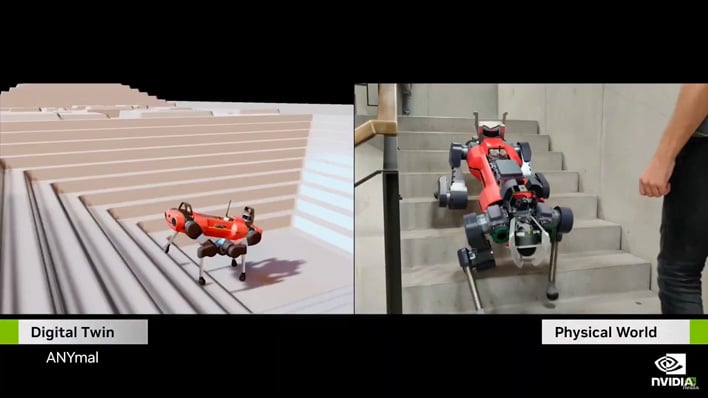

Many of these applications use Omniverse to create “digital twins” of the real world. Digital twins can scale from molecules for pharmaceutical research, to objects for prototyping, to entire municipal landscapes for tuning autonomous driving behaviors. Jensen stated that in the near future, everything that gets created will have a digital twin at some point.

This vision becomes more feasible through NVIDIA’s new Omniverse Cloud service. The Omniverse Cloud is a suite of software and hardware distributed across datacenters around the world. It links with Omniverse applications running locally on edge devices as needed. Because the Omniverse Cloud service offloads rendering, it allows developers to stream immersive and accurate experiences to a broad range of endpoint devices.

Huang cited Rimac Automobili, which is using the Omniverse Cloud to build a car configurator application. The configurator lets shoppers select options and view the changes in real time, rendered directly from the actual “ground truth” engineering files, without having to pre-render every possible combination of options. This ties design, engineering, and marketing pipelines to a single source of data, with Omniverse Nucleus Cloud serving as the database engine.
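
The case for rendering on demand is largely arithmetic: the number of images needed to pre-render a configurator grows multiplicatively with every option. The sketch below uses invented option counts (not Rimac’s actual catalogue) and an assumed number of fixed camera views, purely to illustrate that growth.

```python
from math import prod

# Hypothetical option counts for an imaginary car configurator;
# these are illustrative numbers, not Rimac's real option list.
OPTIONS = {"paint": 12, "wheels": 6, "interior": 4, "brake_calipers": 5}
CAMERA_VIEWS = 8  # assumed number of fixed viewpoints per configuration


def prerendered_images(options: dict, views: int) -> int:
    """Images required to pre-render every option combination from every view."""
    return prod(options.values()) * views


baseline = prerendered_images(OPTIONS, CAMERA_VIEWS)
# Adding a single new option with three choices triples the total.
with_roof = prerendered_images({**OPTIONS, "roof": 3}, CAMERA_VIEWS)

print(f"pre-rendered images: {baseline:,}")          # 11,520
print(f"after adding a roof option: {with_roof:,}")  # 34,560
```

A live renderer, by contrast, only has to draw the one configuration the shopper is currently looking at, no matter how many options the catalogue contains.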

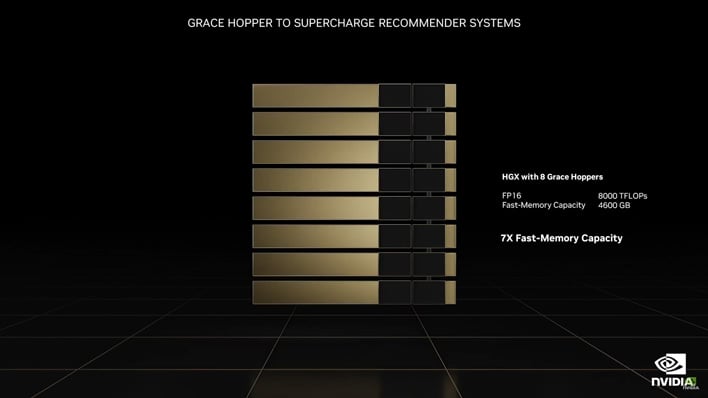

The Omniverse Cloud is built on NVIDIA’s HGX and OVX platforms. NVIDIA HGX is tuned for AI workloads, while NVIDIA OVX is geared toward graphics and real-world simulation.

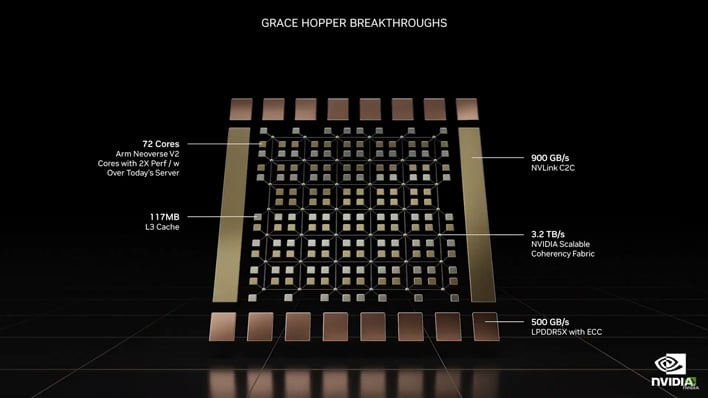

The HGX platform is bolstered by the new Grace CPU and Grace Hopper Superchips. As disclosed by Arm, the Grace CPU is built from 144 Neoverse V2 cores and offers a blistering 1 terabyte per second of memory bandwidth. As a result, the Grace CPU is well suited to high-performance computing (HPC) workloads.

The Grace Hopper Superchip pairs a Grace CPU with a Hopper GPU, linked by NVLink-C2C, to tackle the most complex AI workloads. This coherent interconnect runs at around 900 GB/s, roughly seven times the bandwidth of PCIe Gen 5, and in total the design provides 30x more aggregate system memory bandwidth than the preceding DGX A100.
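
The “seven times” figure can be sanity-checked with back-of-the-envelope arithmetic. This sketch assumes a PCIe Gen 5 x16 link (32 GT/s per lane), counts both directions, and ignores 128b/130b encoding overhead. It also assumes the 900 GB/s NVLink-C2C figure is a total across both directions.

```python
# Back-of-the-envelope comparison of NVLink-C2C and PCIe Gen 5 bandwidth.
# Assumptions: a full x16 PCIe link, raw signalling rate (no 128b/130b
# encoding overhead), and both figures counted as bidirectional totals.
PCIE5_GT_PER_LANE = 32   # PCIe Gen 5 signalling rate per lane, GT/s
PCIE_LANES = 16          # assumed x16 link
NVLINK_C2C_GB_S = 900    # bandwidth figure quoted for NVLink-C2C


def pcie_bidirectional_gb_s(gt_per_lane: float, lanes: int) -> float:
    """Raw bidirectional bandwidth in GB/s (one bit per transfer per lane)."""
    per_direction = gt_per_lane * lanes / 8  # bits -> bytes
    return 2 * per_direction


pcie = pcie_bidirectional_gb_s(PCIE5_GT_PER_LANE, PCIE_LANES)
ratio = NVLINK_C2C_GB_S / pcie
print(f"PCIe Gen 5 x16: {pcie:.0f} GB/s; NVLink-C2C is {ratio:.1f}x faster")
```

Accounting for encoding overhead lowers the PCIe figure slightly (to about 126 GB/s), which nudges the ratio just above seven.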

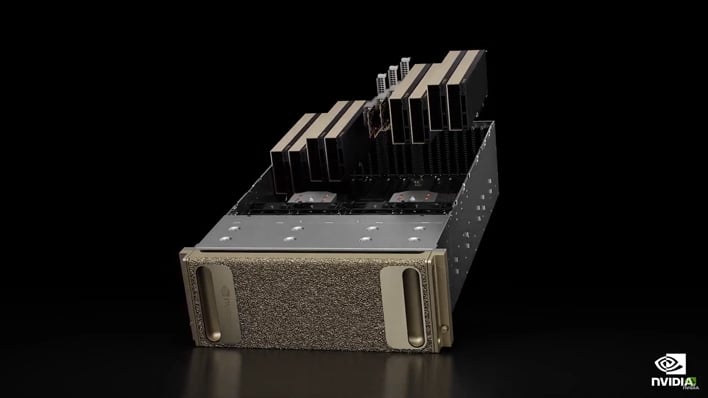

By contrast, the NVIDIA OVX system uses new Ada Lovelace L40 GPUs to tackle digital twin workloads. The base unit is the NVIDIA OVX server, which combines up to eight L40 GPUs in a 4U rack chassis. Four to 16 OVX servers can be aggregated into an OVX POD with an “optimized network fabric and storage architecture” to scale capabilities. At the top end, the 32-server OVX SuperPOD handles massive simulations in real time with low latency.
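
Scaling from a single server to a SuperPOD is simple multiplication. The sketch below assumes every OVX server is fully populated with eight L40 GPUs at 48 GB each; the helper `ovx_totals` is hypothetical, and the memory total is a sum across separate GPUs rather than one shared pool.

```python
# Hypothetical sizing helper for OVX configurations.
# Assumes each server is fully populated with eight L40 GPUs.
GPUS_PER_SERVER = 8
L40_MEMORY_GB = 48


def ovx_totals(servers: int) -> tuple:
    """Return (GPU count, summed GPU memory in GB) for a given server count."""
    gpus = servers * GPUS_PER_SERVER
    return gpus, gpus * L40_MEMORY_GB


for label, servers in [("OVX server", 1), ("smallest OVX POD", 4),
                       ("largest OVX POD", 16), ("OVX SuperPOD", 32)]:
    gpus, mem_gb = ovx_totals(servers)
    print(f"{label}: {servers} server(s), {gpus} GPUs, {mem_gb:,} GB GPU memory")
```

Under these assumptions a full SuperPOD comes to 256 L40 GPUs and 12,288 GB of combined GPU memory.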

The L40 GPU offers a whopping 48 GB of ECC-enabled GDDR6 memory to handle large data sets. NVIDIA states that its CUDA cores support 16-bit brain floating point (BF16) math for mixed-precision workloads. The L40 also integrates third-generation RT cores and fourth-generation Tensor cores to chew through design and data science models alike. Of course, this tier also grants access to NVIDIA virtual GPU software to support more powerful remote virtual workstations.
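
BF16 keeps float32’s 8-bit exponent but only 7 explicit mantissa bits, so it covers roughly the same numeric range as float32 with far less precision. That trade-off is why it suits mixed-precision work, where values are stored in 16 bits and results are typically accumulated at higher precision. Below is a minimal pure-Python sketch of float32-to-BF16 rounding (round-to-nearest-even, finite inputs only); the helper names are hypothetical and Python’s 64-bit floats stand in for hardware registers.

```python
import struct


def float_to_bf16_bits(x: float) -> int:
    """Round a finite value to BF16 (nearest-even) and return its 16-bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # float32 bit pattern
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)          # ties round to even
    return ((bits + rounding_bias) >> 16) & 0xFFFF       # keep the top 16 bits


def bf16_bits_to_float(b: int) -> float:
    """Widen a BF16 pattern back to a float by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


def to_bf16(x: float) -> float:
    """Value of x after a round trip through BF16."""
    return bf16_bits_to_float(float_to_bf16_bits(x))


print(to_bf16(3.14159265))  # 3.140625: only about 2-3 significant digits survive
print(to_bf16(1e38))        # still finite, thanks to float32's exponent range
```

For comparison, IEEE half precision (FP16) tops out at 65504, so a value like 1e38 would overflow there while BF16 keeps it within a fraction of a percent.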

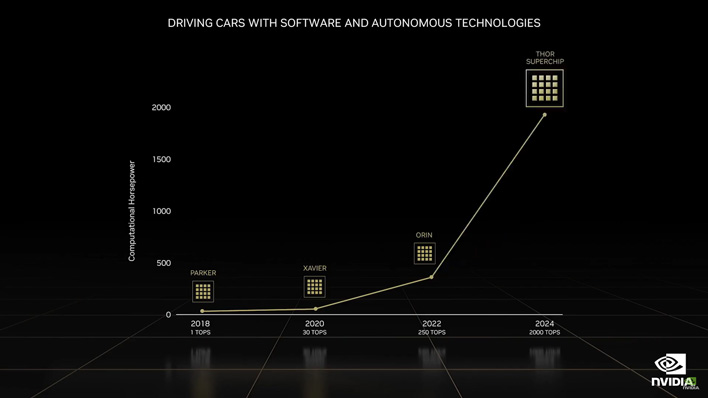

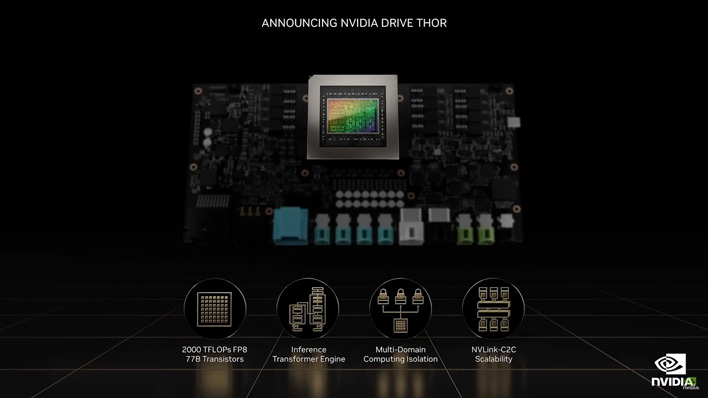

Finally, NVIDIA's CEO unveiled the new Drive Thor platform to serve as a centralized computer for virtually all automotive needs. With its multi-domain computing support, it combines the functionality of several traditionally discrete chips onto a single SoC. NVIDIA says this simplifies autonomous vehicle design, lowers costs, and reduces weight and overall complexity.

The Drive Thor is a potent processor that supplants the previously planned 1,000-TOPS Atlan, doubling Atlan’s projected compute power to deliver 2,000 TOPS. The Drive Thor also brings a number of other capabilities and safety features. It houses an inference transformer engine for the complex AI workloads that autonomous driving requires. And its NVLink-C2C interconnect allows the system to run multiple operating systems simultaneously, thanks to its ability to balance and distribute work across the high-speed interface with minimal overhead.

NVIDIA reports robust industry support for its Omniverse ecosystem. The company says there are already 150 connectors to the Omniverse, and it supports open software platforms from numerous companies worldwide. The Omniverse Cloud operates, in effect, like a content delivery network (CDN) for graphics, which NVIDIA has dubbed a Graphics Delivery Network (GDN). The Omniverse Cloud Infrastructure is available now as a SaaS offering on AWS and will come to additional cloud platforms in the near future.