NVIDIA Omniverse Replicator And AI Avatars Aim To Help Us Pioneer The Metaverse

NVIDIA's Omniverse isn't exactly like the metaverse that Zuck talks about, though. Rather than being structured like a video game with a player character that you control, Omniverse is more akin to professional collaboration and screen-sharing, but without the screen. It's like cooperatively editing content, except the content is a 3D space chock-full of AI and machine-learning elements, described to the virtual environment in Pixar's Universal Scene Description (USD) format.

Next up was the DRIVE Concierge AI platform. If you've ever wished you could have Alexa or Cortana in your car, well, that's the idea. Finally, Huang demonstrated Project Maxine, an avatar intended to be used alongside video conferencing applications. Maxine cleans up background noise in the remote caller's chat, and then translates her English speech into German, French, and Spanish with the same voice and intonation.

All of the demonstrations come complete with dynamically animated 3D avatars, powered by NVIDIA Video2Face and Audio2Face, AI-driven facial animation and rendering packages. It's not hard to imagine how avatars like these, with a little more development, could quickly become a part of everyday life.

One of the biggest deciding factors in the quality of an AI is the size of its training set. Being able to train robots in a physically simulated virtual space that very closely matches reality will allow researchers and engineers to build high-quality AIs with consistent performance much more quickly. In fact, NVIDIA says it is already using this technique for its autonomous vehicle neural networks, and that it "has saved months of development time."

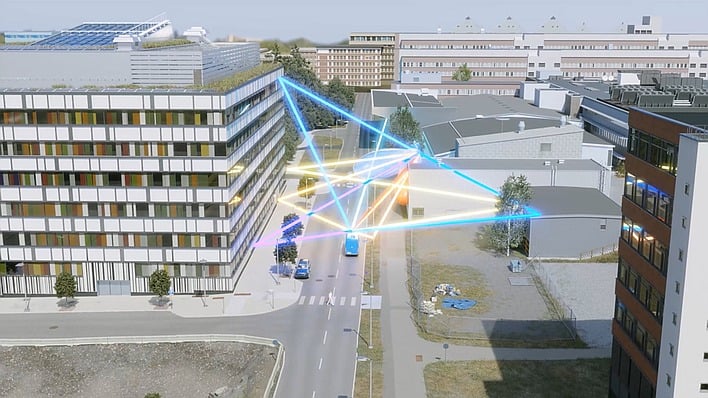

Replicator currently comes in two forms: one for NVIDIA DRIVE Sim, intended for training autonomous vehicles, and the other for Isaac Sim, intended for training "manipulation robots." As NVIDIA notes, besides allowing AI to learn about the environment in a simulated space, the virtual nature of the "digital twin" allows AIs to test and learn about "rare or dangerous" conditions that can't be tested in reality, like driving partially out of your lane.
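
To make the idea of synthetic training data a little more concrete, here is a minimal Python sketch of how a generator might deliberately oversample a rare condition such as a car drifting partly out of its lane. It does not use Omniverse Replicator or any NVIDIA API; the `Scenario` class, the `generate_dataset` function, the lane width, and the 25 percent rare-case share are all hypothetical values chosen purely for illustration.

```python
import random
from dataclasses import dataclass

# Assumed half-width of a lane in meters (illustrative value only).
LANE_HALF_WIDTH_M = 1.8


@dataclass
class Scenario:
    """One randomized driving scenario. All fields are hypothetical."""
    lane_offset_m: float  # signed lateral distance from the lane center
    rain: bool            # whether the scene is rendered with rain
    rare: bool            # label: True when the car is partly out of its lane


def sample_scenario(rng: random.Random, rare_fraction: float) -> Scenario:
    """Draw one scenario, forcing a rare case with probability rare_fraction."""
    rare = rng.random() < rare_fraction
    if rare:
        # Rare case: the car sits at or beyond the lane boundary.
        offset = rng.uniform(LANE_HALF_WIDTH_M, 2 * LANE_HALF_WIDTH_M)
    else:
        # Common case: the car stays near the lane center.
        offset = rng.uniform(0.0, 0.5 * LANE_HALF_WIDTH_M)
    if rng.random() < 0.5:
        offset = -offset  # drift left instead of right
    return Scenario(lane_offset_m=offset, rain=rng.random() < 0.3, rare=rare)


def generate_dataset(n: int, rare_fraction: float = 0.25, seed: int = 0) -> list:
    """Generate n labeled scenarios; a fixed seed keeps the set reproducible."""
    rng = random.Random(seed)
    return [sample_scenario(rng, rare_fraction) for _ in range(n)]


if __name__ == "__main__":
    data = generate_dataset(1000)
    print(sum(s.rare for s in data), "rare scenarios out of", len(data))
```

On real roads the out-of-lane case would make up a tiny share of recorded footage. In a simulator that share is just a parameter, and every sample arrives with its label already attached.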

NVIDIA hasn't given any timeline for broader availability of Omniverse Avatar, but the company says that Omniverse Replicator should be available to developers "to build domain-specific data-generation engines" next year.