NVIDIA GeForce RTX 2080 Performance Unveiled, DLSS AI-Powered Anti-Aliasing Spikes FPS At High IQ

What's interesting about DLSS is that it uses Turing's Tensor Cores to run a deep learning-powered AI technique that dramatically improves image quality and removes jagged edge artifacts in games, with only a minimal performance hit. Traditional anti-aliasing (AA) can lean heavily on GPU processing resources and memory bandwidth, and may consume large amounts of frame buffer memory as well. Supersampling-based methods, for example, shade several samples for every pixel (effectively rendering the frame at a higher resolution) in order to smooth out the edges of objects in a scene, and the end result can be a costly performance hit. NVIDIA notes, however, that DLSS instead runs a neural network trained on scenes and images (executing a trained network like this is called inferencing) to improve image quality without the large performance hit associated with some traditional AA methods. In NVIDIA's words, "powered by Turing's Tensor Cores, which perform lightning-fast deep neural network processing, GeForce RTX GPUs also support Deep Learning Super-Sampling (DLSS), a technology that applies deep learning and AI to rendering techniques, resulting in crisp, smooth edges on rendered objects in games."
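
To make the cost of brute-force supersampling concrete, here is a minimal Python sketch of ordered-grid supersampling with a box filter. It assumes a hypothetical hard-edged `scene` function, and `render_ssaa` is a name chosen purely for illustration; this is a generic textbook technique, not how DLSS or any shipping renderer actually works.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]


def scene(x: float, y: float) -> float:
    """Hypothetical scene: 1.0 below the line y = 0.6x + 0.1, else 0.0 (a hard edge)."""
    return 1.0 if y < 0.6 * x + 0.1 else 0.0


def render_ssaa(width: int, height: int, factor: int,
                shade: Callable[[float, float], float] = scene) -> Tuple[Image, int]:
    """Render with factor x factor ordered-grid supersampling.

    Each pixel is the average (box filter) of its sub-samples. Returns the
    image and the total number of shading calls, which is the main cost.
    """
    calls = 0
    image: Image = []
    for py in range(height):
        row = []
        for px in range(width):
            total = 0.0
            for sy in range(factor):
                for sx in range(factor):
                    # Sub-sample centre in normalized [0, 1) scene coordinates.
                    u = (px + (sx + 0.5) / factor) / width
                    v = (py + (sy + 0.5) / factor) / height
                    total += shade(u, v)
                    calls += 1
            row.append(total / (factor * factor))
        image.append(row)
    return image, calls


if __name__ == "__main__":
    aliased, cost1 = render_ssaa(8, 8, 1)   # one sample per pixel: hard, jagged edge
    smooth, cost4 = render_ssaa(8, 8, 4)    # 4x4 samples per pixel: graded edge pixels
    print(cost1, cost4)                     # 64 1024
```

With one sample per pixel every output value is either 0.0 or 1.0, which is what produces the stair-stepped "jaggies"; with 4x4 sub-samples the edge pixels take intermediate values, but the scene is shaded 16 times as often. That multiplication of per-pixel work is the kind of overhead NVIDIA says DLSS is designed to avoid.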

We were able to witness DLSS in action in Epic's Unreal Engine demo, Infiltrator. Infiltrator is an impressive demo to behold in and of itself, but at 4K with traditional TAA (Temporal Anti-Aliasing) enabled, it can be a beast of a GPU crusher, even on a card like the GeForce GTX 1080 Ti. With DLSS enabled and Turing's Tensor Cores doing the clean-up work through machine learning, however, frame rates can climb back to levels well north of 60 FPS at 4K, even in something as graphically intense as Infiltrator. Check out our hands-on demo in action…

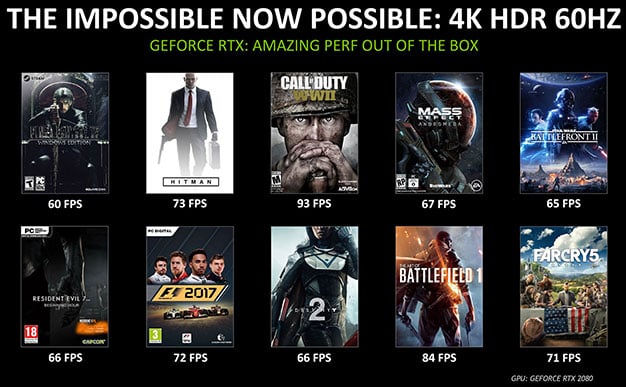

Taking performance claims one step further, NVIDIA allowed us to show you the following slide detailing GeForce RTX 2080 performance versus a GeForce GTX 1080, with both DLSS enabled and disabled...