You've Heard Of Moore's Law For CPUs, Now Check Out NVIDIA's Huang's Law For GPUs

If you're unfamiliar with Moore's Law, it was first postulated by legendary engineer and Intel co-founder Gordon Moore, who predicted that the number of transistors in an integrated circuit would roughly double every two years. This meant squeezing more transistors into increasingly smaller spaces, and offering additional features or higher performance. Building smaller chips with more features and performance also historically drove prices down over time.

Since the early 2010s, however, the semiconductor industry has struggled to keep pace with Moore's Law. Today's most advanced process nodes typically don't deliver the traditional benefits and cost savings of previous generations, and there are fewer fabs at the cutting edge. For an industry that has benefited so greatly over the years from Moore's Law, it's an existential crisis.

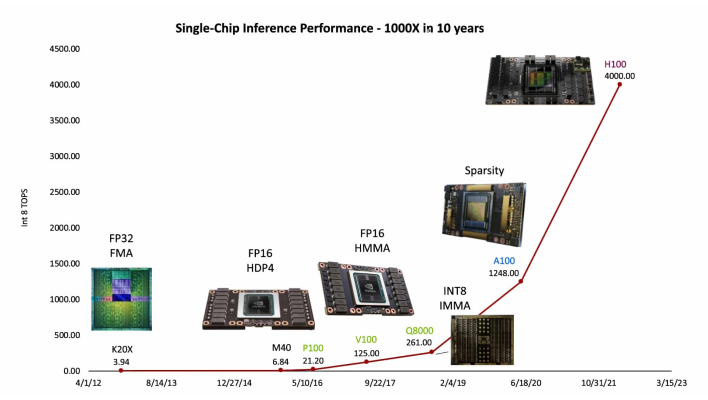

NVIDIA doesn't see the supposed end of Moore's Law as an insurmountable problem, however, as the company sees AI as the future of GPUs. At Hot Chips 2023, NVIDIA's Chief Scientist Bill Dally proposed Huang's Law, named after the company's CEO, which predicts 1000X performance gains every ten years thanks to AI. That's not a totally theoretical number; NVIDIA estimates that its latest H100 Hopper GPU is a little over 1000 times as fast as the K20X Kepler GPU from the early 2010s.
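To put the two rates side by side, here is a back-of-the-envelope sketch. It is our own illustrative arithmetic, not a calculation from NVIDIA, and it assumes steady compound growth. It also loosely treats Moore's transistor doubling as if it were a performance multiplier, which is a simplification. The function names are made up for this example.

```python
import math


def growth_over(years: float, doubling_period_years: float) -> float:
    """Total multiplier after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def annual_factor(total_gain: float, years: float) -> float:
    """Constant year-over-year multiplier that compounds to `total_gain` over `years`."""
    return total_gain ** (1 / years)


def doubling_period(total_gain: float, years: float) -> float:
    """Years needed to double, given `total_gain` achieved over `years` of steady growth."""
    return years * math.log(2) / math.log(total_gain)


# Moore's Law as stated above: doubling every two years, taken over a decade.
moore_10yr = growth_over(10, 2)

# Huang's Law as stated above: 1000X over ten years (assumed to compound evenly).
huang_annual = annual_factor(1000, 10)
huang_doubling = doubling_period(1000, 10)

print(f"Moore's Law over 10 years: {moore_10yr:.0f}x")
print(f"Huang's Law per year:      {huang_annual:.2f}x")
print(f"Huang's Law doubling time: {huang_doubling:.2f} years")
```

Under those assumptions, doubling every two years compounds to 32X over a decade, while 1000X over a decade works out to roughly a doubling every year.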

The premise of Huang's Law is that packing more transistors into a smaller area isn't the only way to improve performance, power, and cost, thanks to AI's ever-increasing role in the design and optimization of NVIDIA's GPUs.

Whether Huang's Law holds true remains to be seen, but it is clear that AI is playing an ever-increasing role in chip design and optimization, and EDA tool providers like Synopsys and Cadence have made similar predictions.