One of the things that makes a performance test a good "benchmark" is repeatability, both in the sense of getting similar results every time and in how easy it is to repeat the test. Some games, for example, simply aren't suited to being used as benchmarks, either because their results aren't consistent or because testing them takes too long or is too cumbersome.
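One common way to put a number on "getting similar results every time" is the coefficient of variation across repeated runs, that is, the standard deviation divided by the mean. The sketch below is only an illustration of that idea and is not something MLCommons prescribes; the function name and the sample scores are hypothetical placeholders, not measured results.

```python
import statistics


def coefficient_of_variation(samples):
    """Return the sample standard deviation divided by the mean (unitless)."""
    if len(samples) < 2:
        raise ValueError("need at least two runs to estimate variation")
    mean = statistics.fmean(samples)
    if mean == 0:
        raise ValueError("mean of the samples is zero")
    return statistics.stdev(samples) / mean


# Hypothetical placeholder scores from five runs of the same test.
# These are NOT real benchmark results.
runs = [100.0, 102.0, 98.0, 101.0, 99.0]
cv = coefficient_of_variation(runs)
print(f"run-to-run variation: {cv:.1%}")
```

A lower figure means the test repeats more tightly. What counts as "low enough" depends on how small a difference between products you want to be able to distinguish.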

MLCommons' Director of Product Management is none other than Scott Wasson, known for his work with both Intel's and AMD's graphics divisions but more famous for founding The Tech Report, where he pioneered 'Inside the Second' benchmarking, a method that still forms the foundation of PC game testing to this day. As such, Scott knows his stuff when it comes to what makes a good benchmark.

MLCommons already has tens of thousands of performance benchmark results for AI hardware, but until now the nonprofit has largely worked with industry and academia. Today, however, marks the release of MLPerf Client 0.5, MLCommons' first public benchmark. Right now, MLPerf Client is somewhat limited: it only supports 64-bit Windows platforms, it's command-line only, and it includes four tests based on a single model.

At this point in time, MLPerf Client 0.5 also has a pretty limited selection of hardware it will actually run on. The group has validated the benchmark for AMD Radeon RX 7900 parts, AMD Ryzen AI SoCs, Intel Arc discrete GPUs, Intel Core Ultra Series 2 processors, and GeForce RTX 4000-series GPUs. It supports DirectML via ONNX Runtime for everything, but the Intel hardware also gets a specialized OpenVINO path. Participants are also able to optimize the models for their hardware, but must meet strict guidelines for accuracy.


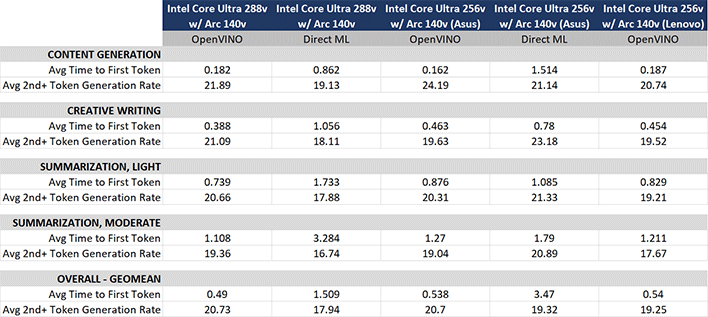

We ran the MLPerf Client 0.5 release on an array of hardware we had on hand and have some results to share. Unusually, MLCommons is not providing official reference results the way it has with its other benchmarks. Instead, the MLPerf Client 0.5 benchmark is free and open source; anyone can head over to GitHub to download it and try it out.


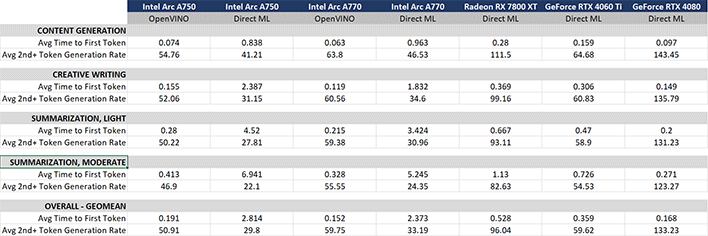

Neither the Radeon RX 7800 XT nor the GeForce RTX 4060 Ti is technically a supported configuration. MLCommons only lists Radeon RX 7900-series discrete GPUs at this time, but the benchmark completed fine on the RX 7800 XT and actually gave excellent results, at least for throughput. Meanwhile, the benchmark authors caution that GeForce RTX 4000-series GPUs should have at least 12GB of onboard memory, but our 8GB GeForce RTX 4060 Ti card likewise completed the benchmark without issue.

If you're interested in trying out MLPerf Client for yourself, you should be able to head to the MLCommons GitHub and download it today. Simply extract the application to a directory and open a terminal window there, then run "mlperf-windows.exe -c <configname>" where <configname> is the name of the JSON file matching your hardware vendor. If all goes well, the benchmark will download 7-10GB of data before testing, after which it will output some numbers that you can compare against our data above.
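If you'd rather script the run than type it each time, a minimal Python wrapper along these lines should work. It only relies on the "-c <configname>" invocation described above. The helper names are our own, the script assumes it sits in the extraction directory, and you pass the config file name yourself, since the exact file names depend on the release you download.

```python
import subprocess
import sys
from pathlib import Path


def build_command(exe_path, config_path):
    """Build the argument list for: mlperf-windows.exe -c <configname>."""
    return [str(exe_path), "-c", str(config_path)]


def run_benchmark(exe_path, config_path, workdir):
    """Run the benchmark from its own directory and return the exit code."""
    cmd = build_command(exe_path, config_path)
    # Output is not captured, so the benchmark prints straight to the terminal.
    result = subprocess.run(cmd, cwd=workdir, check=False)
    return result.returncode


def main(argv):
    if len(argv) != 2:
        print("usage: python run_mlperf.py <configname>.json")
        return
    # Assumption: this script lives next to the extracted executable.
    workdir = Path(__file__).resolve().parent
    exe = workdir / "mlperf-windows.exe"
    if not exe.exists():
        print(f"could not find {exe}")
        return
    code = run_benchmark(exe, argv[1], workdir)
    print(f"benchmark exited with code {code}")


if __name__ == "__main__":
    main(sys.argv)
```

Save it as, say, run_mlperf.py in the same folder and call it with the name of the JSON config for your hardware. Remember that the first run will still need to download the 7-10GB of data mentioned above.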