Intel Unveils 4th Gen Xeon Scalable Sapphire Rapids Processor Line-Up With Full Specs

Intel’s approach with this release is to offer a wide variety of “purpose-built, workload-first acceleration” paired with specifically tuned software. It says the advantages include better total cost of ownership and better sustainability through better management of power and CPU resources.

“The launch of 4th Gen Xeon Scalable processors and the Max Series product family is a pivotal moment in fueling Intel’s turnaround, reigniting our path to leadership in the data center and growing our footprint in new arenas,” said Sandra Rivera, Intel executive vice president and general manager of the Datacenter and AI Group. “Intel’s 4th Gen Xeon and the Max Series product family deliver what customers truly want – leadership performance and reliability within a secure environment for their real-world requirements – driving faster time to value and powering their pace of innovation.”

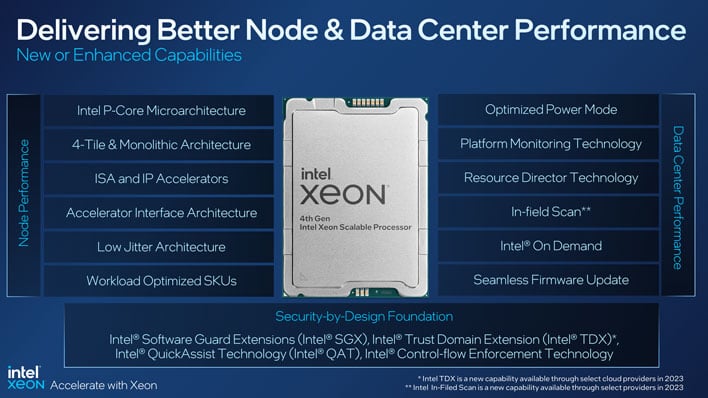

Sapphire Rapids Architecture Highlights

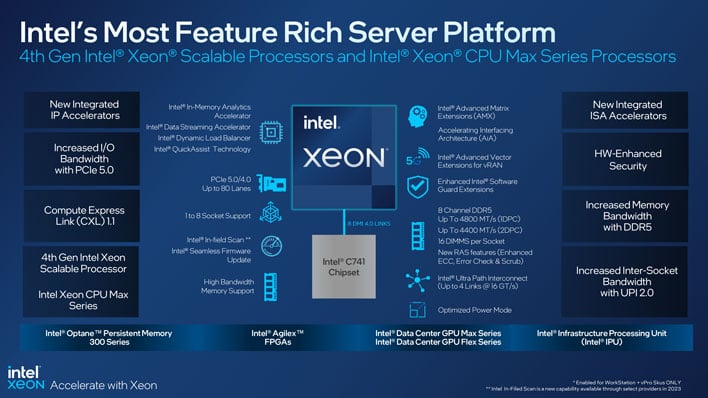

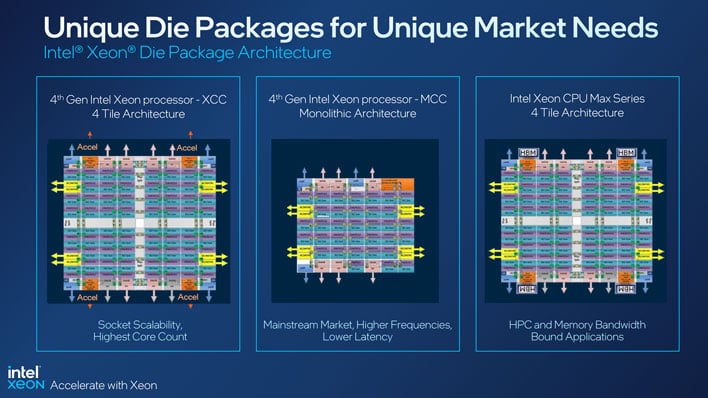

4th Gen Xeon Scalable processors are built on the Intel 7 manufacturing process and will offer improved per-core performance over the prior generation, in addition to higher density, scaling up to 60 cores per socket. Unlike Alder Lake and Raptor Lake on the consumer side, these processors use Golden Cove “P-Cores” exclusively.

Because the architecture is tiled, Intel has the flexibility to build out different packages for various market needs. The Extreme Core Count (XCC) and Max Series layouts appear similar, as both utilize 4 tiles. However, the Max die package trades some accelerator links for high bandwidth memory links to drive big data applications. The Medium Core Count (MCC) die culls back to a single tile for more mainstream workloads and to push higher frequencies at lower latency.

The platform supports DDR5 memory and PCIe 5.0 plus Compute Express Link (CXL) 1.1 to move high-throughput data. Compared to AMD’s 4th Gen EPYC “Genoa” processors with 12-channel DDR5, though, these only support 8 channels of DDR5 with 16 DIMMs per socket. Intel can make up for this with its Xeon CPU Max Series products, but more on that later.
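To put that channel gap in rough numbers, here is a back-of-the-envelope sketch of theoretical peak DRAM bandwidth per socket. It assumes DDR5-4800 on both platforms and a 64-bit (8-byte) data path per channel; the transfer rate is not stated above, and the function name is purely illustrative.

```python
def peak_bandwidth_gbs(channels: int, mt_per_s: int = 4800, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal units).

    Assumes DDR5-4800 and an 8-byte data path per channel by default.
    Both defaults are assumptions for illustration, not figures from the article.
    """
    # channels * mega-transfers per second * bytes per transfer = MB/s; divide by 1000 for GB/s
    return channels * mt_per_s * bytes_per_transfer / 1000


xeon = peak_bandwidth_gbs(8)    # 8-channel 4th Gen Xeon Scalable
epyc = peak_bandwidth_gbs(12)   # 12-channel EPYC "Genoa"

print(f"8-channel:  {xeon:.1f} GB/s")
print(f"12-channel: {epyc:.1f} GB/s")
print(f"ratio:      {epyc / xeon:.2f}x")
```

Under those assumptions the paper figures work out to roughly 307 GB/s versus 461 GB/s per socket, a 1.5x gap. Sustained real-world throughput will be lower on both platforms.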

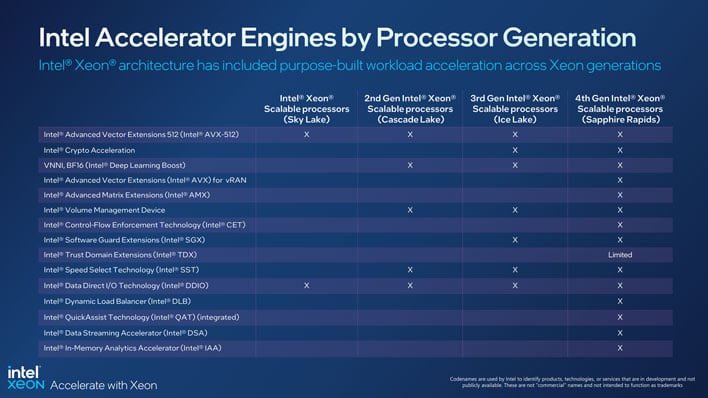

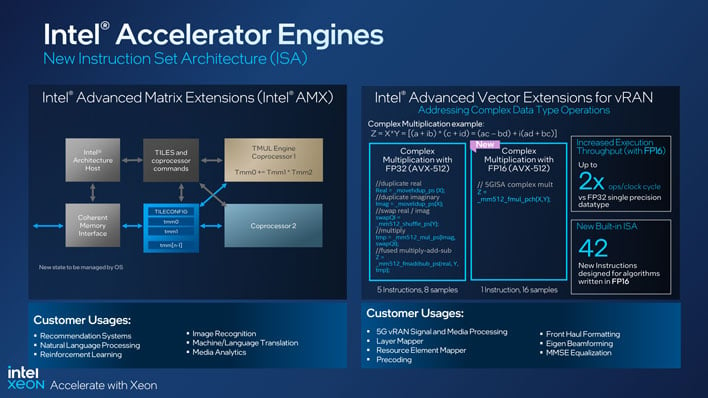

Intel 4th Gen Xeon Accelerator Engines

The 4th Gen Xeon Scalable Processors can pack an array of different accelerators to benefit particular workloads above and beyond general compute. These include:

- Intel Advanced Matrix Extensions (Intel AMX)

- Intel Data Streaming Accelerator (Intel DSA)

- Intel In-Memory Analytics Accelerator (Intel IAA)

- Intel Dynamic Load Balancer (Intel DLB)

- Intel Advanced Vector Extensions (Intel AVX) for vRAN

- Intel Advanced Vector Extensions 512 (Intel AVX-512)

- Intel QuickAssist Technology (Intel QAT)

- Intel® Crypto Acceleration

A few of these accelerators, such as Intel AVX and AVX-512, will be familiar to our readers. Intel AMX works in a similar vein, speeding up deep learning training and inference to benefit ML applications like natural language processing, recommendation systems, and image recognition.
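For readers who want to check whether a given Linux machine exposes these instruction-set extensions, the following is a minimal sketch that parses the `flags` line of `/proc/cpuinfo`. The flag names (`avx512f`, `amx_tile`, `amx_bf16`, `amx_int8`) are the ones recent Linux kernels are assumed to report; the helper names and the feature table are hypothetical and exist only for illustration.

```python
# Flag names are assumed to match what recent Linux kernels report in /proc/cpuinfo.
FEATURE_FLAGS = {
    "AVX-512": ["avx512f"],
    "AMX": ["amx_tile", "amx_bf16", "amx_int8"],
}


def parse_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU flags from the first 'flags' line, or an empty set if none is found."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            return set(line.split(":", 1)[1].split())
    return set()


def detect_features(flags: set[str]) -> dict[str, bool]:
    """Report a feature as present only if every flag listed for it is present."""
    return {
        name: all(flag in flags for flag in needed)
        for name, needed in FEATURE_FLAGS.items()
    }


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as fh:
            text = fh.read()
    except OSError:
        text = ""  # not Linux, or the file is unreadable
    print(detect_features(parse_flags(text)))
```

A check like this only covers extensions that live in the cores themselves. Offload engines are typically presented to the operating system as separate devices, so they would need a different kind of query.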

Intel QAT is designed to offload data encryption, decryption, and compression tasks from the processor cores. This approach can free up CPU resources to tackle other workloads simultaneously. Likewise, Intel DSA handles data movement and transformation operations to benefit storage, networking, and other data-intensive workloads.

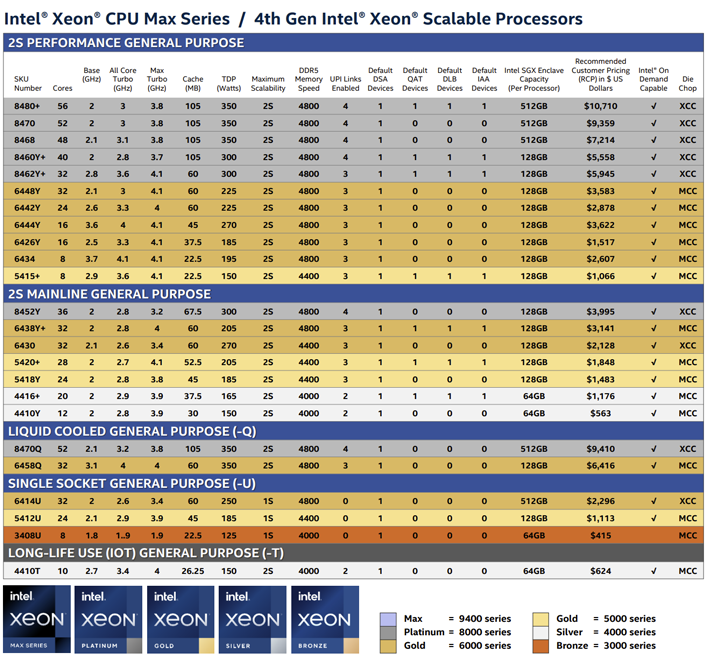

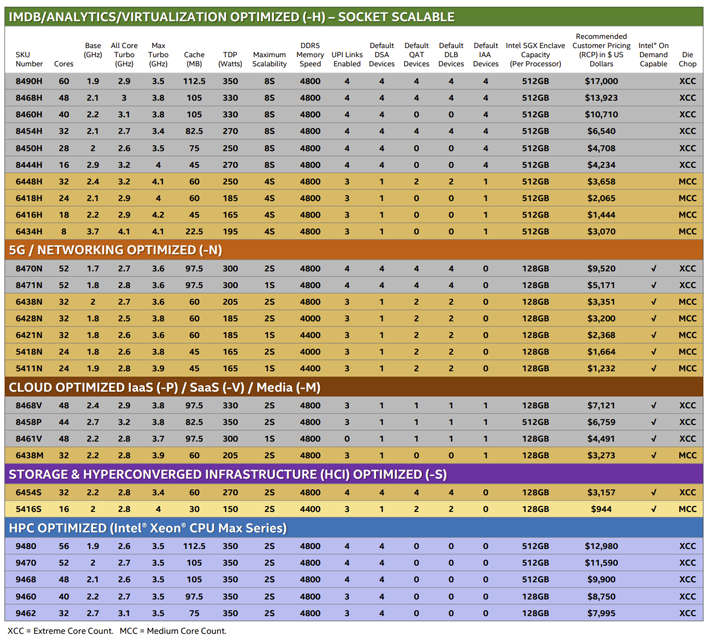

4th Gen Xeon Scalable Family Segmentation

In all, Intel is releasing over 50 SKUs. As we’ll see in the SKU listings below, the available accelerators in a given model vary depending on the chip’s intended segment and where it falls within its stack. Intel has defined segments that include General Purpose (with several sub-divisions), IMDB/Analytics/Virtualization Optimized, 5G/Networking Optimized, Cloud Optimized, Storage & Hyperconverged Infrastructure (HCI) Optimized, and High-Performance Compute (HPC) Optimized.

The 4th Gen Xeon Scalable family is divided into Max (9000), Platinum (8000), Gold (6000 and 5000), Silver (4000), and Bronze (3000) tiers. Higher tiers can offer more features or capabilities (like larger SGX enclaves) than lower rungs. Platinum processors can support up to 8 sockets in a system, Gold up to 4, and Silver up to 2, although these capabilities are also model-dependent. In practice, only the -H processors under the IMDB/Analytics/Virtualization Optimized umbrella can scale beyond two sockets.

The HPC-optimized SKUs are potentially the most interesting, and they are where the Xeon CPU Max Series comes in. These processors incorporate 64GB of on-package high bandwidth memory (HBM2e), which benefits workloads like HPC and AI with high data throughput demands. The HBM stack can be used inline with traditional system DRAM, as tiered memory, or even standalone without any DIMMs at all.

Intel On Demand

With this generation, Intel is pushing its On Demand service to allow customers to expand the processor's accelerator capabilities as needed down the road. It contends that some of its customers have been asking for ways to shift from capital expenditures (capex) to operational expenditures (opex) where possible to help with budgetary demands. We are not accountants, but the idea of having to pay to unlock functionality that already exists in the silicon that was purchased is sure to raise an eyebrow. To be clear, customers can purchase the licensing from the get-go, if desired, but that cost must be taken into account.

At any rate, we are working on our own performance review of these 4th Gen Xeon Scalable Processors as we speak, and hope to have it completed in the coming days. Stay tuned to see how they fare against AMD's 4th Gen EPYC Genoa processors.