Live Intel 4th Gen Xeon Benchmarks: Sapphire Rapids Accelerators Revealed

Intel 4th Gen Xeon Scalable Accelerators Detailed

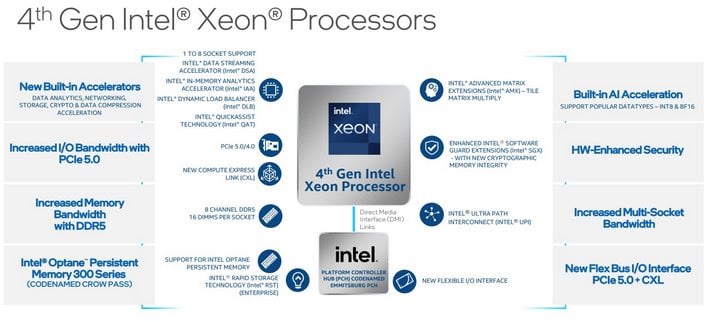

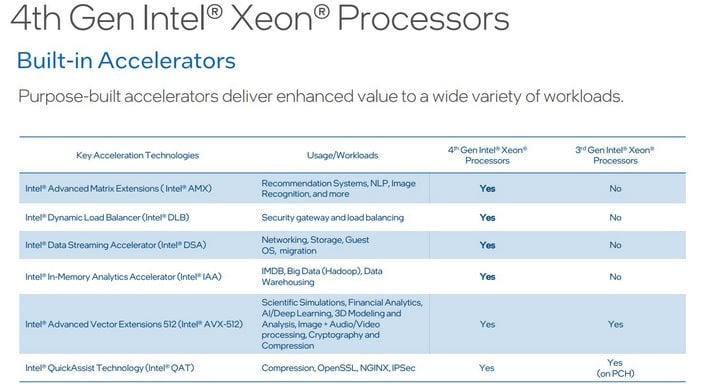

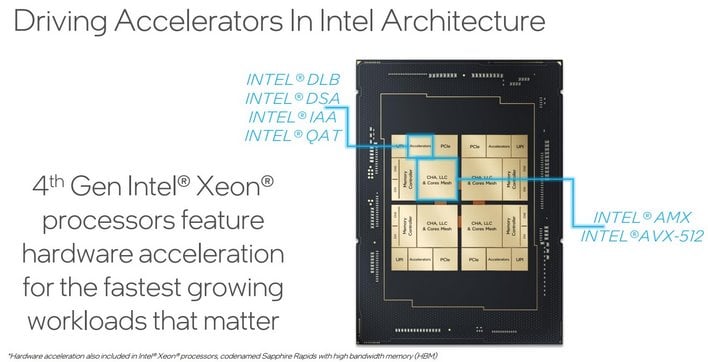

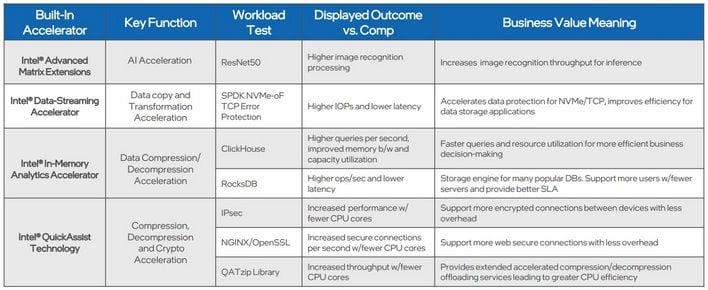

With Sapphire Rapids, the story isn’t just the enhanced Golden Cove CPU cores built on the Intel 7 process. This new Intel 4th Gen Xeon Scalable server CPU microarchitecture also has dedicated hardware accelerators on board that can provide significant performance uplifts in an array of common data center workloads and applications.

While Intel wasn’t demonstrating general-purpose compute workloads on these pre-production 4th Gen Xeon chips and servers in our demo, the company was showcasing the performance gains that these on-board engines can offer in common data center workloads and tasks. So, you might say this was a very controlled head-to-head versus AMD EPYC, but the accelerators in action on the workloads employed are also key to advancing data center server performance, performance-per-watt metrics and TCO.

Intel 4th Gen Xeon Scalable Sapphire Rapids 2P Server

- Node 1: 2x pre-production 4th Gen Intel Xeon Scalable processors (60 core) with Intel Advanced Matrix Extensions (Intel AMX), on pre-production Intel platform and software with 1024GB DDR5 memory (16x64GB), microcode 0xf000380, HT On, Turbo On, SNC Off.

- Node 2: 2x AMD EPYC 7763 processor (64 core) on GIGABYTE R282-Z92 with 1024GB DDR4 memory (16x64GB), microcode 0xa001144, SMT On, Boost On.

Intel 4th Gen Xeon Scalable Accelerator Benchmarked

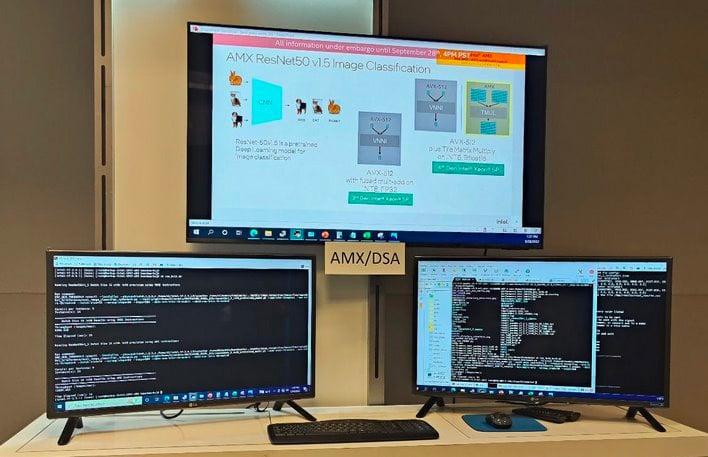

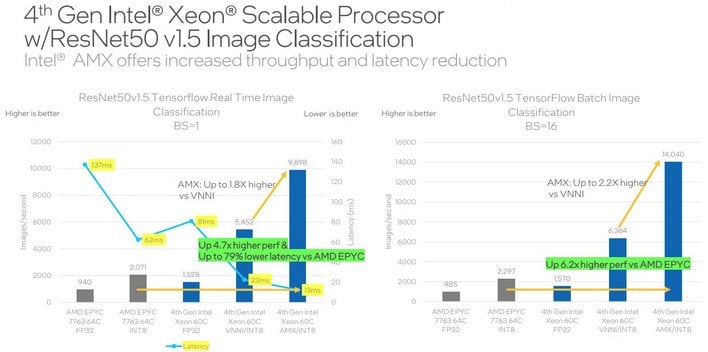

Utilizing a pretrained deep learning AI model for image classification, Intel demonstrated performance gains with just AVX-512 and Intel VNNI (Vector Neural Network Instructions) AVX-2 acceleration, and then again with AVX-512 and Intel AMX (Advanced Matrix Extensions) performing a Tile Matrix Multiply to accelerate things further. Here are the results…

ResNet50v1.5 TensorFlow AI Image Classification Performance With Intel AMX

As you can see, there’s a dramatic speed-up in the number of images processed per second, and a huge reduction in latency, with Intel VNNI employed alone at INT8 precision. However, kick in the 4th Gen Xeon’s AMX matrix multiply array and the test showed an approximate 6X lift in performance. We watched these tests running live and can in fact verify those results, at least for the servers in the rack that were being tested in front of us.
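For readers unfamiliar with the arithmetic behind a tile matrix multiply at INT8 precision, here is a minimal pure-Python sketch. It is not AMX code and uses no Intel API: the function name `int8_tiled_matmul` and the tile size are hypothetical, chosen only to illustrate the idea of multiplying small blocks of signed 8-bit values while accumulating the products in a wider integer.

```python
def int8_tiled_matmul(a, b, tile=2):
    """Multiply two matrices of signed 8-bit values, one tile at a time.

    Hypothetical helper for illustration only; not an AMX or Intel API.
    Products are accumulated in Python integers, standing in for the
    wider accumulators that INT8 inference hardware typically uses.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a) or any(len(row) != m for row in b):
        raise ValueError("matrix shapes do not match")
    for row in a + b:
        for value in row:
            if not -128 <= value <= 127:
                raise ValueError("inputs must fit in signed 8 bits")

    c = [[0] * m for _ in range(n)]
    # Walk the output and the shared dimension in tile-sized blocks.
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # Multiply one tile of A by one tile of B and accumulate into C.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6]]
    b = [[7, 8], [9, 10], [11, 12]]
    print(int8_tiled_matmul(a, b))  # [[58, 64], [139, 154]]
```

Even a four-element dot product of 127s sums to 64516, far outside the 8-bit range, which is why the accumulator has to be wider than the inputs.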

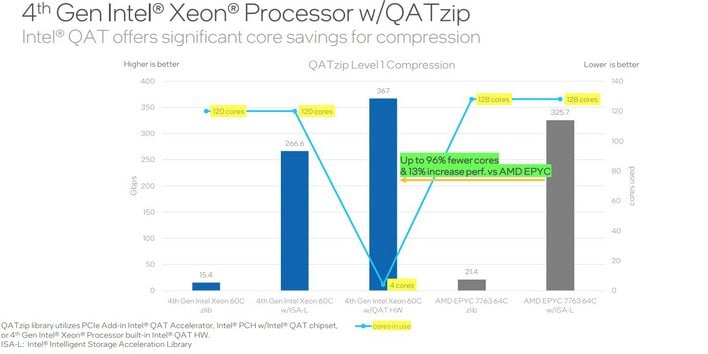

QATzip Level 1 Compression Acceleration Benchmarks

At first glance, the accelerated QATzip compression workload appears to be only marginally faster than the AMD EPYC configuration utilizing 120 cores with ISA-L (Intelligent Storage Acceleration Library). But what the numbers show is that when utilizing Intel QuickAssist Technology (QAT), the workload is mostly offloaded from the CPU cores. Only 4 cores and the QAT accelerator are utilized in the fastest 4th Gen Xeon Scalable configuration, freeing up 116 of the CPU cores for other tasks and VM availability.
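The core-count arithmetic here is simple but worth spelling out. The sketch below uses a hypothetical helper, `cores_freed`, with the socket and core counts taken from the Node 1 configuration listed earlier.

```python
def cores_freed(sockets, cores_per_socket, cores_in_use):
    """Return how many CPU cores remain available for other work.

    Hypothetical helper for illustration; the inputs used below come from
    the 2P, 60-core-per-socket test system described in this article.
    """
    total = sockets * cores_per_socket
    if not 0 <= cores_in_use <= total:
        raise ValueError("cores_in_use must be between 0 and the total core count")
    return total - cores_in_use


if __name__ == "__main__":
    # 2 sockets x 60 cores = 120 cores; 4 are busy feeding the QAT accelerator.
    print(cores_freed(2, 60, 4))  # 116
```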

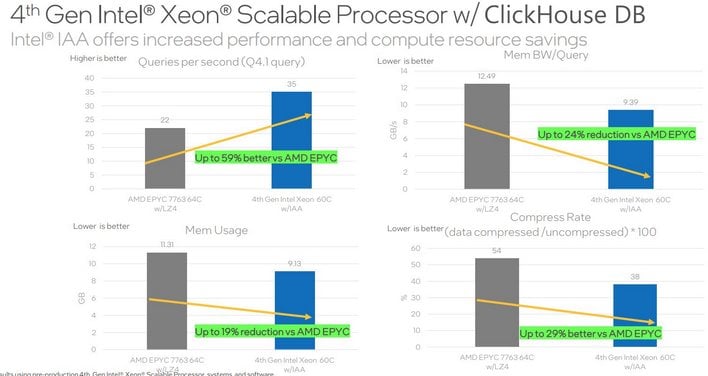

ClickHouse Big Data Analytics Benchmarks On Sapphire Rapids

ClickHouse is an open-source, column-oriented database management system for online analytical processing. It can run on bare metal, in the cloud (AWS, AliCloud, Azure, etc.), or containerized in Kubernetes. It is linearly scalable and can be scaled up to store and process massive amounts of data. These results were generated using a Star Schema Benchmark, focused on Query Q4.1, which has the highest CPU utilization...

There are multiple results represented here, showing the 60-core 4th Gen Xeon Scalable system utilizing IAA (Intel In-Memory Analytics Accelerator) outperforming the 64-core EPYC configuration in terms of queries per second and compression rate. The 4th Gen Xeon Scalable system also utilized less memory and less memory bandwidth during the benchmark, freeing up those resources for other tasks.

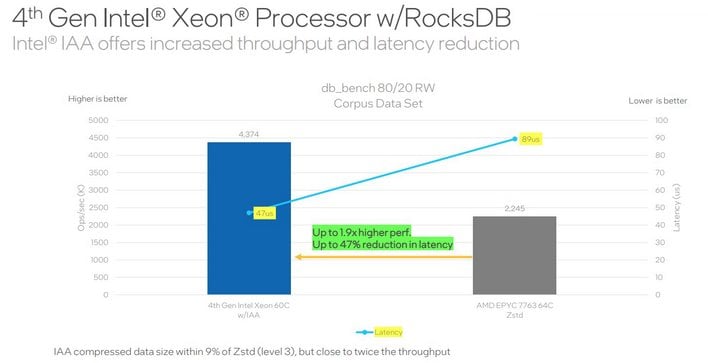

Intel 4th Gen Xeon IAA Accelerating RocksDB

RocksDB is an embedded persistent key-value store used as a storage engine in many popular databases (MySQL, MariaDB, MongoDB, Redis, etc.). It's utilized in Facebook's hosting environment, for example, as well. For these benchmarks, RocksDB was modified to support compressors as plugins. Although not publicly available just yet, the code will be upstreamed to the RocksDB project...

In this benchmark, the 60-core 4th Gen Xeon Scalable configuration utilizing IAA nearly doubles the performance of the 64-core EPYC system, while simultaneously offering much lower latency.

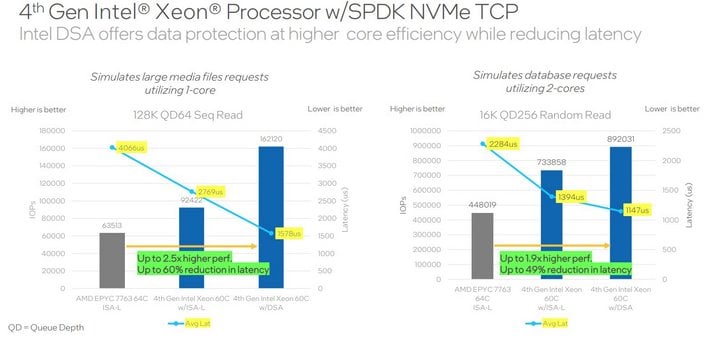

SPDK NVMe TCP Storage Performance With Data Protection

This next benchmark illustrates error protection of NVMe/TCP data at 200Gbps. For this test, FIO submits I/O requests to an SPDK (Storage Performance Development Kit) NVMe/TCP target. The target reads data from NVMe SSDs and uses DSA (Intel Data Streaming Accelerator) or ISA-L on the Intel processor, and ISA-L on the AMD processor, to calculate the CRC32C data digest. The target then sends the I/O and the CRC32C data digest back to FIO...

Whether utilizing a single core with 128K sequential reads (QD64) or two cores with 16K random reads (QD256), the Intel 4th Gen Xeon server offers significantly higher throughput, at much lower latencies, than the EPYC server.
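To show what the CRC32C data digest mentioned above actually computes, here is a minimal bit-by-bit software sketch in Python. It is a reference-style illustration only, not the SPDK, ISA-L, or DSA code path; the function name `crc32c` is our own, and production implementations use table lookups, dedicated CPU instructions, or an offload engine instead of this slow loop.

```python
CRC32C_POLY_REFLECTED = 0x82F63B78  # Castagnoli polynomial, bit-reflected form


def crc32c(data: bytes) -> int:
    """Compute the CRC32C (Castagnoli) checksum of a byte string.

    Illustrative bitwise implementation; far slower than the accelerated
    paths used in the benchmark.
    """
    crc = 0xFFFFFFFF  # standard initial value
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift out one bit; apply the polynomial if that bit was set.
            if crc & 1:
                crc = (crc >> 1) ^ CRC32C_POLY_REFLECTED
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF  # standard final XOR


if __name__ == "__main__":
    # "123456789" is the conventional CRC check string.
    print(hex(crc32c(b"123456789")))  # 0xe3069283
```

Every byte of every I/O has to pass through this kind of calculation, which is why moving it off the CPU cores matters at 200Gbps.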

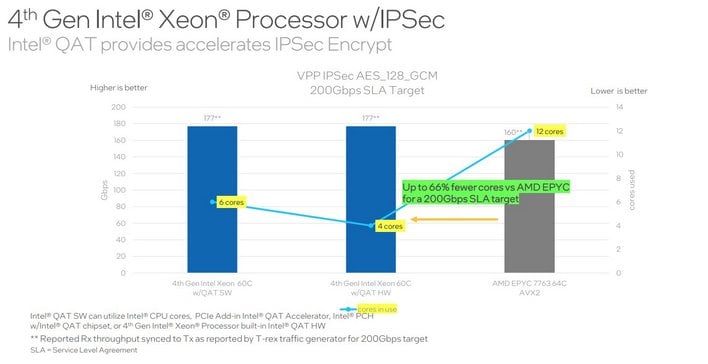

Intel 4th Gen Xeon Scalable IPSec Encryption Benchmarks

The DPDK IPsec-GW (security gateway) benchmark measures how much traffic the server can process per second using the IPsec protocol. Encryption is handled in software using the Intel Multi-Buffer Crypto for IPsec library or offloaded to the Intel QAT accelerator. The Intel IPsec library implements optimized encryption/decryption operations using AES or VAES instructions, but note that VAES instructions cannot be utilized on the AMD system because AVX-512 is not available on 3rd Gen EPYC processors...

At first glance, the bars in this chart don't show large disparities between the different configurations. However, the Xeon system is able to achieve somewhat higher performance, while utilizing far fewer cores.

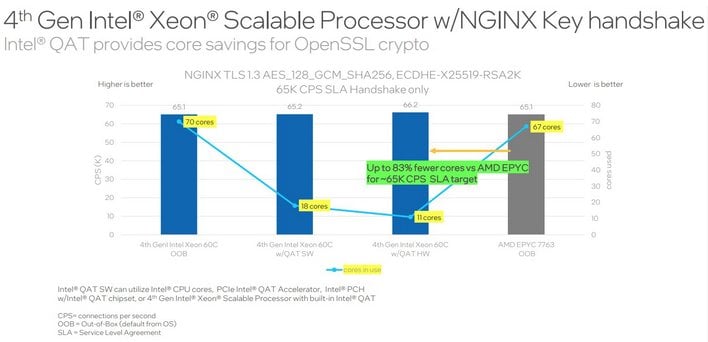

Intel Xeon NGINX Key Handshake OpenSSL Crypto Acceleration Tests

This NGINX TLS Key Handshake benchmark measures encrypted web server connections per second. No packets are requested by the clients, so only a TLS handshake is completed. The benchmark stresses the server’s compute, memory, and I/O resources...

Once again, the testing shows the 4th Gen Xeon Scalable system offering comparable performance to the EPYC system, but when utilizing Intel QAT (SW or HW), the Xeon configuration is able to obtain that performance while taxing far fewer CPU cores.

All of these benchmarks were devised by Intel to clearly demonstrate the potential performance and efficiency benefits of the various accelerators available in its upcoming 4th Gen Xeon Scalable processors, otherwise known as Sapphire Rapids. The tests were run under controlled conditions and most of the details regarding Intel's 4th Gen Xeon Scalable processors being employed in the test servers were not divulged. With all of that in mind, we obviously have to temper our assessment of these numbers, but assuming everything shown holds up in the real world when we do get to independently verify these results, they certainly bode very well for Intel.

AMD will retain a CPU core density per socket advantage with its EPYC processors, but if these dedicated accelerators in 4th Gen Xeon Scalable processors negate that advantage and free up Intel CPU core resources for other tasks while accelerating these common workloads, this new Intel Data Center Group product offering could have major implications for tomorrow's data centers.