Arm Neural Super Sampling Set To Bring DLSS-Style Upscaling To Mobile GPUs

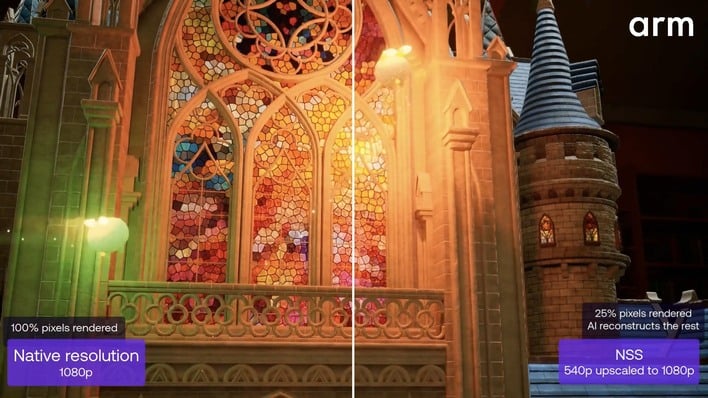

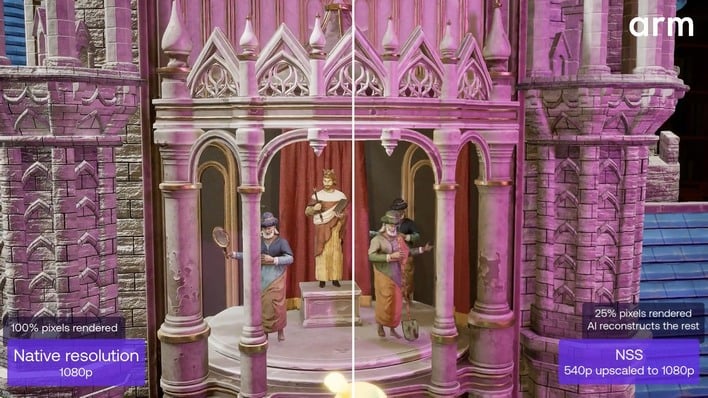

If that sounds a lot like NVIDIA's DLSS, AMD's FSR4, and Intel's XeSS, that's because it is a lot like those. The pitch is straightforward: render at a lower resolution (in Arm's go-to example, 540p internal → 1080p output), run the frame through a trained neural network, and output something that looks close to native while using far less GPU time.

Arm is framing this as an "industry first," but the "industry" here is very specifically mobile GPUs, as AI-assisted temporal upscaling has of course been a fixture of PC graphics for years. If we wanted to be contentious, we could argue that NVIDIA and Nintendo beat Arm to the punch even here, with the custom DLSS that the Nintendo Switch uses, but that's just pedantic.

The real hook for NSS is that, like that custom version of DLSS, it's tuned for mobile constraints. It's designed to run primarily on a dedicated on-chip neural accelerator instead of stealing time from the main GPU cores, which is what Arm's own Accuracy Super Resolution (ASR), launched in March of this year, does.

That flagship "540p to 1080p in 4ms" claim is worth unpacking. NSS doesn't run on shipping silicon yet; first hardware is slated for late 2026. That 4ms figure is based on simulation data and performance assumptions about the next-gen Mali GPUs' neural hardware. Right now, NSS is only running in emulation on desktop, and Arm is up-front that actual results will vary by resolution, hardware config, and workload.

Arm's demo video, a slow flyby of a high-detail "Enchanted Castle" scene, shows a clear improvement over older shader-based upscalers like Arm's own FSR2-based Accuracy Super Resolution (ASR) at the same low input resolution. Temporal artifacts like ghosting or smearing, common in those techniques, are essentially absent here. That said, the test case is very controlled: no rapid motion, no thin geometry whipping around, no chaotic particle effects. NSS still produces a noticeably softer and less detailed image than native, but on a 6-inch phone display, that tradeoff is likely impossible to spot.

Critically, dropping your render resolution to 25% of the native pixel count (which is what 540p is relative to 1080p) can slash pixel shading cost by more than 70%, so in GPU-limited scenarios, spending ~4ms on an upscale pass can absolutely be worth it. The benefit is smaller if your bottleneck is elsewhere, and 4ms is still a big slice, roughly a quarter, of a 16.7ms (60 FPS) frame budget. That's the balancing act developers will need to weigh, though the same applies to DLSS and other upscalers, too.
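To put rough numbers on that tradeoff, here is a back-of-the-envelope sketch in Python. It uses only the figures quoted above (540p to 1080p, a roughly 70% shading saving, a 4ms upscale pass, a 60 FPS budget). The function names and the 10ms native shading cost in the usage example are hypothetical, and the model deliberately ignores every cost other than pixel shading.

```python
def pixel_fraction(render_w, render_h, output_w, output_h):
    """Fraction of output pixels actually shaded at the internal resolution."""
    return (render_w * render_h) / (output_w * output_h)


def frame_budget_ms(fps):
    """Time available per frame at a target frame rate, in milliseconds."""
    return 1000.0 / fps


def net_saving_ms(native_shading_ms, shading_saving, upscale_ms):
    """GPU time saved per frame after paying for the upscale pass.

    A negative result means upscaling costs more than it saves.
    """
    return native_shading_ms * shading_saving - upscale_ms


def break_even_shading_ms(shading_saving, upscale_ms):
    """Native shading cost at which the upscale pass exactly pays for itself."""
    return upscale_ms / shading_saving


if __name__ == "__main__":
    frac = pixel_fraction(960, 540, 1920, 1080)
    budget = frame_budget_ms(60)
    print(f"540p shades {frac:.0%} of the pixels in a 1080p frame")
    print(f"A 4ms pass is {4.0 / budget:.0%} of a {budget:.1f}ms frame budget")
    print(f"Break-even native shading cost: {break_even_shading_ms(0.70, 4.0):.1f}ms")
    # Hypothetical workload: 10ms of pixel shading at native 1080p.
    print(f"Net saving at 10ms native shading: {net_saving_ms(10.0, 0.70, 4.0):.1f}ms")
```

Under these assumptions, the upscale pass only starts paying for itself once native pixel shading costs more than about 5.7ms per frame. Below that, or when the bottleneck is somewhere other than shading, the 4ms is a net loss.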

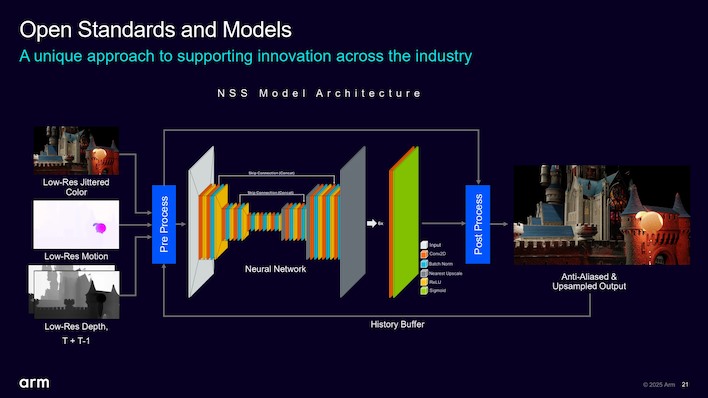

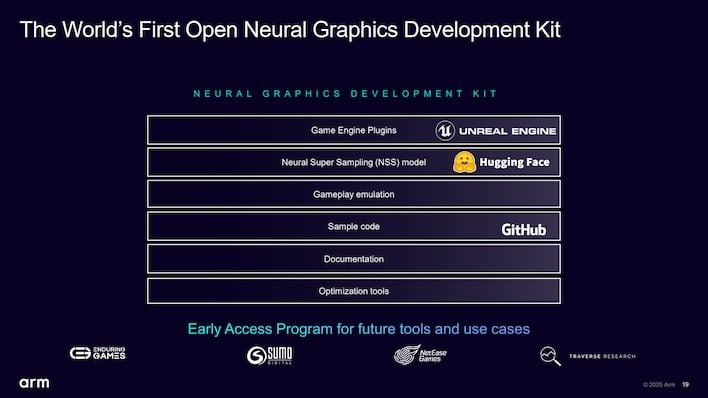

Speaking of DLSS, NVIDIA's latest DLSS 4 moved to a transformer-based model, but NSS uses a simpler CNN-based UNet architecture with recurrent feedback. In that sense, it's closer to DLSS 2 in design. Arm says the model is fully open, with architecture, weights, and training tools available on GitHub and Hugging Face, so developers can retrain it for their own content. Whether "open" here means much beyond hobbyist tinkering remains to be seen, but the SDK does ship with Unreal Engine integration, Vulkan ML extensions, and emulation layers to let studios start work a year before hardware ships.
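For readers unfamiliar with the term, "recurrent feedback" means each frame's result is fed back in as an input for the next frame. The Python sketch below shows only that data flow. It is not Arm's model: `toy_network` is a hypothetical stand-in that does a nearest-neighbor 2x upscale on a single row of pixels and blends it with the previous output, where NSS would run a trained UNet, and the `history_weight` parameter is invented purely for illustration.

```python
def upsample_2x(row):
    """Nearest-neighbor 2x upscale of one row of pixel values."""
    out = []
    for value in row:
        out.extend([value, value])
    return out


def toy_network(low_res_row, previous_output, history_weight=0.5):
    """Hypothetical stand-in for the trained network.

    Takes the current low-resolution frame plus last frame's output
    (the recurrent feedback) and returns a new high-resolution frame.
    """
    current = upsample_2x(low_res_row)
    if previous_output is None:
        # First frame: there is no history to draw on yet.
        return current
    return [
        history_weight * prev + (1.0 - history_weight) * cur
        for prev, cur in zip(previous_output, current)
    ]


def upscale_sequence(low_res_frames, network=toy_network):
    """Run the network over a frame sequence, feeding each output back in."""
    previous_output = None
    outputs = []
    for frame in low_res_frames:
        previous_output = network(frame, previous_output)
        outputs.append(previous_output)
    return outputs


if __name__ == "__main__":
    frames = [[0.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
    for output in upscale_sequence(frames):
        print(output)
```

In the toy run, the first two output pixels move from 0.0 to 0.5 to 0.75 as history accumulates, which is the same mechanism that lets a temporal upscaler build up detail over several frames and, when it goes wrong, produces ghosting. Production temporal upscalers also reproject the history using motion vectors, which this sketch leaves out.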

According to Arm's slides, NSS is "just the beginning," with more neural technology on the way. The company specifically mentions Neural Frame Rate Upscaling (NFRU)—likely a frame generation technique—and Neural Super Sampling with Denoising (NSSD), which sounds a lot like NVIDIA's DLSS Ray Reconstruction and AMD's still-upcoming FSR Redstone, aimed at enabling real-time path tracing on mobile with fewer rays per pixel.

On paper, NSS looks like a significant step for mobile graphics, both in quality and in efficiency—provided that Arm's next-gen neural hardware delivers the performance the company is modeling today. The tech itself isn't new to the wider industry, but if it works as advertised on 2026-class mobile devices, it could give high-end mobile games a noticeable leap in fidelity, battery life, or both.