AMD Introduces Radeon Instinct Machine Intelligence And Deep Learning Accelerators

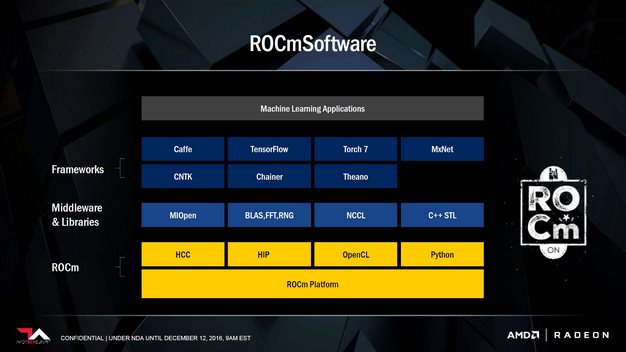

As its name suggests, the new Radeon Instinct product line consists of GPU-based accelerators for deep learning inference and training. The new GPUs are complemented by a free, open-source library and framework for GPU accelerators, dubbed MIOpen. MIOpen is architected for high-performance machine intelligence applications and is optimized for the deep learning frameworks in AMD’s ROCm software suite, which we covered recently.

During a recent visit with AMD, we were told that the world generates roughly 2.5 quintillion bytes of data every day (a quintillion is a 1 followed by 18 zeros). That data comes in many forms: text, video, audio, images, and more. With such a wide variety of data, different types of computing are required to process it all efficiently, from CPUs and GPUs to custom ASICs and FPGAs. Our ability to generate data far exceeds our capacity to analyze it, understand it, and make decisions based on it in real time, hence the need for ever faster and more powerful processors with more bandwidth and access to more memory.

AMD hopes Radeon Instinct and its associated software will be the building blocks for the next wave of machine intelligence applications. “Radeon Instinct is set to dramatically advance the pace of machine intelligence through an approach built on high-performance GPU accelerators, and free, open-source software in MIOpen and ROCm,” said Raja Koduri, senior vice president and chief architect, Radeon Technologies Group, AMD. “With the combination of our high-performance compute and graphics capabilities and the strength of our multi-generational roadmap, we are the only company with the GPU and x86 silicon expertise to address the broad needs of the datacenter and help advance the proliferation of machine intelligence.”

The first products in the lineup are the Radeon Instinct MI6, the MI8, and the MI25. The 150W Radeon Instinct MI6 accelerator is powered by a Polaris-based GPU, packs 16GB of memory (224GB/s peak bandwidth), and will offer up to 5.7 TFLOPS of peak FP16 performance. Next up in the stack is the Fiji-based Radeon Instinct MI8. Like the Radeon R9 Nano, the Radeon Instinct MI8 features 4GB of High-Bandwidth Memory (HBM), with peak bandwidth of 512GB/s, and it's got a nice small form factor too. The MI8 will offer up to 8.2 TFLOPS of peak FP16 compute performance, with a board power that typically falls below 175W. The Radeon Instinct MI25 accelerator will leverage AMD’s next-generation Vega GPU architecture and has a board power of approximately 300W. All of the Radeon Instinct accelerators are passively cooled, which is to say they don’t have any on-board fans of their own, but when installed into a server chassis you can bet there will be massive amounts of air blown across their heatsinks.
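To put the quoted specs side by side, here is a rough Python sketch that divides each card's peak FP16 throughput by its board power. It uses only the numbers above; the MI25 is left out because AMD hasn't published a TFLOPS figure for it, and because the MI8's 175W is an upper bound, its result is best read as a floor. Peak GFLOPS per watt is a crude yardstick, not a measure of real training or inference efficiency.

```python
# Peak FP16 throughput (TFLOPS) and board power (W) as quoted in the article.
# The MI25 has no published TFLOPS figure yet, so it is omitted here.
ACCELERATORS = {
    "MI6": {"fp16_tflops": 5.7, "board_power_w": 150},
    "MI8": {"fp16_tflops": 8.2, "board_power_w": 175},  # "typically below 175W"
}


def gflops_per_watt(fp16_tflops: float, board_power_w: float) -> float:
    """Convert peak TFLOPS and board power into peak GFLOPS per watt."""
    return fp16_tflops * 1000.0 / board_power_w


def efficiency_table(cards: dict) -> dict:
    """Peak FP16 GFLOPS/W for each card, rounded to one decimal place."""
    return {
        name: round(gflops_per_watt(spec["fp16_tflops"], spec["board_power_w"]), 1)
        for name, spec in cards.items()
    }


if __name__ == "__main__":
    for name, eff in efficiency_table(ACCELERATORS).items():
        print(f"{name}: {eff} GFLOPS/W (peak FP16)")
```

That works out to roughly 38 GFLOPS/W for the MI6 and at least 47 GFLOPS/W for the MI8.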

Astute readers may have noticed that the Radeon Instinct model numbers are roughly based on the compute capabilities of each card. Assuming that rings true for the MI25, it should offer more than triple the performance of the MI8, at approximately 25 TFLOPS. The “2X Packed Math” spec, which should double FP16 throughput relative to FP32, could account for much of that increase, however. The high-bandwidth cache and controller most likely refer to the HBM2 configurations that will be used on next-gen Vega-based GPUs, but details weren’t given. There was also some speculation that the MI25 was packing two GPUs due to that 300W board power, but we have no confirmation as of yet.
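The model-number reasoning is easy to check with a little arithmetic. This sketch assumes, as the paragraph above does, that the MI25 lands at about 25 TFLOPS of peak FP16. It further assumes that "2X Packed Math" simply doubles FP16 throughput, while the Fiji-based MI8 runs FP16 at its FP32 rate; both are working assumptions, not AMD-confirmed details.

```python
# Peak FP16 TFLOPS quoted in the article. The MI25 value is the article's
# estimate (model number ~= TFLOPS), not a published AMD spec.
PEAK_FP16_TFLOPS = {"MI6": 5.7, "MI8": 8.2, "MI25": 25.0}

# Assumption: "2X Packed Math" doubles FP16 throughput relative to FP32,
# while the Fiji-based MI8 runs FP16 at its FP32 rate (no packed math).
PACKED_MATH_FACTOR = 2.0


def model_number(name: str) -> int:
    """Numeric part of a Radeon Instinct model name, e.g. 'MI25' -> 25."""
    return int(name.removeprefix("MI"))


def speedup_breakdown(new_tflops: float, old_tflops: float,
                      packed_factor: float) -> tuple[float, float]:
    """Return (total speedup, speedup left over after the packed-math factor)."""
    total = new_tflops / old_tflops
    return total, total / packed_factor


if __name__ == "__main__":
    for name, tflops in PEAK_FP16_TFLOPS.items():
        print(f"{name}: model number {model_number(name)}, "
              f"peak FP16 {tflops} TFLOPS")
    total, rest = speedup_breakdown(PEAK_FP16_TFLOPS["MI25"],
                                    PEAK_FP16_TFLOPS["MI8"],
                                    PACKED_MATH_FACTOR)
    print(f"MI25 vs MI8: {total:.2f}x total, {rest:.2f}x beyond packed math")
```

Under those assumptions the MI25 would be about 3.05x the MI8 overall; packed math supplies a 2x factor, leaving roughly 1.5x for clock speed, shader count, and other architectural changes.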

Like the recently released Radeon Pro WX series of professional graphics cards for workstations, Radeon Instinct accelerators will be built exclusively by AMD. All of the Radeon Instinct cards will also support AMD Multiuser GPU (MxGPU) hardware virtualization technology, which conforms to the SR-IOV (Single Root I/O Virtualization) industry standard. They’ll also offer 64-bit PCIe addressing with Large Base Address Register (BAR) support for multi-GPU peer-to-peer communications.

AMD is also working on next-gen interconnect technologies that surpass today’s PCIe Gen3 standard, with the goal of further enhancing performance for future machine intelligence applications. AMD is collaborating on a number of open-source, high-performance I/O standards too, which support a variety of server CPU architectures including x86, OpenPOWER, and ARM AArch64. AMD also reiterated that it is a founding member of CCIX, Gen-Z, and OpenCAPI, and that it is working toward future 25Gbit/s PHY-enabled accelerator and rack-level interconnects for Radeon Instinct.

A handful of AMD's partners were also on hand to talk about future servers that will feature Radeon Instinct. Inventec has a rack planned that will pack 120 Radeon Instinct MI25 Vega-based GPUs and offer up to 3 PetaFLOPS of GPU compute performance. Specifications and features for other powerful servers were shown as well. The Falconwitch server pictured above (lower-left) will reportedly offer up to 400 TeraFLOPS of GPU compute performance. For comparison, NVIDIA's DGX-1 offers roughly 170 TeraFLOPS.
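The rack-level figures follow from simple multiplication. This sketch assumes each MI25 delivers about 25 TFLOPS (the article's estimate, not a published spec) and that all of the system numbers are peak figures that scale linearly with GPU count, which real workloads won't achieve. The GPU count it derives for Falconwitch is an inference from the 400 TFLOPS number, not a confirmed configuration.

```python
# System-level figures quoted in the article, in TFLOPS of peak GPU compute.
MI25_TFLOPS = 25.0         # assumption: the article's estimate, not a published spec
INVENTEC_RACK_GPUS = 120
FALCONWITCH_TFLOPS = 400.0
DGX1_TFLOPS = 170.0


def aggregate_pflops(gpu_count: int, tflops_per_gpu: float) -> float:
    """Naive aggregate peak: per-GPU peak times GPU count, in PetaFLOPS."""
    return gpu_count * tflops_per_gpu / 1000.0


def implied_gpu_count(system_tflops: float, tflops_per_gpu: float) -> float:
    """GPU count implied by a system-level peak at a given per-GPU peak."""
    return system_tflops / tflops_per_gpu


if __name__ == "__main__":
    rack = aggregate_pflops(INVENTEC_RACK_GPUS, MI25_TFLOPS)
    gpus = implied_gpu_count(FALCONWITCH_TFLOPS, MI25_TFLOPS)
    ratio = FALCONWITCH_TFLOPS / DGX1_TFLOPS
    print(f"Inventec rack: {rack:.1f} PFLOPS peak")
    print(f"Falconwitch implies ~{gpus:.0f} MI25 GPUs")
    print(f"Falconwitch vs DGX-1 (peak): {ratio:.2f}x")
```

That gives 3.0 PFLOPS for the 120-GPU Inventec rack, an implied 16 MI25s for Falconwitch, and a roughly 2.35x peak advantage over the DGX-1, assuming both vendors' numbers are peak FP16 figures.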

Both Radeon Instinct hardware and MIOpen software are slated for release in the first half of 2017. As we get closer to the official launch, we're sure to have more information, especially with regard to AMD's next-generation Vega GPU architecture.

On a side note, AMD is hosting a live-streaming event tomorrow (12/13) at 3PM CST, dubbed "New Horizon", during which the company will be giving a sneak peek of its upcoming Zen CPUs. There may be more information revealed during that event -- AMD fans should set aside some time to watch.