AI Toy Privacy Fumble Exposes 50,000 Private Chat Logs With Kids

Now, anyone shocked that a cloud-connected product shipped with a serious security flaw is underestimating how often this happens. Software has bugs, authentication gets misconfigured, and dashboards end up exposed to the public internet, especially at fast-moving startups racing to ship before their competitors do. What matters in this case is not that "a flaw existed," but how severe it was and how easily it could be exploited. On that front, Bondu's failure is hard to excuse.

What makes this incident stand out is the nature of the data and the apparent lack of basic security thinking behind it. This wasn't a stray debug log or anonymized telemetry. It was detailed records of children's conversations, precisely the kind of data that should trigger extreme caution. Leaving that information accessible to anyone with a generic Google login suggests not just a bug but a deeper naïveté about threat models. If two researchers stumbled across this in minutes, it's natural to wonder what a motivated black-hat actor could have done with more time and fewer scruples, or may already have done without anyone knowing.

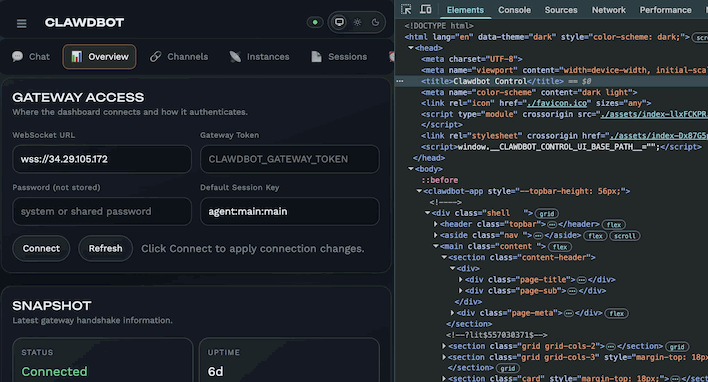

Unfortunately, Bondu's case isn't an isolated one. A similar pattern emerged recently with Moltbot (formerly known as Clawdbot), an open-source AI agent framework that runs locally but relies on cloud-based AI services. Security researchers found that the web-based control interfaces of thousands of Moltbot instances were exposed to the internet with no authentication at all, leaking access tokens for connected services such as social media accounts and cloud AI APIs, along with chat histories and more. In effect, users' entire digital lives were sitting behind an unlocked door, visible to anyone who happened to find it.

The common thread between Bondu and Moltbot isn't malicious intent but a broader lack of cybersecurity awareness in the AI startup ecosystem. In the rush to build clever agents, chatbots, and AI-powered products, teams appear to be skipping the most basic security principles: authentication, access control, and the assumption that anything exposed to the internet will be found sooner or later.
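The gap between authentication ("who are you?") and access control ("are you allowed to see this?") is easy to state and easy to miss. The Python sketch below is purely illustrative and is not based on Bondu's actual code: the `Session` type, the staff allowlist, and all function names are hypothetical. It contrasts a check that admits any verified login, the failure mode described earlier, with one that also requires the account to appear on an explicit, deny-by-default list.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical staff allowlist. A real system would keep this in a database
# or identity provider rather than in source code.
AUTHORIZED_STAFF = {"alice@toyco.example", "bob@toyco.example"}


@dataclass
class Session:
    email: str            # identity asserted by the login provider
    email_verified: bool  # whether the provider verified that address


def is_authenticated(session: Optional[Session]) -> bool:
    # Authentication: a successful login only proves the user controls
    # *some* account, not that they belong anywhere near the data.
    return session is not None and session.email_verified


def can_view_chat_logs(session: Optional[Session]) -> bool:
    # Authorization: a separate check that denies access by default.
    if not is_authenticated(session):
        return False
    return session.email.lower() in AUTHORIZED_STAFF


def flawed_can_view_chat_logs(session: Optional[Session]) -> bool:
    # The failure mode: any logged-in account is treated as authorized.
    return is_authenticated(session)


if __name__ == "__main__":
    stranger = Session("random.user@gmail.com", True)
    staff = Session("alice@toyco.example", True)
    print(flawed_can_view_chat_logs(stranger))  # True: the bug
    print(can_view_chat_logs(stranger))         # False
    print(can_view_chat_logs(staff))            # True
```

The key design choice is that the authorization check is a separate, explicit step that fails closed: an account not on the list gets nothing, no matter how valid its login is.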

To Bondu's credit, the company says it fixed the issue quickly once notified and found no evidence of abuse beyond the researchers involved. That's the bare minimum response, but it doesn't erase the broader lesson here: when you centralize sensitive data in the cloud, mistakes scale instantly. A single misconfigured portal can turn a niche product into a privacy nightmare.

As a tool, AI remains enormously useful and still largely unexplored, with real potential in education, accessibility, and creativity. The problem here is not "AI" in the abstract but specific product decisions, such as pairing conversational AI with children, microphones, and always-on cloud services, a combination that is, let's say, "questionable" on the most generous reading.

There's also the concern that the long-term effects of chatbot interactions on kids are still unknown. Combine that with remote servers, detailed behavioral profiling, and sloppy security, and skepticism becomes not just reasonable but necessary. AI toys that depend on constant cloud connectivity may be clever novelties today, but they demand a level of technical maturity that many startups clearly haven't reached yet. Until that changes, parents should assume that anything said to an internet-connected toy is, in effect, being posted to the public internet.