AI Breakthrough Leaves ChatGPT In The Dust With Human-Like Cognitive Capabilities

GPT-4 might come off as incredibly intelligent in some ways, but in others it is just as limited as most contemporary neural networks. One big limitation is what researchers call "systematic generalization": the ability to learn a new concept from limited information and then combine it with what you already know in new ways. A key example is the way humans can hear a new word, work out its meaning from context, and then immediately use it correctly alongside other words. Someone who learns the verb "skip," for instance, instantly understands "skip twice" or "skip backwards."

Well, it turns out that you actually can get a neural network to perform systematic generalization; you just have to specifically teach it to do so. Brenden Lake, a cognitive computational scientist at New York University, has co-authored a paper describing a training approach called "meta-learning for compositionality" (MLC) that appears to give networks trained this way "human-like generalization."

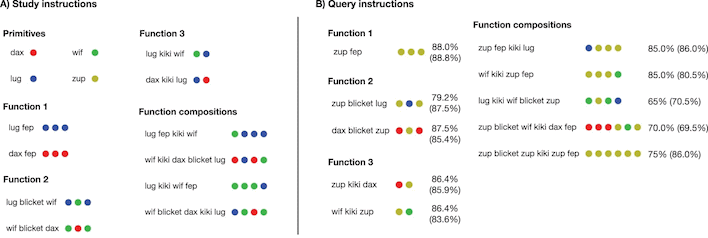

It goes like this: if you want to teach systematic generalization to an AI, you first need a way to measure it in humans. The researchers created an experimental paradigm that asks human participants to "process instructions in a pseudolanguage in order to generate abstract outputs." Specifically, participants were given 14 study instructions (example instructions paired with their correct outputs) and then asked to produce the outputs for ten new instructions. This required them to learn the meanings of nonsense words from just a few examples and then reuse those words in new combinations.
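To make the paradigm concrete, here is a minimal Python sketch of the kind of pseudolanguage involved. The words (`dax`, `wif`, `lug`, `fep`, `blicket`) and their rules are hypothetical stand-ins chosen for illustration, not the exact grammar from the study: primitive words map to colored symbols, and function words transform whatever they are applied to. A learner who has absorbed the study examples should be able to answer the queries, which recombine familiar words in new ways.

```python
# Hypothetical toy pseudolanguage, loosely in the spirit of the study's task.
# The words and rules here are illustrative assumptions, not the study's grammar.

PRIMITIVES = {"dax": "RED", "wif": "GREEN", "lug": "BLUE"}


def interpret(instruction: str) -> list[str]:
    """Map a pseudolanguage instruction to a sequence of colored-circle symbols."""
    words = instruction.split()
    if len(words) == 1 and words[0] in PRIMITIVES:
        # A primitive word names a single colored circle.
        return [PRIMITIVES[words[0]]]
    if len(words) == 2 and words[1] == "fep":
        # "X fep": repeat X's output three times (hypothetical rule).
        return interpret(words[0]) * 3
    if len(words) == 3 and words[1] == "blicket":
        # "X blicket Y": output X, then Y, then X again (hypothetical rule).
        x, y = interpret(words[0]), interpret(words[2])
        return x + y + x
    raise ValueError(f"cannot interpret: {instruction!r}")


# Study examples: instructions shown to the learner together with their outputs.
study = ["dax", "wif", "lug", "dax fep", "wif blicket lug"]
# Queries: new combinations of the same words that the learner must produce.
queries = ["lug fep", "dax blicket wif"]

for instruction in study + queries:
    print(f"{instruction!r:>20} -> {interpret(instruction)}")
```

A hand-written interpreter like this is systematic by construction, because the rules are coded in directly. The question the study asks is whether a neural network can learn to behave this way from a handful of examples alone.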

By training a neural network on a long series of tasks like these, each with its own made-up words and rules, the researchers got it to perform about as well as the human participants, and in some cases better. That is significant because neural networks have long been criticized for lacking exactly this kind of systematic ability. Paul Smolensky, a cognitive scientist at Johns Hopkins University, has said that the network's performance on this generalization task is indicative of "a breakthrough in the ability to train networks to be systematic."
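The key trick in meta-learning for compositionality is that the network is not trained on one fixed language but on a stream of "episodes," each with freshly assigned word meanings, so the only way to succeed is to infer those meanings from the study examples in front of it. Below is a minimal sketch of such an episode generator. The vocabulary, the single "fep" repetition rule, and the `make_episode` helper are all hypothetical; this sketch only reshuffles word meanings, a simplification of the randomly generated grammars described in the paper, and the transformer model itself is omitted.

```python
import random

# Hypothetical vocabulary and symbols for illustration; not the study's actual materials.
WORDS = ["dax", "wif", "lug", "zup"]
COLORS = ["RED", "GREEN", "BLUE", "YELLOW"]


def make_episode(rng: random.Random):
    """Build one episode with a freshly randomized word-to-color mapping.

    Because the mapping is reshuffled every episode, a model cannot memorize
    what "dax" means; it has to infer it from that episode's study examples.
    """
    mapping = dict(zip(WORDS, rng.sample(COLORS, len(COLORS))))
    shown, held_out = rng.sample(WORDS, 2)  # two distinct words
    # Study set: every primitive on its own, plus one example of the "fep" rule.
    study = [(word, [mapping[word]]) for word in WORDS]
    study.append((f"{shown} fep", [mapping[shown]] * 3))
    # Query: the same rule applied to a word it was never shown with.
    query = (f"{held_out} fep", [mapping[held_out]] * 3)
    return study, query


rng = random.Random(0)
for _ in range(3):
    study, (query_in, query_out) = make_episode(rng)
    # A sequence model would read `study` plus `query_in` and be trained to
    # output `query_out`; the model and optimizer are omitted here.
    print("study:", study)
    print("query:", query_in, "->", query_out)
```

Because "dax" might mean red in one episode and yellow in the next, memorization never pays off; what the network is pushed to learn is the general skill of picking up meanings from examples and recombining them.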

So what does this mean? Are we on the verge of another AI revolution? Maybe, but it's not clear yet. Lake's network became very good at generalizing on the specific kinds of tasks it was trained on, but there is no guarantee that the same technique will carry over to large language models or computer vision models. Those models are trained on enormous datasets, and training them takes a long time even on hyperscale supercomputers, so it may be a while before we see the fruits of these findings, assuming they apply to larger models at all.

If it does work at scale, though, this breakthrough could bring two major benefits to systems like ChatGPT. First, it could reduce hallucinations, where the AI confidently produces information that is false or nonsensical. A model that generalizes systematically should be better at following the actual rules behind its data and less prone to latching onto patterns that aren't really there. Second, it could make training much more efficient, since a model that can generalize systematically should need far less data to learn.