NVIDIA Jetson AGX Orin Dev-Kit Eval: Inside An AI Robot Brain

Fast forward to 2022 and NVIDIA hasn't let off the gas. In March, the company pulled back the curtain on the Jetson AGX Orin, the latest platform for robotics and AI based on the company's Ampere GPU technology. While COVID-related lockdowns overseas delayed the company in getting its hardware into the hands of developers, those units are shipping now and we've got one of the developer kits in-house. So, without further ado, let's meet the Jetson AGX Orin.

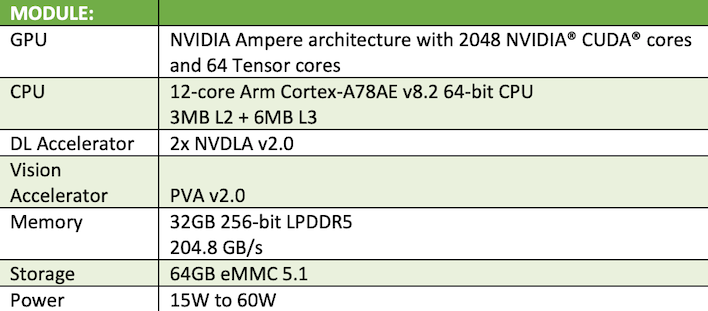

Jetson AGX Orin Developer Kit Specs

We've detailed the specs of the various Jetson AGX Orin kits before, but the gist is that there's roughly a GeForce RTX 3050 notebook GPU's worth of CUDA and Tensor cores doing most of the heavy lifting. That GPU horsepower sits alongside a pair of NVIDIA's Deep Learning Accelerators (DLAs) and a dozen Arm Cortex-A78AE CPU cores, in a package with a power budget of up to 60 watts. All of it is backed by 32 GB of unified LPDDR5 memory with over 200 GB/s of bandwidth. If that sounds, at a high level, a lot like Apple's M1 Pro or M1 Max, let's just say that Cupertino didn't invent shared memory architectures.
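The "over 200 GB/s" figure falls out of simple arithmetic: peak bandwidth is bytes per transfer times transfers per second. The sketch below assumes the 256-bit LPDDR5 interface running at 6400 MT/s that NVIDIA lists for the Orin module; neither number comes from our own testing, and the function name is just illustrative.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: int) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s."""
    bytes_per_transfer = bus_width_bits / 8          # bits -> bytes moved per transfer
    transfers_per_second = transfer_rate_mts * 1e6   # MT/s -> transfers per second
    return bytes_per_transfer * transfers_per_second / 1e9


if __name__ == "__main__":
    # Assumed module figures: 256-bit LPDDR5 at 6400 MT/s.
    print(peak_bandwidth_gbs(256, 6400))
```

That works out to 204.8 GB/s, a theoretical ceiling. Because the memory is unified, the CPU cores, GPU, and DLAs all draw from that same pool, so real-world throughput for any one engine will be lower.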

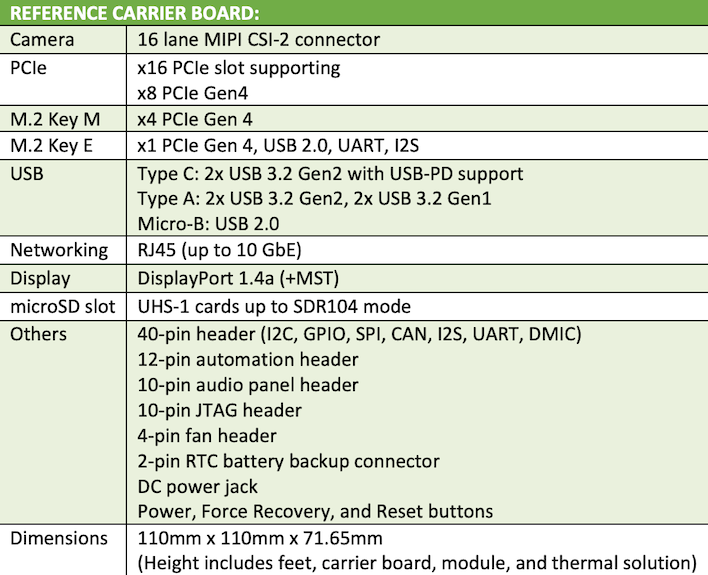

The production module has plenty of I/O for cameras, microphones, and other sensors, and the dev kit goes all out in exposing it. We've got 10Gbps USB 3.2, DisplayPort, 10 Gigabit Ethernet, a 40-pin GPIO header, and headers for automation, audio, and JTAG programmers. The microSD slot and two M.2 slots leave room for plenty of additional storage and wireless connectivity. There's also a PCI Express Gen 4 slot in an x16 physical form factor, wired for eight lanes. Since the Jetson AGX Orin developer kit has only 64 GB of eMMC storage on board, that slot could be a good place to add more storage, for example.

The Jetson AGX Orin developer kit can run headless, tethered to a Linux PC via one of its USB-C or micro-USB ports, or as a standalone Linux box. We chose to run it as its own self-contained PC; there's plenty of memory and CPU horsepower, and it comes with a full installation of Ubuntu Linux 20.04 LTS ready to roll. We'll talk in depth about our experience with Orin shortly, but whether you use the Jetson AGX Orin as a tethered appliance attached to another Linux PC or as a standalone development environment, everything needed to get started is right in the box.

Working With The Jetson AGX Orin Developer Kit

All of this is fun to read and write about, but NVIDIA sent us a kit, so we needed to dive in and check it out. As you can see in the photos above, the Jetson AGX Orin dev kit is tiny. It's about 4.3 inches square and three inches tall, roughly the size of one of Intel's diminutive NUCs, if a bit taller. The compact size is helped by an external power supply, which plugs into the USB-C port just above the barrel connector; the barrel jack can be used instead with an alternative power supply. Plug in a keyboard, mouse, and DisplayPort monitor (or an HDMI display with a DP-to-HDMI adapter) and power it up to get started.

To help us test various features, NVIDIA also included a USB headset and a 720p webcam, although these don't normally ship with the developer kit. These pieces of hardware mattered, however, because one of the demos we'll show off soon looks for these specific hardware IDs.
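We didn't dig into exactly how the demo matches its headset, but on Linux the USB vendor and product IDs of attached devices are exposed under `/sys/bus/usb/devices`. The sketch below, built around hypothetical helpers `list_usb_ids` and `has_device`, shows one way a program could check whether a specific device is plugged in. It is an illustration under that sysfs assumption, not the demo's actual code.

```python
from __future__ import annotations

from pathlib import Path

SYSFS_USB = "/sys/bus/usb/devices"  # standard Linux sysfs location for USB devices


def list_usb_ids(sysfs_root: str = SYSFS_USB) -> set[tuple[str, str]]:
    """Return (vendor_id, product_id) pairs, as lowercase hex, for attached USB devices."""
    ids: set[tuple[str, str]] = set()
    root = Path(sysfs_root)
    if not root.is_dir():
        return ids  # no sysfs USB tree, e.g. not running on Linux
    for dev in root.iterdir():
        vendor_file = dev / "idVendor"
        product_file = dev / "idProduct"
        # Interface entries (such as "1-2:1.0") lack these files, so skip them.
        if vendor_file.is_file() and product_file.is_file():
            vendor = vendor_file.read_text().strip().lower()
            product = product_file.read_text().strip().lower()
            ids.add((vendor, product))
    return ids


def has_device(vendor_id: str, product_id: str, sysfs_root: str = SYSFS_USB) -> bool:
    """True if a device with the given hex vendor/product IDs is attached."""
    return (vendor_id.lower(), product_id.lower()) in list_usb_ids(sysfs_root)
```

To use it, pass the four-digit hex vendor and product IDs that `lsusb` reports for your own headset or webcam; a demo hard-wired to one pair of IDs is exactly why other hardware needs source changes.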

The Jetson AGX Orin comes with Ubuntu Linux 20.04 preinstalled right out of the box, so the initial startup sequence will be familiar to Ubuntu veterans. After we picked our language, location, username, and password, we were presented with the GNOME 3 desktop that Ubuntu has shipped as standard ever since retiring its Unity interface. NVIDIA included some helpful shortcuts on the desktop that open documentation links in the browser and example code folders in GNOME's file browser. But before we could get started, we needed to upgrade to NVIDIA JetPack 5, which is around a 10 GB download on its own, and install a code editor of our choice.

Since Microsoft added support for Arm64 Linux distributions to Visual Studio Code in 2020, the editor has become a popular choice among JetPack developers, whether they prefer C++ or Python. It can be downloaded directly from the Other Downloads page on Microsoft's Visual Studio Code site, or installed via apt-get at the command line. The VS Code Marketplace has all the language support extensions needed for both languages.

The first time we opened a project, VS Code prompted us to install everything we were missing, so it was a pretty painless setup process.

After installing JetPack 5 and downloading NVIDIA's benchmark tools and code samples, we had around 15 GB of the built-in 64 GB eMMC storage in use. Developers who want to work directly on the system should keep that in mind, since 64 GB doesn't leave tons of room for data and projects, particularly the image and video data used for inference with visual AI models. Remember that the Jetson AGX Orin can run in headless mode connected to another Linux PC over USB, which is one way to work around this limitation; another is to store projects on an external USB drive. The 10Gbps USB-C port should be fast enough that most developers won't notice a slowdown.
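Keeping an eye on how much of the eMMC is left before pulling in datasets takes only a few lines of Python's standard library. This minimal sketch reports on whichever filesystem holds the path you pass in; it assumes the root filesystem sits on the eMMC, as it does in the default install, so check your own mount layout if you've moved things to an external drive. The `storage_report` name is just illustrative.

```python
import shutil


def storage_report(path: str = "/") -> dict:
    """Summarize the filesystem holding `path`, in decimal gigabytes."""
    usage = shutil.disk_usage(path)  # named tuple of total, used, free (bytes)
    gb = 1e9
    return {
        "total_gb": round(usage.total / gb, 1),
        "used_gb": round(usage.used / gb, 1),
        "free_gb": round(usage.free / gb, 1),
        "percent_used": round(100 * usage.used / usage.total, 1),
    }


if __name__ == "__main__":
    print(storage_report("/"))
```

Note that the formatted capacity reported here will be somewhat below the nominal 64 GB, and used plus free may not add up to total exactly because of blocks the filesystem reserves.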

Exploring The Jetson AGX Orin Demos

The other side of the development sample coin is demos. In addition to the code samples NVIDIA provides, there's a host of fully functional demonstrations that developers can dig into to see how the company's trained AI models react to live input. We dug into each to see how they functioned and to explore practical use cases. The biggest and potentially most impactful demo NVIDIA provided was NVIDIA Riva Automatic Speech Recognition (ASR). This one is best shown by capturing the machine learning model at work on video, so that's what we've embedded below.

The ASR demo presented us with a blank terminal window, and as we talked, it detected our speech and transcribed it in real time. There are loads of other speech recognition tools on the market, but this is just one part of the human-robot interface. Pair ASR with conversational AI and text-to-speech synthesis and you've got a robot that can not just talk to you but hold a conversation, something NVIDIA is particularly proud of; we'll take a look at that on the next page. Incidentally, this demo isn't exactly hardware agnostic: this particular ASR application was built for the specific headset NVIDIA sent us, though the source code can be extended to support additional hardware.

As you can see in the video, the transcription wasn't perfect, but NVIDIA says it's good enough to let a service robot suss out a user's intent. The video is unscripted, and that's intentional: most conversations aren't scripted, even if you've put a fair bit of thought into what you want to say. As I started and stopped, the AI started and stopped along with me, and in that regard the transcription was a pretty fair record of what I had to say. It's fun to watch the AI try to figure out what I mean; words flash into and out of the terminal window as I go and it starts to detect meaning.
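We didn't inspect the demo's internals, but the flashing words are consistent with how streaming recognizers commonly work: they emit interim guesses that get replaced wholesale, then lock in a final result for each segment. The sketch below illustrates that generic pattern with hypothetical `AsrEvent` and `TranscriptBuffer` types; it is not Riva's client API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AsrEvent:
    """One recognition update: the current text and whether it is final."""
    text: str
    is_final: bool


class TranscriptBuffer:
    """Keeps committed (final) segments separate from the current interim guess."""

    def __init__(self) -> None:
        self.final_segments: list[str] = []
        self.interim: str = ""

    def update(self, event: AsrEvent) -> str:
        if event.is_final:
            # A final result commits the segment and clears the interim guess.
            self.final_segments.append(event.text)
            self.interim = ""
        else:
            # An interim result overwrites the previous guess entirely,
            # so words can appear and then vanish on screen.
            self.interim = event.text
        return self.render()

    def render(self) -> str:
        parts = self.final_segments + ([self.interim] if self.interim else [])
        return " ".join(parts)


if __name__ == "__main__":
    buf = TranscriptBuffer()
    for ev in [AsrEvent("hello word", False), AsrEvent("hello world", True), AsrEvent("this is", False)]:
        print(buf.update(ev))
```

Running the usage loop prints `hello word`, then `hello world`, then `hello world this is`: the misheard interim word is silently corrected once the final result arrives.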

This one was fun to watch as it worked, but it's also a pretty big deal, because automatic speech recognition within a low power budget is a big part of what will make robots easier to interact with. Pulling meaning and context from sentences is hard enough for humans sometimes, so training an AI to do it is a monumental task. NVIDIA's solution is not the first, but as our video (which intentionally avoids talking about technology) demonstrates, it's still not exactly a solved problem. It's getting better, though.

Which brings us to TAO, NVIDIA's "Train, Adapt, Optimize" toolkit for adapting AI models. TAO lets developers take a pretrained model and adapt it to their own needs to get up and running quickly. As an example, NVIDIA provides the Action Recognition Net model, which has been trained to identify certain exercise-related actions, like walking and running, as well as to detect when a person falls. The model was trained on a few hundred short video clips depicting these actions from different angles. NVIDIA provides a tutorial on extending the model to identify additional actions, like doing a push-up or a pull-up.

We followed along and could then deploy the model either on the Jetson AGX Orin itself or with NVIDIA's DeepStream on A100 instances in Azure. This is where a datacenter accelerator instance still towers over even the upgraded Jetson: the Jetson was fast enough to run the model and confirm that our changes were right, whereas the DeepStream instances are ridiculously fast at poring over video files and identifying the actions performed in them. It gave us a taste of the model enhancement workflow afforded by TAO and a feel for the develop-deploy-test workflow that Jetson AGX Orin and DeepStream provide in tandem.

Playing with the tools is fun and all, but these pieces of kit are made for serious work. Next up, let's take a look at some practical implications and see what kinds of conclusions we can draw from our time with the kit.