All Eyes On Me: Researchers Develop Clever Way To Detect Otherwise Convincing Deepfakes

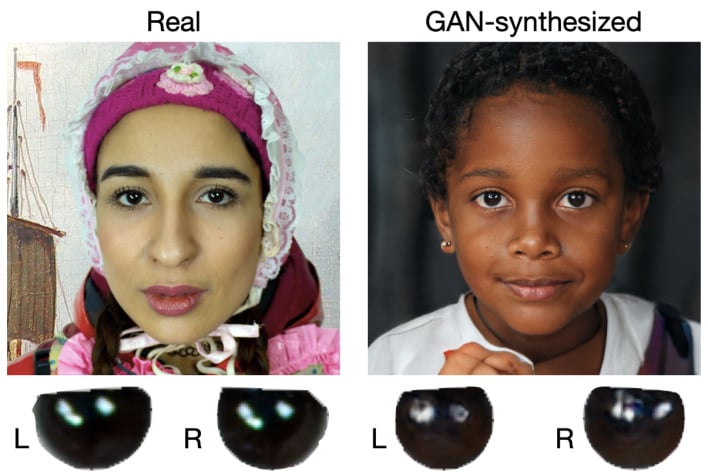

One of the most popular ways of generating human faces is a generative adversarial network (GAN) model. As the researchers' paper explains, these models can "synthesize highly realistic human faces that are difficult to discern from real ones visually." In fact, the images above are the ones the researchers used. They came from thispersondoesnotexist.com, a website that generates faces using the StyleGAN2 model.

After training the AI, the researchers found that their method detected generated images fairly effectively. It ran into trouble only with images that were not in a portrait orientation and with images in which no light was reflected in the eyes. The researchers also acknowledge that, with "non-trivial" manual post-processing, a forger could mitigate the inconsistencies the method relies on.
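The article does not spell out how the light in the eyes is compared. As an illustration only, here is a minimal Python sketch assuming the check compares the bright reflections (specular highlights) in the two eyes: in a real photo, both eyes usually reflect the same light sources, so their highlights should look alike, and a low overlap would be a warning sign. The function names, the brightness threshold of 200, the 0.5 cutoff, and the assumption that the two eye crops are already grayscale, equal-sized, and aligned are all hypothetical; this is not the researchers' actual implementation.

```python
def highlight_mask(eye, threshold=200):
    """Mark pixels bright enough to count as a highlight (hypothetical threshold)."""
    return [[px >= threshold for px in row] for row in eye]


def highlight_iou(left_eye, right_eye, threshold=200):
    """Intersection-over-union of the highlight masks of two aligned, same-size eye crops.

    Returns None when neither eye contains a highlight, mirroring the article's
    note that the method struggles when no light is reflected in the eyes.
    """
    left = highlight_mask(left_eye, threshold)
    right = highlight_mask(right_eye, threshold)
    inter = union = 0
    for row_l, row_r in zip(left, right):
        for a, b in zip(row_l, row_r):
            inter += a and b  # bright in both eyes
            union += a or b   # bright in at least one eye
    return inter / union if union else None


def looks_generated(left_eye, right_eye, cutoff=0.5):
    """Hypothetical decision rule: flag the face when the highlights overlap poorly."""
    score = highlight_iou(left_eye, right_eye)
    if score is None:
        return None  # no reflections, so no verdict
    return score < cutoff


# Toy 3x3 grayscale "eye crops" (0 = dark, 255 = bright reflection).
left = [[0, 255, 255], [0, 255, 0], [0, 0, 0]]
mismatched = [[0, 0, 0], [0, 0, 255], [0, 255, 255]]
print(highlight_iou(left, left), looks_generated(left, mismatched))
```

A real detector would first have to locate and align the eyes in the photo, which this sketch skips; that step is one reason unusual poses, such as non-portrait images, could cause trouble.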

Ultimately, the researchers hope to overcome these limitations with further training and investigation. Perhaps this approach, alongside other methods of detecting edited or generated images, can help sniff out deepfakes.