Enabling VRR On AMD's Radeon GPUs Shown Reducing Idle Power Consumption By Up To 81%

It turns out that all Radeon owners might need to do to cut idle power draw is enable variable refresh rate (VRR) display support. German hardware site ComputerBase.de elected to re-test the power consumption of a number of GPUs after changing to a new 4K 144-Hz monitor, and noticed that the switch drastically reduced the idle power consumption of the Radeon card in its test system.

The change came about because AMD's driver defaulted the new display to FreeSync operation, even though the Windows "Variable refresh rate" setting was toggled off. ComputerBase investigated and found that enabling VRR reduced power consumption on both RDNA 2 and RDNA 3 Radeon cards.

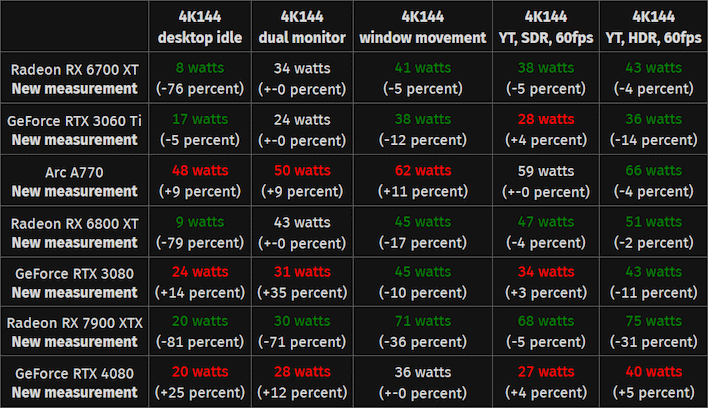

The results are pretty shocking. Both the Radeon RX 7900 XTX and Radeon RX 6800 XT saw drastic drops in power usage when idling at the desktop with a single 144-Hz UHD monitor connected. The RDNA 3 card also saw sharp reductions in power consumption in every other test ComputerBase performed, albeit not as extreme as the 81% drop in the single-monitor scenario.
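For readers who want to check a figure like that against their own wall-meter or software readings, the percentage is simply the drop divided by the starting value. Here is a minimal Python sketch; the function name and the wattages in the usage line are hypothetical placeholders chosen for illustration, not ComputerBase's measurements.

```python
def percent_reduction(before_watts: float, after_watts: float) -> float:
    """Return the drop from before_watts to after_watts as a percentage.

    A positive result means power consumption went down.
    """
    if before_watts <= 0:
        raise ValueError("before_watts must be positive")
    # Multiply first so round inputs give a round result.
    return (before_watts - after_watts) * 100.0 / before_watts


if __name__ == "__main__":
    # Hypothetical idle readings in watts (VRR off vs. VRR on).
    # These are illustrative values, not data from the article.
    vrr_off = 100.0
    vrr_on = 19.0
    print(f"Idle power reduced by {percent_reduction(vrr_off, vrr_on):.0f}%")
```

Note that a percentage alone says nothing about absolute draw; a large relative drop can still leave a card idling higher than a competitor.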

Of course, as ComputerBase points out, this still leaves AMD's biggest GPU drawing considerably more power than competitor NVIDIA's GeForce RTX 4080, which offers similar performance in most games. AMD is operating at a process disadvantage, though, and the RX 7900 XTX is quite a bit larger in both die area and memory size than the AD103 GPU in the RTX 4080.

The real loser in ComputerBase's new testing is the Arc A770, which not only sees higher power consumption with the latest driver, but is also generally rather thirsty compared to the similarly performing GeForce RTX 3060 Ti. Intel's ACM-G10 GPU is actually rather large, and likewise at a process technology disadvantage in this comparison. Hopefully Intel's next effort is more efficient.