When you plug a USB or Thunderbolt cable into your computer, that connection is often routed to a small chip that translates the data into a PCI Express signal for the final connection to your CPU. In that case, why don't we just use PCI Express for external cabling, too, and route it directly from CPU to device? This is a complex question that we aren't going to answer in full in this post, but part of the reason is simply that there hasn't really been a good standard for external PCI Express cabling.

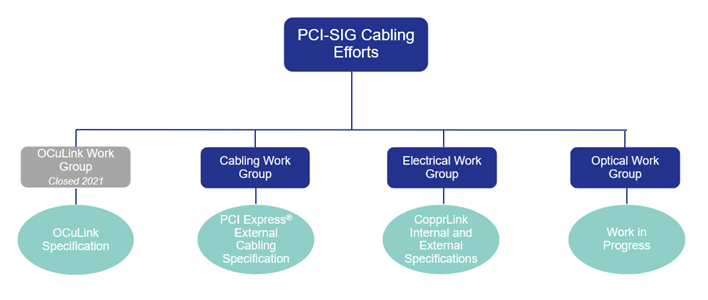

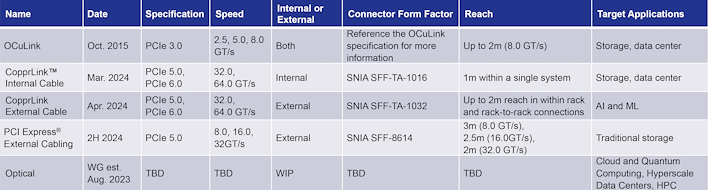

PCI-SIG created the OCuLink standard back in 2015, but it never really saw use outside of certain servers until very recently, when some vendors started using it to connect handheld systems, laptops, and mini-PCs to docking stations, external GPUs, and port replicators. This is a fascinating use case, but OCuLink was created with PCIe 3.0 in mind, and it was also intended to be used with optical carriers. PCI-SIG has already deprecated OCuLink in favor of its new standard, which is called CopprLink. (Yes, without the 'e'.)

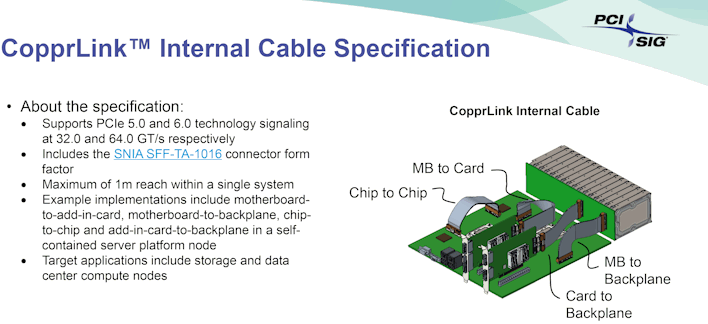

CopprLink, as you could guess from the name, drops the elements of the design tailored toward optical connections and focuses on regular old copper wires. The new cabling standard includes unique specifications for both internal and external cables that can reliably carry PCIe 5.0 and PCIe 6.0 connections at up to 64 GT/s. The internal cables will work for just about any type of internal PCIe connection. That means that we're not just talking about PCIe riser cables here, but theoretically anywhere you'd use PCIe inside a machine: to connect motherboards and backplanes, or even to connect individual chips.

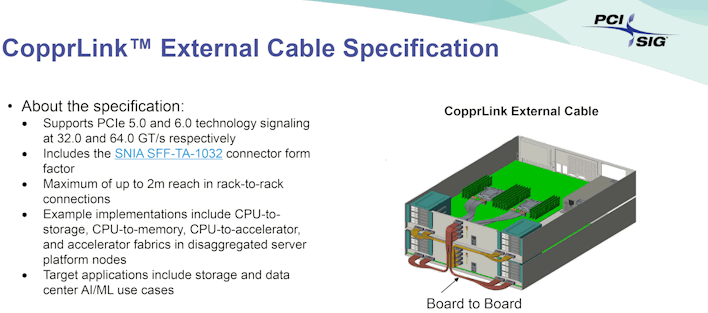

Meanwhile, the external connector serves as a replacement for the external OCuLink cables and supports longer distances, up to two meters, while retaining 64 GT/s functionality. The primary difference between the internal and external standards is that the external cable uses a more robust connector that can withstand cables being wiggled and bent. Both internal and external standards support configurations with four, eight, and sixteen lanes, meaning that we could soon be carrying up to 128 GB/second (that's giga-BYTES, not bits) of data in each direction over a single external cable.
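For anyone who wants to check that figure, here is a rough sketch of the arithmetic. It assumes one bit per lane per transfer and ignores encoding and protocol overhead, so real-world throughput will come in somewhat lower. The function name is ours, not anything from the spec.

```python
# Raw, one-way link bandwidth: each transfer carries one bit per lane.
# Encoding and protocol overhead are ignored here (a simplifying assumption).

def raw_bandwidth_gb_per_s(transfer_rate_gt_s: float, lanes: int) -> float:
    """Return raw one-way bandwidth in gigabytes per second."""
    gigabits_per_second = transfer_rate_gt_s * lanes
    return gigabits_per_second / 8  # 8 bits per byte

# The three lane counts mentioned above, all at 64 GT/s.
for lanes in (4, 8, 16):
    print(f"x{lanes}: {raw_bandwidth_gb_per_s(64, lanes):.0f} GB/s")
```

At 64 GT/s, that works out to 32, 64, and 128 GB/s for x4, x8, and x16 respectively.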

It's not hard to imagine the use cases for something like this: a direct PCI Express connection offers lower latency and higher throughput than even Thunderbolt 5. Of course, it also requires more work on the part of the hardware vendor to make use of it. Still, it'll be exciting if next-generation handhelds can make use of this standard to connect external devices using PCIe 5.0. A PCIe 5.0 x8 link could give the same bandwidth as a PCIe 4.0 x16 connection to a GPU, or you could dedicate four lanes to a GPU and two lanes each to a pair of SSDs. That would make a hell of a docking station for a handheld PC.
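To put rough numbers on that docking station idea, the sketch below compares the two links and then splits an x8 uplink between devices. It assumes the standard per-lane rates (16 GT/s for PCIe 4.0, 32 GT/s for PCIe 5.0) and 128b/130b encoding, and it ignores protocol overhead. The dock layout is purely hypothetical, and whether a given host can actually split its lanes this way depends on the hardware.

```python
from fractions import Fraction

# Per-lane transfer rates in GT/s; PCIe 4.0 and 5.0 both use 128b/130b encoding.
GT_PER_S = {"4.0": 16, "5.0": 32}
ENCODING = Fraction(128, 130)

def link_gb_per_s(gen: str, lanes: int) -> float:
    """One-way bandwidth in GB/s after encoding, before protocol overhead."""
    return float(GT_PER_S[gen] * lanes * ENCODING / 8)

# Hypothetical dock: an x8 PCIe 5.0 uplink shared by one GPU and two SSDs.
dock = {"gpu": 4, "ssd0": 2, "ssd1": 2}
assert sum(dock.values()) == 8  # the split must not exceed the uplink width

print(f"PCIe 5.0 x8 : {link_gb_per_s('5.0', 8):.2f} GB/s")
print(f"PCIe 4.0 x16: {link_gb_per_s('4.0', 16):.2f} GB/s")
for device, lanes in dock.items():
    print(f"{device}: x{lanes} -> {link_gb_per_s('5.0', lanes):.2f} GB/s")
```

The first two lines print the same value, since doubling the per-lane rate exactly offsets halving the lane count.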