Watch Intel Arc And Gaudi Smash Generative AI Workloads In These Slick Demos

The demos we saw fell broadly into two categories: remote, cloud-based generative AI using Intel's Gaudi AI accelerators, and local generative AI using Intel Arc GPUs, both integrated and discrete. Intel also showed us a brief demo of a Stable Diffusion AI workload running on the NPU of a Meteor Lake Core Ultra processor.

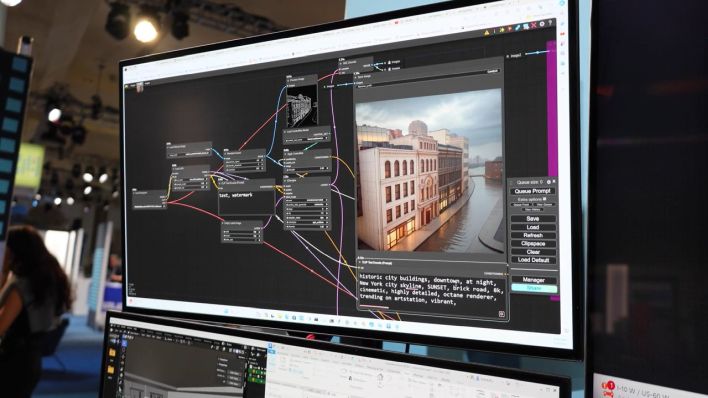

The most impressive demo was arguably the one running on a Meteor Lake laptop's integrated Arc graphics. Intel's Bob ran a CAD realism workflow built around Autodesk Revit and ComfyUI, the open-source community frontend for Stable Diffusion, to generate a photorealistic image of a modern building with a customized design in just a few seconds. Intel stressed that all of the tools and software (besides Revit, obviously) were open-source and freely available, including the "Intel Extension for PyTorch," or IPEX.

At that same demo station, Bob also had a Dell XPS PC equipped with an Arc A770 16GB graphics card. The extra video RAM is important because AI workloads can be thirsty for local memory on accelerators, and indeed, he demonstrated a much more impressive version of the same workload he was running on the Meteor Lake laptop nearby. The complex workflow completed in seconds, a legitimately impressive result considering the parameters.

Before that, another Intel employee showed us a couple of cool demos. The most straightforward was a plugin for the GNU Image Manipulation Program, better known as the GIMP. This plugin used the processor's NPU for Stable Diffusion AI image generation, and while the performance wasn't as good as the other machine using the Arc GPU, the NPU-based workload is much less likely to interfere with other tasks on the machine. It probably uses less power, too.

In the other demo that David showed us, he took a few pictures of his own face, uploaded them to an Intel server sporting Gaudi 2 accelerators, and then had that server train a custom model that could generate images of David in various settings quite far removed from the conference center where he took the photos. It was a cool demo, and it was very impressive how quickly the Gaudi-equipped machine trained the model on David's photos.

Judging by the state of the market, it would be easy to assume that Intel's relatively late start on discrete GPUs (compared to AMD and NVIDIA) means that Chipzilla has limited offerings for AI processing, but that's really not the case at all. Besides the potent Gaudi accelerators and affordable Arc GPUs, Intel's CPUs are in high demand for AI, too. In fact, a huge portion of AI processing is still done on CPUs, and that's not likely to change. In other words, don't count Intel out of the AI race just yet.