We still have several months to go before Christmas rolls around, but

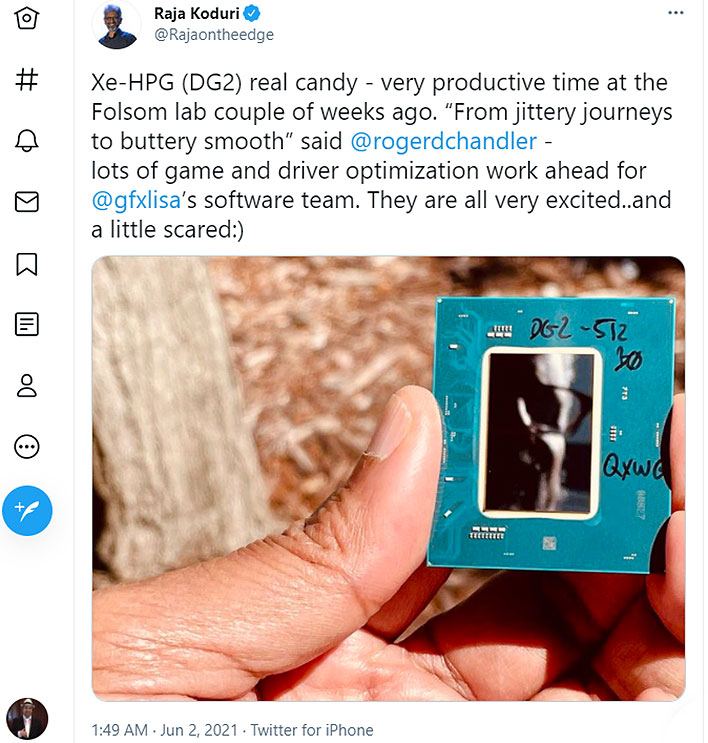

Intel's Raja Koduri has just given us a present all the same—over on Twitter, he posted a shiny photo of Intel's upcoming gaming GPU. And by shiny, I mean it is a high quality photo that shows Koduri's reflection in the rectangular integrated heat spreader (IHS) that sits atop the die.

This kind of snapshot is in stark contrast to the usual quality we get from leaked images, some of which look like they were taken with a Fisher-Price camera through a layer of Vaseline smudged on the lens. But the quality of the photo is not the story here. The story is the progress Intel is making on DG2, its upcoming line of discrete graphics solutions for gamers.

"Xe-HPG (DG2) real candy—very productive time at the Folsom lab couple of weeks ago. 'From jittery journeys to buttery smooth', said @rogerdchandler—lots of game and driver optimization work ahead for @gfxlisa’s software team. They are all very excited..and a little scared," Koduri said, with a smiling emoticon for good measure.

Reading between the lines, Koduri seems to be suggesting that the bulk of the hardware work on Intel's Xe-HPG graphics chip is finished, and that the focus is now shifting to driver development and software optimization. That matters, because drivers and software can make or break the overall experience.

It's not clear which exact version of DG2 we are looking at in the photo. Intel is expected to launch several different variants, including versions with 96, 128, 256, 384, and 512 execution units (EUs). Here's a brief, unofficial rundown based on prior leaks and rumors:

- SKU 1: 512 EUs, 1.1GHz-1.8GHz, 16GB GDDR6 (16Gbps), 256-bit bus, 100W TDP (mobile only)
- SKU 2: 384 EUs, 600MHz-1.8GHz, 12GB GDDR6 (16Gbps), 192-bit bus, 100W TDP (mobile only)
- SKU 3: 256 EUs, 450MHz-1.4GHz, 8GB GDDR6 (16Gbps), 128-bit bus, 100W TDP (mobile only)
- SKU 4: 196 EUs, 4GB GDDR6 (16Gbps), 64-bit bus
- SKU 5: 128 EUs, 4GB GDDR6 (16Gbps), 64-bit bus
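The bus width and memory speed in these rumored specs determine each card's theoretical peak memory bandwidth: bus width in bits times the per-pin data rate, divided by eight to get bytes. The short Python sketch below does that arithmetic for the unofficial list. It assumes the quoted 16Gbps is the per-pin GDDR6 data rate, and the names `peak_bandwidth_gb_s` and `RUMORED_BUS_WIDTHS` are purely illustrative.

```python
# Theoretical peak memory bandwidth for the rumored (unconfirmed) DG2 SKUs.
# Assumption: "16Gbps" is the per-pin GDDR6 data rate, as is conventional.

def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return peak bandwidth in GB/s: total bits per second divided by 8."""
    return bus_width_bits * data_rate_gbps / 8

# Hypothetical lookup table built from the unofficial leak list above.
RUMORED_BUS_WIDTHS = {
    "SKU 1": 256,
    "SKU 2": 192,
    "SKU 3": 128,
    "SKU 4": 64,
    "SKU 5": 64,
}

if __name__ == "__main__":
    for sku, bus in RUMORED_BUS_WIDTHS.items():
        bw = peak_bandwidth_gb_s(bus, 16)
        print(f"{sku}: {bus}-bit @ 16Gbps -> {bw:.0f} GB/s")
```

If the leaks are accurate, that works out to 512GB/s for SKU 1, 384GB/s for SKU 2, 256GB/s for SKU 3, and 128GB/s for SKUs 4 and 5. These are paper peaks; real-world throughput will be lower.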

Another rumor suggests the top-end DG2 part will deliver performance somewhere between a GeForce RTX 3070 and a GeForce RTX 3080. NVIDIA just launched Ti variants of both cards, so in essence we would be looking at performance in the neighborhood of a GeForce RTX 3070 Ti, if the information turns out to be on the money.

Intel's Xe-HPG Gaming GPU (DG2) Might Support AMD's FidelityFX Super Resolution Tech

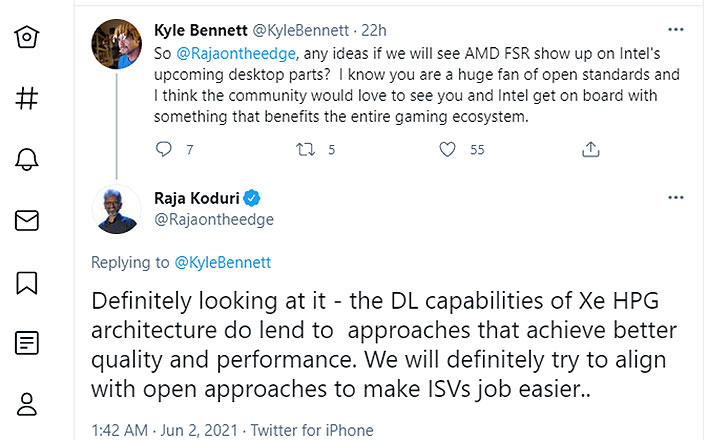

A photo of the actual DG2 graphics chip is not the only nugget Koduri served up. He also teased potential support for AMD's open source FidelityFX Super Resolution technology.

In response to a question about supporting the open source upscaling technology, Koduri said it is a possibility.

"Definitely looking at it—the DL [deep learning] capabilities of Xe HPG architecture do lend to approaches that achieve better quality and performance. We will definitely try to align with open approaches to make ISVs [Independent Software Vendors] job easier..," Koduri wrote.

FidelityFX Super Resolution (FSR) is basically AMD's answer to NVIDIA's Deep Learning Super Sampling (DLSS) technology. Both aim to upscale images with minimal loss of visual quality, with the ultimate goal of improving performance at higher resolutions. For example, rendering a scene at 1440p and upscaling it to 4K lessens the load on the GPU compared with rendering at 4K natively.
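To put a rough number on that savings, it helps to compare pixel counts. The Python sketch below assumes the standard 2560x1440 and 3840x2160 frame sizes for 1440p and 4K; the helper names are illustrative only. It counts shaded pixels and deliberately ignores the cost of the upscaling pass itself, which is not free.

```python
# Rough pixel-count comparison: rendering at 1440p versus native 4K.
# Ignores the cost of the upscaling pass and any non-pixel-bound work.

RESOLUTIONS = {
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a frame of the given dimensions."""
    return width * height

def shading_ratio(render: str, target: str) -> float:
    """Fraction of the target resolution's pixels that are actually rendered."""
    return pixel_count(*RESOLUTIONS[render]) / pixel_count(*RESOLUTIONS[target])

if __name__ == "__main__":
    ratio = shading_ratio("1440p", "4K")
    print(f"1440p renders {ratio:.0%} of the pixels of native 4K")
```

A 1440p frame has 3,686,400 pixels versus 8,294,400 at 4K, exactly 4/9 (about 44%) as many, which is why upscaling from a lower internal resolution can deliver such large frame rate gains in GPU-bound games.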

NVIDIA's latest DLSS 2.0 technology works wonderfully in our experience (it's a big improvement over DLSS 1.0), and we're hoping FSR works just as well. According to AMD, turning FSR on results in twice the performance, on average, versus native 4K rendering. That would be impressive if it holds true, especially if FSR can do it while maintaining the same or similar image quality.

Because FSR is open source, companies like NVIDIA and Intel are free to use it. NVIDIA is not really motivated, of course, because it is pushing its proprietary DLSS technology. But Intel might be more inclined, at least unless and until it develops a solution of its own. The company's plate is pretty full, though, so it makes sense to us that it would turn to an open source standard while focusing its efforts on its initial entry into the discrete GPU space.

We've reached out to Intel for more information about this and will provide an update when we hear back.