Intel Combats Growing Deepfake Threat With A Real-Time Detector That Looks At Blood Flow

Deepfake videos are produced using AI algorithms that can digitally stitch one person's face onto another's or even mimic their voice. They can be a fun novelty for memes, but they are fast becoming a concern for information integrity. Imagine having someone send you a video of yourself saying something rude or doing something embarrassing, only for that event to have never actually taken place. Then scale that effect up to world politics and consider the potential implications therein.

“Deepfake videos are everywhere now. You have probably already seen them; videos of celebrities doing or saying things they never actually did,” says Ilke Demir, a senior staff research scientist at Intel Labs. South Park creators Trey Parker and Matt Stone notably took this idea to its extreme with their show Sassy Justice.

Deepfakes are not just a concern for spreading misinformation about something that did not actually happen; a guilty party caught in the act could just as easily claim the footage is a deepfake to sow doubt about their guilt. Either way you slice it, it is important to have objective systems in place that can sort fact from fiction. Adobe has been proactive about developing its own detection methods to sniff out Photoshop jobs and deepfakes, but it relies on detecting manipulations directly within the frame.

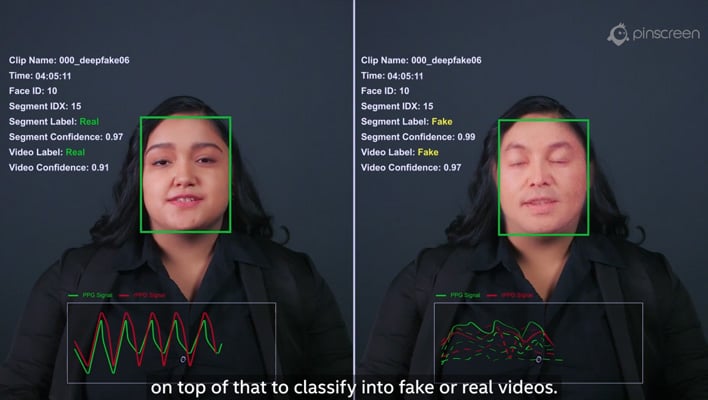

Intel's approach is different. Rather than scanning for "signs of inauthenticity," its approach is more akin to a liveness detector. As blood flows through our bodies, our veins shift color in very subtle ways. The human eye is not particularly adept at spotting these changes, but they do show up on video. Measuring these changes optically is called photoplethysmography, or PPG.

FakeCatcher watches for these subtle PPG signals in video footage and converts them into spatiotemporal maps. That is, it watches for where the changes occur and how they change over time. Intel's AI engine is then able to compare these maps to its training set and instantly flag if something is amiss.
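To make the idea of a spatiotemporal PPG map more concrete, here is a minimal Python sketch. It is not Intel's implementation, which the article does not describe in detail. It assumes the face has already been cropped into a stack of RGB frames, uses the average green-channel brightness of each grid cell as a crude stand-in for the PPG signal, and estimates a pulse rate from the strongest frequency in a plausible heart-rate band. The function names `ppg_map` and `dominant_frequency`, the 4x4 grid, and the 0.7 to 4.0 Hz band are all illustrative assumptions.

```python
import numpy as np


def ppg_map(frames, grid=(4, 4)):
    """Build a crude spatiotemporal map from face crops.

    frames: array of shape (T, H, W, 3), RGB face crops over time (assumed input).
    Returns an array of shape (rows * cols, T): one mean-removed
    green-channel trace per grid cell ("where" by "when").
    """
    frames = np.asarray(frames, dtype=np.float64)
    t, h, w, _ = frames.shape
    rows, cols = grid
    # Green channel only; trim edges so the image divides evenly into cells.
    green = frames[..., 1][:, : h - h % rows, : w - w % cols]
    cells = green.reshape(
        t, rows, green.shape[1] // rows, cols, green.shape[2] // cols
    )
    # Average the pixels inside each cell, giving shape (T, rows, cols).
    traces = cells.mean(axis=(2, 4)).reshape(t, rows * cols)
    # Remove each cell's mean so only the variation over time remains.
    traces = traces - traces.mean(axis=0)
    return traces.T


def dominant_frequency(trace, fps, low_hz=0.7, high_hz=4.0):
    """Return the strongest frequency (Hz) of a 1-D trace within a band.

    The default band is an assumed range of plausible human pulse rates.
    """
    spectrum = np.abs(np.fft.rfft(trace))
    freqs = np.fft.rfftfreq(len(trace), d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    return freqs[band][np.argmax(spectrum[band])]
```

In this sketch, a real face would be expected to show a consistent pulse-like frequency across cells, while a synthesized face might not. A production detector would feed maps like these to a trained classifier rather than rely on a single frequency estimate.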

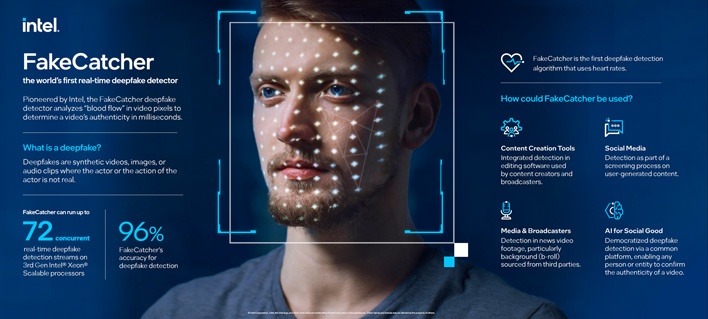

The Intel Labs team runs FakeCatcher on Intel's 3rd Gen Xeon Scalable processors, which can handle up to 72 concurrent detection streams, and exposes it through a web interface. The AI models for face and landmark detection are built on OpenVINO, while the computer vision portions leverage Intel Integrated Performance Primitives and OpenCV, with inferencing leaning on AVX-512 and Intel Deep Learning Boost.

Intel claims a 96% accuracy rate for FakeCatcher. That level of accuracy in real time is a game changer, if it holds up. Existing solutions, including Adobe's, generally run asynchronously: suspect footage has to be sent to a server for processing, which may take hours to render its judgement.

Demir will cover more about the technology driving FakeCatcher and its implications for Intel and the industry at large in a Twitter Spaces event this Wednesday, November 16th, at 2:30pm ET / 11:30am PT.