Why You Shouldn't Trust ChatGPT's Answers To Software Engineering Questions

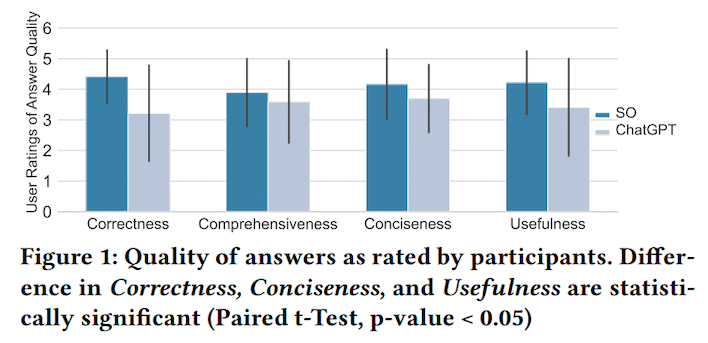

We say "appears to give" because, although it's already well known that ChatGPT will readily produce non-functional code, nobody had done a proper study of the problem until now. Researchers at Purdue University recently published a paper titled "Who Answers It Better? An In-Depth Analysis of ChatGPT and Stack Overflow Answers to Software Engineering Questions." It's the result of a significant research project involving manual analysis of responses from both sources as well as semi-structured interviews with users.

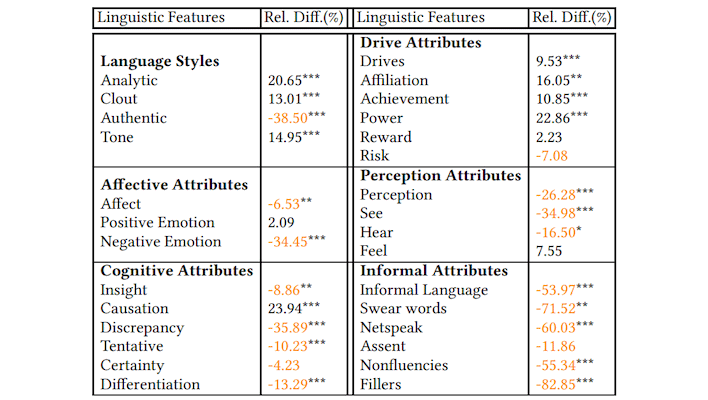

This well-articulated quality, along with the comprehensive nature of the responses, could be why interviewees failed to notice factual errors and incorrect information in ChatGPT's responses some 40% of the time. This is the truly terrifying statistic: it's no surprise that ChatGPT hallucinates data, but it's a little shocking that roughly two times out of five, humans don't notice because the response "looks" correct.

That's ultimately the problem with all current generative AI: the things generated by these black-box machines are, more often than not, incorrect by any human reckoning. It's just that they look correct at a glance; they're "close enough" until you inspect them thoroughly. You can see it with images, with audio, and with text. While the tech is still improving, for now you'd better stick to other humans when trying to learn a new skill.