Yesterday, Apple previewed new child safety features to protect children from predators and limit the spread of Child Sexual Abuse Material (CSAM). While these features, which were developed in conjunction with child safety experts, sound great, they also open the door to future privacy issues.

The first major part of the

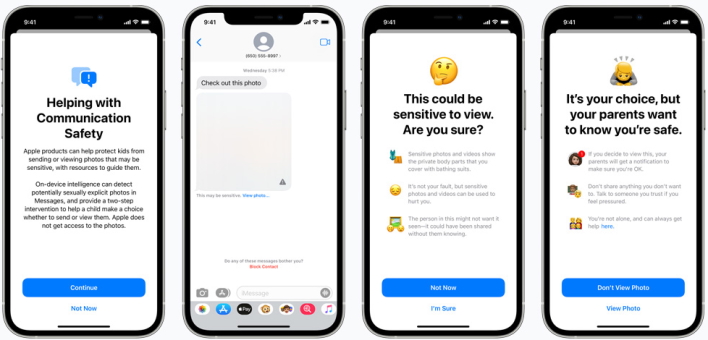

child safety updates regards communication in Messages, Apple's popular messaging app. For example, when there is receipt of sexually explicit photos, "the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo." If the child decides to view the content, their parents will then be notified as an additional precaution. Similarly, there will be warnings if a child decides to send a photo, and parents will be notified if the child moves forward after the warnings.

The scanning is reportedly all done using "on-device machine learning to analyze image attachments and determine if a photo is sexually explicit." According to Apple, this means the company never gets access to the messages themselves.

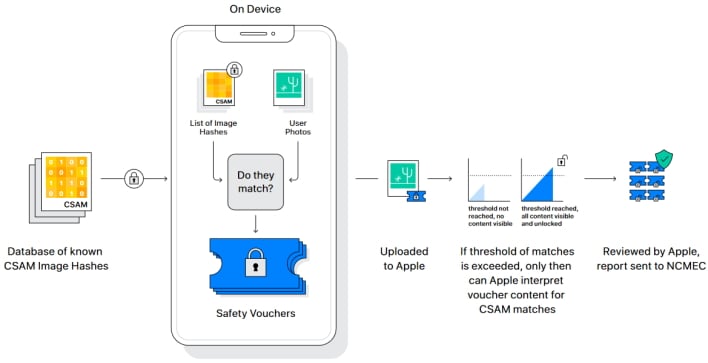

Besides the changes to Messages, Apple will also begin scanning iCloud uploads using "new technology in iOS and iPadOS [that] will allow Apple to detect known CSAM images." The tech reportedly uses on-device matching, in which the file hash, or unique file fingerprint, is compared against a secure list of known CSAM image hashes. This check runs after the user starts the upload and before the file reaches iCloud. If the comparison finds a match, Apple will manually review the generated report, which is uploaded to iCloud along with the image. If the alert is accurate, the company will disable the user's account and report the incident to the National Center for Missing and Exploited Children.
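The matching step described above can be illustrated with a minimal Python sketch. It is not Apple's implementation: SHA-256 stands in for whatever fingerprinting function the real system uses, and the names `KNOWN_HASHES`, `fingerprint`, `check_before_upload`, and `prepare_upload` are hypothetical, chosen only for illustration.

```python
import hashlib

# Hypothetical stand-in for the secure list of known image hashes.
# A real list would be supplied to the device, not built from sample bytes.
KNOWN_HASHES = frozenset({hashlib.sha256(b"known-flagged-bytes").hexdigest()})


def fingerprint(data: bytes) -> str:
    """Return a fingerprint of the file contents (assumption: SHA-256)."""
    return hashlib.sha256(data).hexdigest()


def check_before_upload(data: bytes, known_hashes=KNOWN_HASHES) -> dict:
    """Compare the file's fingerprint to the known list, on the device."""
    fp = fingerprint(data)
    return {"fingerprint": fp, "matched": fp in known_hashes}


def prepare_upload(data: bytes, known_hashes=KNOWN_HASHES) -> dict:
    """Bundle the file with its report; a match is flagged for manual review."""
    report = check_before_upload(data, known_hashes)
    return {"payload": data, "report": report, "needs_review": report["matched"]}


if __name__ == "__main__":
    for sample in (b"holiday photo", b"known-flagged-bytes"):
        bundle = prepare_upload(sample)
        print(bundle["report"]["fingerprint"][:12], bundle["needs_review"])
```

An exact hash such as SHA-256 only matches byte-identical files, so a production system would need a fingerprint that survives resizing or re-encoding. The sketch only shows where the check sits in the upload path: after the user starts the upload, before the file reaches the server, with the report travelling alongside the file.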

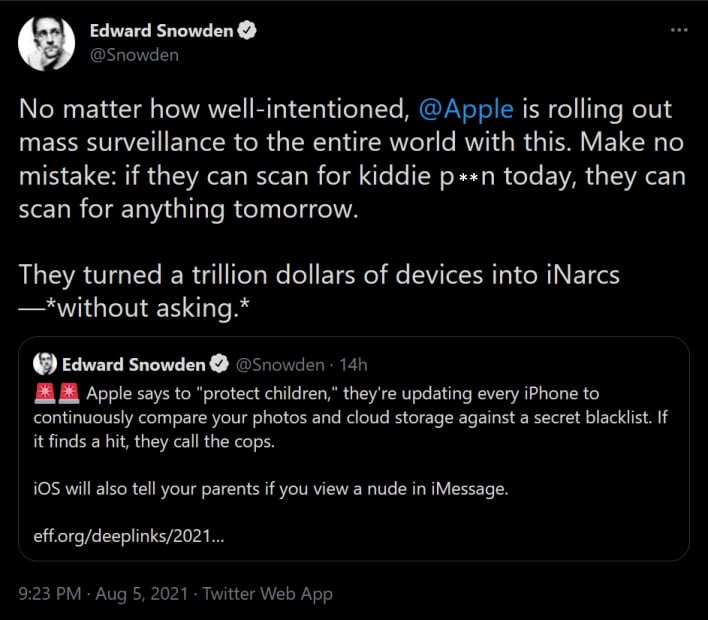

While protecting children sounds great in principle, this approach undermines the encryption and end-user privacy for which Apple has been lauded. Once the system is fully implemented, all Apple would need to do is add some flags to the machine learning algorithms to scan for anything else without people knowing. Software VP Sebastien Marineau-Mes acknowledged internal concerns in an Apple memo leaked to 9to5Mac, writing, "We know some people have misunderstandings, and more than a few are worried about the implications." Infamous whistleblower Edward Snowden put things more bluntly, stating that effectively, "Apple is rolling out mass surveillance to the entire world with this."

Apple's latest move has even drawn the ire of the Electronic Frontier Foundation (EFF), which likens it to a backdoor into your private life. "All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children’s, but anyone’s accounts," writes the EFF. "That’s not a slippery slope; that’s a fully built system just waiting for external pressure to make the slightest change."

Though many technical documents outline how the new systems work and how secure they are, there is still cause for concern. A small backdoor into a system is still a backdoor, even if it is meant to protect thousands, if not millions, of children.