Apple Backpedals On Controversial Child Safety Tech After Harsh Backlash Over Privacy Concerns

"Last month, we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material," said Apple in a statement. "Based on feedback from customers, advocacy groups, researchers, and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features."

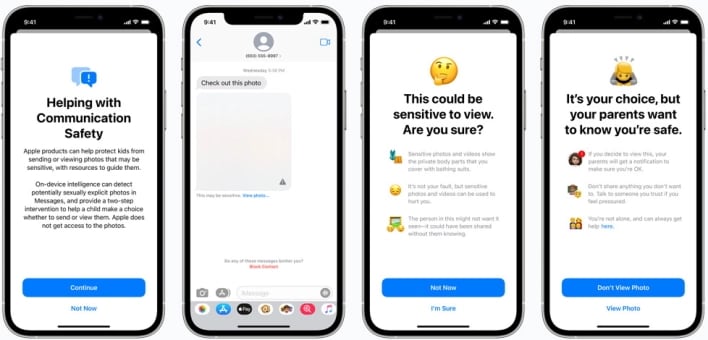

The company planned a multi-pronged approach, including using machine learning to identify sexually explicit images in iMessages. However, the most controversial aspect of Apple's initiative involved iCloud Photos: the system would compute a unique "fingerprint" for each photo and check it against the fingerprints of flagged images in a database of known CSAM. Once an account reached a threshold number of matches (later specified as 30 or more), it would be flagged for manual review by Apple moderators.
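To make the threshold idea concrete, here is a toy sketch in Python. It is not Apple's implementation: Apple's announced design relied on a perceptual hashing scheme and cryptographic protections that are not modeled here, and this example simply substitutes an exact-match SHA-256 hash as the "fingerprint." The names `fingerprint`, `count_matches`, `needs_manual_review`, and `REVIEW_THRESHOLD` are hypothetical and chosen only for illustration.

```python
import hashlib

# Threshold cited in the article; treated here as an assumption.
REVIEW_THRESHOLD = 30


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint: an exact-match SHA-256 digest.

    A real system would use a perceptual hash that tolerates resizing
    or re-encoding; SHA-256 only matches byte-identical files.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(uploads, flagged_fingerprints) -> int:
    """Count how many uploaded images have a fingerprint in the flagged set."""
    return sum(1 for image in uploads if fingerprint(image) in flagged_fingerprints)


def needs_manual_review(uploads, flagged_fingerprints, threshold=REVIEW_THRESHOLD) -> bool:
    """Flag the account only once the match count reaches the threshold."""
    return count_matches(uploads, flagged_fingerprints) >= threshold
```

In this sketch, an account with 29 matching uploads would not be flagged, while one with 30 would. The point of a threshold is that a handful of matches, which could include false positives, does not trigger review on its own.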

Many saw this as a blatant invasion of privacy, and Apple SVP Craig Federighi attempted to clear the air in early August. "We wish that this had come out a little more clearly for everyone because we feel very positive and strongly about what we're doing, and we can see that it's been widely misunderstood," said Federighi at the time.

"I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion. It's really clear a lot of messages got jumbled up pretty badly. I do believe the soundbite that got out early was, 'oh my god, Apple is scanning my phone for images.' This is not what is happening."

However, as Apple's current backpedaling shows, the damage to its plans to combat CSAM was already done. The company faced criticism from privacy-focused figures in tech such as Edward Snowden and drew the ire of the Electronic Frontier Foundation (EFF).

"All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children's, but anyone's accounts," wrote the EFF shortly after Apple announced its new CSAM protections. "That's not a slippery slope; that's a fully built system just waiting for external pressure to make the slightest change."

Apple has not said when its revised CSAM protections will launch; the initiative was originally slated to arrive later this year.