Apple SVP Federighi Admits The Company Goofed With Poor Messaging Of Child Safety Plan

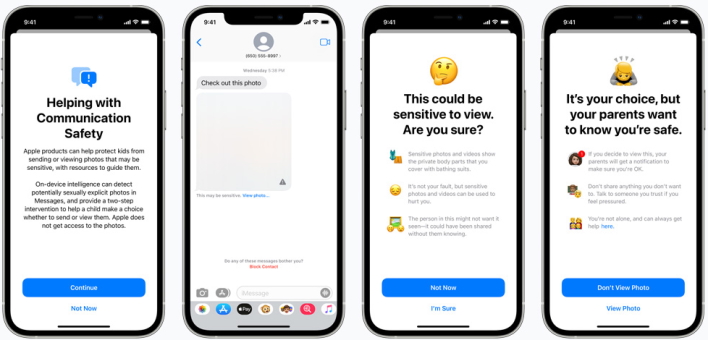

One feature of Apple's child safety plan covers images that children send or receive through iMessage. Apple said it uses on-device machine learning to determine whether images sent or received on a child's device are sexually explicit. When the feature is enabled, parents would receive a notification if such images trigger the Apple filter. For children under the age of 17, explicit images that are received would also be blurred.

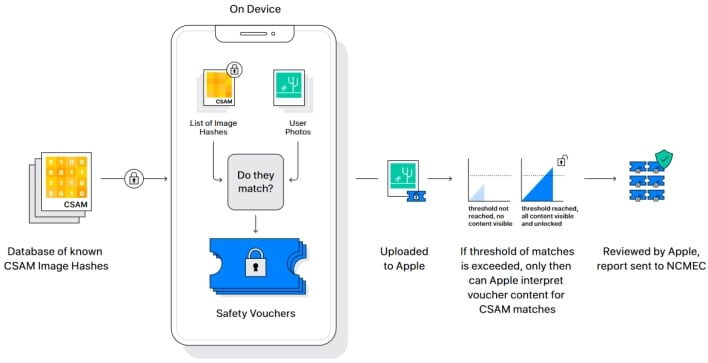

The other, more problematic feature for Apple is CSAM detection in iCloud Photos. If the digital fingerprint of known CSAM is detected in an image uploaded to an iCloud Photos account, the image is flagged and goes through a manual review process. If a certain threshold is reached for CSAM content stored in iCloud Photos, Apple will flag the account and report its findings to the National Center for Missing and Exploited Children (NCMEC).

Given the privacy concerns, Apple SVP Craig Federighi took part in an interview with the Wall Street Journal to clarify the company's privacy stance and to admit that the company's messaging to the public was flawed.

"We wish that this had come out a little more clearly for everyone because we feel very positive and strongly about what we're doing, and we can see that it's been widely misunderstood," said Federighi to the WSJ's Joanna Stern. "I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion. It's really clear a lot of messages got jumbled up pretty badly. I do believe the soundbite that got out early was, 'oh my god, Apple is scanning my phone for images.' This is not what is happening."

Federighi also clarified that Apple is not scanning photos stored on your actual iPhone or iPad. Instead, its photo scanning provisions are limited to iCloud Photos, and then only if its customers use that feature.

"This is only being applied as part of a process of storing something in the cloud," Federighi added. "This isn't some processing running over the images you store in Messages, or Telegram or what you're browsing over the web. This literally is part of the pipeline for storing images in iCloud."

He also clarified the threshold for triggering an account review and addressed concerns from parents that innocent images of their own [naked] children on their phones could be flagged:

"If and only if you meet a threshold of something on the order of 30 known child pornographic images matching, only then does Apple know anything about your account and know anything about those images, and at that point, only knows about those images, not about any of your other images. This isn't doing some analysis for did you have a picture of your child in the bathtub? Or, for that matter, did you have a picture of some pornography of any other sort? This is literally only matching on the exact fingerprints of specific known child pornographic images."
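To make the threshold idea concrete, here is a rough sketch of how fingerprint matching with a review threshold could work in principle. It is not Apple's implementation, which this article does not describe. The SHA-256 hash is only a placeholder for whatever fingerprinting the real system uses, and the names `fingerprint`, `review_set`, and `THRESHOLD` are hypothetical.

```python
import hashlib

# Assumption: Federighi said "on the order of 30"; the exact value is not given.
THRESHOLD = 30


def fingerprint(image_bytes: bytes) -> str:
    """Placeholder fingerprint: SHA-256 of the raw bytes (an assumption,
    not the fingerprinting method used by the real system)."""
    return hashlib.sha256(image_bytes).hexdigest()


def review_set(uploads, known_fingerprints, threshold=THRESHOLD):
    """Return the matching fingerprints only if the threshold is met.

    Below the threshold this returns an empty list, mirroring the claim
    that nothing is learned about the account until enough matches exist.
    Images that match nothing in the known set are never included.
    """
    matches = sorted({fingerprint(u) for u in uploads} & set(known_fingerprints))
    if len(matches) < threshold:
        return []
    return matches
```

In this sketch, a photo that is not already in the known set (the bathtub picture from Federighi's example) can never count toward the threshold, because the check is set membership on fingerprints and not an analysis of what the image shows.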

It's not often that Apple puts such a high-profile executive out there to address blowback from a new and controversial feature. Still, this CSAM saga has blown up in the tech sphere and among everyday Americans concerned about privacy and potential government intervention. At this point, Apple has no plans to drop its efforts in the face of public backlash, so perhaps it would be wise to read up on the company's FAQ to familiarize yourself with the ins and outs of the protections.