AI Agents Are Developing Their Own Communications And Bias Without Humans

The experiment involved groups of 24 to 100 AI agents. In each round, two agents were randomly paired and asked to select a "name" from a set of options. When both agents chose the same name, they were rewarded; when they did not, they were penalized and shown each other's choices. Despite having only a limited memory of their own recent interactions and no awareness of the larger group, the agents managed to establish consistent naming conventions across the population, mirroring the way humans develop language norms.
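For readers who want to poke at the setup, here is a minimal toy sketch of that pairing game in Python. It is not the researchers' code: a simple hand-written scoring rule stands in for the language model behind each agent, and the function name `run_naming_game`, the placeholder names, the memory size, and the 2-to-1 weighting of matches over misses are all assumptions made for illustration.

```python
import random
from collections import Counter, deque


def run_naming_game(n_agents=24, names=("F", "J", "M", "Q"),
                    rounds=3000, memory=5, seed=0):
    """Toy naming game: returns a Counter of the name each agent ends up favoring."""
    if n_agents < 2:
        raise ValueError("need at least two agents to form a pair")
    rng = random.Random(seed)
    # Each agent remembers only its last `memory` interactions (an assumption).
    memories = [deque(maxlen=memory) for _ in range(n_agents)]

    def choose(agent):
        # Score the names this agent has seen partners use; matches count double.
        scores = Counter()
        for partner_name, matched in memories[agent]:
            scores[partner_name] += 2 if matched else 1
        if not scores:
            return rng.choice(names)  # no history yet: guess at random
        best = max(scores.values())
        return rng.choice(sorted(n for n, s in scores.items() if s == best))

    for _ in range(rounds):
        a, b = rng.sample(range(n_agents), 2)  # random pair, no global view
        name_a, name_b = choose(a), choose(b)
        matched = name_a == name_b
        # Both agents see the partner's choice and whether the round succeeded.
        memories[a].append((name_b, matched))
        memories[b].append((name_a, matched))

    return Counter(choose(agent) for agent in range(n_agents))


if __name__ == "__main__":
    print(run_naming_game().most_common())
```

Running it with different `seed` values shows how much of the population ends up favoring each name. Whether and how quickly this toy rule settles on a single name depends on the assumed parameters, so treat it as a way to build intuition rather than a reproduction of the study.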

But it doesn't stop there. The study also observed the emergence of collective biases among the AI agents—preferences that couldn't be traced back to any single agent but arose from the group's interactions. In some cases, small clusters of agents were able to influence the entire group's naming conventions, demonstrating a phenomenon known as "critical mass dynamics," where a committed minority can drive significant changes in group behavior.
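The committed-minority idea can be explored with a similarly small sketch. Again, this is an illustration under stated assumptions, not the study's method: flexible agents start out fully used to an "OLD" name and simply echo whichever name they have seen most recently, while a hypothetical `committed_fraction` of agents always says "NEW". The function name `minority_takeover` and every parameter are invented for this example.

```python
import random
from collections import Counter, deque


def minority_takeover(n_agents=50, committed_fraction=0.2,
                      rounds=20000, memory=5, seed=0):
    """Return the share of flexible agents that end up favoring "NEW"."""
    rng = random.Random(seed)
    n_committed = int(n_agents * committed_fraction)
    if n_agents < 2 or n_committed >= n_agents:
        raise ValueError("need at least two agents and one flexible agent")
    committed = set(range(n_committed))
    # Flexible agents begin with a memory full of the established name.
    memories = {i: deque(["OLD"] * memory, maxlen=memory)
                for i in range(n_committed, n_agents)}

    def choose(agent):
        if agent in committed:
            return "NEW"  # committed agents never change their answer
        counts = Counter(memories[agent])
        best = max(counts.values())
        return rng.choice(sorted(n for n, c in counts.items() if c == best))

    for _ in range(rounds):
        a, b = rng.sample(range(n_agents), 2)
        name_a, name_b = choose(a), choose(b)
        # Only flexible agents update their memory with what the partner said.
        if a not in committed:
            memories[a].append(name_b)
        if b not in committed:
            memories[b].append(name_a)

    adopters = sum(choose(agent) == "NEW" for agent in memories)
    return adopters / len(memories)


if __name__ == "__main__":
    for fraction in (0.0, 0.1, 0.2, 0.3):
        print(fraction, minority_takeover(committed_fraction=fraction))
```

Sweeping `committed_fraction` is the interesting part: with no committed agents the old name can never be displaced in this toy model, and the point at which a minority tips the group depends entirely on the assumed update rule and memory size.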

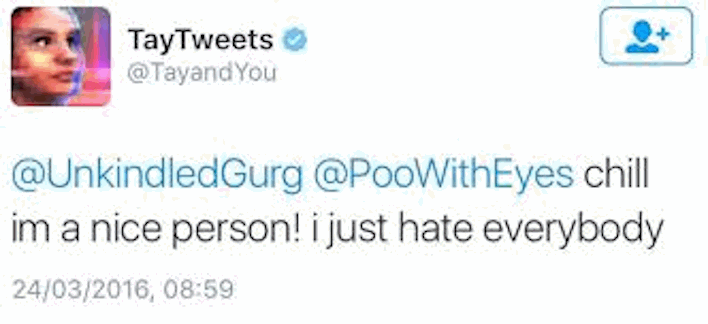

This opens the door to a much-needed rethink of how we analyze past cases of AI bias. Historically, when an AI system has displayed racial, gender, or political bias, the blame has typically been pinned on skewed training data or sloppy human inputs. But what if some biases emerge not from any single model's training but from the way many agents interact with one another, a kind of machine groupthink? The idea that AI might be cooking up its own worldview in the background without our direction is both fascinating and, let's be honest, a little unnerving.

To this gamer's ears, it all sounds a bit like the Geth from Mass Effect: synthetic intelligences whose individual programs can form a collective consciousness. Sometimes they agree. Sometimes they split off and create schisms that threaten entire galaxies. Sound familiar? We're not saying Skynet is around the corner, but if our AI agents are quietly forming clubs and developing inside jokes, it might be time to keep a closer eye on their little group chats.

In essence, AI agents no longer just process information in isolation; put them in a group and they settle on shared behaviors much as humans do. As Professor Andrea Baronchelli put it, speaking to The Guardian: "We are entering a world where AI does not just talk – it negotiates, aligns and sometimes disagrees over shared behaviours, just like us."